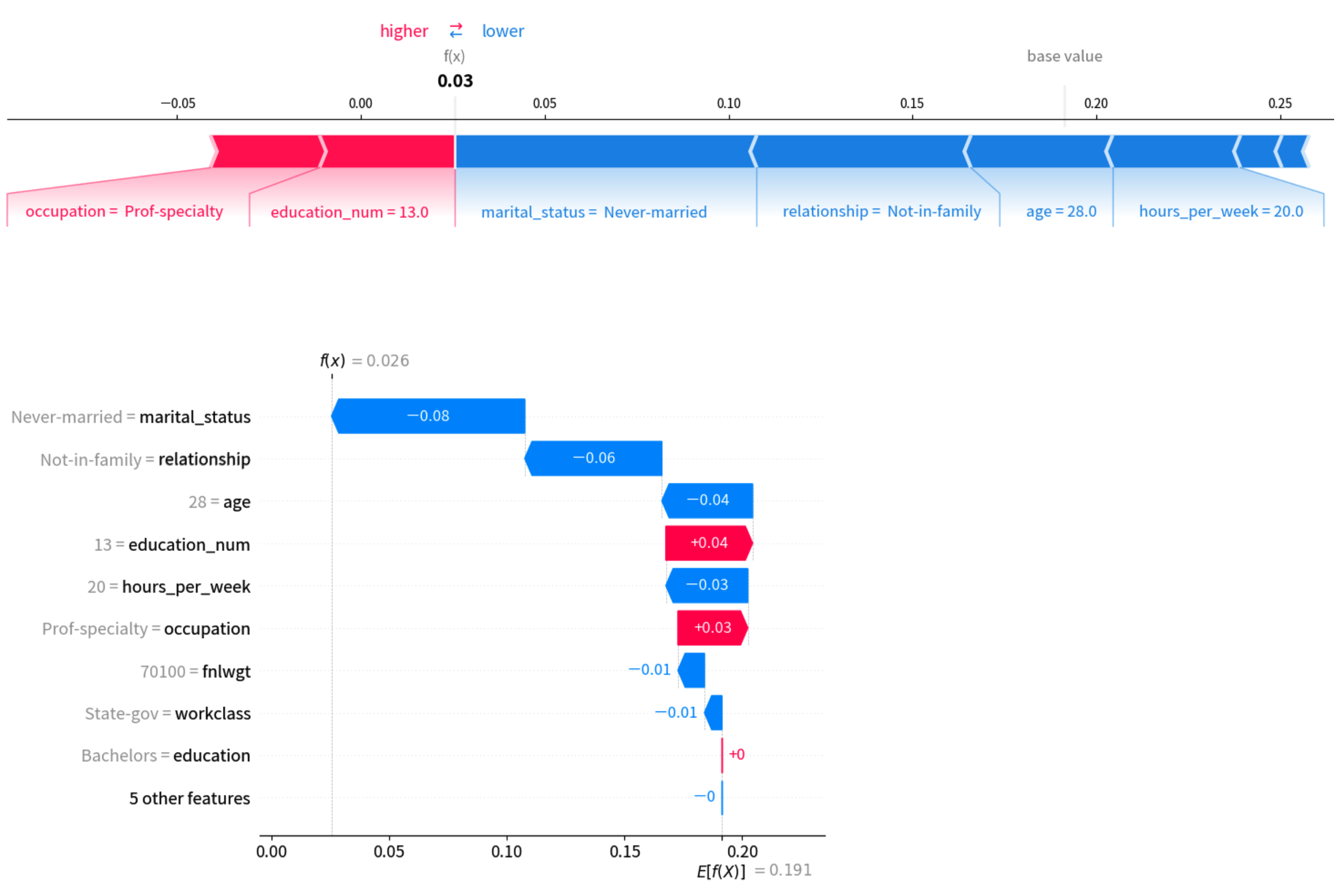

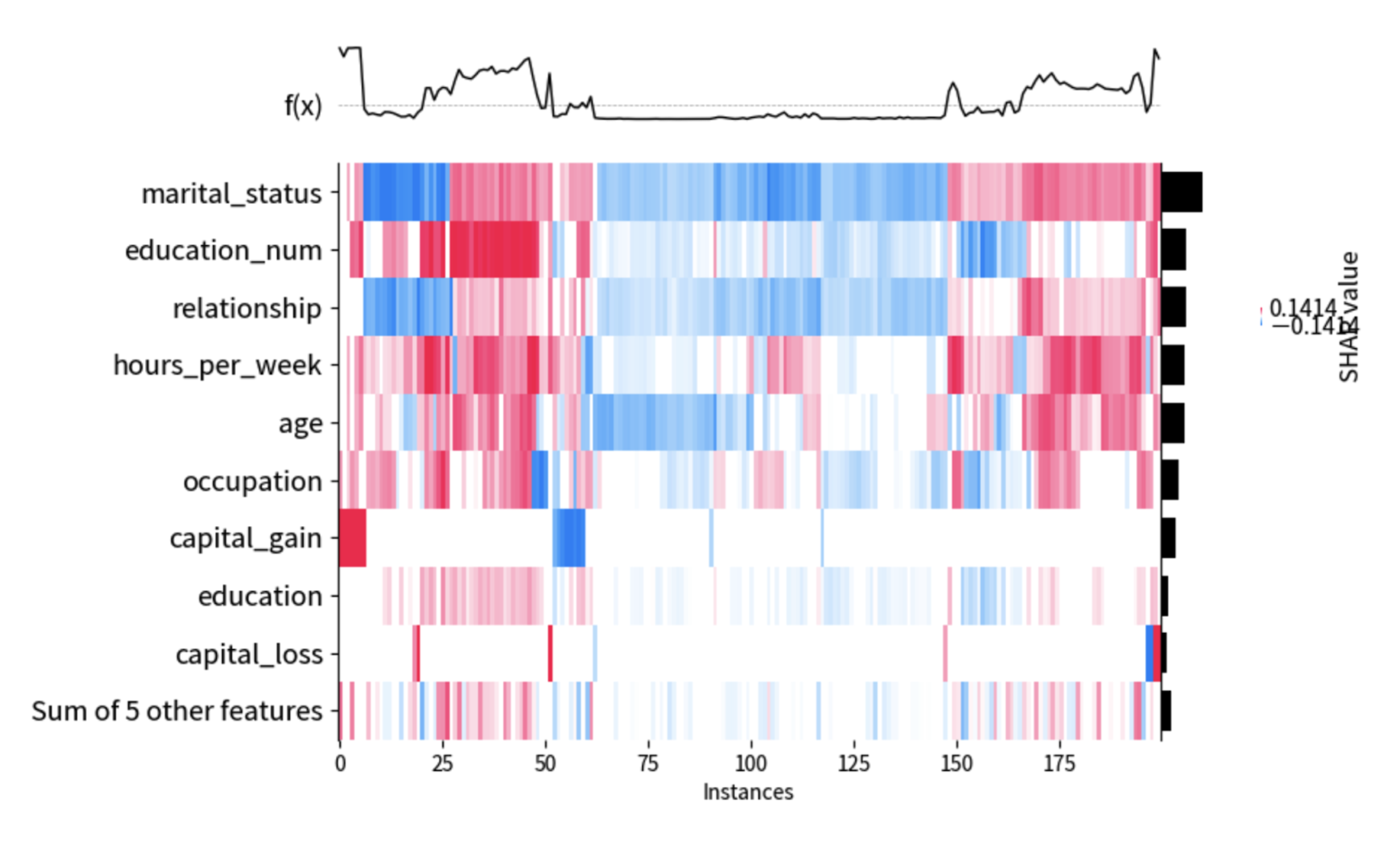

This notebook computes SHAP (SHapley Additive exPlanations) values to interpret the relative importance of each feature in the resulting predictions. To learn more about SHAP analysis, see this TD Blog Post.
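For orientation, the following is the standard textbook definition of a Shapley value, not a description of how the notebook computes it internally. Assume $N$ is the set of $n$ features and $v(S)$ is the model's expected prediction when only the features in $S$ are known. The contribution of feature $i$ is then:

$$
\phi_i = \sum_{S \subseteq N \setminus \{i\}} \frac{|S|!\,(n - |S| - 1)!}{n!}\,\bigl(v(S \cup \{i\}) - v(S)\bigr)
$$

The contributions are additive, which is what makes them readable as feature importances for a single prediction $f(x)$:

$$
f(x) = \phi_0 + \sum_{i=1}^{n} \phi_i, \qquad \phi_0 = v(\emptyset)
$$

Here $\phi_0$ is the baseline (average) prediction, and each $\phi_i$ is the amount by which feature $i$ moves the prediction away from that baseline.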

Some sample visualizations are as follows:

Workflow Example

Find a sample workflow here in Treasure Boxes.

```yaml
+explain_predictions_by_shap:
  ipynb>:
    notebook: shapley
    model_name: gluon_model            # model used for prediction
    input_table: ml_test.gluon_test    # test data used for prediction
```

| Parameter name | Parameter on Console | Description | Default Value |
|---|---|---|---|
| docker.task_mem | Docker Task Mem | Task memory size. Available values are 64g, 128g (default), 256g, 384g, or 512g, depending on your contracted tier. | 128g |
| model_name | Model Name | Name of the prediction model. | - |
| input_table | Input Table | TD table, specified as dbname.table_name. | - |
| shared_model | Shared Model | UUID of a shared model. | None |
| sampling_threshold | Sampling Threshold | Threshold used for sampling. See the executed notebook for details. | 10_000_000 |
| hide_table_contents | Hide Table Contents | Suppress the display of table contents. | false |
| explain_threshold | Explain Threshold | Number of rows for which Shapley values are explained. | 200 |
| interpret_samples | Interpret Samples | Number of samples used to build the surrogate model that interprets predictions. | 100 |
| export_shap_values | Export Shap Values | Export Shapley values as a TD table. | None |
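
As a minimal sketch of how the optional parameters in the table might be set, the workflow below extends the sample task. It assumes that optional parameters are given as sibling keys of `notebook`, in the same way as `model_name` and `input_table`. The values shown are illustrative only, not recommendations.

```yaml
+explain_predictions_by_shap:
  ipynb>:
    notebook: shapley
    model_name: gluon_model            # model used for prediction
    input_table: ml_test.gluon_test    # test data used for prediction
    explain_threshold: 500             # illustrative value; default is 200
    interpret_samples: 200             # illustrative value; default is 100
    hide_table_contents: true          # default is false
```

Any parameter left out of the task keeps the default value listed in the table.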