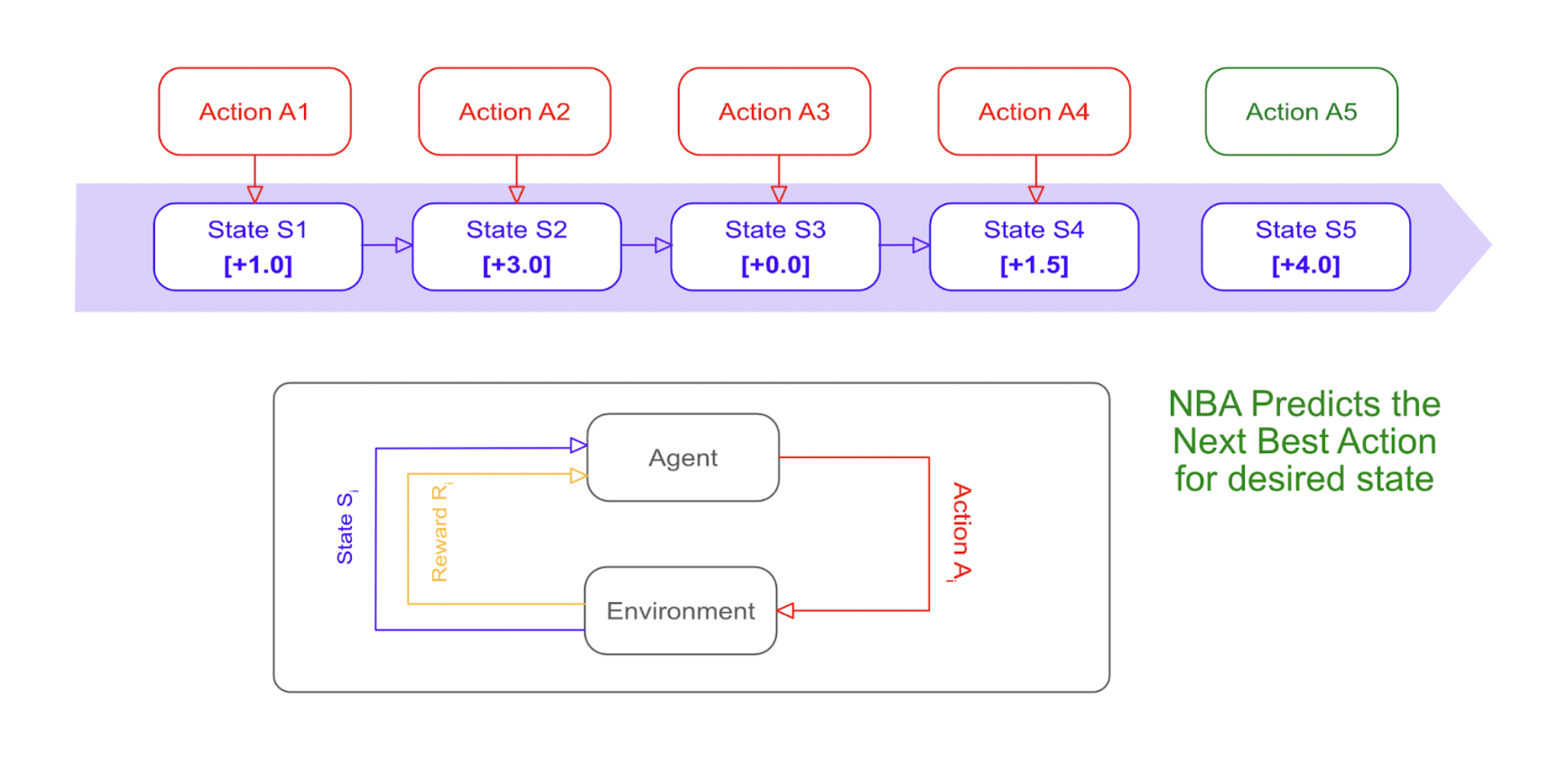

This notebook uses Q-learning to predict the Next Best Action (NBA) for each session, given the state that session is currently in.
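For reference, the standard tabular Q-learning update is shown below. We assume, without having confirmed it against the notebook source, that the `lr` parameter plays the role of the learning rate $\alpha$ and `gamma` the role of the discount factor $\gamma$. Here $s$ is the current state, $a$ the action taken, $r$ the observed reward, and $s'$ the next state.

$$
Q(s, a) \leftarrow Q(s, a) + \alpha \left[ r + \gamma \max_{a'} Q(s', a') - Q(s, a) \right]
$$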

A Q-table (state → action) is trained and optimized on the training dataset, and the notebook uses it to predict the Next Best Action for each user_id from that user's latest state. The notebook takes an input table such as the following:

| user_id | tstamp | state | action | reward (optional) |
|---|---|---|---|---|
| 2a644f3f-ad33-48b3-b837-96c1e194dc17 | 2021-06-14 08:58:59 | /custom-demo/ | client_domain_organic_visit | 0.0 |
| 1740eb3c-03de-4856-891e-8f8bcffbfd6b | 2021-06-14 08:58:25 | /customers/lion/ | client_domain_organic_visit | 0.0 |
| bd378622-0905-44d4-a950-53adcdfc2611 | 2021-06-14 08:25:57 | /learn/cdp-vs-dmp/ | client_domain_organic_visit | 0.0 |
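Before a Q-table can be trained, input rows like these have to be turned into transitions. The sketch below is one way to do that, under an assumption we have not confirmed against the notebook: events are grouped by user and ordered by timestamp, and each event's "next state" is the state of that user's following event. The helper name `build_transitions` is hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# One input row, mirroring the example table: (user_id, tstamp, state, action, reward).
Row = Tuple[str, str, str, str, float]
# One transition: (state, action, reward, next_state).
Transition = Tuple[str, str, float, str]


def build_transitions(rows: List[Row]) -> Dict[str, List[Transition]]:
    """Hypothetical helper: group rows per user, sort by time, pair consecutive events.

    Assumption: the next state of an event is the state of the user's next event,
    so a user's final event produces no transition of its own.
    """
    by_user: Dict[str, List[Row]] = defaultdict(list)
    for row in rows:
        by_user[row[0]].append(row)

    transitions: Dict[str, List[Transition]] = {}
    for user, events in by_user.items():
        # "YYYY-MM-DD HH:MM:SS" strings sort chronologically as plain text.
        events.sort(key=lambda r: r[1])
        transitions[user] = [
            (cur[2], cur[3], cur[4], nxt[2])
            for cur, nxt in zip(events, events[1:])
        ]
    return transitions
```

A user with a single event gets an empty transition list. That event's state still matters later, because it is the latest state used for prediction.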

The notebook then outputs the next action for each user based on that user's last state. An example output table is as follows:

| user_id | ... | next_action |
|---|---|---|
| 2a644f3f-ad33-48b3-b837-96c1e194dc17 | ... | cpc |
| 1740eb3c-03de-4856-891e-8f8bcffbfd6b | ... | social |
| bd378622-0905-44d4-a950-53adcdfc2611 | ... | display |
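Conceptually, a `next_action` column like this can be read off a trained Q-table by taking the highest-valued action for each user's most recent state. The following is a minimal sketch of that idea, not the notebook's actual code. It assumes the Q-table is a nested dict `q_table[state][action]`. The function name `next_best_actions` is hypothetical, and so is returning `None` for states never seen in training.

```python
from typing import Dict, List, Optional, Tuple

# Q-table as a nested dict: q_table[state][action] -> estimated value.
QTable = Dict[str, Dict[str, float]]


def next_best_actions(
    rows: List[Tuple[str, str, str]],  # (user_id, tstamp, state)
    q_table: QTable,
) -> Dict[str, Optional[str]]:
    """Hypothetical helper: pick argmax_a Q[latest_state, a] for every user."""
    latest: Dict[str, Tuple[str, str]] = {}
    for user, tstamp, state in rows:
        # Keep only the most recent event per user.
        if user not in latest or tstamp > latest[user][0]:
            latest[user] = (tstamp, state)

    result: Dict[str, Optional[str]] = {}
    for user, (_, state) in latest.items():
        actions = q_table.get(state)
        # States never seen in training have no Q-values, so return None for them.
        result[user] = max(actions, key=actions.get) if actions else None
    return result
```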

Optionally, the notebook runs a holdout test on the test dataset, calculating Spend and Revenue for both the actual actions (randomly sampled) and the recommended actions. If you provide the average Cost Per Action (CPA) and the total budget, the results also show CPA, Increase in Conversions, and Revenue/Return On Investment (ROI) gain.
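The arithmetic behind these metrics can be sketched as follows. The exact formulas the notebook uses are not stated here, so the code uses common definitions as assumptions. Spend is the sum of per-action costs, with `default_action_cost` applied to any action missing from `action_cost`. The whole budget is spent at a given CPA, so conversions = budget / CPA. Revenue = conversions × `value_per_cv`, and ROI = (revenue − budget) / budget. All function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Iterable


def total_spend(actions: Iterable[str], action_cost: Dict[str, float],
                default_action_cost: float = 1.0) -> float:
    """Sum per-action costs, falling back to the default cost (assumed semantics)."""
    return sum(action_cost.get(a, default_action_cost) for a in actions)


@dataclass
class PolicyResult:
    conversions: float
    revenue: float
    roi: float


def evaluate_policy(cpa: float, budget: float, value_per_cv: float) -> PolicyResult:
    """Assumed formulas: spend the full budget at the given CPA."""
    conversions = budget / cpa
    revenue = conversions * value_per_cv
    roi = (revenue - budget) / budget
    return PolicyResult(conversions, revenue, roi)


def compare(actual_cpa: float, recommended_cpa: float,
            budget: float, value_per_cv: float) -> Dict[str, float]:
    """Gains of the recommended policy over the actual (randomly sampled) one."""
    actual = evaluate_policy(actual_cpa, budget, value_per_cv)
    recommended = evaluate_policy(recommended_cpa, budget, value_per_cv)
    return {
        "conversion_increase": recommended.conversions - actual.conversions,
        "revenue_gain": recommended.revenue - actual.revenue,
        "roi_gain": recommended.roi - actual.roi,
    }
```

For example, with `budget: 10000` and `value_per_cv: 100` (the values in the sample workflow), suppose an actual CPA of 50 and a hypothetical recommended CPA of 40. The recommended policy would then yield 50 more conversions and 5000 more in revenue.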

Learn more about NBA through this TD Blog Post.

Find a sample workflow here in Treasure Boxes.

```yaml
+run_nba:
  ipynb>:
    notebook: NBA
    train_table: ml_datasets.nba_train
    test_table: ml_datasets.nba_test
    budget: 10000
    value_per_cv: 100
```

| Parameter name | Parameter on Console | Description | Default Value |
|---|---|---|---|
| docker.task_mem | Docker Task Mem | Task memory size. Available values are 64g, 128g (default), 256g, 384g, or 512g, depending on your contracted tier | 128g |
| train_table | Train Table | TD table used for training | None |
| test_table | Test Table | TD table used for testing and evaluation | None |
| user_column | User Column | User column name | user_id |
| tstamp_column | Tstamp Column | Timestamp column name | tstamp |
| state_column | State Column | State column name | state |
| action_column | Action Column | Action column name | action |
| reward_column | Reward Column | Reward column name | reward |
| budget | Budget | Total budget | None |
| value_per_cv | Value Per Cv | Average value per conversion | None |
| neg_reward | Neg Reward | Negative reward | -10.0 |
| steps | Steps | Total number of steps used in Q-learning | 100000 |
| gamma | Gamma | Discount factor used in Q-learning | 0.6 |
| lr | Lr | Learning rate used in Q-learning | 0.05 |
| ignore_actions | Ignore Actions | Actions to ignore | None |
| currency | Currency | Currency symbol | $ |
| default_action_cost | Default Action Cost | Default action cost | 1.0 |
| action_cost | Action Cost | User-defined action costs | {} |
| export_q_table | Export Q Table | TD table to which the Q-table is exported | None |
| export_state_action | Export State Action | TD table to which the recommended action for each state is exported | None |
| export_channel_ratio | Export Channel Ratio | TD table to which the comparison between actual and recommended actions is exported | None |
| export_predictions | Export Predictions | TD table to which predictions on the test dataset are exported | None |
| export_model_performance | Export Model Performance | TD table to which model performance is exported | None |
| audience_name | Audience Name | Name of the Audience parent (master) segment into which an attribute table is merged | |
| join_key | Join Key | Join key column name in the master segment, used for Audience integration. If not configured, the user_column value is used. | None |
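To show how `steps`, `gamma`, `lr`, and `ignore_actions` might interact, here is a minimal offline tabular Q-learning sketch over logged `(state, action, reward, next_state)` transitions. It is not the notebook's implementation. We assume that each step samples one logged transition uniformly at random and that `ignore_actions` simply drops matching transitions. `neg_reward` is not modeled because its exact role is not described here. The function name `train_q_table` and the `seed` argument are hypothetical.

```python
import random
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Transition = Tuple[str, str, float, str]  # (state, action, reward, next_state)


def train_q_table(
    transitions: List[Transition],
    steps: int = 100_000,   # mirrors the `steps` default
    gamma: float = 0.6,     # mirrors the `gamma` default
    lr: float = 0.05,       # mirrors the `lr` default
    ignore_actions: Iterable[str] = (),
    seed: int = 0,
) -> Dict[str, Dict[str, float]]:
    """Hypothetical sketch: offline Q-learning on uniformly sampled logged transitions."""
    ignored = set(ignore_actions)
    data = [t for t in transitions if t[1] not in ignored]
    if not data:
        return {}

    q: Dict[str, Dict[str, float]] = defaultdict(lambda: defaultdict(float))
    rng = random.Random(seed)
    for _ in range(steps):
        state, action, reward, next_state = rng.choice(data)
        # .get() avoids creating empty rows; unseen next states contribute 0.
        best_next = max(q.get(next_state, {}).values(), default=0.0)
        target = reward + gamma * best_next
        q[state][action] += lr * (target - q[state][action])
    return {s: dict(a) for s, a in q.items()}
```

As a sanity check, a single self-looping transition with reward 1 converges to 1 / (1 − gamma) = 2.5 under the default settings, which is the fixed point of the update.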