This document describes how to implement incremental prediction and provides a best practice to cope with low latency requirements.

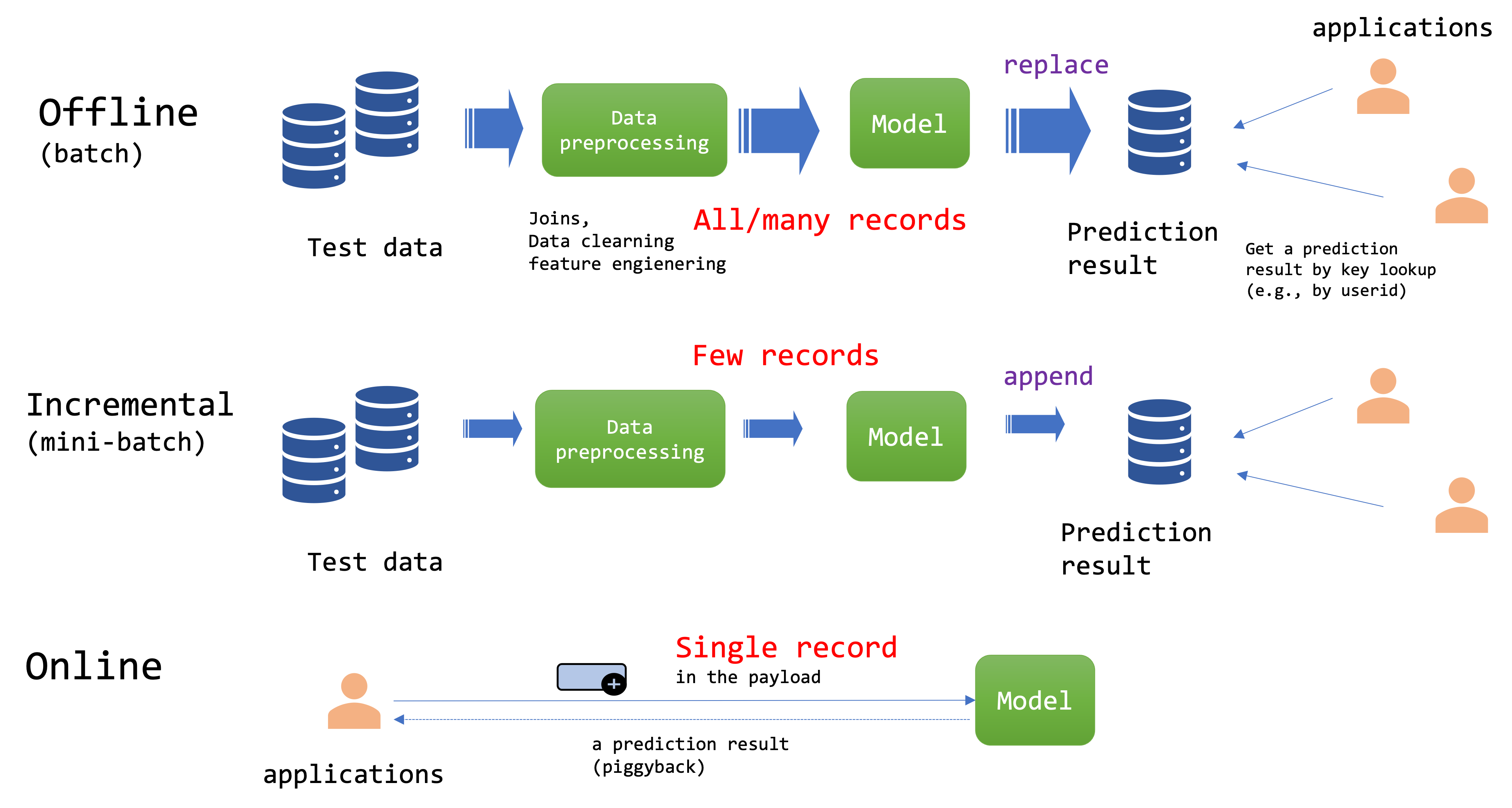

There are three main patterns for prediction:

- Online

- Offline

- Incremental

- Online prediction: Typically implemented as a REST API taking a record in JSON as the input and returning a prediction result. The time taken to complete each prediction can take from milliseconds to seconds. The response for each API call can be complex to calculate because it requires multiple joins, for example, resolving user attributes from cookie id. Ensemble models might not meet low latency requirements for REST API calls.

- Offline prediction: Typically scheduled as a daily batch job. It can achieve high throughput for predictions but it generally takes tens of minutes to several hours to complete each batch of prediction.

- Incremental (mini-batch) prediction: Similar to batch prediction with the difference that tasks can be split into smaller batches. It can take seconds to minutes to complete each mini-batch of prediction. It can not be applied for strict real-time requirements but can be applied for semi-realtime scenarios where a few minutes' latency is acceptable.

When storing prediction results in a key-value store, it is possible to achieve milliseconds for the latency.

Implement incremental mini-batch predictions

Consider the use case of assigning scores to new users, who requested a sales callback for an insurance product. However, the number of users is large and it is required to prioritize them by predicted LTV (Lifetime Value) scores.

Many new customers need to be processed during each five minutes batch and some existing customers may have additional records. So, it's expected to calculate new LTV scores for users who accessed in the last five minutes. Prediction need to be completed within five minutes before the next batch starts.

Assuming the prediction result is stored on ${predicted_table}, you can retrieve the user details sorted by the latest LTV scores, using the following SQL query:

Get latest LTV score for each user

WITH scores AS (

SELECT

userid,

td_last(time, score) as score # use the latest prediction score

FROM

${predicted_table}

WHERE

score >= 0.7 # filter by score threshold

GROUP BY

userid

)

SELECT

l.userid, l.score,

r.user_name, r.user_age, r.user_email, r.user_tel_no

FROM

scores l

JOIN user_info r ON (l.userid=r.userid)

ORDER BY

score DESC

-- LIMIT 100 The following workflow can be used for scheduling mini-batch prediction that appends LTV scores to users who accessed in the last 5 minutes.

A workflow to run mini-batch prediction

# predict every 5 min

timezone: Asia/Tokyo

schedule:

cron>: */5 * * * *

# List users who accessed in the last 5 minutes

+list_users:

td>: queries/list_users.sql

store_last_results: true

# Run mini-batch predictions

+gluon_predict:

ml_predict>:

notebook: gluon_predict

model_name: ${model_name}

input_table: ${input_database}.${input_table}

output_table: ${output_database}.${output_table}

output_mode: append # append scores to output_table

rowid_column: userid

rowid_filter: ${td.last.results.users} It is assumed that the prediction model, specified in ${model_name}, is created on a monthly basis and a verified well-performing model is used for prediction.

In "queries/list_users.sql ", the ids of users who accessed in the last 5 minutes can be listed as follows:

queries/list_users.sql

SELECT

-- comma separated list of users: '111','222'

array_join(transform(array_agg(DISTINCT userid), x -> '''' || x || ''''), ',') as users

FROM

session

WHERE

time >= TD_TIME_ADD(TD_SCHEDULED_TIME(), '-5m', 'JST') -- access in the last 5 minutes The "rowid_column " and "rowid_filter " are used to issue the following SQL query and only the matched rows are used for prediction.

SELECT * FROM ${input_database}.{input_table}

WHERE {rowid_column} in ({rowid_filter})