- Download the sample project

      cd ~/Downloads
      curl -o nasdaq_analysis.zip \
        -L https://gist.github.com/danielnorberg/f839e5f2fd0d1a27d63001f4fd19b947/raw/d2d6dd0e3d419ea5d18b1c1e7ded9ec106c775d4/nasdaq_analysis.zip

- Extract the downloaded project

      unzip nasdaq_analysis.zip

- Enter the workflow project directory

      cd nasdaq_analysis

As a reminder, here is the workflow from the introductory tutorial that you will be modifying.

    timezone: UTC

    schedule:
      daily>: 07:00:00

    _export:
      td:
        database: workflow_temp

    +task1:
      td>: queries/daily_open.sql
      create_table: daily_open

    +task2:
      td>: queries/monthly_open.sql
      create_table: monthly_open

Go to your Treasure Data jobs page so you can follow along as you run the following workflows. You can access the page here: https://console.treasuredata.com/jobs

From the nasdaq_analysis directory, run the following command:

    td wf run --all

Include --all so that the workflow runs even if you have already run it before. TD Workflows automatically remembers a successful run and skips the work on later runs; the --all option overrides this and runs every task again.

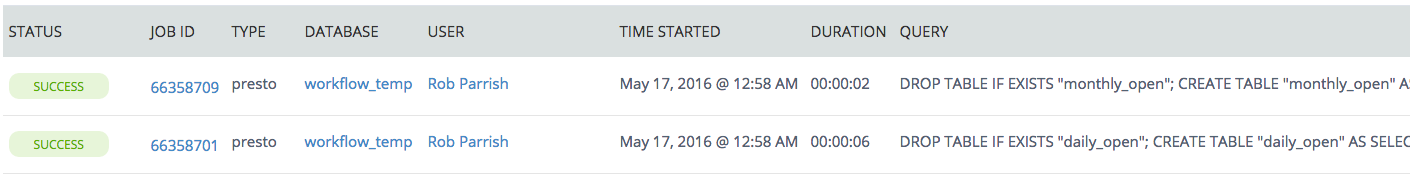

You should see the following job runs in your account.

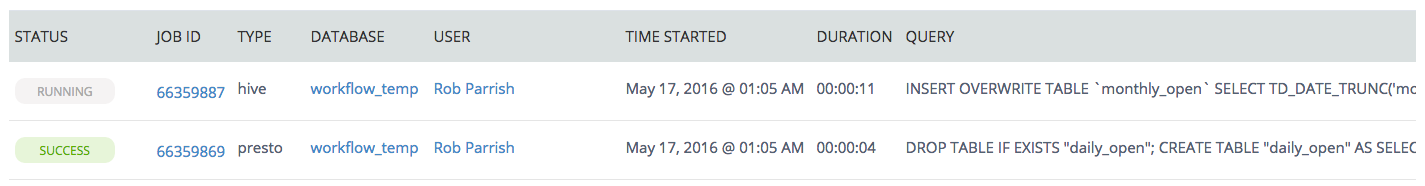

Running a query on Hive instead of the default Presto engine only takes one extra parameter. Modify your workflow file by adding engine: hive as a parameter to +task2:

    +task2:
      td>: queries/monthly_open.sql
      create_table: monthly_open
      engine: hive

Then run the workflow again:

    td wf run --all
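If you later want every td> task in this workflow to run on Hive, one possible alternative to editing each task is to set engine once in the td block under _export, the same place the workflow already sets database. This is a sketch under an assumption: that td> picks up engine from _export the same way it picks up database. A parameter set directly on a task should still take precedence over the exported default, so you could switch a single task back with engine: presto.

```yaml
timezone: UTC

schedule:
  daily>: 07:00:00

_export:
  td:
    database: workflow_temp
    # Assumption: td> reads engine from this exported block,
    # just as it reads database, so both tasks below run on Hive.
    engine: hive

+task1:
  td>: queries/daily_open.sql
  create_table: daily_open

+task2:
  td>: queries/monthly_open.sql
  create_table: monthly_open
```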

Reference information, including additional Hive and Presto documentation, can be found on the Treasure Data Developer Portal.