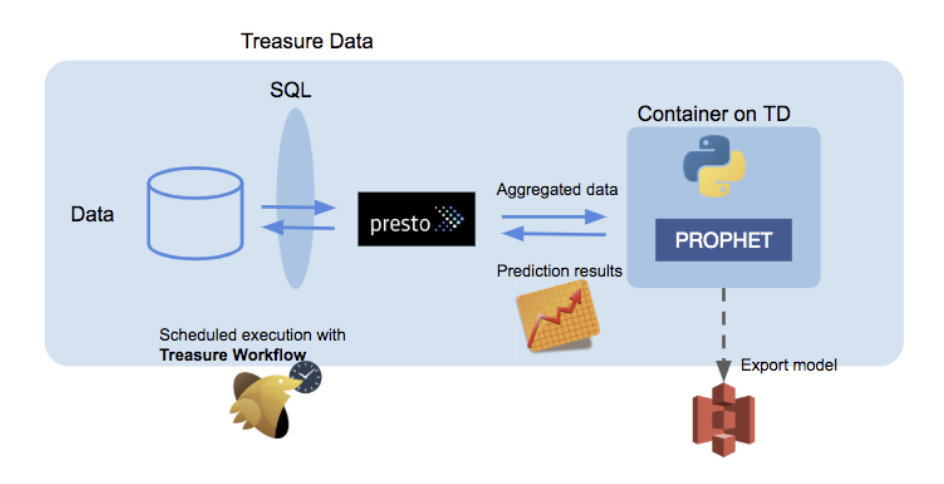

Treasure Workflow can predict time-series values, such as sales revenue or page views, using Facebook Prophet. Machine learning algorithms run from a custom Python script as part of your scheduled workflows, so you can call Prophet from that script for time series analysis and sales prediction.

Prophet is a procedure for forecasting time series data. It is robust to missing data and to shifts in the trend. Details of the data for these examples can be found in Prophet's official documentation.

These examples use Prophet's time series analysis to predict future sales values from past data. Pick the example that matches your access to Amazon S3 and Slack.

The workflow:

1. Fetches past sales data from Treasure Data
2. Builds a model with Prophet
3. Predicts future sales and writes the results back to Treasure Data
4. Uploads predicted figures to Amazon S3
5. Sends a notification to Slack

Prerequisites:

- Make sure that the custom scripts feature is enabled for your TD account.
- Download and install the TD Toolbelt and the TD Toolbelt Workflow module. For more information, see TD Workflow Quickstart.
- Basic knowledge of Treasure Workflow's syntax
- An S3 bucket and associated credentials
- A Slack webhook URL

Download the project from this repository

In a terminal window, change directory to timeseries. For example:

    cd timeseries

Run data.sh to prepare example data. It creates a database called timeseries and a table called retail_sales.

    ./data.sh

Run the example workflow as follows:

    td workflow push prophet

    td workflow secrets \
      --project prophet \
      --set apikey \
      --set endpoint \
      --set s3_bucket \
      --set aws_access_key_id \
      --set aws_secret_access_key \
      --set slack_webhook_url
    # Set secrets from STDIN like: apikey=x/xxxxx, endpoint=https://api.treasuredata.com, s3_bucket=$S3_BUCKET,
    # aws_access_key_id=AAAAAAAAAA, aws_secret_access_key=XXXXXXXXX, slack_webhook_url=https://hooks.slack.com/services/XXXXXXX/XXXXXX/XXXXXX
    # Where XXXXXXX/XXXXXX/XXXXXX is the value of the Slack URL where you want information to be populated automatically.

    td workflow start prophet predict_sales --session now

A notification of the prediction results is sent to your Slack channel.

Prediction results are stored in the predicted_sales table in the timeseries database.

Validate predicted sales values in TD Console with SQL as follows:

    SELECT
      ds,
      yhat,
      yhat_lower,
      yhat_upper
    FROM
      timeseries.predicted_sales
    ORDER BY
      ds DESC
    LIMIT 100

The yhat column contains the predicted sales values, and yhat_lower and yhat_upper give the bounds of the uncertainty interval.
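
The same rows can also be sanity-checked in Python once they have been fetched. The following is a minimal sketch, assuming the rows arrive as dictionaries keyed by the column names in the query; the function name check_intervals and the sample values are hypothetical and not part of the example project.

```python
from typing import Any, Iterable, List, Mapping


def check_intervals(rows: Iterable[Mapping[str, Any]]) -> List[str]:
    """Return the ds values of rows whose yhat lies outside [yhat_lower, yhat_upper]."""
    bad = []
    for row in rows:
        lower = float(row["yhat_lower"])
        upper = float(row["yhat_upper"])
        yhat = float(row["yhat"])
        if not (lower <= yhat <= upper):
            bad.append(str(row["ds"]))
    return bad


# Made-up sample rows, shaped like the query result (not real predictions).
sample_rows = [
    {"ds": "2017-01-01", "yhat": 100.0, "yhat_lower": 90.0, "yhat_upper": 110.0},
    {"ds": "2017-02-01", "yhat": 120.0, "yhat_lower": 125.0, "yhat_upper": 130.0},
]
print(check_intervals(sample_rows))  # lists the ds of the second row only
```

An empty result means every prediction sits inside its own interval, which is a cheap check that the write-back did not mix up columns.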

This example is for when you do not have access to S3 or Slack.

The workflow:

1. Fetches past sales data from Treasure Data
2. Builds a model with Prophet
3. Predicts future sales and writes the results back to Treasure Data

Prerequisites:

- Make sure that the custom scripts feature is enabled for your TD account.
- Download and install the TD Toolbelt and the TD Toolbelt Workflow module. For more information, see TD Workflow Quickstart.
- Basic knowledge of Treasure Workflow's syntax

Download the project from this repository

In a terminal window, change directory to timeseries. For example:

    cd timeseries

Run data.sh to prepare example data. It creates a database called timeseries and a table called retail_sales.

    ./data.sh

Run the example workflow as follows:

    td workflow push prophet

    td workflow secrets \
      --project prophet \
      --set apikey \
      --set endpoint
    # Set secrets from STDIN like: apikey=x/xxxxx, endpoint=https://api.treasuredata.com

    td workflow start prophet predict_sales_simple --session now

Predictions are stored in Treasure Data.
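
A custom script needs the values set with td workflow secrets at run time. One common pattern is for the workflow definition to pass them to the script as environment variables. The sketch below assumes that pattern; the variable names TD_API_KEY and TD_API_SERVER and the helper load_settings are assumptions for illustration, so check the .dig file in the project for the names it actually uses.

```python
import os
from typing import Dict, Mapping, Optional

# Assumed environment variable names; the project's .dig file defines the real ones.
REQUIRED = ("TD_API_KEY", "TD_API_SERVER")


def load_settings(env: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Read the required settings and fail early with a clear message if any is missing."""
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED if not env.get(name)]
    if missing:
        raise RuntimeError("Missing settings: " + ", ".join(missing))
    return {name: env[name] for name in REQUIRED}


# Example with placeholder values instead of the real process environment.
settings = load_settings(
    {"TD_API_KEY": "x/xxxxx", "TD_API_SERVER": "https://api.treasuredata.com"}
)
print(sorted(settings))
```

Failing before any query runs makes a forgotten secret show up as one readable error rather than an authentication failure deep inside the script.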

Make sure you are signed into a git account that has permission to see Treasure Data workflow samples.

Review the contents of the directory:

- predict_sales.dig - Example workflow for sales prediction and notification to Slack.
- predict.py - Custom Python script that uses Prophet. It also uploads figures to Amazon S3.

If you don't need to send a notification to Slack, you can remove the "+send_graph" step from predict_sales.dig.
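
How the "+send_graph" step posts to Slack is defined in the project and is not reproduced here. As a rough illustration of what a Slack incoming webhook expects (a JSON body with a text field, sent with POST), here is a minimal Python sketch. The helper name build_slack_request is hypothetical, and the URL is the placeholder used earlier, not a working webhook.

```python
import json
import urllib.request


def build_slack_request(webhook_url: str, text: str) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying a Slack webhook message."""
    body = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholder URL; replace it with the value stored in the slack_webhook_url secret.
req = build_slack_request(
    "https://hooks.slack.com/services/XXXXXXX/XXXXXX/XXXXXX",
    "Sales forecast finished",
)
print(req.get_method(), req.full_url)
# To actually send the message: urllib.request.urlopen(req)
```

Keeping request construction separate from sending makes the message easy to inspect before anything is posted to a channel.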

This example uses a dataset for sales data. You can use your own data in Treasure Data after modifying the query in the read_td function.

You must prepare the ds (datestamp) column and the y column. The y column represents target numerical values for forecasting, such as sales values or website page views (PVs).
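
To make the expected shape concrete, here is a minimal sketch in plain Python that turns raw event timestamps into rows with ds and y. It assumes Unix timestamps in seconds and daily buckets in UTC; the function name to_daily_counts and the sample timestamps are hypothetical. In practice this aggregation would normally be done in the SQL query itself.

```python
from collections import Counter
from datetime import datetime, timezone
from typing import Dict, Iterable, List, Union


def to_daily_counts(epoch_seconds: Iterable[int]) -> List[Dict[str, Union[str, int]]]:
    """Count events per UTC day and return rows with ds and y keys, sorted by ds."""
    counts = Counter(
        datetime.fromtimestamp(ts, tz=timezone.utc).strftime("%Y-%m-%d")
        for ts in epoch_seconds
    )
    return [{"ds": day, "y": n} for day, n in sorted(counts.items())]


# Three made-up page view timestamps: two on 2018-01-01 and one on 2018-01-02 (UTC).
events = [1514764800, 1514768400, 1514851200]
print(to_daily_counts(events))
```

Each output row has exactly one datestamp and one numeric target, which is the two-column layout described above.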

Here is example code for page view logs:

    import pytd.pandas_td as td
    from prophet import Prophet  # package was named fbprophet before release 1.0

    engine = td.create_engine('presto:sample_datasets')
    start_date = '2014-01-01'
    end_date = '2018-12-31'

    # Count page views per minute; Prophet expects the columns ds and y.
    df = td.read_td(f"""
        select TD_TIME_FORMAT(TD_DATE_TRUNC('minute', time), 'yyyy-MM-dd HH:mm:ss') as ds,
               count(1) as y
        from www_access
        where TD_TIME_RANGE(time, '{start_date}', '{end_date}', 'PDT')
        group by 1
        order by 1
    """, engine)

    # Fit the model, then forecast five future periods at monthly frequency.
    m = Prophet(changepoint_prior_scale=0.01).fit(df)
    future = m.make_future_dataframe(periods=5, freq='M')
    fcst = m.predict(future)
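
When adapting the query to your own table, it can help to generate it from a few parameters instead of editing the string by hand. The following sketch builds the same kind of ds and y query; the helper build_ds_y_query, its parameters, and the list of accepted truncation units are assumptions for illustration, not part of pytd or the example project.

```python
import re
from datetime import date

# Units accepted by this sketch; treat the exact set as an assumption.
ALLOWED_UNITS = {"minute", "hour", "day", "week", "month"}
TABLE_PATTERN = re.compile(r"[A-Za-z_][A-Za-z0-9_]*(\.[A-Za-z_][A-Za-z0-9_]*)?")


def build_ds_y_query(table: str, start_date: str, end_date: str,
                     unit: str = "day", tz: str = "UTC") -> str:
    """Return a query that counts rows per time bucket as columns ds and y."""
    if not TABLE_PATTERN.fullmatch(table):
        raise ValueError(f"unexpected table name: {table!r}")
    if unit not in ALLOWED_UNITS:
        raise ValueError(f"unsupported unit: {unit!r}")
    # Reject anything that is not a plain ISO date such as 2018-12-31.
    date.fromisoformat(start_date)
    date.fromisoformat(end_date)
    return (
        f"select TD_TIME_FORMAT(TD_DATE_TRUNC('{unit}', time), "
        f"'yyyy-MM-dd HH:mm:ss') as ds, count(1) as y\n"
        f"from {table}\n"
        f"where TD_TIME_RANGE(time, '{start_date}', '{end_date}', '{tz}')\n"
        f"group by 1\n"
        f"order by 1"
    )


query = build_ds_y_query("www_access", "2014-01-01", "2018-12-31", unit="hour", tz="PDT")
print(query)
```

The resulting string can be passed to read_td in place of the inline query. Validating the table name, unit, and dates first keeps malformed input out of the SQL text.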