You can write job results directly to Databricks. For example, you might integrate data from multiple sources into Treasure Data, run queries to organize the data, and then use a job that applies the connector to export the data to Databricks.

For import integration, see Databricks Import Integration.

- Basic knowledge of Treasure Data, including the TD Toolbelt.

- Basic knowledge of Databricks and access to a Databricks account

- Access to a Databricks server

- If the Databricks server is in hibernation mode, it can take a few minutes to start, which may cause the connector to timeout.

- Databricks doesn't support transactions across multiple statements. Thus, the replace and truncate mode can partially fail and can't roll back. For example, In the truncate or replace mode, if the delete or create replace operation is successful but insert data from the temp table into a target table fails, the connector can't roll back to the previous data.

You can use the Treasure Data Console to configure your connection.

- Log in to the Databricks website.

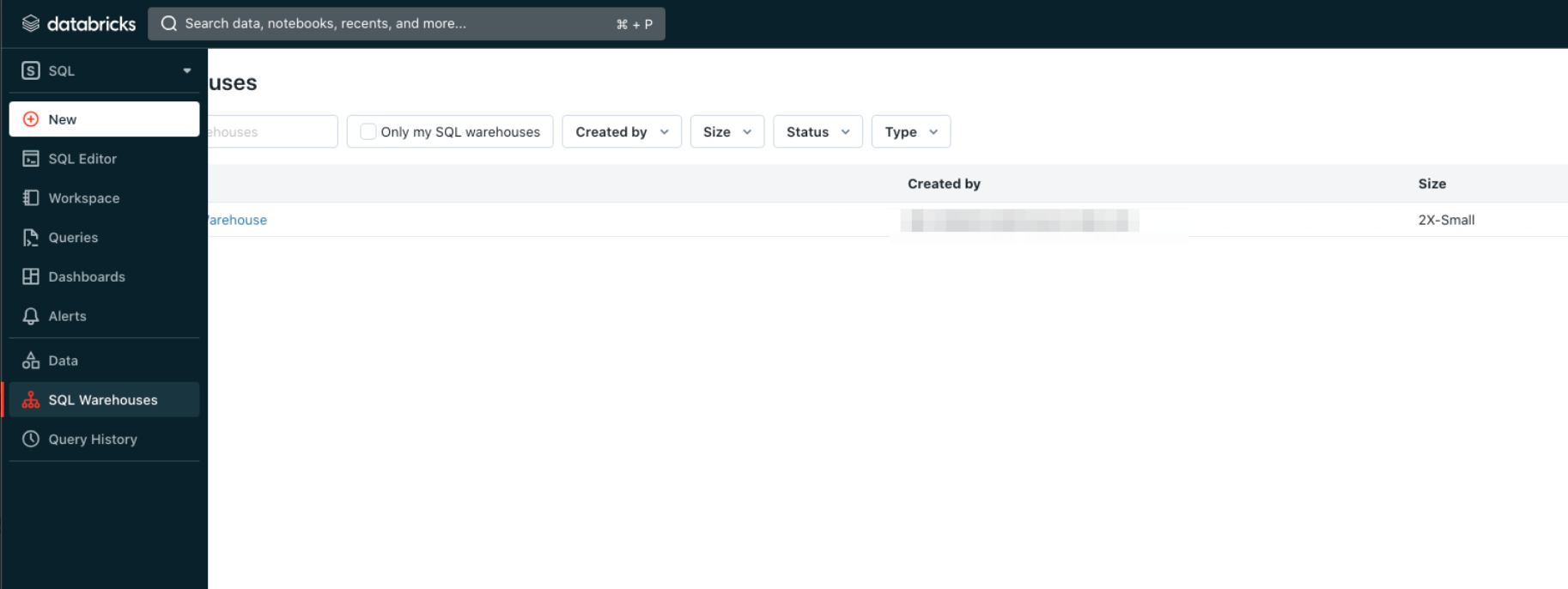

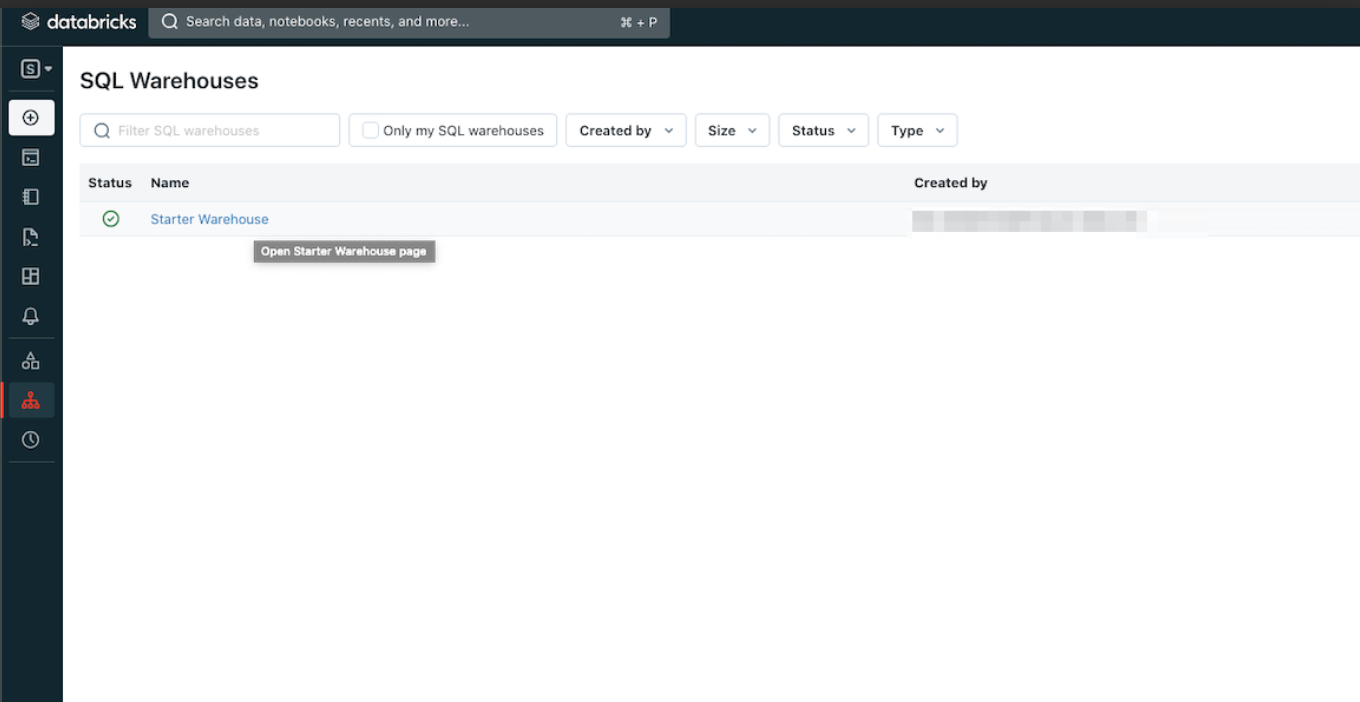

- Navigate to the SQL tab and then select SQL Warehouses.

- Select an instance of an SQL Warehouse.

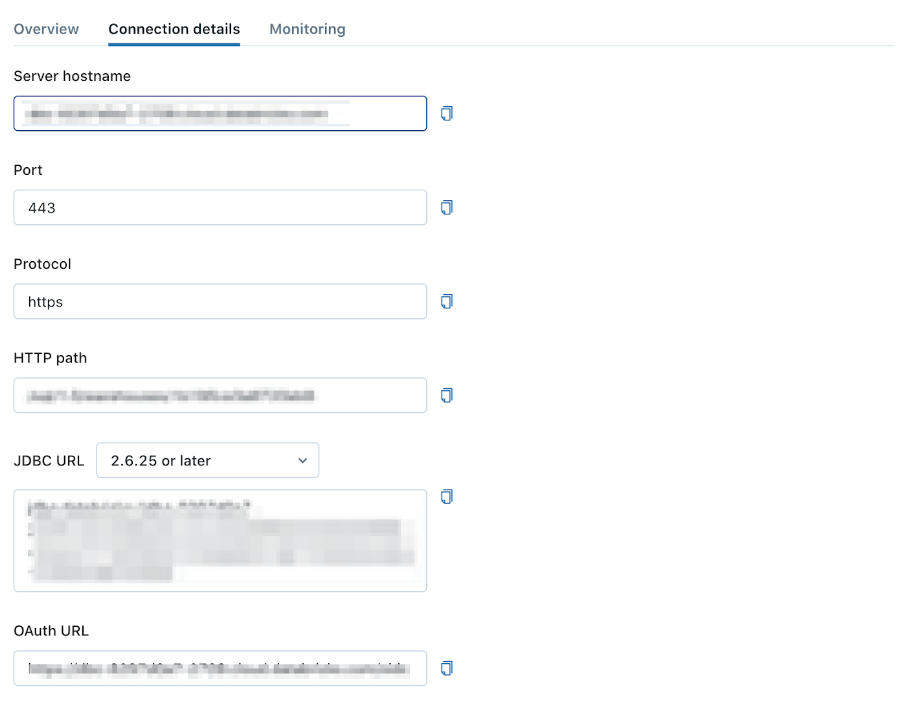

- Select the Connection details tab.

- Note the Server hostname and HTTP path for the SQL Warehouse.

Go to Treasure Data Connections

- Select Integrations Hub > Catalog.

- Search for Databricks in the catalog.

- Hover your mouse over the icon and select Create Authentication.

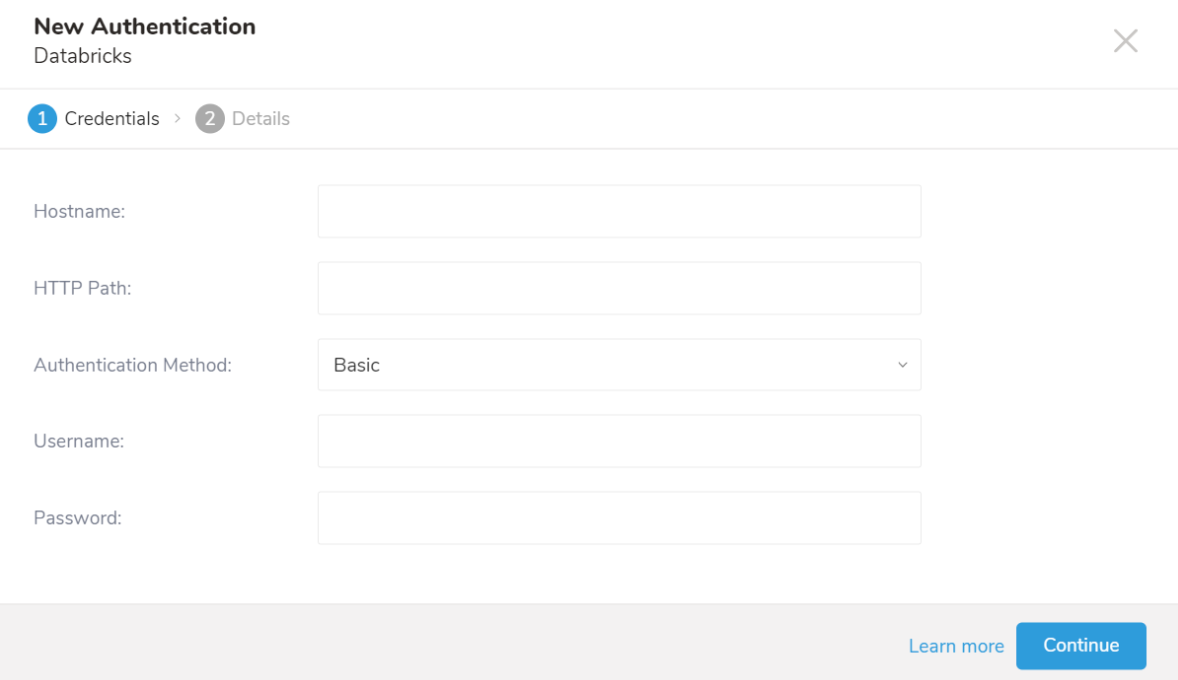

- Ensure that the Credentials tab is selected, and then enter credential information for the integration.

| Parameter | Description |

|---|---|

| Hostname | This is the hostname of the Databricks instance, |

| HTTP Path | This is the HTTP Path of the Databricks instance. |

| Authentication Method | This is the authentication method: it can be a Basic or Personal Access Token. |

| Username | This is the username used to log in. It is only valid when the Authentication Method is Basic. |

| Password | This is the password used to log in. It is only valid when the Authentication Method is Basic. |

| Token | This is the Databricks personal access token. It is only valid when the Authentication Method is a Personal Access Token. |

- Select Continue.

- Enter a name for your authentication, and select Done.

Create or reuse a query that configures the data connection.

Go to the Treasure Data console. Go to the Query Editor. Access the query that you plan to use to export data.

Sometimes, you need to define the column mapping in the query.

For Example:

SELECT c_id, c_double, c_long, c_string, c_boolean, c_timestamp, c_json FROM (VALUES (1, 100, 10, 'T4', true, '2018-01-01','{ "name":"John"}'),(2, 100, 99, 'P@#4', false, '2018-01-01', '{ "name":"John"}'),(3, 100.1234, 22, 'C!%^&*', false, '2018-01-01','{ "name":"John"}')) tbl1 (c_id, c_double, c_long, c_string, c_boolean, c_timestamp, c_json)Select Output Results, located at the top of your query editor.

- Type the connection name in the search box to filter and select your connection.

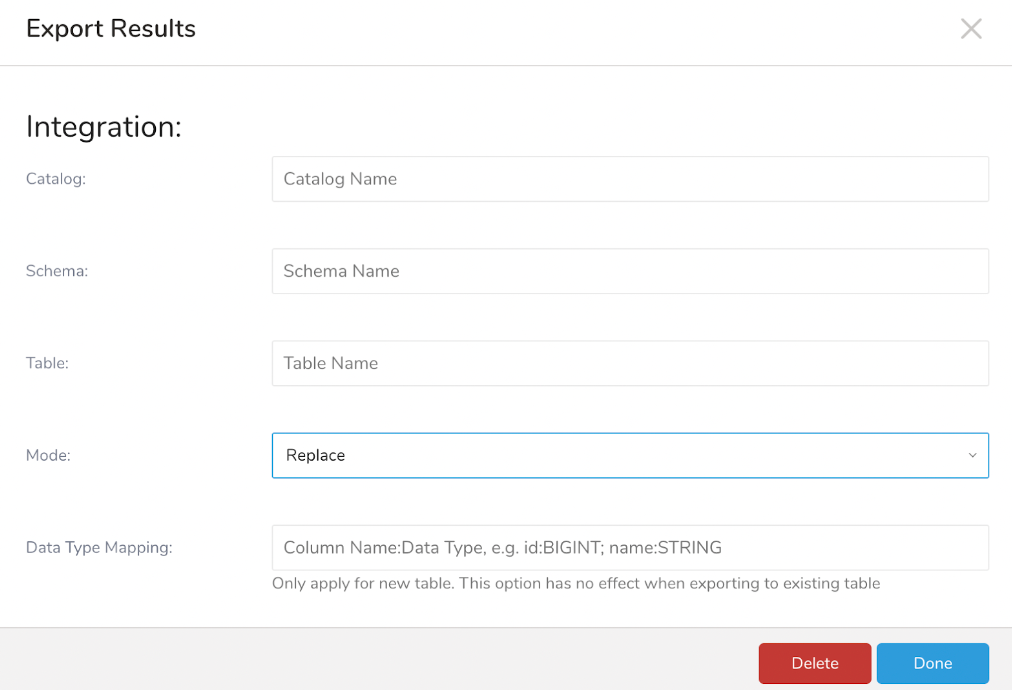

- After you select your Databricks connection, the Configuration or Export Results dialog pane appears:

Specify parameters. The parameters are as follows:

Catalog (required): This is the dataset catalog.

Schema (required): This is the dataset schema.

Table (required): The table to which results will be exported. If it does not exist, a new one will be created.

Mode (required): There are three modes to fulfill results to Databricks table: Append, Overwrite, and Replace.

- Append: TD results will be appended to the target table

- Overwrite: The target table will be erased, then TD results will be appended from the beginning of the target table

- Replace: The target table will be re-created, then TtrD results will be appended from the beginning of the target table

Data type mapping: It will be explained in the following section.

Here is the table for 1-1 default mapping from the type in TD results to the target table in case the target table does not exist:

| TD results | Databricks |

|---|---|

| string | STRING |

| double | DOUBLE |

| long | BIGINT |

| timestamp | TIMESTAMP |

| boolean | BOOLEAN |

| json | STRING |

You might want to set the different type as the default. Data Type Mapping explicitly sets a specific type (for example, STRING) to a specific column. Data Type Mapping applies a type only to columns in your target table.

If you use Data Mapping, the following is true:

- If the target table does not exist, then the export job will create a new target table.

The syntax for the data type mapping parameter is col_name_1: STRING; col_name2: BIGINT. You must provide: the column name and Databricks data type.

TD Databricks Export doesn't support BINARY, INTERVAL, STRUCTURE, ARRAY, and MAP data type.

For example, in the query:

SELECT c_id, c_double, c_long, c_string, c_boolean, c_timestamp, c_json FROM (VALUES (1, 100, 10, 'T4', true, '2018-01-01','{ "name":"John"}'),(2, 100, 99, 'P@#4', false, '2018-01-01', '{ "name":"John"}'),(3, 100.1234, 22, 'C!%^&*', false, '2018-01-01','{ "name":"John"}')) tbl1 (c_id, c_double, c_long, c_string, c_boolean, c_timestamp, c_json)

Optional: Use of Scheduled Jobs for OutputYou can use Scheduled Jobs with Result Output to periodically write the output result to a specific target destination.

You can also send segment data to the target platform by creating an activation in the Audience Studio.

- Navigate to Audience Studio.

- Select a parent segment.

- Open the target segment, right-mouse click, and then select Create Activation.

- In the Details panel, enter an Activation name and configure the activation according to the previous section on Configuration Parameters.

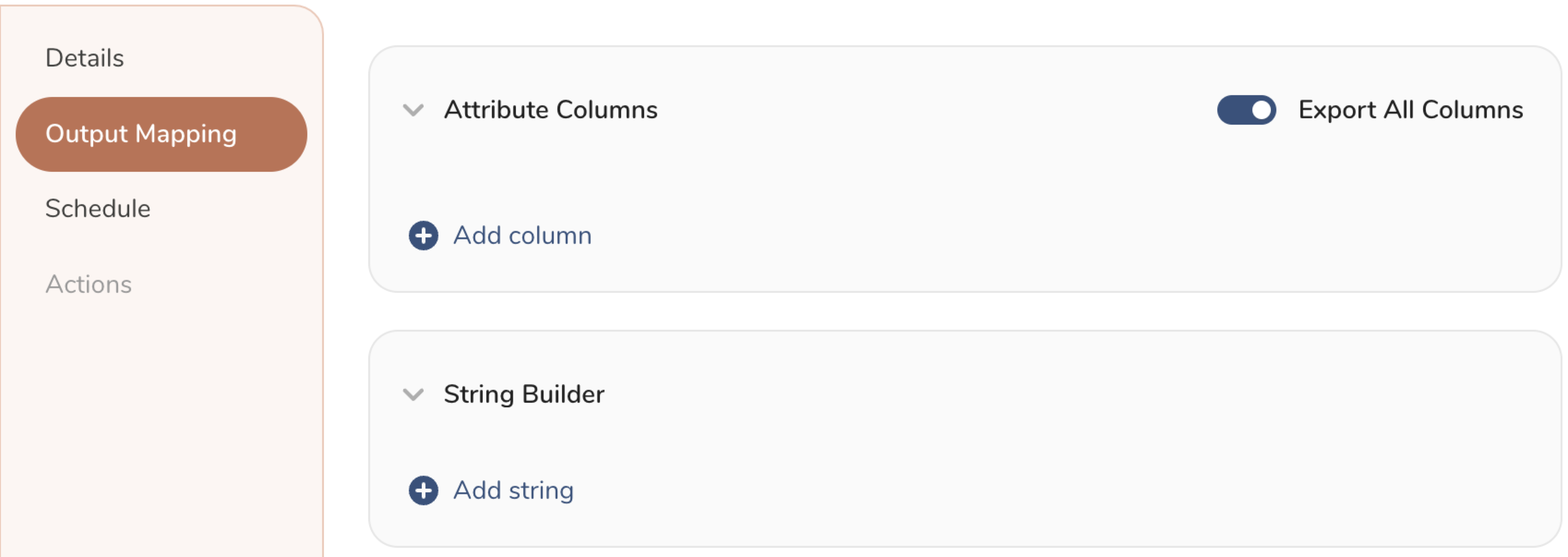

- Customize the activation output in the Output Mapping panel.

- Attribute Columns

- Select Export All Columns to export all columns without making any changes.

- Select + Add Columns to add specific columns for the export. The Output Column Name pre-populates with the same Source column name. You can update the Output Column Name. Continue to select + Add Columnsto add new columns for your activation output.

- String Builder

- + Add string to create strings for export. Select from the following values:

- String: Choose any value; use text to create a custom value.

- Timestamp: The date and time of the export.

- Segment Id: The segment ID number.

- Segment Name: The segment name.

- Audience Id: The parent segment number.

- + Add string to create strings for export. Select from the following values:

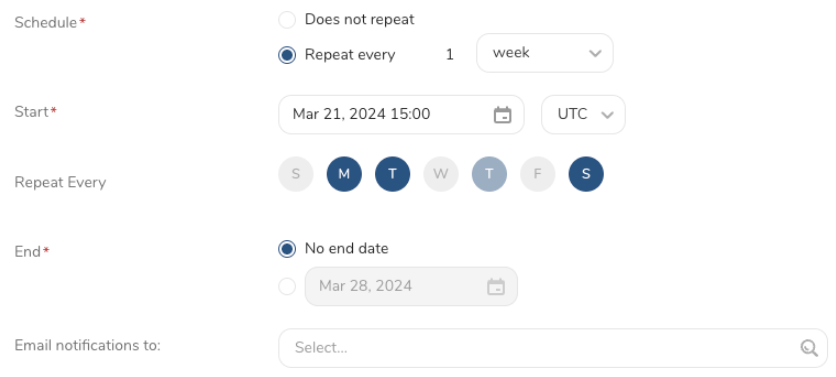

- Set a Schedule.

- Select the values to define your schedule and optionally include email notifications.

- Select Create.

If you need to create an activation for a batch journey, review Creating a Batch Journey Activation.

Within Treasure Workflow, you can specify the use of this data connector to output data.

timezone: UTC

_export:

td:

database: sample_datasets

+td-result-into-databricks:

td>: queries/sample.sql

result_connection: your_connection_name

result_settings:

catalog: CATALOG

schema: default

table: TARGET_TABLE

mode: insert

column_options:

col1: {type: 'DATE'}Install the TD Toolbelt.

Add the Databricks result output destination by using the -r / --result option for the td query command:

td query -d mydb \

-w 'SELECT id, name FROM source_table' \

--type presto \

-r '{"type": "databricks", "host_name": "hostname", "http_path": "httpPath", "auth_method": "BASIC", "username": "username", "password": "password", catalog: "catalog", "schema": "default", "table": "target_table", "mode": "replace", "column_options": {"col1": {"type": "DATE"}}}'Some parameters, such as catalog and schema, are self-explanatory and are the same parameters used in the TD Console (described in the "Configure the connection by specifying the parameters" section). However, some parameters are either different in the key or value:

mode (required): The value for mode is a raw value of the Mode parameter:

- insert: TD results will be appended to the target table

- truncate_insert: The target table will be erased, then TD results will be appended from the beginning of the target table.

- replace: The target table will be re-created, then TtrD results will be appended from the beginning of the target table

column_options: The data type mapping.

Add the Databricks result output destination by using the -r / --result option for the td sched:create command:

td sched:create every_6_mins "*/6 * * * *" \

-d mydb \

-w 'SELECT id, name FROM source_table' \

--type presto \

-r '{"type": "databricks", "host_name": "hostname", "http_path": "httpPath", "auth_method": "BASIC", "username": "username", "password": "password", catalog: "catalog", "schema": "default", "table": "target_table", "mode": "replace", "column_options": {"col1": {"type": "DATE"}}}'