This is a summary of new features and improvements introduced in the September 1st, 2018 release. If you have any product feature requests, submit them at feedback.treasuredata.com.

- Import: Cvent Beta

- Export: Cheetah Digital MailPublisher Beta

- Segment and Activate: Facebook Custom Audience with Personalization API

- User Experience: Search Integrations by Technology Vertical

- User Experience: Search Connections by Integration Type and Owner Name

- Import: MySQL Data Connector Upgrade Notice of Action

- Query: Hive - Queries Fail Automatically when Disk Space Limits Reached

- Query: Presto - TD_SESSIONIZE to Be Deprecated, Use TD_SESSIONIZE_WINDOW Instead

- Query: Presto - Upgrade to Presto 0.205 Coming in September - Code Changes Required

- Query: Presto - TD_INTERVAL UDF

Easily ingest your event data from Cvent into Treasure Data with the new Cvent data connector. With this integration, you can consolidate Contacts, Events, Invitees, and Registrations with other sources of existing data in the Treasure Data Customer Data Platform.

For details, see the Cvent Data Connector article.

Note: This integration is currently under private beta. Contact the Treasure Data team for further details. The connector documentation is available only to beta participants.

Treasure Data can publish segments into Cheetah Digital MailPublisher Smart email lists. With this integration, you can export target lists from your existing data sources with custom properties to use with MailPublisher Smart drafts for personalized emails.

For details, see the Cheetah Digital MailPublisher Smart article.

Note: This integration is currently under private beta. Contact the Treasure Data team for further details. The connector documentation is available only to beta participants.

Create optimized Facebook Ads and accurately target your audience with Personalization API and Segmentation Builder. You can send a segment of customer profiles to Facebook Custom Audience directly through Facebook Pixel.

For details, see the article Facebook Custom Audience with Personalization API.

You can now filter integrations by technology vertical, such as Advertising and eCommerce, on the Catalog page.

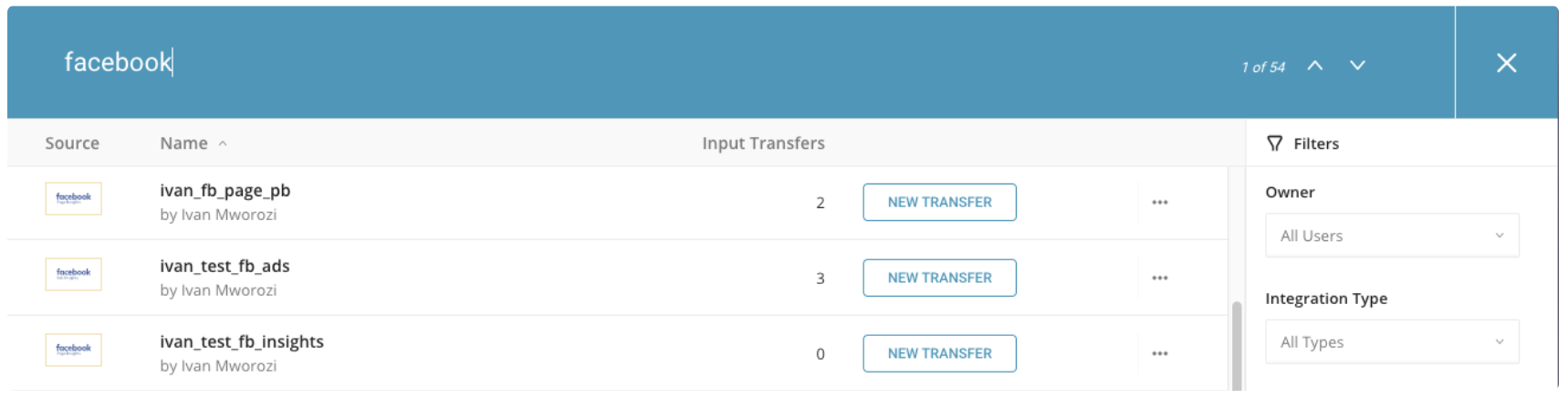

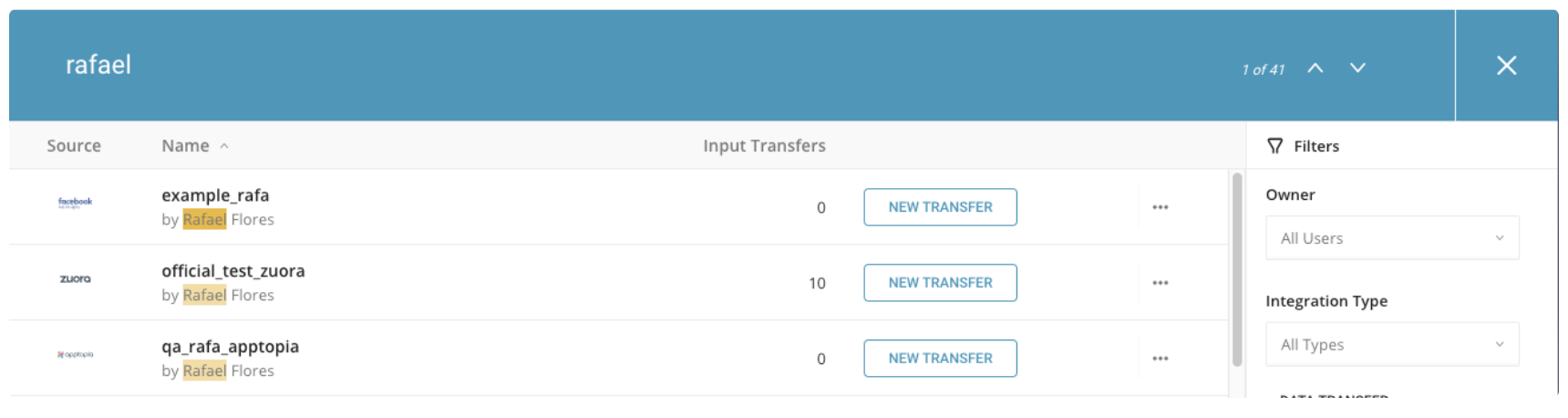

You can now more easily find your connections through additional search capabilities within the My Connections page.

Search by Integration Type:

Search by Owner Name:

The MySQL data connector upgrade introduces a backward-incompatible change that affects date-time values. You must take action to avoid disruptions to your MySQL import jobs.

For details, see Backward Incompatibility: Required Setting Change for the MySQL Data Connector.

Hive processing sometimes moves data from S3 into local storage or HDFS storage on the Hadoop cluster nodes. Hive jobs fail when disk space is exhausted on those nodes, either when one of the Hadoop nodes runs out of local space or when a job reaches its overall storage limit on HDFS.

In the past, under these conditions, a Treasure Data operator would be alerted to manually kill the job, and support would manually notify the customer and follow up. We are changing our handling of these situations to be more predictable and automated, and more in line with other job failures.

Under the new behavior, such jobs fail automatically, and the job Output Log contains a diagnostic message about the failure.

If the disk space is full on one Hadoop worker, the output log contains:

Diagnostic Messages for this Task:

Error: Task exceeded the limits:

org.apache.hadoop.mapred.Task$TaskReporter$TaskLimitException:

too much data in local scratch dir=/mnt4/hadoop/yarn/cache/yarn/nm-local-dir/usercache/1/appcache/application_1522879910596_701143.

current size is 322234851654 the limit is 322122547200

If the query exceeds the limit of HDFS storage, the output log contains:

Error: java.lang.RuntimeException:

org.apache.hadoop.hive.ql.metadata.HiveException:

org.apache.hadoop.hdfs.protocol.DSQuotaExceededException:

The DiskSpace quota of /mnt/hive/hive-1/0 is exceeded:

quota = 8246337208320 B = 7.50 TB but diskspace consumed = 8246728346553 B = 7.50 TB

To reduce disk usage by Hadoop jobs, limit the amount of input data, for example by applying TD_TIME_RANGE to restrict the time period of any subqueries that scan data, or consider applying more restrictive conditions to JOINs.
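As a rough illustration of this advice, the Hive query below restricts both sides of a join to a single month before joining. It is only a sketch: the table names (`pageviews`, `purchases`), the `user_id` column, and the date range are hypothetical placeholders, not part of this release.

```sql
-- Hive sketch. Table names, columns, and dates are hypothetical.
-- Each subquery is limited with TD_TIME_RANGE so that less data
-- is scanned and staged on the Hadoop nodes.
SELECT p.user_id,
       COUNT(1) AS purchase_count
FROM (
  -- Distinct visitors within the target month only
  SELECT user_id
  FROM pageviews
  WHERE TD_TIME_RANGE(time, '2018-08-01', '2018-09-01', 'UTC')
  GROUP BY user_id
) v
JOIN (
  -- Purchases within the same month only
  SELECT user_id
  FROM purchases
  WHERE TD_TIME_RANGE(time, '2018-08-01', '2018-09-01', 'UTC')
) p
ON v.user_id = p.user_id
GROUP BY p.user_id
```

Filtering inside each subquery, rather than after the join, is what keeps the intermediate data small.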

The TD_SESSIONIZE() function is now deprecated due to performance issues and sometimes-inconsistent results.

The UDF TD_SESSIONIZE_WINDOW() was introduced in 2016 to replace TD_SESSIONIZE. It is a Presto window function with equivalent functionality, more consistent results and faster, more reliable performance.

TD_SESSIONIZE() will be removed in January 2019. Code that uses TD_SESSIONIZE() should be rewritten as soon as possible to use TD_SESSIONIZE_WINDOW().
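The rewrite usually amounts to moving the partitioning column out of the function arguments and into a window clause. The sketch below assumes a hypothetical `web_logs` table with `ip_address` and `path` columns and a one-hour session timeout; check the argument order against the UDF reference before relying on it.

```sql
-- Presto sketch. The table, columns, and 3600-second timeout are hypothetical.

-- Before (deprecated): depends on pre-sorted input rows
SELECT TD_SESSIONIZE(time, 3600, ip_address) AS session_id,
       time, ip_address, path
FROM (
  SELECT time, ip_address, path
  FROM web_logs
  ORDER BY ip_address, time
) t

-- After: the window clause states the partitioning and ordering explicitly
SELECT TD_SESSIONIZE_WINDOW(time, 3600)
         OVER (PARTITION BY ip_address ORDER BY time) AS session_id,
       time, ip_address, path
FROM web_logs
```

The two statements are shown together for comparison only; run them as separate queries.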

Presto 0.205 enforces some ANSI SQL rules more strictly than previous releases. Several constructs that were previously legal now cause syntax errors. Some customers will have to make minor changes to their Presto queries to ensure compatibility with the new release.

You can make these modifications now, in our current Presto environment, and when you migrate to the new release your queries will continue to work.

For details on the required changes, see the Presto 0.205 Release article.

We’ve added the TD_INTERVAL UDF. TD_INTERVAL() is a companion function to TD_TIME_RANGE(). TD_INTERVAL can be used to compute relative time ranges that otherwise require complex date manipulation.
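To give a sense of the difference, the sketch below selects the previous day's rows in two ways. The `web_logs` table is hypothetical, and the manual version is our approximation of what a relative range typically looks like without TD_INTERVAL, not an official equivalence.

```sql
-- Presto sketch. The web_logs table is hypothetical.

-- Manual date arithmetic for "yesterday"
SELECT COUNT(1) AS events
FROM web_logs
WHERE TD_TIME_RANGE(
        time,
        TD_TIME_ADD(TD_DATE_TRUNC('day', TD_SCHEDULED_TIME()), '-1d'),
        TD_DATE_TRUNC('day', TD_SCHEDULED_TIME()))

-- The same intent expressed with TD_INTERVAL
SELECT COUNT(1) AS events
FROM web_logs
WHERE TD_INTERVAL(time, '-1d')

-- Last seven days, evaluated in a specific time zone
SELECT COUNT(1) AS events
FROM web_logs
WHERE TD_INTERVAL(time, '-7d', 'JST')
```

Each statement is meant to be run as a separate query.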