Treasure Data allows you to import data directly from your organization’s Zendesk account.

- Basic knowledge of Treasure Data

- Zendesk account

- Zendesk Zopim account to retrieve Chat data

If your security policy requires IP whitelisting, you must add Treasure Data's IP addresses to your allowlist to ensure a successful connection.

Please find the complete list of static IP addresses, organized by region, at the following document

Open the TD Console.

Navigate to Integrations Hub > Catalog.

Search for and select Zendesk.

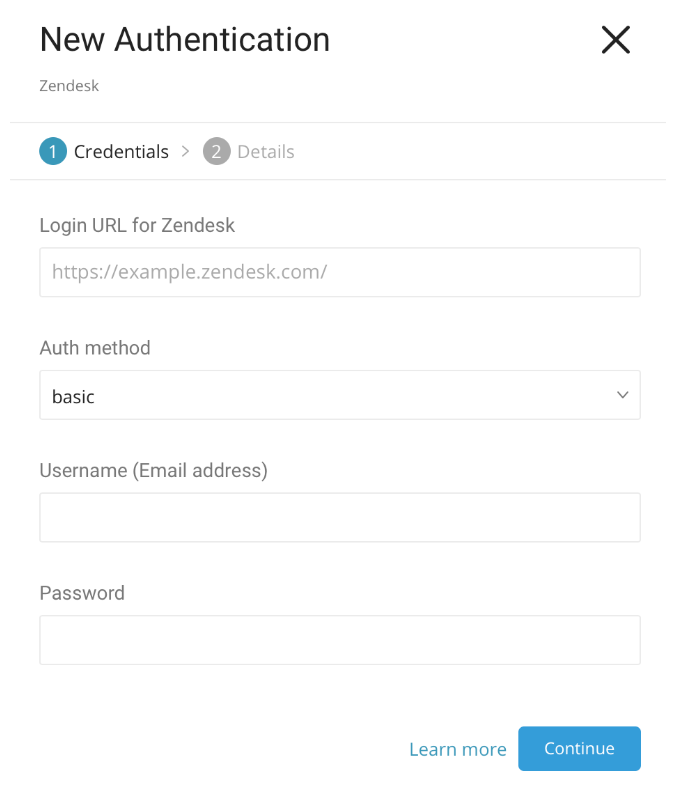

Click Create. You are creating an authenticated connection. The following dialog opens.

There are three options for Auth method: basic, token, oauth.

- To import chat data, enter https://www.zopim.com as the Login Url for Zendesk. Token authentication is not supported for Chat.

Fill in all the required fields, then click Continue.

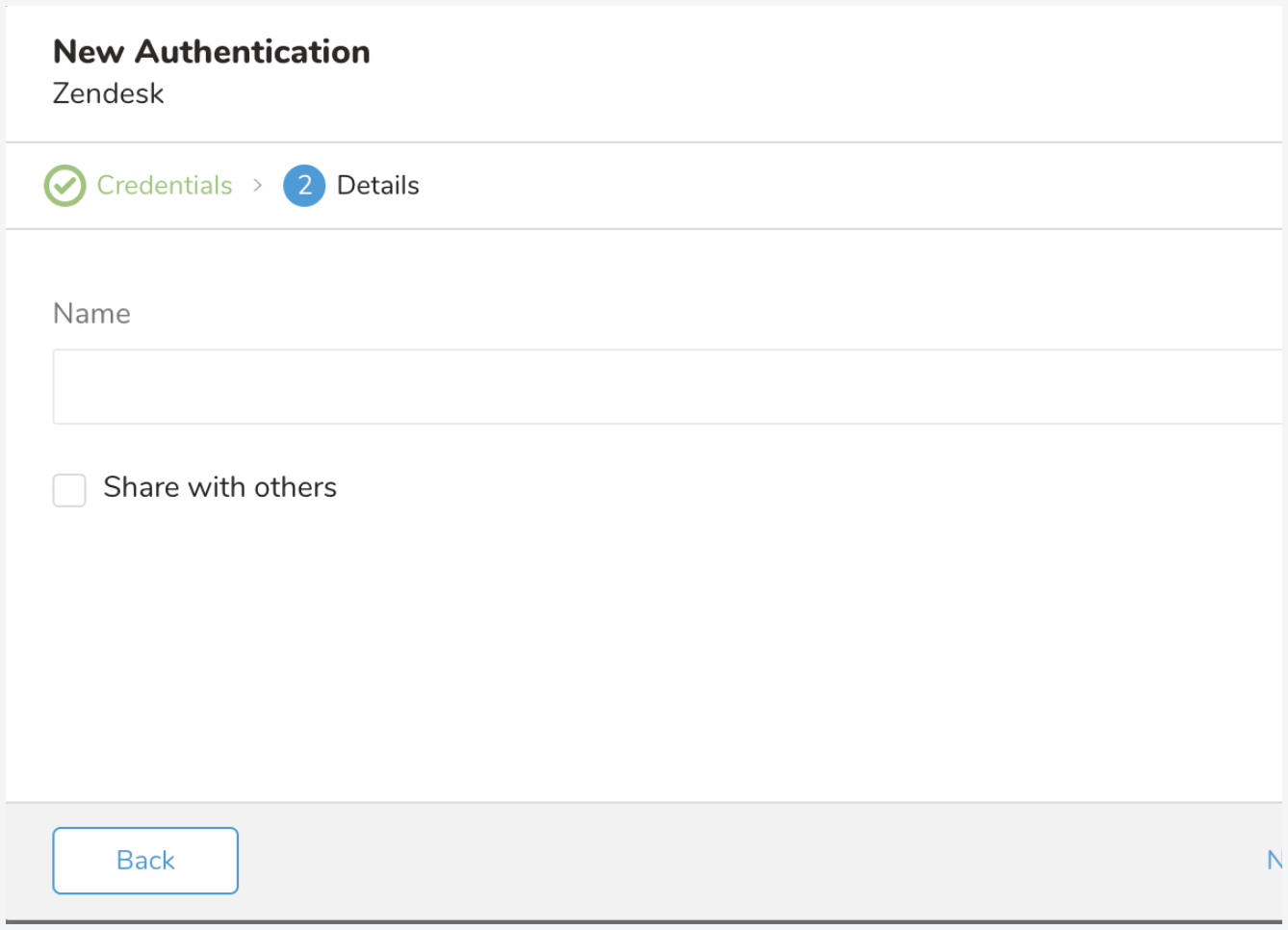

Name your new Zendesk connection. Click Done.

After creating the authenticated connection, you are automatically taken to the Authentications tab.

Search for the connection you created and click New Source.

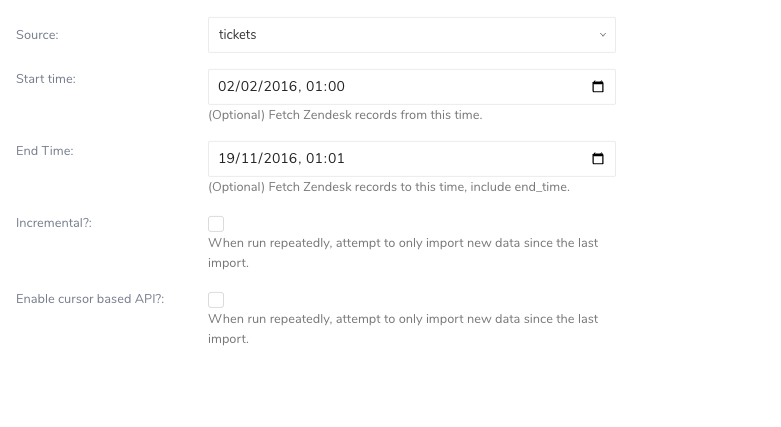

Edit the appropriate fields.

| Parameter | Description |
|---|---|
| Source | Specify the kind of object that you want to transfer from Zendesk: tickets, ticket_fields, ticket_forms, ticket_events, ticket_metrics, users, organizations, scores, recipients, object_records, relationship_records, user_events, and chat. - object_records and relationship_records provide information about Zendesk custom objects. - scores and recipients provide information about Zendesk NPS. - Chat limitations: only an administrator or owner has permission to retrieve chat data, and the "Include Subresources" and "De-duplicate records" options are not supported. |
| Incremental | Allows the connector to run in incremental mode, which enables the Start time and End time options. |
| Start time | Enables you to select only objects that have been updated since the 'start_time'. - If Start time is not specified, all objects are retrieved from the beginning. |
| End time | Enables you to select only objects that have been updated up to the 'end_time'. - If End time is not specified, all objects up to now are retrieved. - Start time and End time can be combined to select only objects that have been updated within a specific period, from 'start_time' until 'end_time'. |
| Enable cursor-based API | Enables you to use the cursor API flow to fetch more than 100,000 records. - Applies to the tickets and users sources only. - Supports incremental mode with Start time only. |
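The way Start time and End time narrow the selection can be sketched in a few lines of Python. This is only an illustration of the behavior described in the table, not the connector's implementation: the function name `select_updated` and the sample records are hypothetical, and treating both bounds as inclusive is an assumption.

```python
from datetime import datetime, timezone
from typing import Iterable, Optional


def select_updated(records: Iterable[dict],
                   start_time: Optional[datetime] = None,
                   end_time: Optional[datetime] = None) -> list:
    """Keep records whose updated_at falls inside the optional window."""
    selected = []
    for record in records:
        updated_at = record["updated_at"]
        # No Start time: nothing is excluded at the lower end.
        if start_time is not None and updated_at < start_time:
            continue
        # No End time: everything up to now is kept.
        if end_time is not None and updated_at > end_time:
            continue
        selected.append(record)
    return selected


# Hypothetical sample data for illustration only.
tickets = [
    {"id": 1, "updated_at": datetime(2019, 1, 10, tzinfo=timezone.utc)},
    {"id": 2, "updated_at": datetime(2019, 5, 20, tzinfo=timezone.utc)},
    {"id": 3, "updated_at": datetime(2019, 9, 1, tzinfo=timezone.utc)},
]
start = datetime(2019, 5, 15, tzinfo=timezone.utc)
end = datetime(2019, 8, 1, tzinfo=timezone.utc)
window_ids = [t["id"] for t in select_updated(tickets, start, end)]
```

With both bounds set, only the ticket updated inside the window is kept. Omitting either bound widens the selection in that direction.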

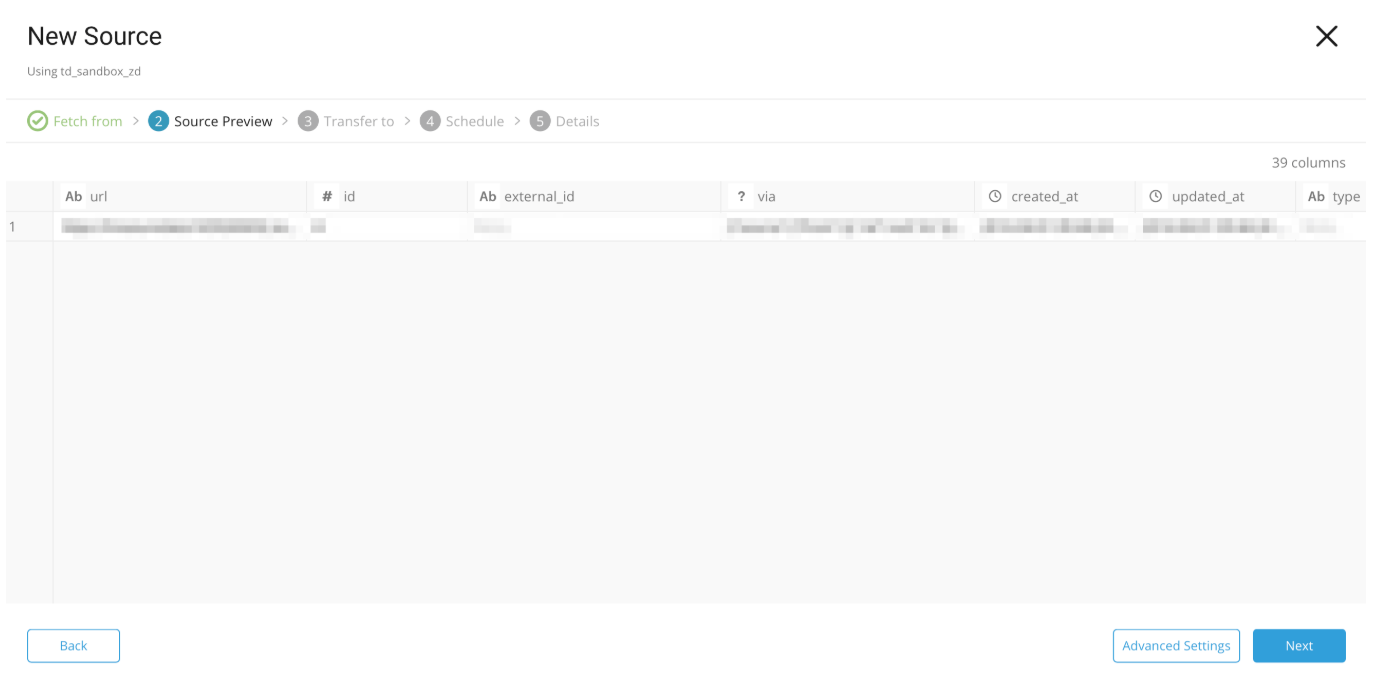

Preview your data. To make changes, click Advanced Settings.

Select Next.

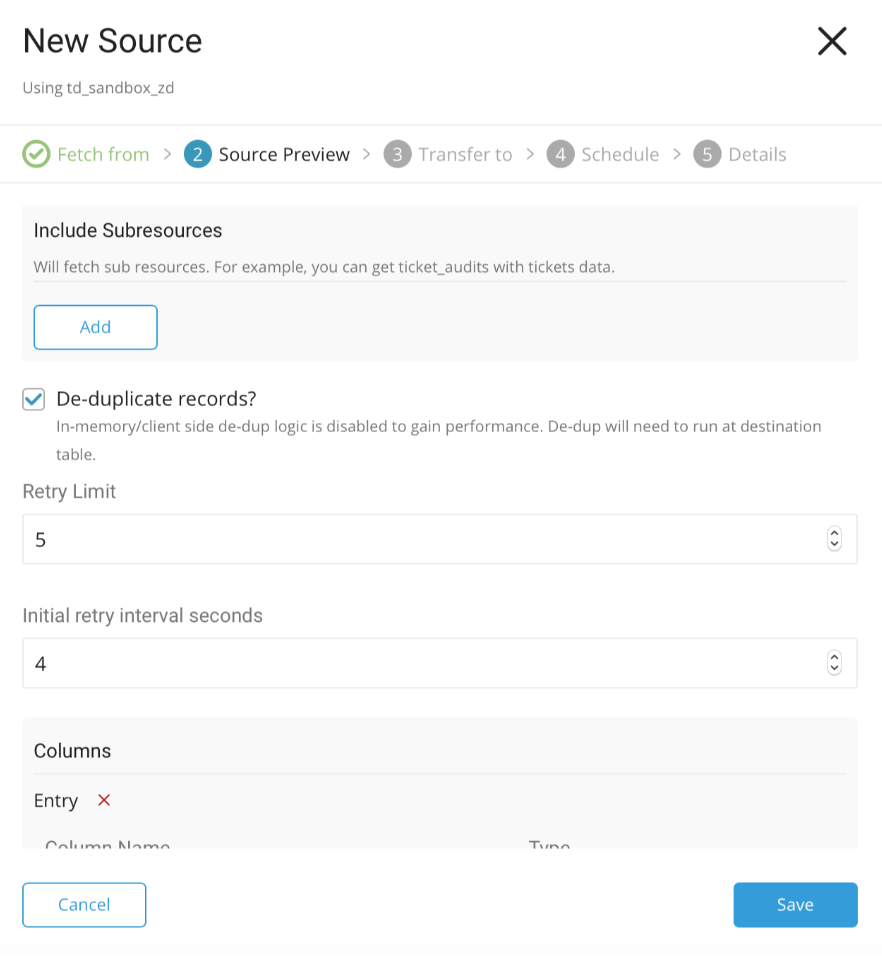

After selecting Advanced Settings, the following dialog opens.

Edit the parameters. Select Save and Next.

| Parameter | Description |
|---|---|
| Include Subresources | Enables you to fetch sub-resources along with the main object. Click Add to add a sub-resource by name, and add a corresponding column as well. The sub-resource is treated as a JSON object and presented in a column with the same name. - For example, Zendesk supports the endpoint GET /api/v2/users/{user_id}/organizations.json, so organizations can be treated as a sub-resource of users, returning all the organizations that a user belongs to. - To configure it, add 'organizations' as a sub-resource and add a column with the same name. The data type should be JSON. - Include Subresources is not supported for Chat. |
| De-duplicated Records | Enables you to avoid duplicated records when running in incremental mode, because the Zendesk API doesn't prevent duplication. - Deduplication is not supported for Chat. |
| Retry Limit | Indicates how many times the job should retry when an error occurs. |
| Initial retry interval seconds | Indicates the waiting time before the first retry, measured in seconds. |
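To illustrate what de-duplication means for an incremental import, the following Python sketch drops repeated records while preserving order. It is not the connector's actual logic: the function name `deduplicate` is hypothetical, and identifying a record by the pair (`id`, `updated_at`) is an assumption made for this example.

```python
def deduplicate(records):
    """Drop repeated records, keeping the first occurrence of each key."""
    seen = set()
    unique = []
    for record in records:
        # Assumed identity key: the same id at the same update time.
        key = (record["id"], record["updated_at"])
        if key in seen:
            continue
        seen.add(key)
        unique.append(record)
    return unique


# Hypothetical pages where the last record of one page repeats on the next.
page_1 = [
    {"id": 10, "updated_at": "2019-05-15T00:00:00Z"},
    {"id": 11, "updated_at": "2019-05-15T00:00:05Z"},
]
page_2 = [
    {"id": 11, "updated_at": "2019-05-15T00:00:05Z"},
    {"id": 12, "updated_at": "2019-05-15T00:00:09Z"},
]
unique_ids = [r["id"] for r in deduplicate(page_1 + page_2)]
```

A record that was genuinely updated again has a different `updated_at`, so it is kept as a separate row under this assumed key.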

For data placement, select the target database and table where you want your data placed and indicate how often the import should run.

Select Next. Under Storage, create a new database or select an existing one, and create a new table or select an existing one, to hold the imported data.

Select a Database > Select an existing or Create New Database.

Optionally, type a database name.

Select a Table > Select an existing or Create New Table.

Optionally, type a table name.

Choose the method for importing the data.

- Append (default): Data import results are appended to the table. If the table does not exist, it is created.

- Always Replace: Replaces the entire content of an existing table with the result output of the query. If the table does not exist, a new table is created.

- Replace on New Data: Replaces the entire content of an existing table with the result output only when there is new data.
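The difference between the three import methods can be modeled with plain Python lists. This is a simplified sketch of the behavior described above, not Treasure Data code: the function name `apply_import` and the mode strings are hypothetical labels chosen for the example.

```python
def apply_import(existing, imported, mode="append"):
    """Return the table content after an import, for the given method."""
    if mode == "append":
        # New rows are added after the rows already in the table.
        return existing + imported
    if mode == "always_replace":
        # The table content is replaced, even when the import is empty.
        return list(imported)
    if mode == "replace_on_new_data":
        # The table content is replaced only when the import has rows.
        return list(imported) if imported else existing
    raise ValueError(f"unknown import method: {mode}")


table = ["row-1", "row-2"]
appended = apply_import(table, ["row-3"], "append")
emptied = apply_import(table, [], "always_replace")
kept = apply_import(table, [], "replace_on_new_data")
```

The last two calls show where the methods diverge: an empty import clears the table under Always Replace but leaves it untouched under Replace on New Data.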

Select the Timestamp-based Partition Key column. If you want to set a partition key seed other than the default key, you can specify a long or timestamp column as the partitioning time. By default, the time column is upload_time, set with the add_time filter.

Select the Timezone for your data storage.

Under Schedule, you can choose when and how often you want to run this query.

- To run the query once, select Off.

  - Select Scheduling Timezone.

  - Select Create & Run Now.

- To run the query repeatedly, select On.

  - Select the Schedule. The UI provides these four options: @hourly, @daily, @monthly, or custom cron.

  - You can also select Delay Transfer and add a delay of execution time.

  - Select Scheduling Timezone.

  - Select Create & Run Now.
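If you are unsure what the preset schedules mean in cron terms, the sketch below maps them to five-field cron expressions. The mapping follows the conventional cron nicknames. Whether the TD Console interprets the presets in exactly this way is an assumption, and the helper name `to_cron` is hypothetical.

```python
# Conventional cron nicknames and their five-field equivalents.
CRON_PRESETS = {
    "@hourly": "0 * * * *",    # minute 0 of every hour
    "@daily": "0 0 * * *",     # midnight every day
    "@monthly": "0 0 1 * *",   # midnight on the first day of each month
}


def to_cron(schedule: str) -> str:
    """Expand a preset, or pass through a five-field custom cron string."""
    if schedule in CRON_PRESETS:
        return CRON_PRESETS[schedule]
    fields = schedule.split()
    if len(fields) != 5:
        raise ValueError(f"expected a preset or 5 cron fields: {schedule!r}")
    return " ".join(fields)


daily = to_cron("@daily")
custom = to_cron("10 0 * * *")
```

The check on custom values only counts fields. It does not validate the range of each field.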

After your transfer has run, you can see the results of your transfer in Data Workbench > Databases.

For sample workflows on importing data from Zendesk, view Treasure Boxes.

Install the newest TD Toolbelt.

Prepare seed.yml as shown in the following example, with your login_url, username (email), token, and target. This example uses "append" mode:

```yaml
in:
  type: zendesk
  login_url: https://YOUR_DOMAIN_NAME.zendesk.com
  auth_method: token
  username: YOUR_EMAIL_ADDRESS
  token: YOUR_API_TOKEN
  target: tickets
  start_time: "2007-01-01 00:00:00+0000"
  enable_cursor_based_api: true
out:
  mode: append
```

You can create a token by going to Admin Home > CHANNELS > API > "add new token" (https://YOUR_DOMAIN_NAME.zendesk.com/agent/admin/api).

target specifies the type of object that you want to dump from Zendesk. The supported values are tickets, ticket_events, ticket_forms, ticket_fields, users, organizations, scores, recipients, object_records, relationship_records, and user_events.
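If you generate seed.yml from a script, it can help to reject an unsupported target before the file is written. The following Python sketch renders the same fields as the example above. The function name `render_seed` is hypothetical, values are inserted without YAML escaping, and the target list is copied from this page rather than queried from the connector.

```python
# Targets listed on this page for the command-line workflow.
SUPPORTED_TARGETS = {
    "tickets", "ticket_events", "ticket_forms", "ticket_fields", "users",
    "organizations", "scores", "recipients", "object_records",
    "relationship_records", "user_events",
}


def render_seed(login_url, username, token, target, mode="append"):
    """Return seed.yml text for token authentication."""
    if target not in SUPPORTED_TARGETS:
        raise ValueError(f"unsupported target: {target}")
    lines = [
        "in:",
        "  type: zendesk",
        f"  login_url: {login_url}",
        "  auth_method: token",
        f"  username: {username}",
        f"  token: {token}",
        f"  target: {target}",
        "out:",
        f"  mode: {mode}",
    ]
    return "\n".join(lines) + "\n"


seed_text = render_seed(
    "https://YOUR_DOMAIN_NAME.zendesk.com",
    "YOUR_EMAIL_ADDRESS",
    "YOUR_API_TOKEN",
    "tickets",
)
```

Write `seed_text` to seed.yml, and avoid committing the file to version control because it contains your API token.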

For more details on available out modes, see Appendix.

Use connector:guess. This command automatically reads the target data and guesses the data format.

```shell
$ td connector:guess seed.yml -o load.yml
```

If you open the load.yml file, you see the guessed definitions, including, in some cases, file formats, encodings, column names, and types.

in:

type: zendesk

login_url: https://YOUR_DOMAIN_NAME.zendesk.com

auth_method: token

username: YOUR_EMAIL_ADDRESS

token: YOUR_API_TOKEN

target: tickets

start_time: '2019-05-15T00:00:00+00:00'

columns:

- {name: url, type: string}

- {name: id, type: long}

- {name: external_id, type: string}

- {name: via, type: json}

- {name: created_at, type: timestamp, format: "%Y-%m-%dT%H:%M:%S%z"}

- {name: updated_at, type: timestamp, format: "%Y-%m-%dT%H:%M:%S%z"}

- {name: type, type: string}

- {name: subject, type: string}

- {name: raw_subject, type: string}

- {name: description, type: string}

- {name: priority, type: string}

- {name: status, type: string}

- {name: recipient, type: string}

- {name: requester_id, type: string}

- {name: submitter_id, type: string}

- {name: assignee_id, type: string}

- {name: organization_id, type: string}

- {name: group_id, type: string}

- {name: collaborator_ids, type: json}

- {name: follower_ids, type: json}

- {name: email_cc_ids, type: json}

- {name: forum_topic_id, type: string}

- {name: problem_id, type: string}

- {name: has_incidents, type: boolean}

- {name: is_public, type: boolean}

- {name: due_at, type: string}

- {name: tags, type: json}

- {name: custom_fields, type: json}

- {name: satisfaction_rating, type: json}

- {name: sharing_agreement_ids, type: json}

- {name: fields, type: json}

- {name: followup_ids, type: json}

- {name: ticket_form_id, type: string}

- {name: brand_id, type: string}

- {name: satisfaction_probability, type: string}

- {name: allow_channelback, type: boolean}

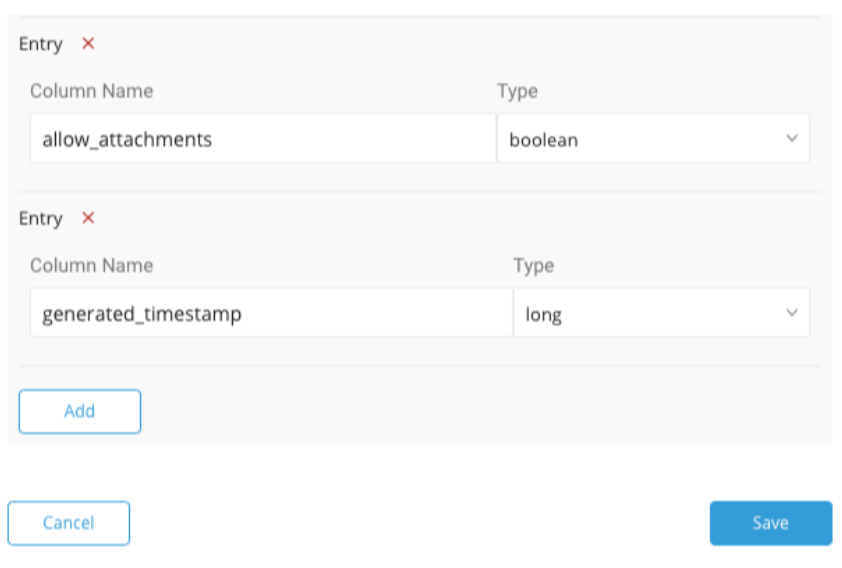

- {name: allow_attachments, type: boolean}

- {name: generated_timestamp, type: long}

out:

{mode: append}

exec: {}

filters:

type: add_time

from_value:

{mode: upload_time}

to_column: {name: time} Then you can preview how the system parses the file by using preview command.

```shell
$ td connector:preview load.yml
```

If the system detects a column name or type incorrectly, modify load.yml directly and preview again.

The Data Connector supports parsing of "boolean", "long", "double", "string", and "timestamp" types.

Submit the load job. It may take a couple of hours, depending on the data size. You need to specify the database and table where the data will be stored.

```shell
$ td connector:issue load.yml --database td_sample_db --table td_sample_table
```

The preceding command assumes that you have already created the database (td_sample_db) and table (td_sample_table). If the database or the table does not exist in TD, this command does not succeed. Either create the database and table manually, or use the --auto-create-table option with the td connector:issue command to create them automatically:

```shell
$ td connector:issue load.yml --database td_sample_db --table td_sample_table --time-column created_at --auto-create-table
```

You can assign a time-format column as the "Partitioning Key" with the --time-column option.
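When the load job is launched from a script, the command line can be assembled as an argument list so that the optional flags are added only when needed. This Python sketch uses only the options shown above. The function name `issue_command` is hypothetical, and the sketch builds the command without running it.

```python
import shlex


def issue_command(config, database, table,
                  time_column=None, auto_create_table=False):
    """Build the argument list for `td connector:issue`."""
    args = ["td", "connector:issue", config,
            "--database", database, "--table", table]
    if time_column:
        # Use this column as the partitioning time.
        args += ["--time-column", time_column]
    if auto_create_table:
        # Create the database and table if they do not exist yet.
        args.append("--auto-create-table")
    return args


args = issue_command("load.yml", "td_sample_db", "td_sample_table",
                     time_column="created_at", auto_create_table=True)
command_line = shlex.join(args)  # printable form, requires Python 3.8+
```

On a machine with TD Toolbelt installed, the list can be passed to `subprocess.run(args, check=True)` to submit the job.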

You can load records incrementally from Zendesk by using the incremental flag. If it is false, the start_time and end_time in next.yml are not updated, and the connector always fetches all the data from Zendesk with static conditions. If it is true, the start_time and end_time in next.yml are updated. The default is true.

```yaml
in:
  type: zendesk
  login_url: https://YOUR_DOMAIN_NAME.zendesk.com
  auth_method: token
  username: YOUR_EMAIL_ADDRESS
  token: YOUR_API_TOKEN
  target: tickets
  start_time: "2007-01-01 00:00:00+0000"
  end_time: "2008-01-01 00:00:00+0000"
  incremental: true
out:
  mode: append
```

Zendesk supports a pagination endpoint that enables you to fetch more than 100,000 records. This endpoint applies only to the users and tickets targets. For details, see Introducing Pagination Changes - Zendesk API.

To use the new endpoint, enable the Enable cursor-based API option (enable_cursor_based_api: true in the configuration file).
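For readers unfamiliar with cursor-based pagination, the following Python sketch shows the general loop: each page returns a cursor that is passed back to request the next page, until the server signals the end of the stream. It does not call Zendesk. The `fake_fetch` stub and the `records` key are hypothetical, and the `after_cursor` and `end_of_stream` field names are assumed to match the Zendesk response, so check them against the Zendesk API reference.

```python
def fetch_all(fetch_page):
    """Collect records from every page by following the cursor."""
    records = []
    cursor = None  # no cursor on the first request
    while True:
        page = fetch_page(cursor)
        records.extend(page["records"])
        if page["end_of_stream"]:
            return records
        cursor = page["after_cursor"]


# Hypothetical in-memory pages standing in for API responses.
PAGES = {
    None: {"records": [1, 2], "after_cursor": "c1", "end_of_stream": False},
    "c1": {"records": [3, 4], "after_cursor": "c2", "end_of_stream": False},
    "c2": {"records": [5], "after_cursor": "c3", "end_of_stream": True},
}


def fake_fetch(cursor):
    """Return the stub page for a cursor instead of making an HTTP call."""
    return PAGES[cursor]


all_records = fetch_all(fake_fetch)
```

Because the position is carried by the cursor rather than by an offset, the loop is not bounded by a fixed maximum number of records.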