This plugin is recommended for large data sets.

For small data sets, you can instead use the Salesforce Marketing Cloud (ExactTarget) data connector to write job results.

Treasure Data can publish user segments to Salesforce Marketing Cloud (ExactTarget), enabling you to send personalized emails to your customers. You can run data-driven email campaigns using your first-party data from web, mobile, CRM, and other data sources.

This feature is in beta. For more information, contact your Customer Success Representative.

- Basic knowledge of Treasure Data

- Basic knowledge of Salesforce Marketing Cloud

- TD account

OpenSSH 7.8 private keys are supported. The key format is detected automatically and the appropriate library is selected.
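The format detection mentioned above can be illustrated with a short Python sketch. This is not Treasure Data's implementation: it assumes the key is a PEM-style text file, identifies the encoding from its `BEGIN` line, and, for OpenSSH-format keys, reads the key type from the unencrypted public key blob following the layout described in OpenSSH's `PROTOCOL.key`. The names `detect_key` and `is_supported` are hypothetical; `is_supported` applies the ed25519 restriction stated for the Secret key file parameter.

```python
import base64
import struct
from typing import Optional, Tuple

# BEGIN lines of common private key encodings, mapped to hypothetical labels.
KEY_HEADERS = {
    "-----BEGIN OPENSSH PRIVATE KEY-----": "openssh",
    "-----BEGIN RSA PRIVATE KEY-----": "pem-rsa",
    "-----BEGIN EC PRIVATE KEY-----": "pem-ec",
    "-----BEGIN PRIVATE KEY-----": "pkcs8",
    "-----BEGIN ENCRYPTED PRIVATE KEY-----": "pkcs8-encrypted",
}
OPENSSH_MAGIC = b"openssh-key-v1\x00"


def _read_string(data: bytes, offset: int) -> Tuple[bytes, int]:
    """Read an SSH wire-format string: uint32 big-endian length, then bytes."""
    (length,) = struct.unpack_from(">I", data, offset)
    start = offset + 4
    return data[start:start + length], start + length


def detect_key(text: str) -> Tuple[str, Optional[str]]:
    """Return (encoding, key_type); key_type is decoded only for OpenSSH keys."""
    lines = [line.strip() for line in text.strip().splitlines()]
    encoding = KEY_HEADERS.get(lines[0])
    if encoding is None:
        raise ValueError("unrecognized private key header")
    if encoding != "openssh":
        return encoding, None
    data = base64.b64decode("".join(lines[1:-1]))  # body between BEGIN/END lines
    if not data.startswith(OPENSSH_MAGIC):
        raise ValueError("missing openssh-key-v1 magic")
    offset = len(OPENSSH_MAGIC)
    for _ in range(3):  # skip ciphername, kdfname, kdfoptions
        _, offset = _read_string(data, offset)
    offset += 4  # skip the uint32 key count
    public_blob, _ = _read_string(data, offset)
    key_type, _ = _read_string(public_blob, 0)  # e.g. b"ssh-ed25519"
    return encoding, key_type.decode("ascii")


def is_supported(key_type: Optional[str]) -> bool:
    """Reject ed25519 keys, per the Secret key file parameter description."""
    return key_type != "ssh-ed25519"
```

Because the public key blob in an OpenSSH key is stored unencrypted, this check works even for passphrase-protected keys.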

Sign in to your Salesforce Marketing Cloud account to begin setup.

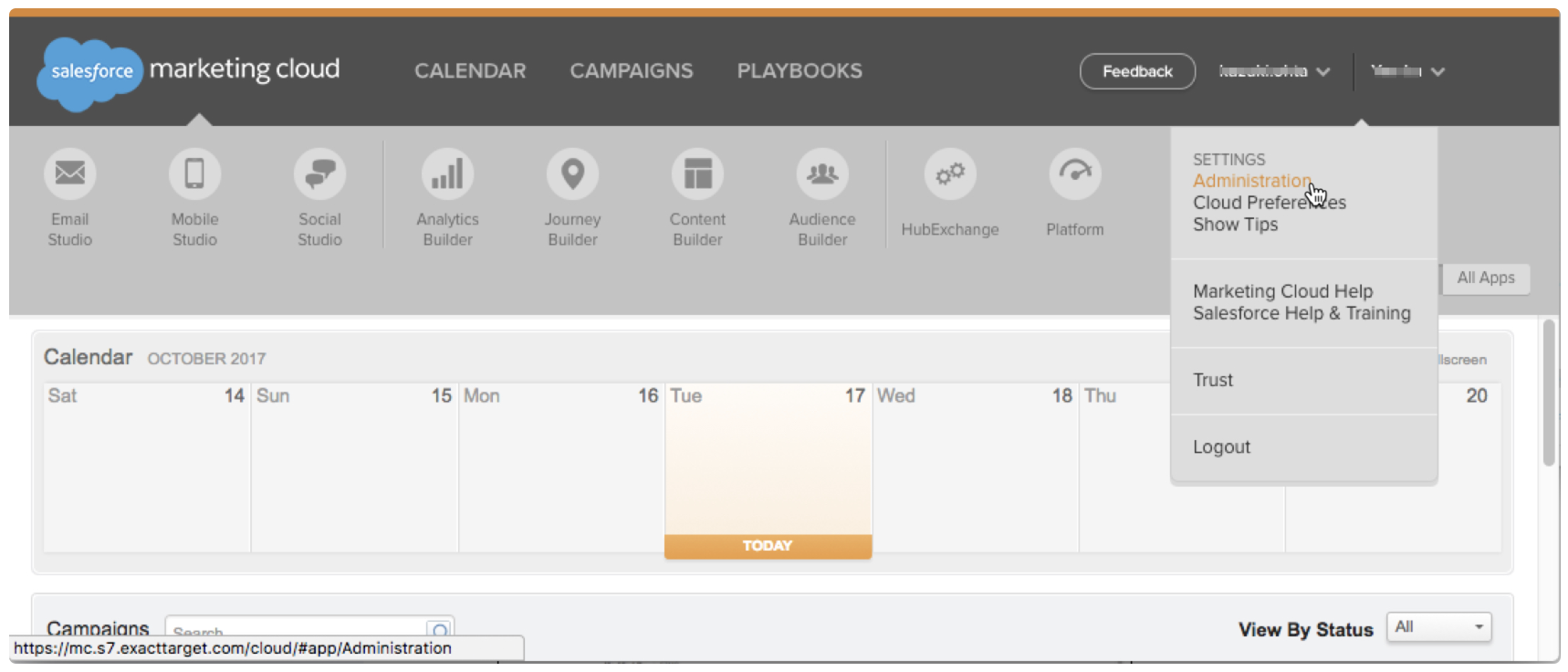

On the SFMC dashboard, in your account, select Administration.

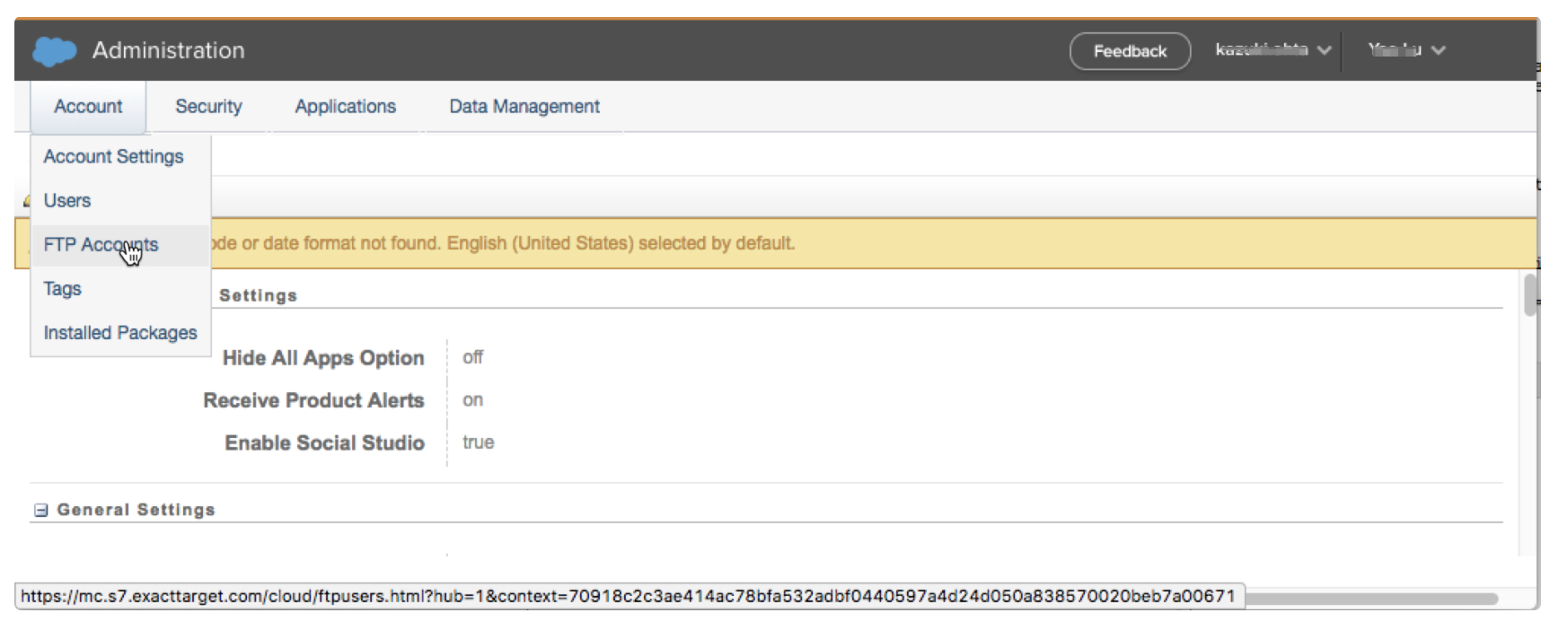

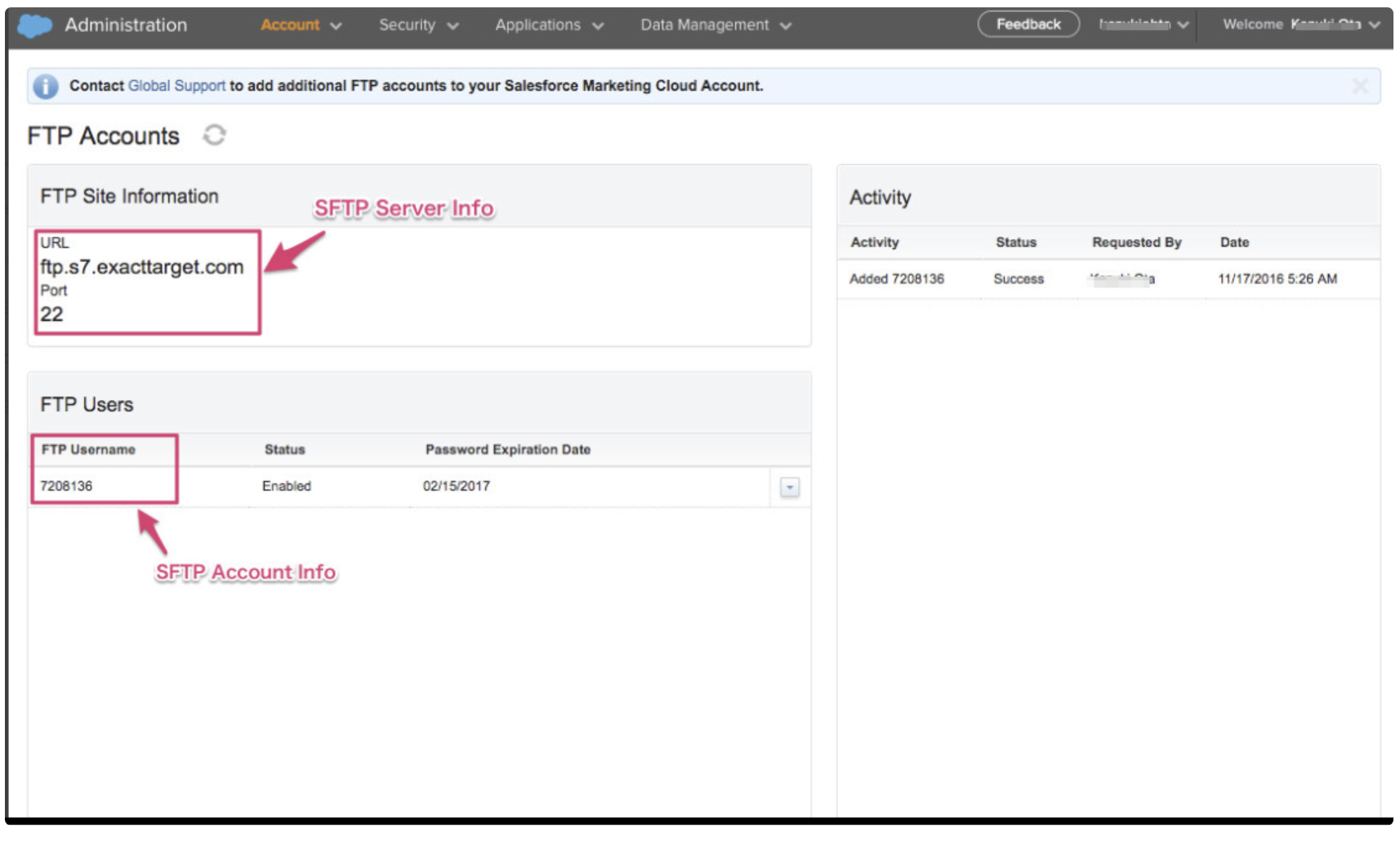

From the Account drop-down menu, select FTP Accounts. This allows you to establish an SFTP account.

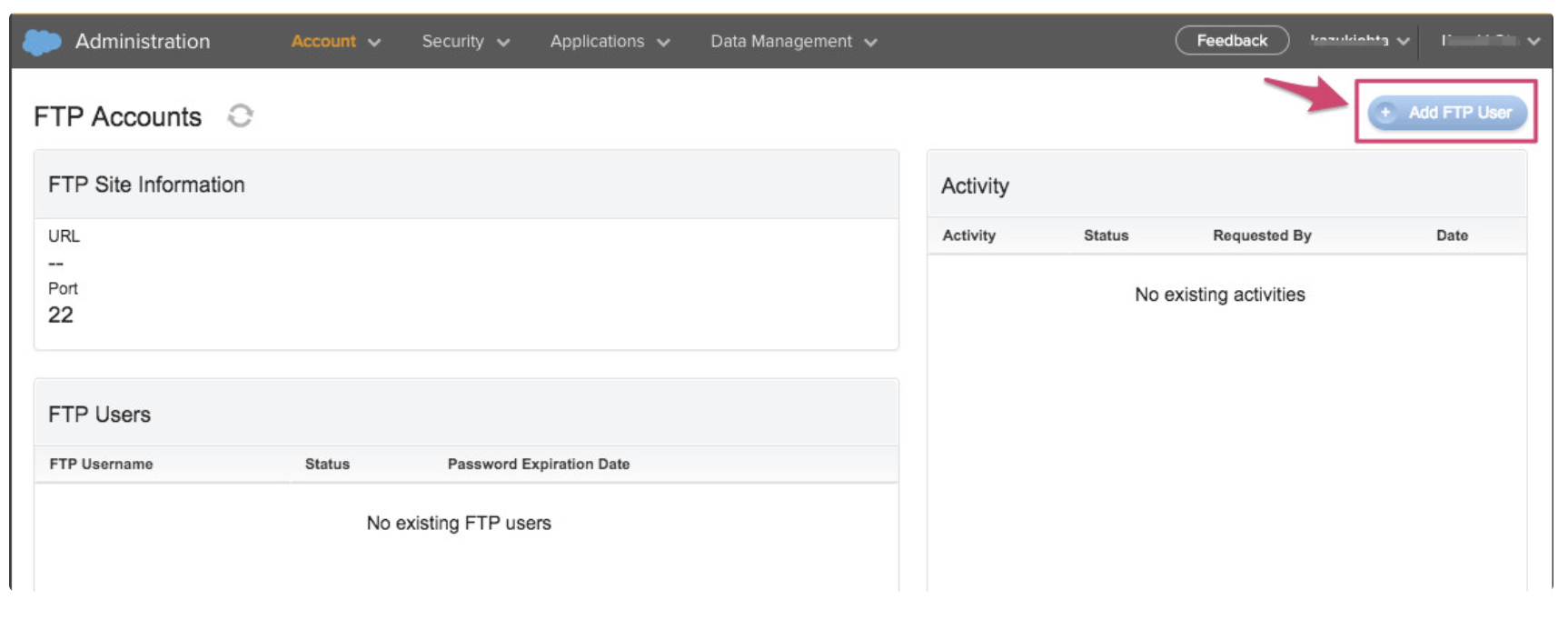

In the FTP Accounts panel, select Add FTP User.

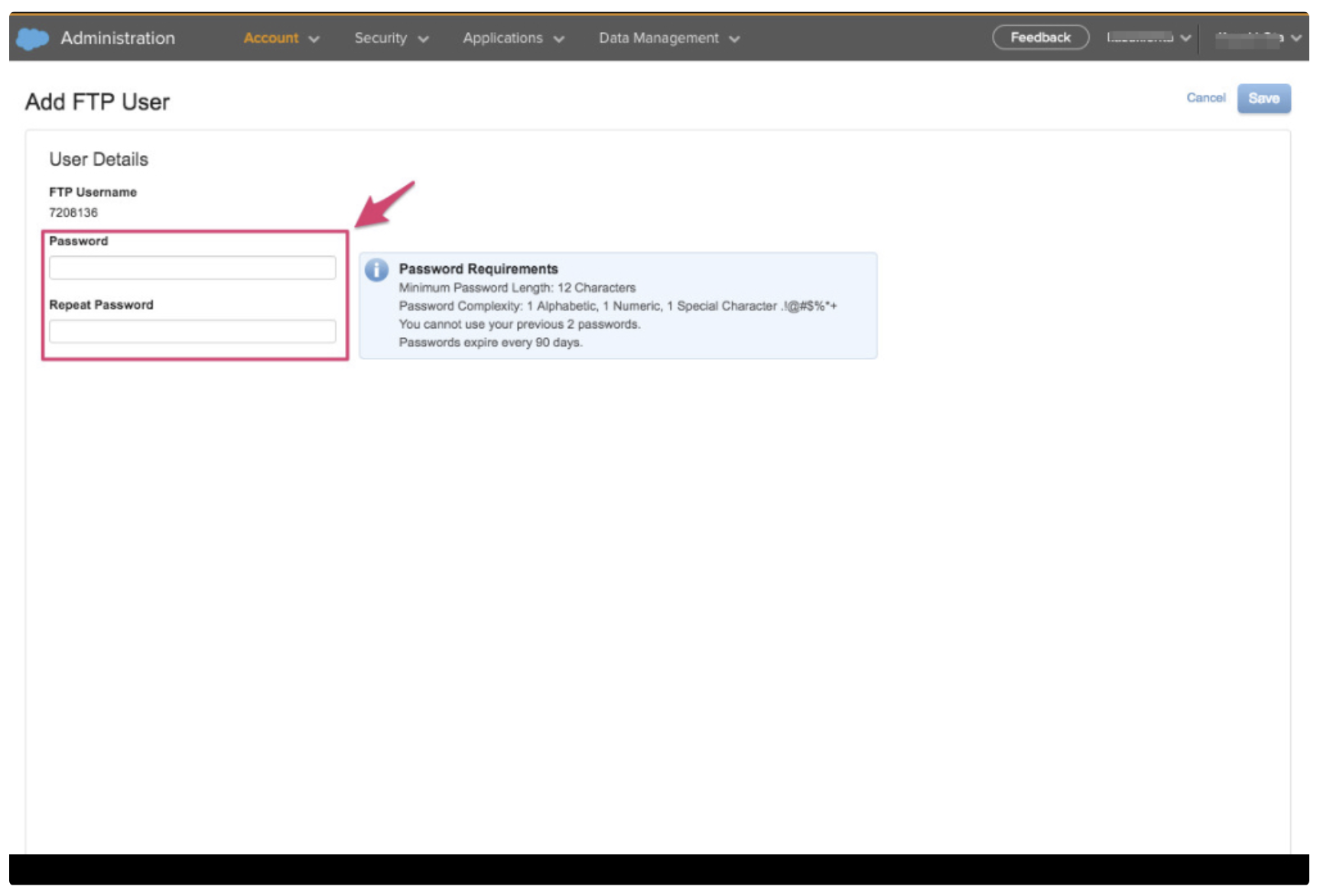

Provide an FTP account password.

Review your SFTP account information.

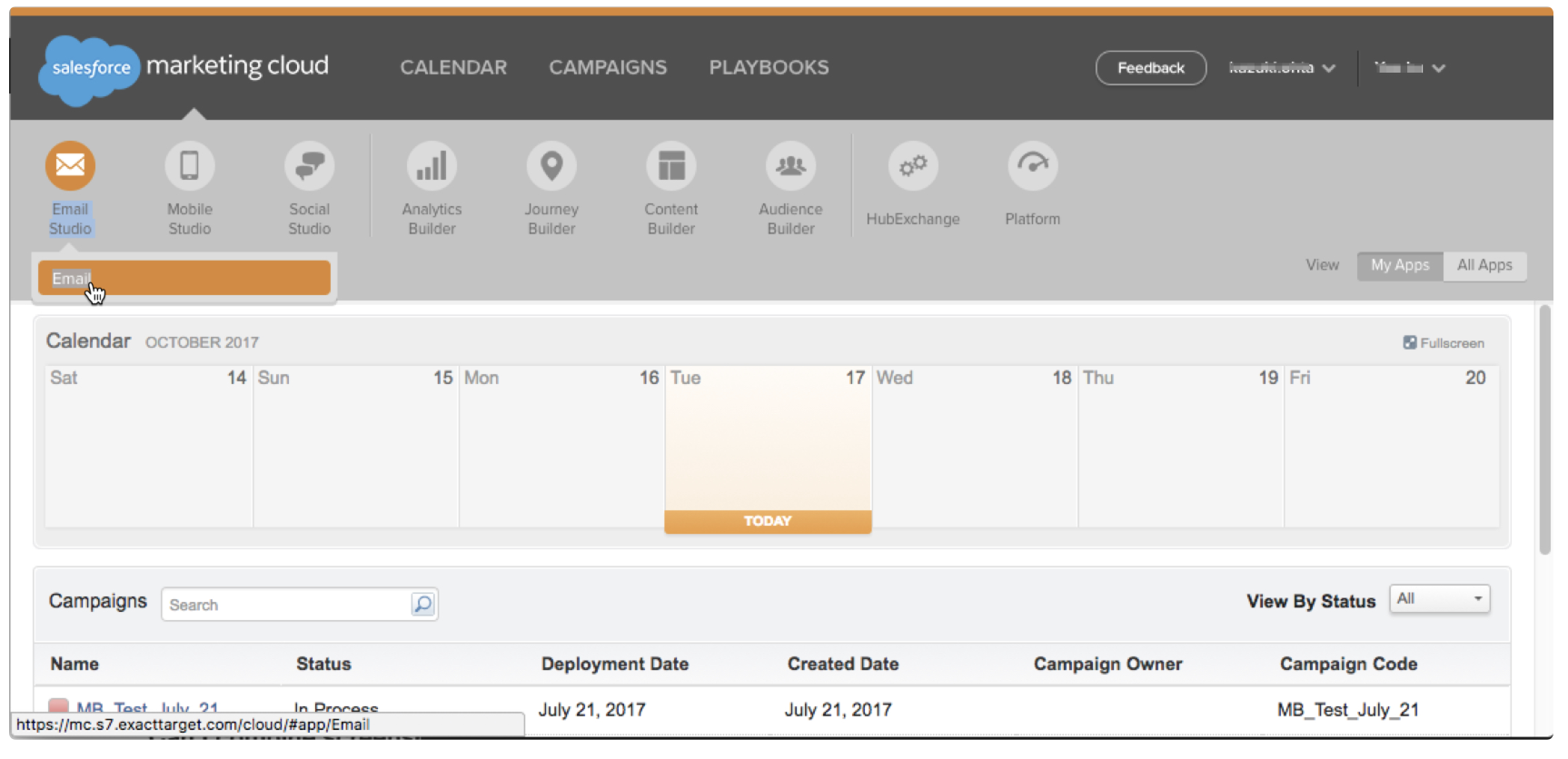

Go back to the SFMC dashboard, and select Email Studio > Email.

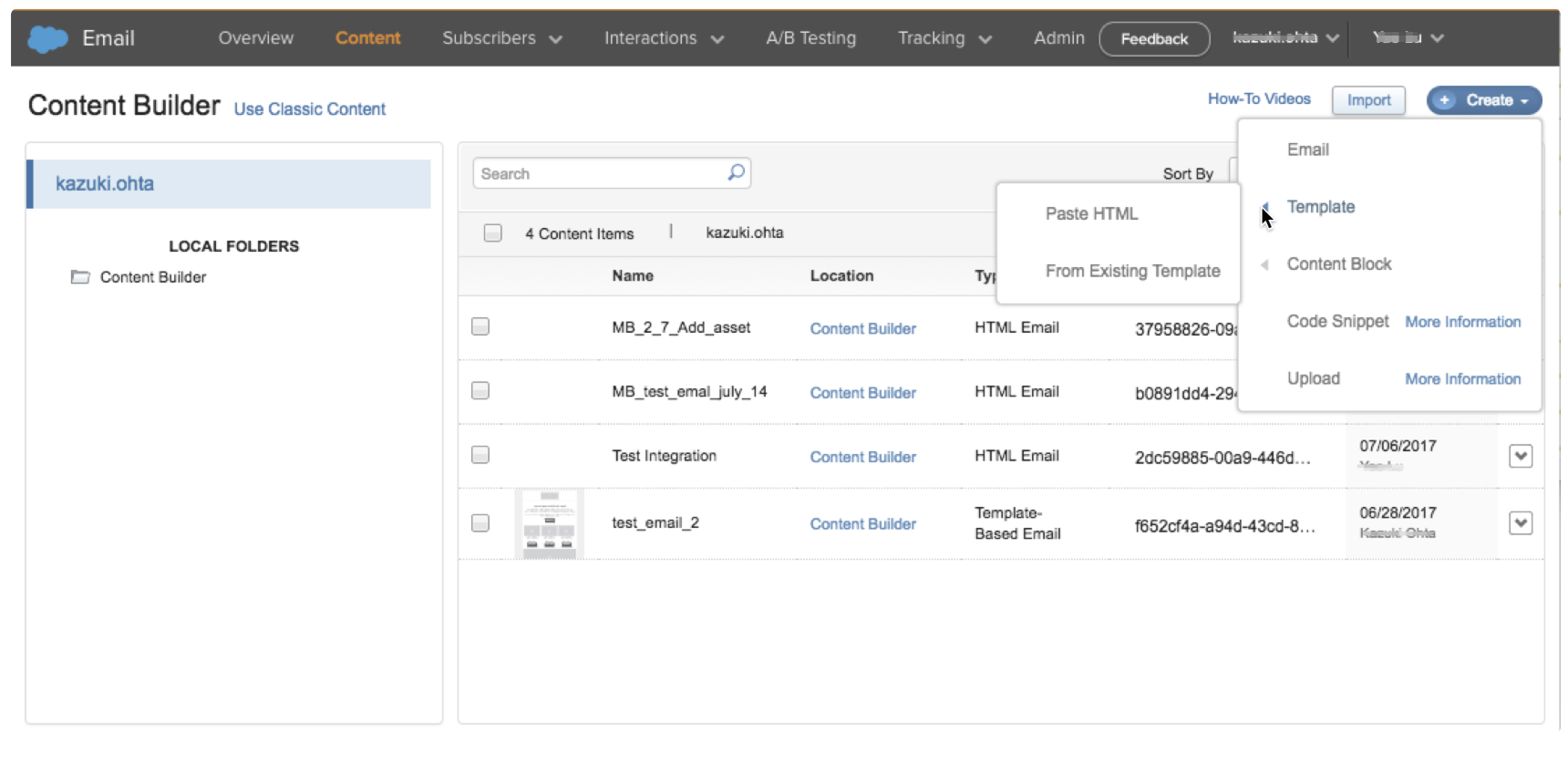

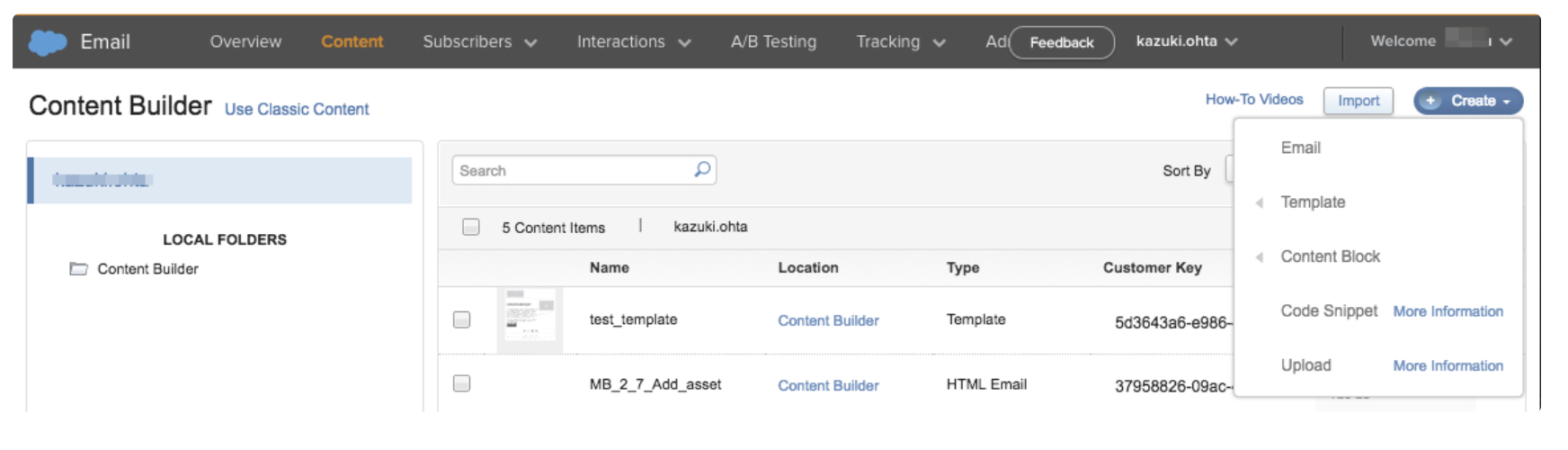

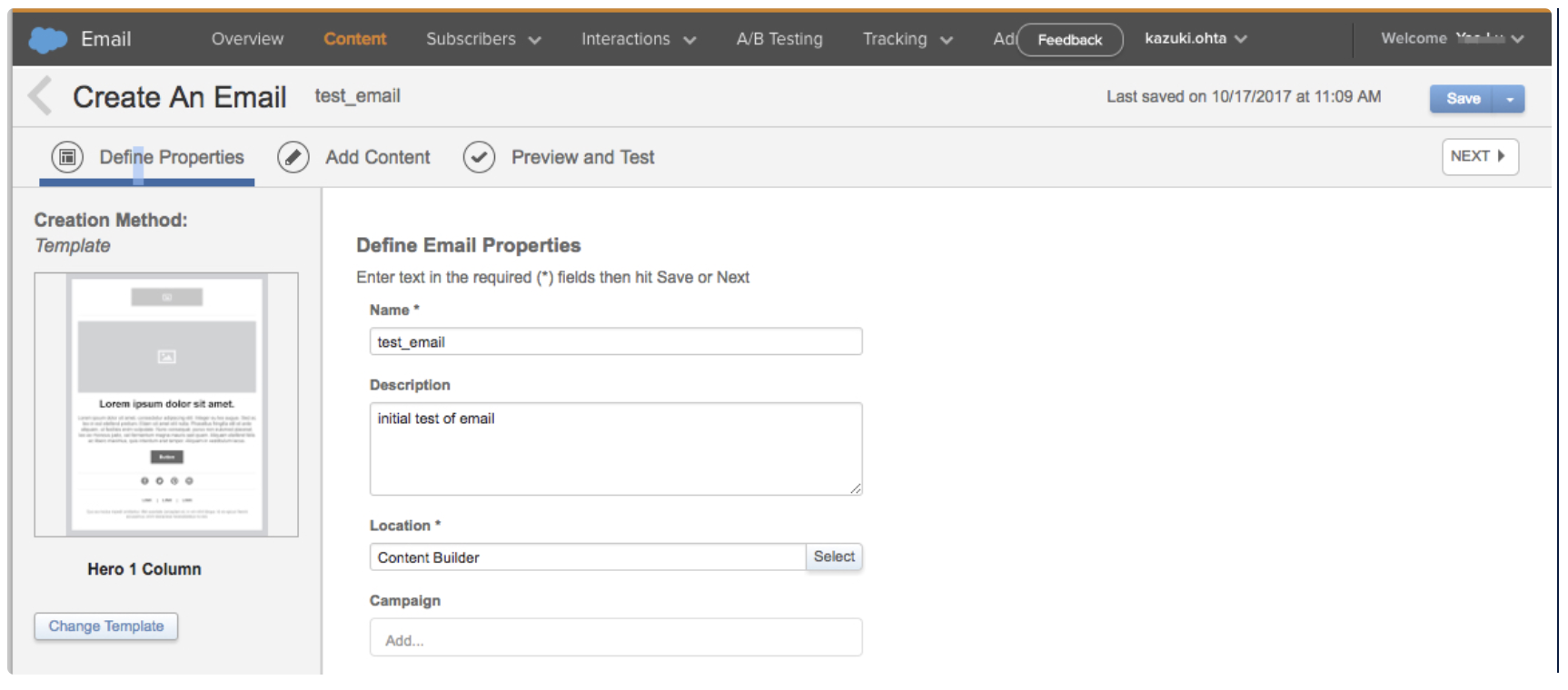

Select Content > Create > Template > From Existing Template to create an email template.

After creating the template, select Save > Save and Exit, then provide a template name and location and save them.

Remaining on the Email page, select Create > Email to create email content (for example, for a campaign) from a template.

Select the template, define the email properties, including name and location, and select Next to provide content. Continue creating the email and save it when you are finished.

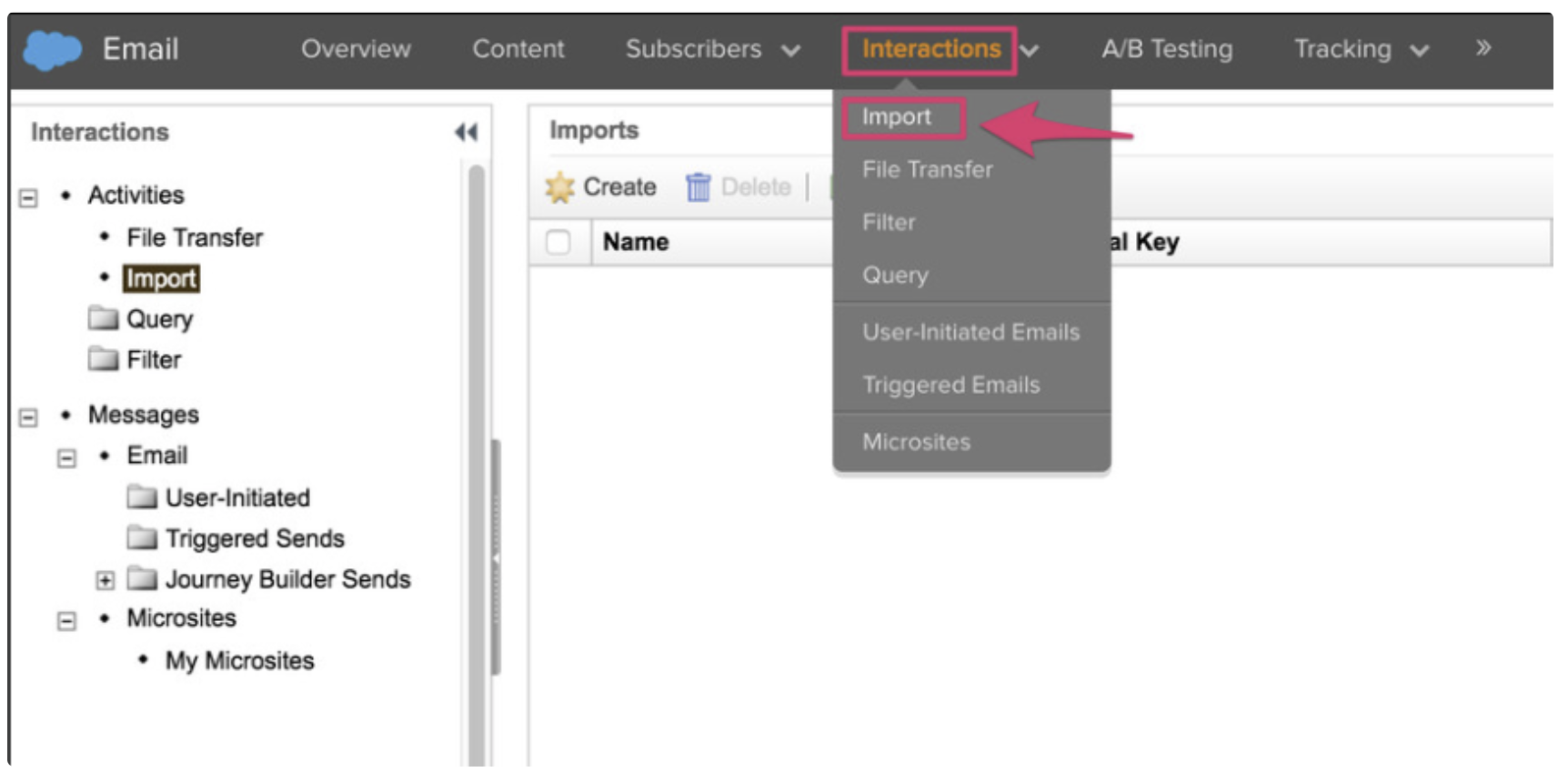

From the Email view, select Interactions.

Select Import.

Select Create to make a new import interaction definition.

Provide the import interaction information, including SFTP information and data import location. Save the information.

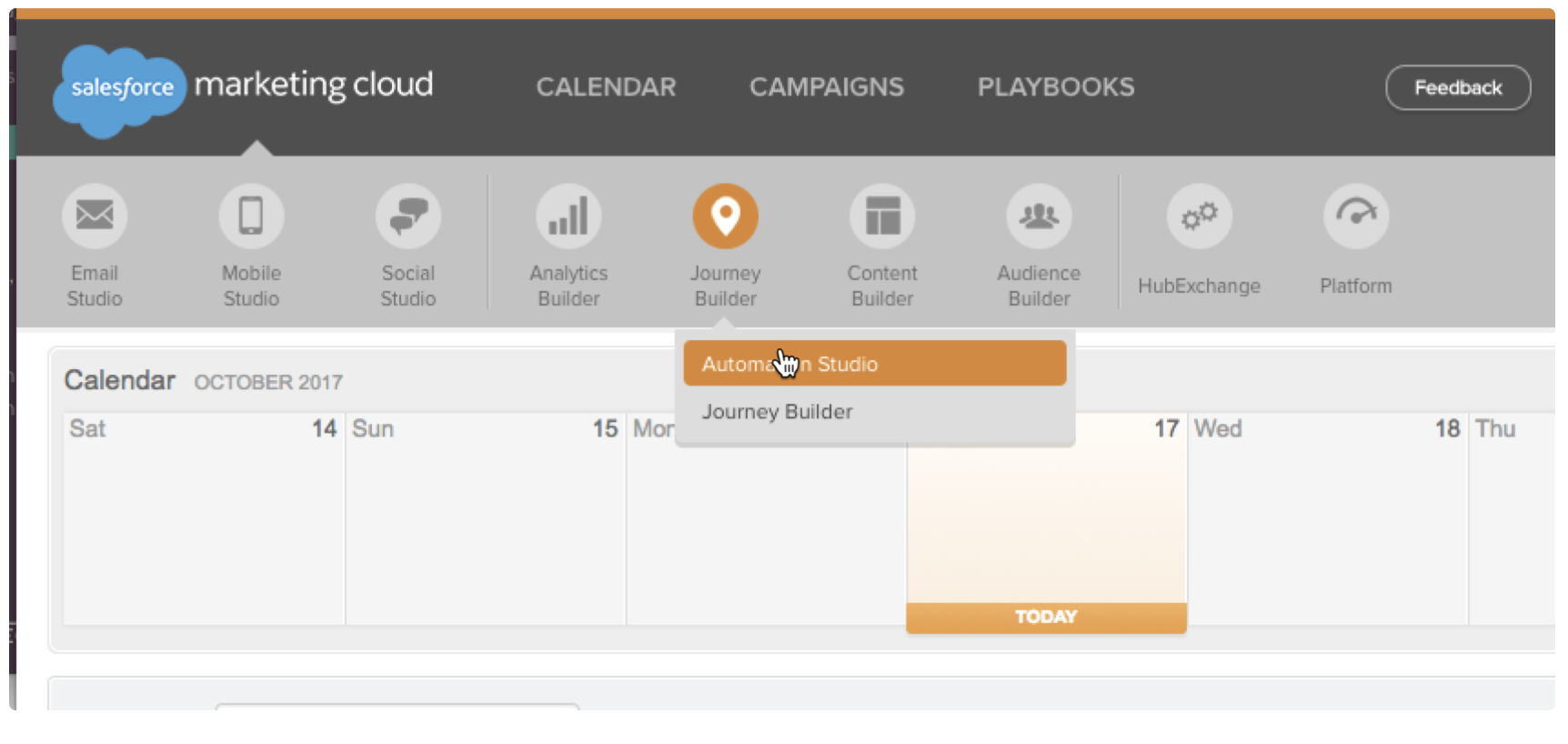

From the Email view, select the SFDC blue cloud icon to view menu options.

Select Journey Builder > Automation Studio.

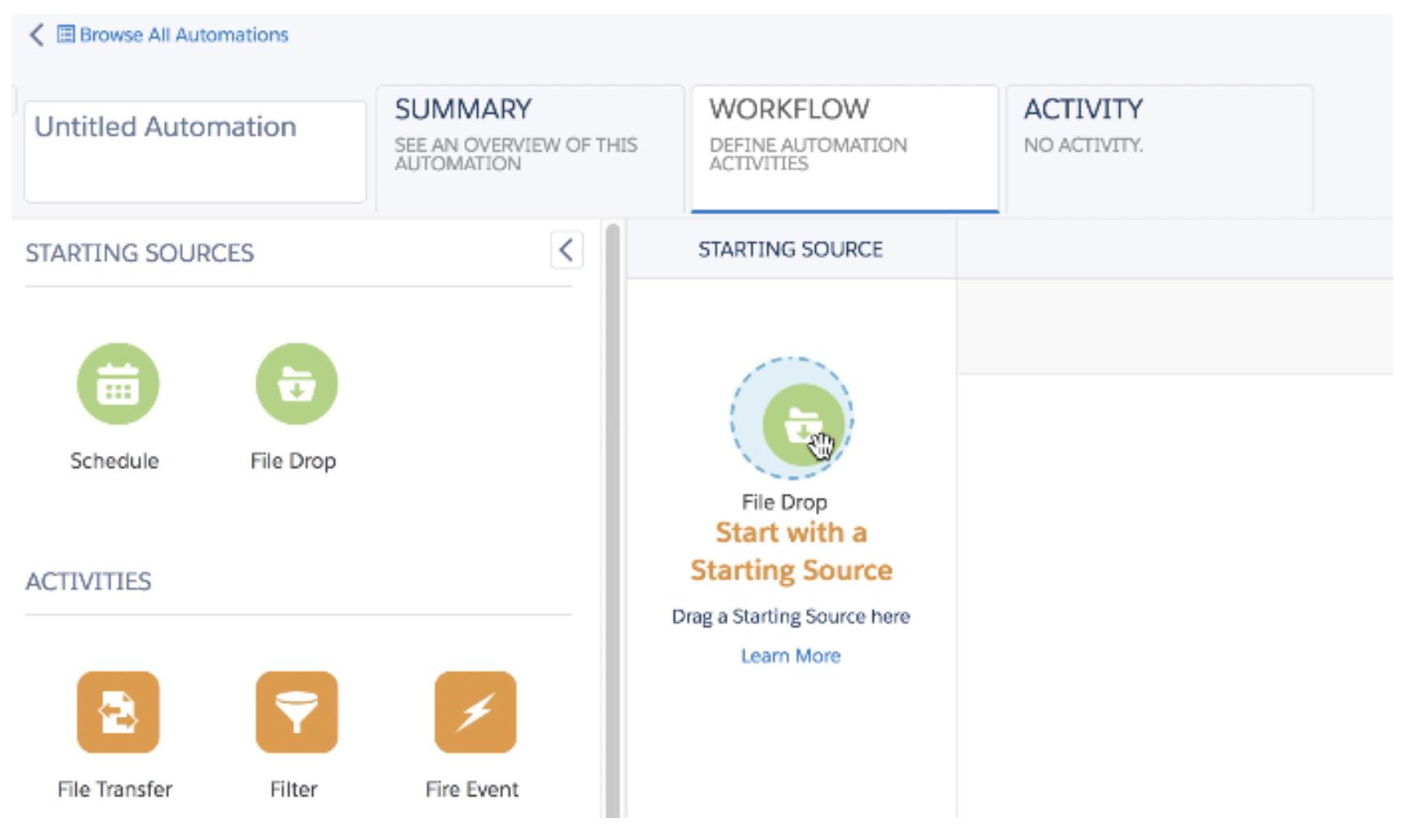

Select New Automation.

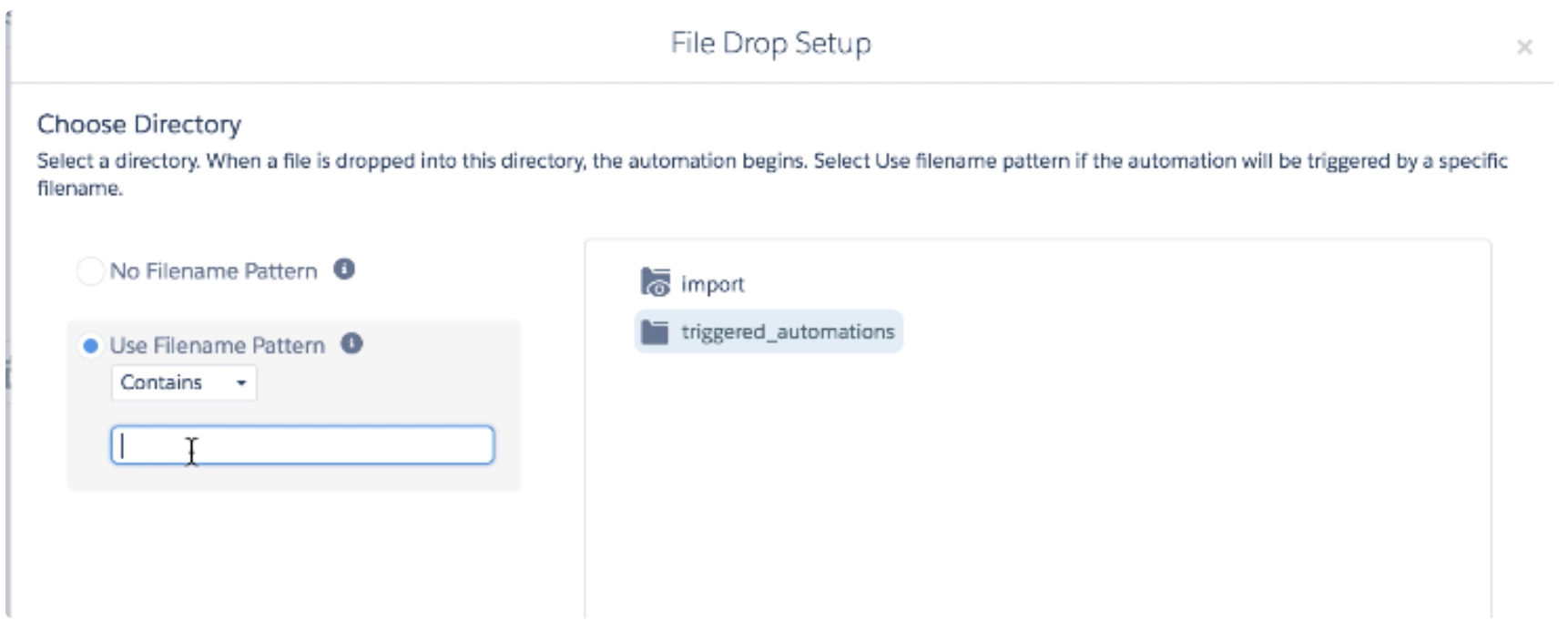

Drag the File Drop icon to Starting Source.

Select Configure > Trigger Automation.

Specify Use Filename Pattern and then select Done.

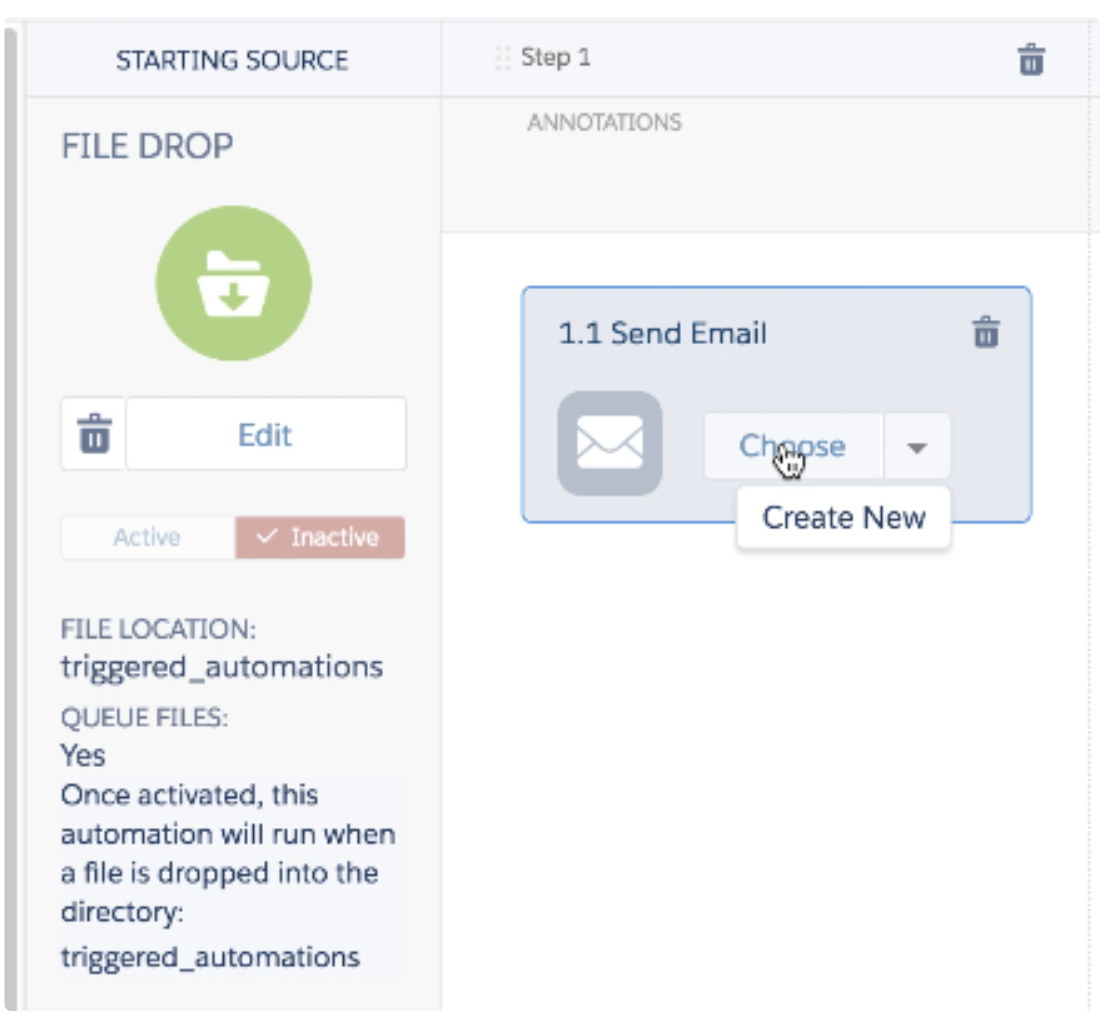

Drag the Send Email icon to the canvas and select Create New.

Select an email object, for example, the one you created in this section. Select Next. Select an email target list. Select Next. Verify the email configuration information, and select Finish.

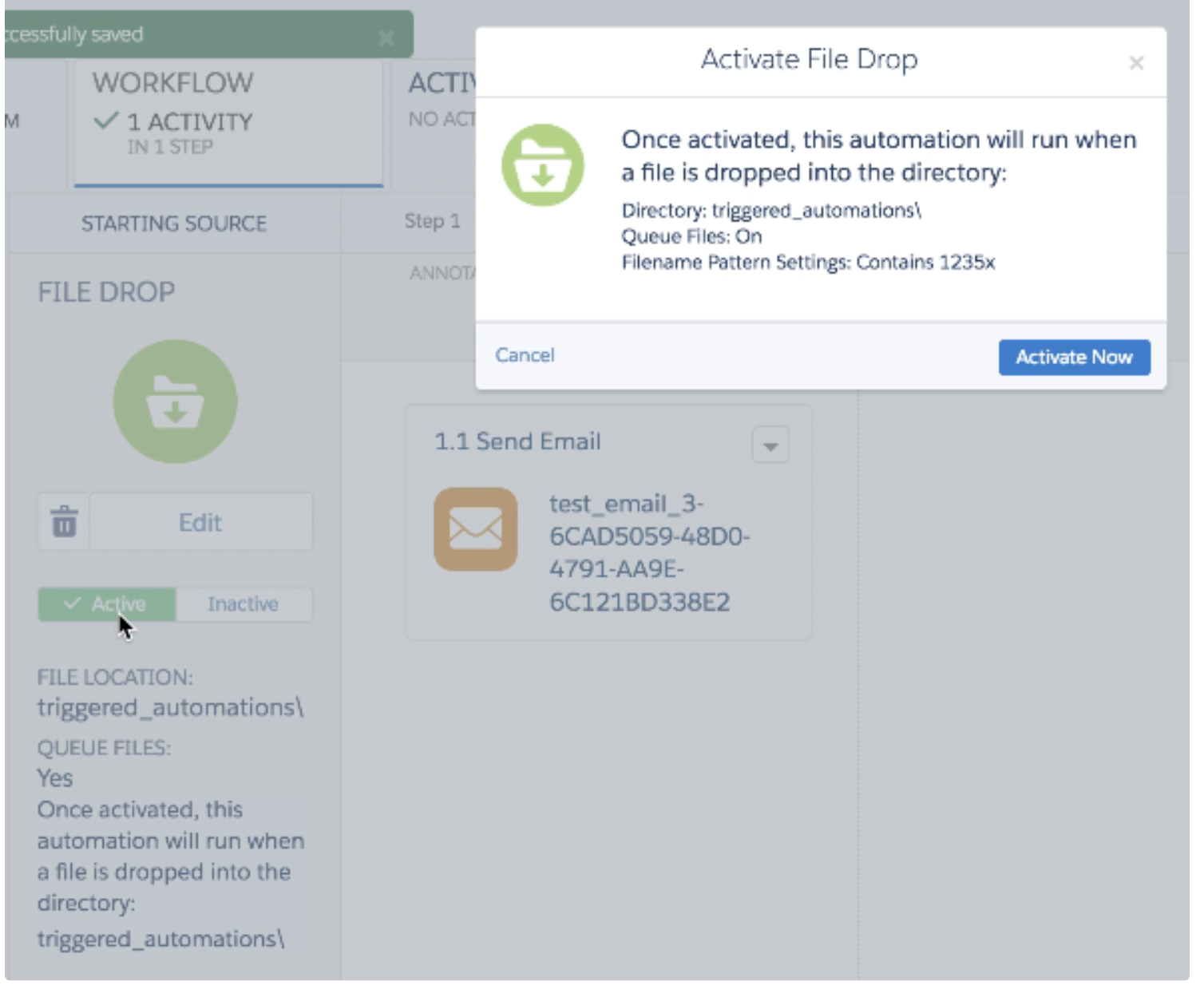

Provide a name for the import trigger and an external key that is referred to by Treasure Data, and select Save.

Select Active to enable the import trigger.

Select Save and Close.

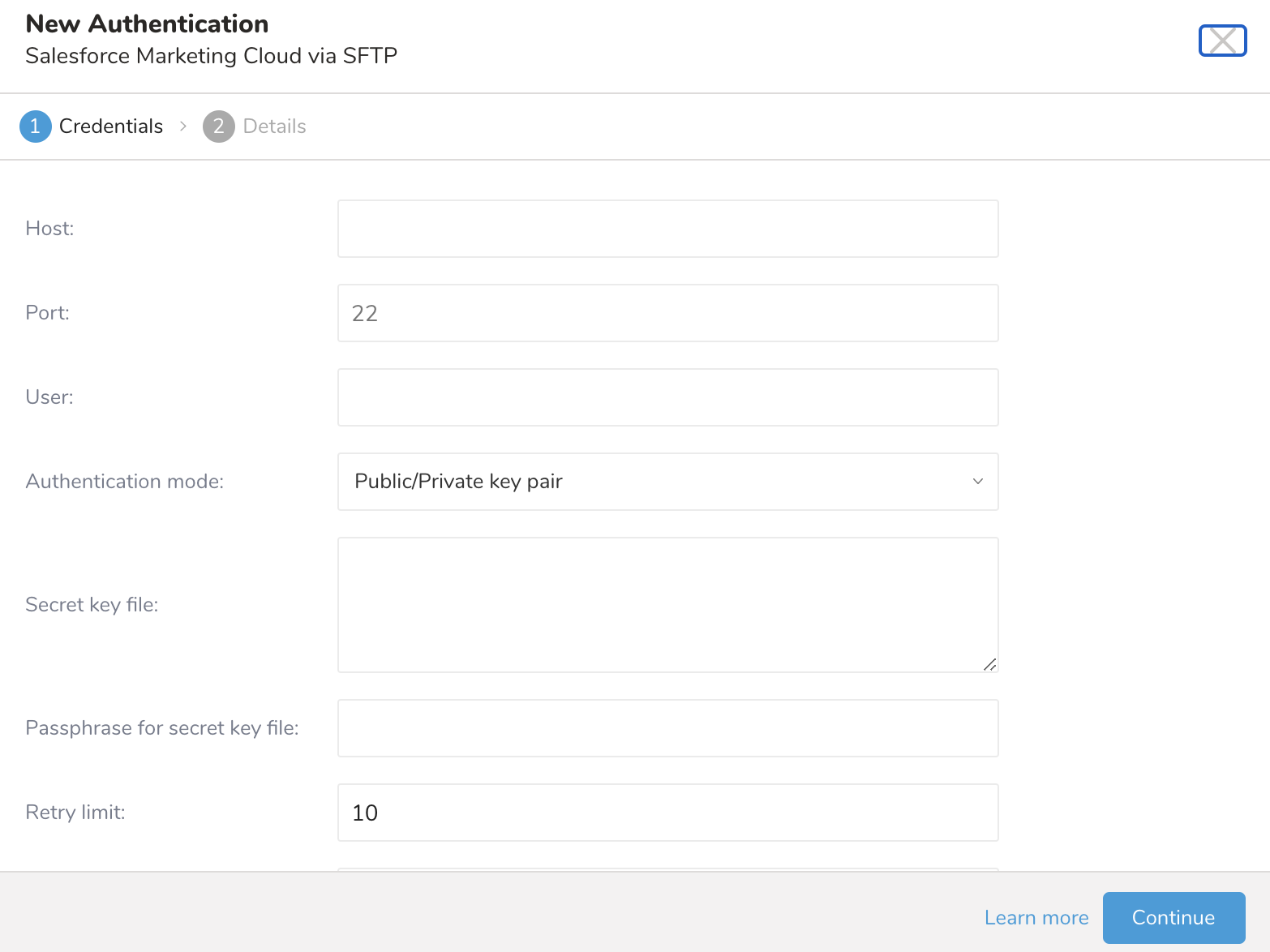

In Treasure Data, you must create and configure the data connection prior to running your query. As part of the data connection, you provide authentication to access the integration.

Enter the required credentials for your remote SFTP instance and name the connection. If you would like to share this connection with other users in your organization, check the Share with others checkbox. If this box is unchecked, the connection is visible only to you.

Open TD Console.

Navigate to Integrations Hub > Catalog.

Search for and select Salesforce Marketing Cloud via SFTP.

Select Create Credentials.

Type the credentials to authenticate.

Type or select values for the following parameters:

| Parameter | Description |
|---|---|
| Host | The host information of the remote SFTP instance, for example, an IP address. |
| Port | The connection port on the remote SFTP instance. The default is 22. |
| User | The user name used to connect to the remote SFTP instance. |
| Authentication mode | The way you authenticate with your SFTP server (for example, 'public / private key pair'). |
| Secret key file | Required if 'public / private key pair' is selected as the Authentication mode. The ed25519 key type is not supported; the ecdsa key type is supported. |
| Passphrase for secret key file | (Optional) If required, provide the passphrase for the secret key file. |
| Retry limit | Number of times to retry a failed connection (default: 10). |
| Timeout | Connection timeout, in seconds. |
| Use proxy? | If selected, enter the proxy server details: Type, Host, Port, User, Password, and Command. |
| Sequence format | Format for the sequence part of output file names (string, default: ".%03d.%02d"). |
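To see how these parameters fit together, they can be modeled as a small Python data class. This is a hypothetical sketch, not a Treasure Data API: the class and field names and the `auth_mode` values (`"password"`, `"key_pair"`) are assumptions, while the defaults (port 22, retry limit 10, sequence format `.%03d.%02d`) come from the table above. The `file_name` method assumes output files are named as path prefix + formatted sequence + extension, an assumption modeled on Embulk-style file outputs; check the actual names on your SFTP server.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SftpConnection:
    """Hypothetical model of the connection parameters in the table above."""

    host: str
    user: str
    port: int = 22                         # documented default
    auth_mode: str = "password"            # assumed values: "password" or "key_pair"
    secret_key_file: Optional[str] = None
    passphrase: Optional[str] = None       # only needed for an encrypted key file
    retry_limit: int = 10                  # documented default
    timeout_seconds: Optional[int] = None  # no default is documented here
    sequence_format: str = ".%03d.%02d"    # documented default

    def validate(self) -> None:
        if self.auth_mode == "key_pair" and not self.secret_key_file:
            raise ValueError("a secret key file is required for key pair authentication")

    def file_name(self, path_prefix: str, task_index: int, file_index: int,
                  extension: str = ".csv") -> str:
        # Assumed naming: prefix + sequence (task and file indexes) + extension.
        return path_prefix + self.sequence_format % (task_index, file_index) + extension


conn = SftpConnection(host="203.0.113.10", user="td_export")  # hypothetical values
conn.validate()
print(conn.file_name("/Import/td_segment", 0, 0))  # /Import/td_segment.000.00.csv
```

If you configure a filename pattern for the File Drop trigger in Automation Studio, it needs to match names of this shape.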

Select Continue.

Type a name for your connection.

Select Done.

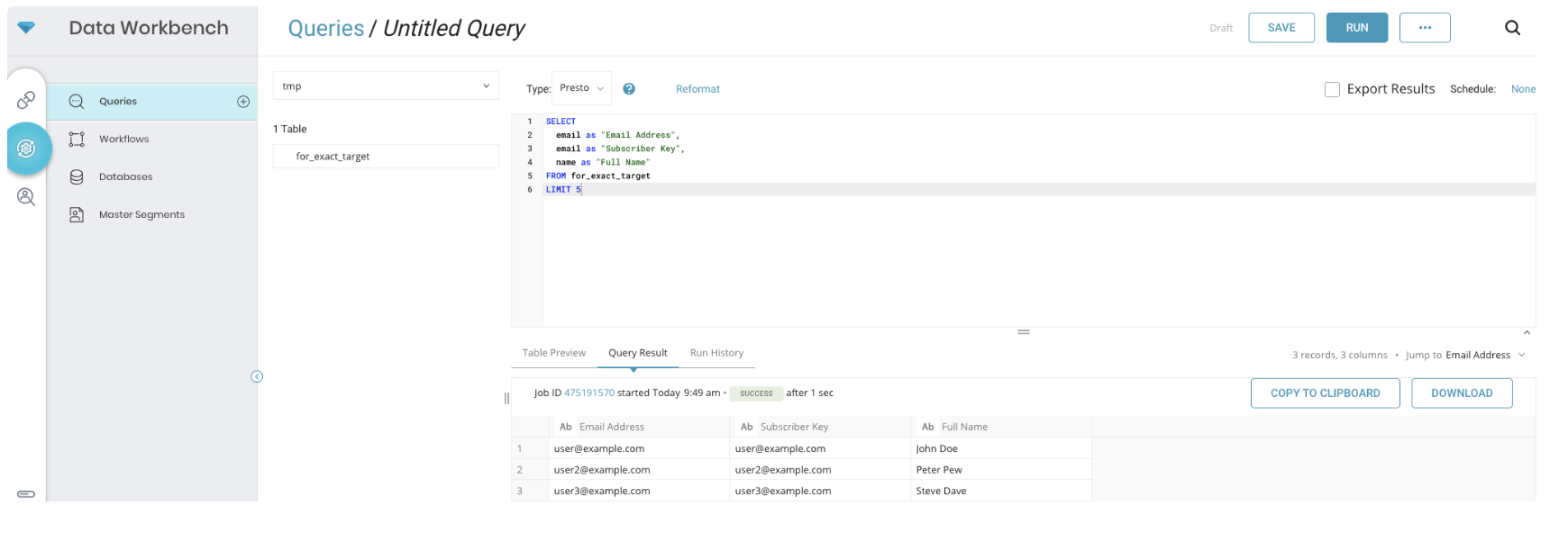

Create a job that selects data from Treasure Data. The column names in your query result must match the column names expected by the SFMC (ExactTarget) import, and the "Email Address" and "Subscriber Key" columns are required. If needed, you can change the column names in the TD database from the TD Console.
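Before exporting, you can check that the query result exposes the two required columns. The sketch below is a hypothetical local check, not part of Treasure Data: `result_columns` stands for the column names of your query result, and `renames` stands for any renaming you apply (for example, in the TD Console). It compares names exactly and case-sensitively, which may be stricter than SFMC.

```python
from typing import Dict, Iterable, List, Optional

# Columns this guide lists as required by the SFMC import.
REQUIRED_COLUMNS = ("Email Address", "Subscriber Key")


def missing_sfmc_columns(result_columns: Iterable[str],
                         renames: Optional[Dict[str, str]] = None) -> List[str]:
    """Return the required columns that the export would not contain.

    renames maps a TD column name to the name it is exported under.
    """
    renames = renames or {}
    exported = {renames.get(name, name) for name in result_columns}
    return [name for name in REQUIRED_COLUMNS if name not in exported]


print(missing_sfmc_columns(["email", "subscriber_key", "first_name"]))
# ['Email Address', 'Subscriber Key']
print(missing_sfmc_columns(["email", "subscriber_key"],
                           {"email": "Email Address", "subscriber_key": "Subscriber Key"}))
# []
```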

- Complete the instructions in Creating a Destination Integration.

- Navigate to Data Workbench > Queries.

- Select a query for which you would like to export data.

- Run the query to validate the result set.

- Select Export Results.

- Select an existing integration authentication.

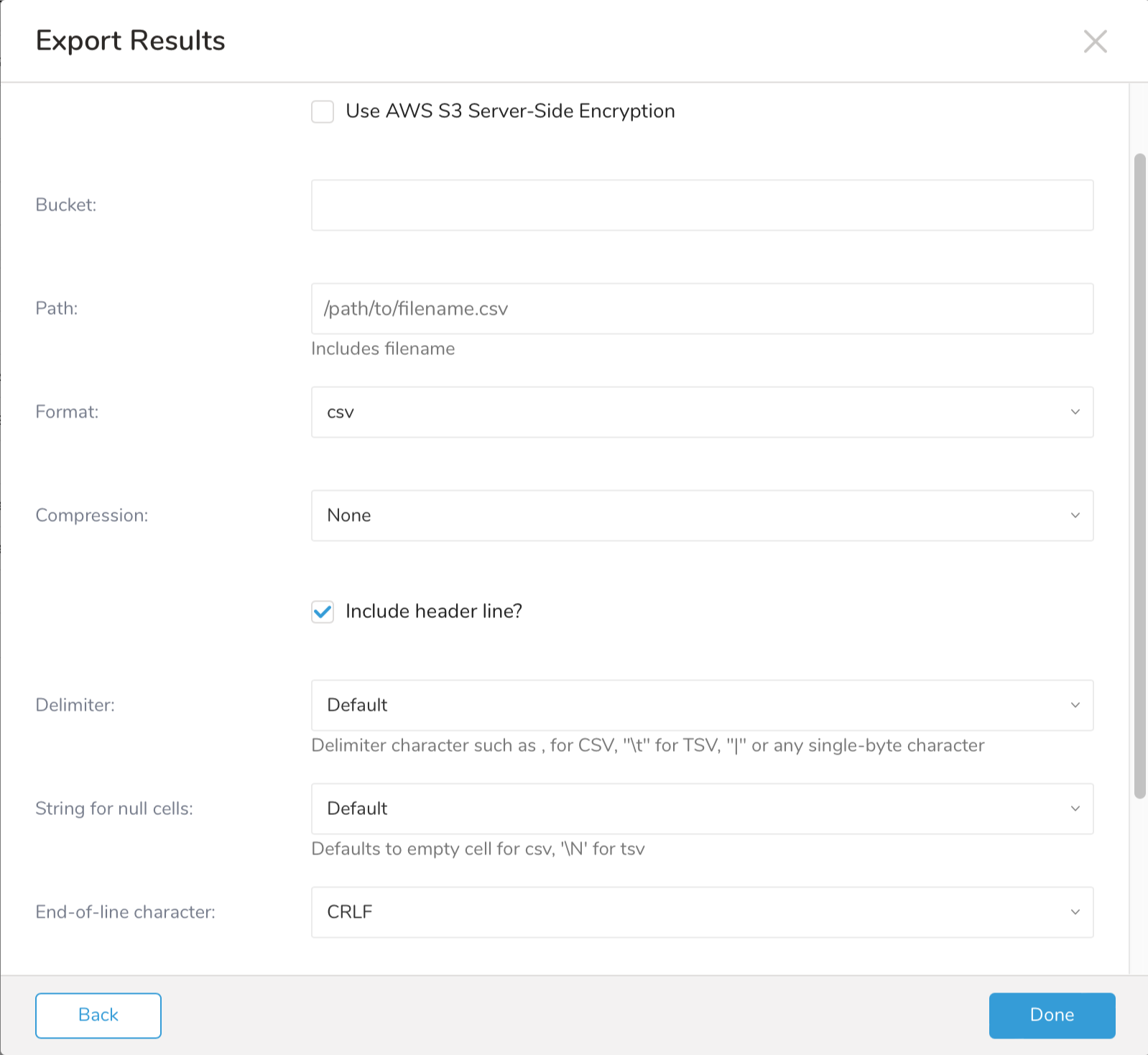

- Define any additional Export Results details. Review the integration parameters in your export integration content. Your Export Results screen might differ from this example, or you might not have additional details to fill out.

- Select Done.

- Run your query.

- Validate that your data moved to the destination you specified.

You can specify additional parameters for the target export file:

- Path prefix: the path where the plugin saves output files on the target server.

- Rename file after upload finish: if selected, the file is first uploaded with a .tmp extension and is renamed without .tmp after the upload succeeds.

- Format: the file format, CSV or TSV.

- Compression: select to compress the file. gzip and bzip2 compression are supported.

- Header line: select to write the column names as the first line.

- Delimiter: the delimiter between values in the target file: |, tab, or comma.

- Quote policy: how values are quoted: MINIMUM, ALL, or NONE.

- Null string: the value written for null fields in the query result.

- End-of-line character: the line terminator: Carriage Return Line Feed (CRLF, used in Windows file systems), Line Feed (LF, used in Unix and macOS), or Carriage Return (CR, used in classic Mac OS).

- Encryption column names: a comma-separated list of the columns to encrypt.

- Encryption key: the key used by the encryption algorithm.

- Encryption iv: the initialization vector (IV), which prevents repetition in the encrypted data.
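To preview how the format options shape the output file, the sketch below writes rows with Python's standard `csv` module and optionally compresses the result. It is a local approximation, not the plugin's code: the mapping of MINIMUM, ALL, and NONE to `csv.QUOTE_MINIMAL`, `csv.QUOTE_ALL`, and `csv.QUOTE_NONE` and the `render_export` and `compress` names are assumptions, and encryption is not modeled.

```python
import bz2
import csv
import gzip
import io
from typing import Iterable, Optional, Sequence

# Assumed correspondence between the export options and csv module settings.
QUOTE_POLICIES = {"MINIMUM": csv.QUOTE_MINIMAL, "ALL": csv.QUOTE_ALL, "NONE": csv.QUOTE_NONE}
LINE_ENDINGS = {"CRLF": "\r\n", "LF": "\n", "CR": "\r"}


def render_export(columns: Sequence[str], rows: Iterable[Sequence[Optional[str]]], *,
                  delimiter: str = ",", quote_policy: str = "MINIMUM",
                  null_string: str = "", end_of_line: str = "CRLF",
                  header: bool = True) -> str:
    buf = io.StringIO(newline="")
    writer = csv.writer(
        buf,
        delimiter=delimiter,
        quoting=QUOTE_POLICIES[quote_policy],
        lineterminator=LINE_ENDINGS[end_of_line],
        # With QUOTE_NONE, values containing the delimiter must be escaped.
        escapechar="\\" if quote_policy == "NONE" else None,
    )
    if header:
        writer.writerow(columns)  # column names as the first line
    for row in rows:
        writer.writerow([null_string if value is None else value for value in row])
    return buf.getvalue()


def compress(text: str, codec: Optional[str] = None) -> bytes:
    """Encode as UTF-8 and apply gzip or bzip2 compression if requested."""
    data = text.encode("utf-8")
    if codec == "gzip":
        return gzip.compress(data)
    if codec == "bzip2":
        return bz2.compress(data)
    return data


text = render_export(["Email Address", "Subscriber Key"],
                     [["a@example.com", "S-001"], ["b@example.com", None]],
                     null_string="NULL")
print(repr(text))
# 'Email Address,Subscriber Key\r\na@example.com,S-001\r\nb@example.com,NULL\r\n'
```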

You can use Scheduled Jobs with Result Export to periodically write the output result to a target destination that you specify.

Treasure Data's scheduler feature supports periodic query execution to achieve high availability.

When two fields of a cron schedule conflict, the field that requests more frequent execution is followed and the other is ignored.

For example, in the cron schedule '0 0 1 * 1', the 'day of month' and 'day of week' fields conflict: the former requires the job to run on the first day of each month at midnight (00:00), while the latter requires it to run every Monday at midnight (00:00). The 'day of week' specification is followed because it runs more often.
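The conflict rule can be illustrated with Python's `calendar` module. The sketch rests on an assumption about how "more often" is measured: for a single month, it counts the days matched by the day-of-month field and by the day-of-week field and keeps the longer list (on a tie it keeps day of month, an arbitrary choice). It is not Treasure Data's scheduler code, and the function names are hypothetical.

```python
import calendar
from typing import List, Set


def days_by_month_day(year: int, month: int, days: Set[int]) -> List[int]:
    last = calendar.monthrange(year, month)[1]  # number of days in the month
    return [d for d in range(1, last + 1) if d in days]


def days_by_weekday(year: int, month: int, weekdays: Set[int]) -> List[int]:
    last = calendar.monthrange(year, month)[1]
    # calendar.weekday() uses Monday=0..Sunday=6; cron uses Sunday=0..Saturday=6.
    return [d for d in range(1, last + 1)
            if (calendar.weekday(year, month, d) + 1) % 7 in weekdays]


def resolve_conflict(year: int, month: int, days: Set[int], weekdays: Set[int]) -> List[int]:
    """Keep whichever field matches more days in the month (assumed reading of the rule)."""
    by_day = days_by_month_day(year, month, days)
    by_weekday = days_by_weekday(year, month, weekdays)
    return by_weekday if len(by_weekday) > len(by_day) else by_day


# '0 0 1 * 1': day of month = {1}, day of week = {1} (Monday)
print(resolve_conflict(2024, 1, {1}, {1}))  # [1, 8, 15, 22, 29]
```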

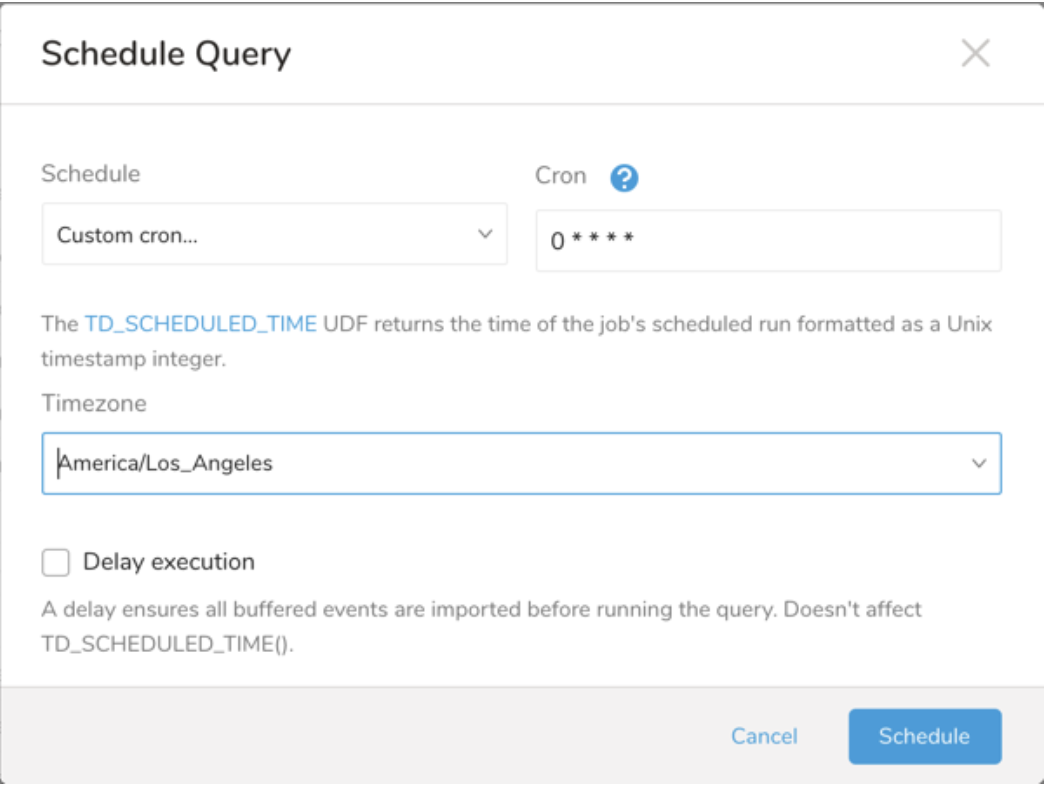

Navigate to Data Workbench > Queries.

Create a new query or select an existing query.

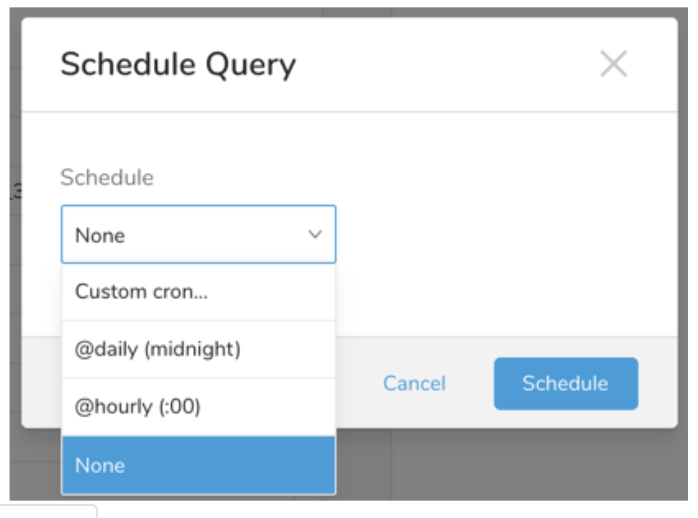

Next to Schedule, select None.

In the drop-down, select one of the following schedule options:

| Drop-down Value | Description |
|---|---|
| Custom cron... | Review Custom cron... details. |
| @daily (midnight) | Run once a day at midnight (00:00) in the specified time zone. |
| @hourly (:00) | Run every hour at 00 minutes. |
| None | No schedule. |

| Cron Value | Description |
|---|---|
| 0 * * * * | Run once an hour. |
| 0 0 * * * | Run once a day at midnight. |
| 0 0 1 * * | Run once a month at midnight on the first day of the month. |
| "" | Create a job that has no scheduled run time. |

A cron expression has five fields, '* * * * *', in the following order:

| Position | Field | Allowed values |
|---|---|---|
| 1 | Minute | 0-59 |
| 2 | Hour | 0-23 |
| 3 | Day of month | 1-31 |
| 4 | Month | 1-12 |
| 5 | Day of week | 0-6 (Sunday=0) |

The following named entries can be used:

- Day of Week: sun, mon, tue, wed, thu, fri, sat.

- Month: jan, feb, mar, apr, may, jun, jul, aug, sep, oct, nov, dec.

A single space is required between each field. The values for each field can be composed of:

| Field Value | Example | Example Description |
|---|---|---|
| A single value, within the limits displayed above for each field. | | |
| A wildcard '*' to indicate no restriction based on the field. | '0 0 1 * *' | Configures the schedule to run at midnight (00:00) on the first day of each month. |
| A range '2-5', indicating the range of accepted values for the field. | '0 0 1-10 * *' | Configures the schedule to run at midnight (00:00) on the first 10 days of each month. |
| A list of comma-separated values '2,3,4,5', indicating the list of accepted values for the field. | '0 0 1,11,21 * *' | Configures the schedule to run at midnight (00:00) on the 1st, 11th, and 21st day of each month. |
| A periodicity indicator '*/5' to express how often, based on the field's valid range of values, a schedule is allowed to run. | '30 */2 1 * *' | Configures the schedule to run on the 1st of every month, every 2 hours starting at 00:30. '0 0 */5 * *' configures the schedule to run at midnight (00:00) every 5 days starting on the 5th of each month. |
| A comma-separated list of any of the above except the '*' wildcard, for example '2,*/5,8-10'. | '0 0 5,*/10,25 * *' | Configures the schedule to run at midnight (00:00) every 5th, 10th, 20th, and 25th day of each month. |
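The field syntax in the table above can be sketched as a small Python expander that turns one field into the list of values it accepts. This is an illustration, not Treasure Data's parser, and the names are hypothetical. For '*/N' the sketch follows the table's own '*/5' example (values that are multiples of N, so day of month gives the 5th, 10th, ... 30th); standard Unix cron instead counts from the start of the range (1, 6, 11, ...), so confirm the behavior in Treasure Data before relying on it.

```python
from typing import Dict, List, Optional, Set

DAY_NAMES = {n: i for i, n in enumerate(["sun", "mon", "tue", "wed", "thu", "fri", "sat"])}
MONTH_NAMES = {n: i for i, n in enumerate(
    ["jan", "feb", "mar", "apr", "may", "jun", "jul", "aug", "sep", "oct", "nov", "dec"], start=1)}


def _value(token: str, names: Dict[str, int]) -> int:
    """Translate a named entry (e.g. 'mon', 'jan') or a number to an int."""
    token = token.lower()
    return names[token] if token in names else int(token)


def expand_field(expr: str, low: int, high: int,
                 names: Optional[Dict[str, int]] = None) -> List[int]:
    """Expand one cron field into the sorted list of values it accepts."""
    names = names or {}
    if expr == "*":
        return list(range(low, high + 1))
    values: Set[int] = set()
    for part in expr.split(","):
        if part.startswith("*/"):
            step = int(part[2:])
            # Assumption: multiples of the step, matching the '*/5' example above.
            values.update(v for v in range(low, high + 1) if v % step == 0)
        elif "-" in part:
            start, end = (_value(t, names) for t in part.split("-", 1))
            values.update(range(start, end + 1))
        else:
            values.add(_value(part, names))
    if any(v < low or v > high for v in values):
        raise ValueError(f"value out of range {low}-{high}: {expr}")
    return sorted(values)


print(expand_field("1,11,21", 1, 31))            # [1, 11, 21]
print(expand_field("*/2", 0, 23))                # [0, 2, 4, 6, 8, 10, 12, 14, 16, 18, 20, 22]
print(expand_field("mon-fri", 0, 6, DAY_NAMES))  # [1, 2, 3, 4, 5]
```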

(Optional) You can delay the start time of a query by enabling Delay execution.

Save the query with a name and run it, or just run the query. Upon successful completion of the query, the query result is automatically exported to the specified destination.

Scheduled jobs that continuously fail due to configuration errors may be disabled on the system side after several notifications.


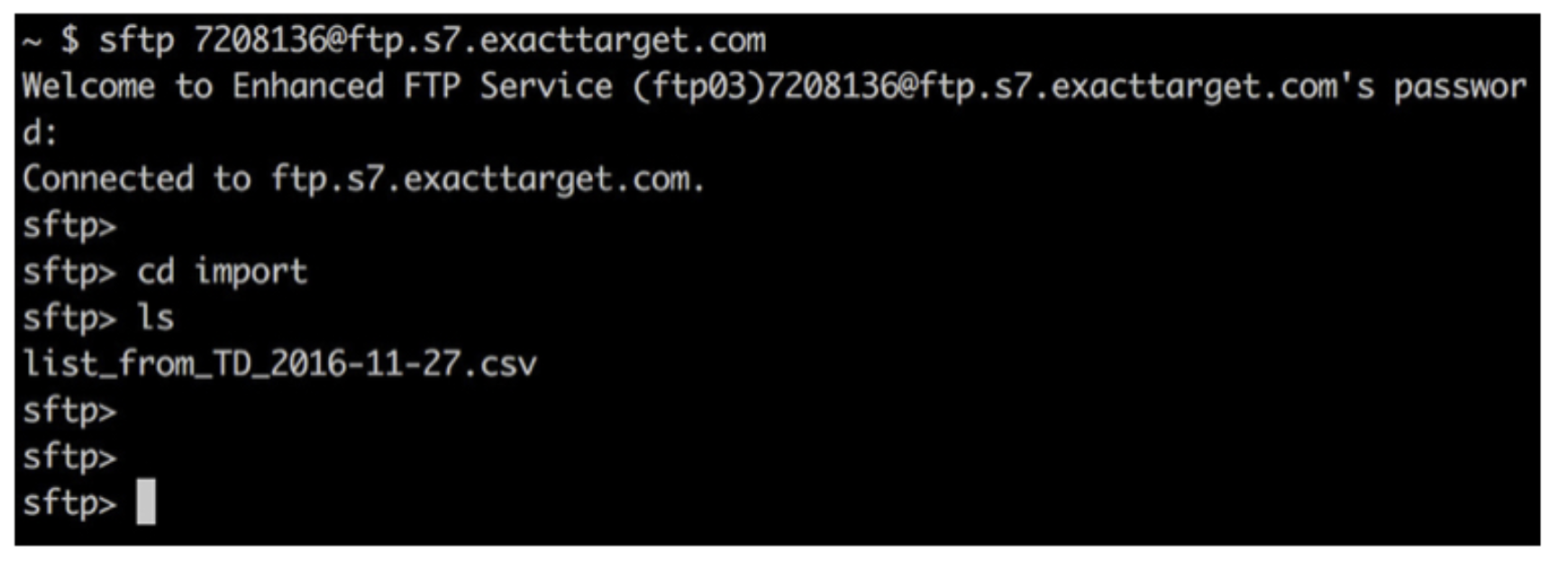

After the job finishes, you can check the output file on the SFTP server with any standard SFTP client, for example, the OpenSSH sftp command.

Check the SFMC dashboard to verify a successful import. If the import and mail delivery are successful, Complete appears on the Automation Studio Overview page.

You can also send segment data to the target platform by creating an activation in the Audience Studio.

- Navigate to Audience Studio.

- Select a parent segment.

- Open the target segment, right-click, and then select Create Activation.

- In the Details panel, enter an Activation name and configure the activation according to the previous section on Configuration Parameters.

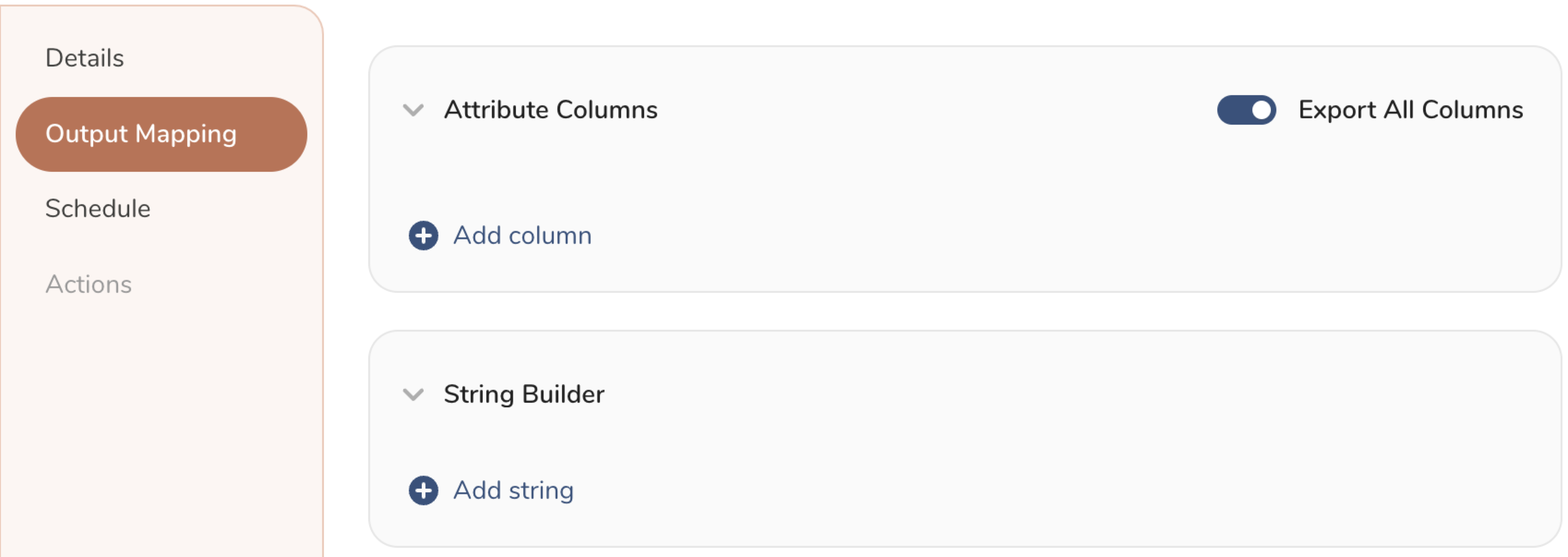

- Customize the activation output in the Output Mapping panel.

- Attribute Columns

- Select Export All Columns to export all columns without making any changes.

- Select + Add Columns to add specific columns for the export. The Output Column Name pre-populates with the Source column name; you can update the Output Column Name. Continue to select + Add Columns to add more columns to your activation output.

- String Builder

- Select + Add string to create strings for export. Select from the following values:

- String: Choose any value; use text to create a custom value.

- Timestamp: The date and time of the export.

- Segment Id: The segment ID number.

- Segment Name: The segment name.

- Audience Id: The parent segment number.


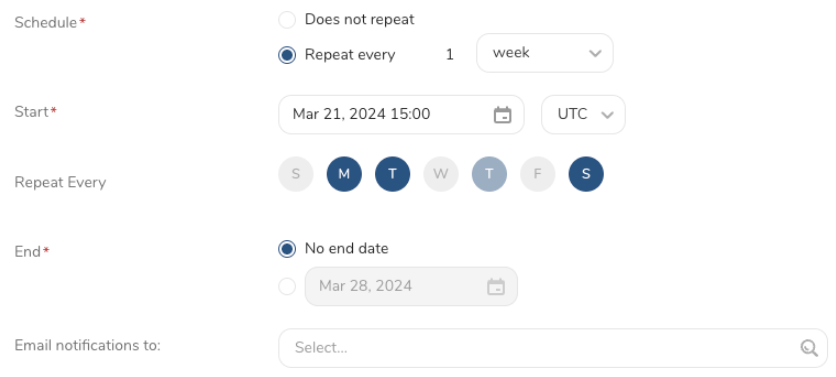

- Set a Schedule.

- Select the values to define your schedule and optionally include email notifications.

- Select Create.

If you need to create an activation for a batch journey, review Creating a Batch Journey Activation.

- You can also use this integration in a TD workflow as part of a more advanced data pipeline.