Salesforce (SFDC) is a leading customer relationship management (CRM) platform. It provides CRM software and applications focused on sales, customer service, marketing automation, e-commerce, analytics, and application development. Over 150,000 companies, both big and small, are growing their businesses with Salesforce.

This Treasure Data export integration writes job results from Treasure Data directly to Salesforce using version 2 of the SFDC output connector.

- A Salesforce.com username, password, and security token for API integration.

- The "API Enabled" permission is enabled.

- A target Salesforce.com object exists, and you have permissions to read and write to it.

- The "Hard delete" permission is enabled for your user profile.

If your security policy requires IP whitelisting, you must add Treasure Data's IP addresses to your allowlist to ensure a successful connection.

Please find the complete list of static IP addresses, organized by region, at the following document

SFDC V2 output supports these authentication types:

- Credentials

- OAuth

- Session ID

- Open TD Console.

- Navigate to Integrations Hub > Catalog.

- Search for Salesforce and select Salesforce.

- Select Create Authentication.

- Type the credentials to authenticate:

| Parameter | Description |
|---|---|
| Login URL | The Salesforce login URL, for example, https://login.salesforce.com. When the authentication method is Session ID, the value must be the Salesforce instance URL. |
| Authentication method | One of three supported authentication types: Credentials, OAuth, or Session ID. |
| OAuth connection | |
| Username | The Salesforce login username. Required if the authentication method is Credentials. |
| Password | The Salesforce login password. Required if the authentication method is Credentials. |
| Client ID | The Salesforce app's client ID. Required for the input connector only. |
| Client secret | The Salesforce app's client secret. Required for the input connector only. |
| Security token | The Salesforce security token. This field can be omitted if the token is appended to the value in the Password field. |
| Session ID | The Salesforce session ID. |
| Initial retry delay | The number of seconds to wait before retrying after the first failed connection attempt. Later retries use exponential backoff. |
| Retry limit | The maximum number of retries. |

- Select Continue.

- Type a name for your connection.

- Select Done

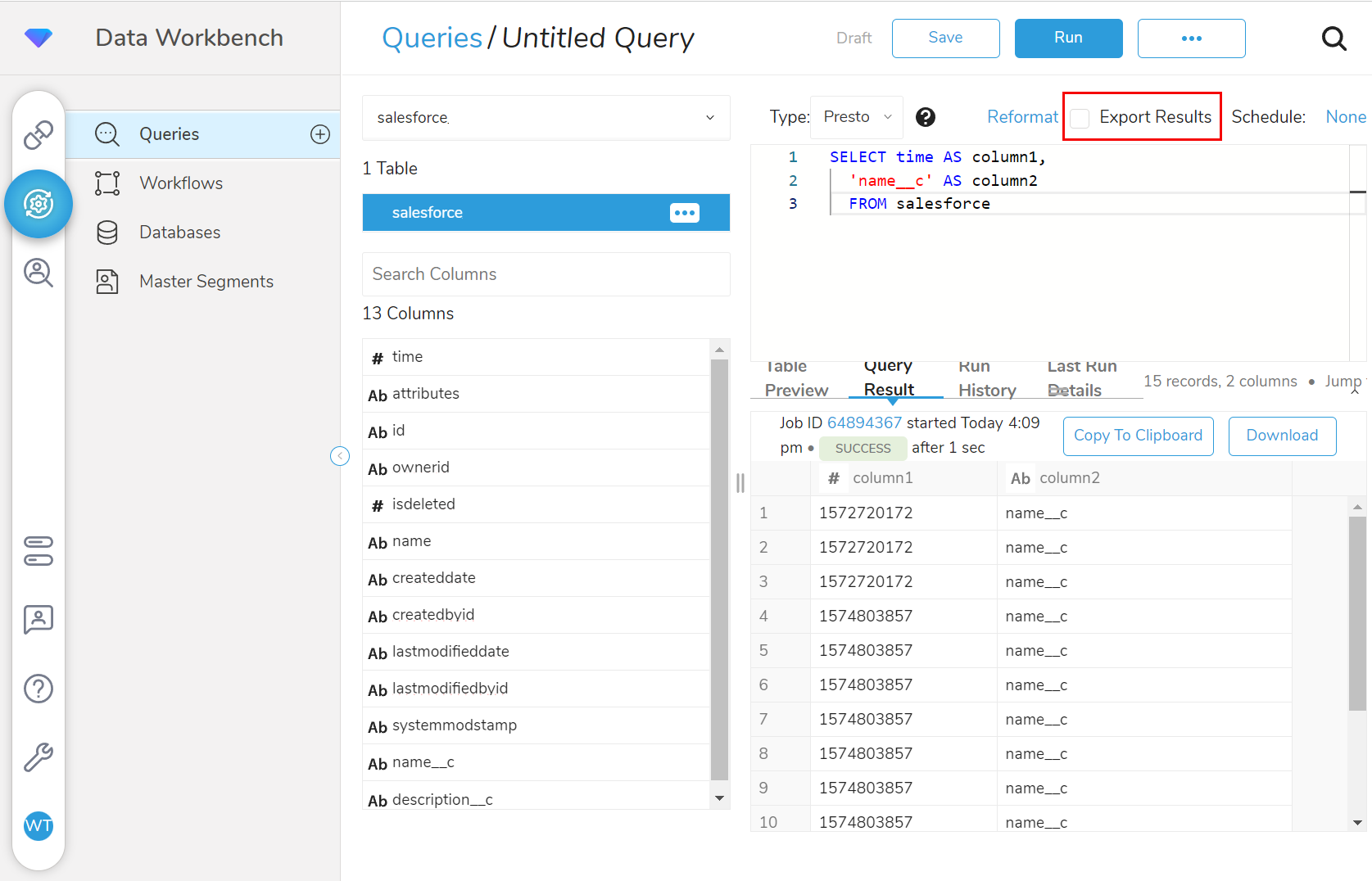

To avoid any issues with result export, define column aliases in your query such that resulting column names from the query match the Salesforce field names for default fields and API names (usually ending with __c) for custom fields.

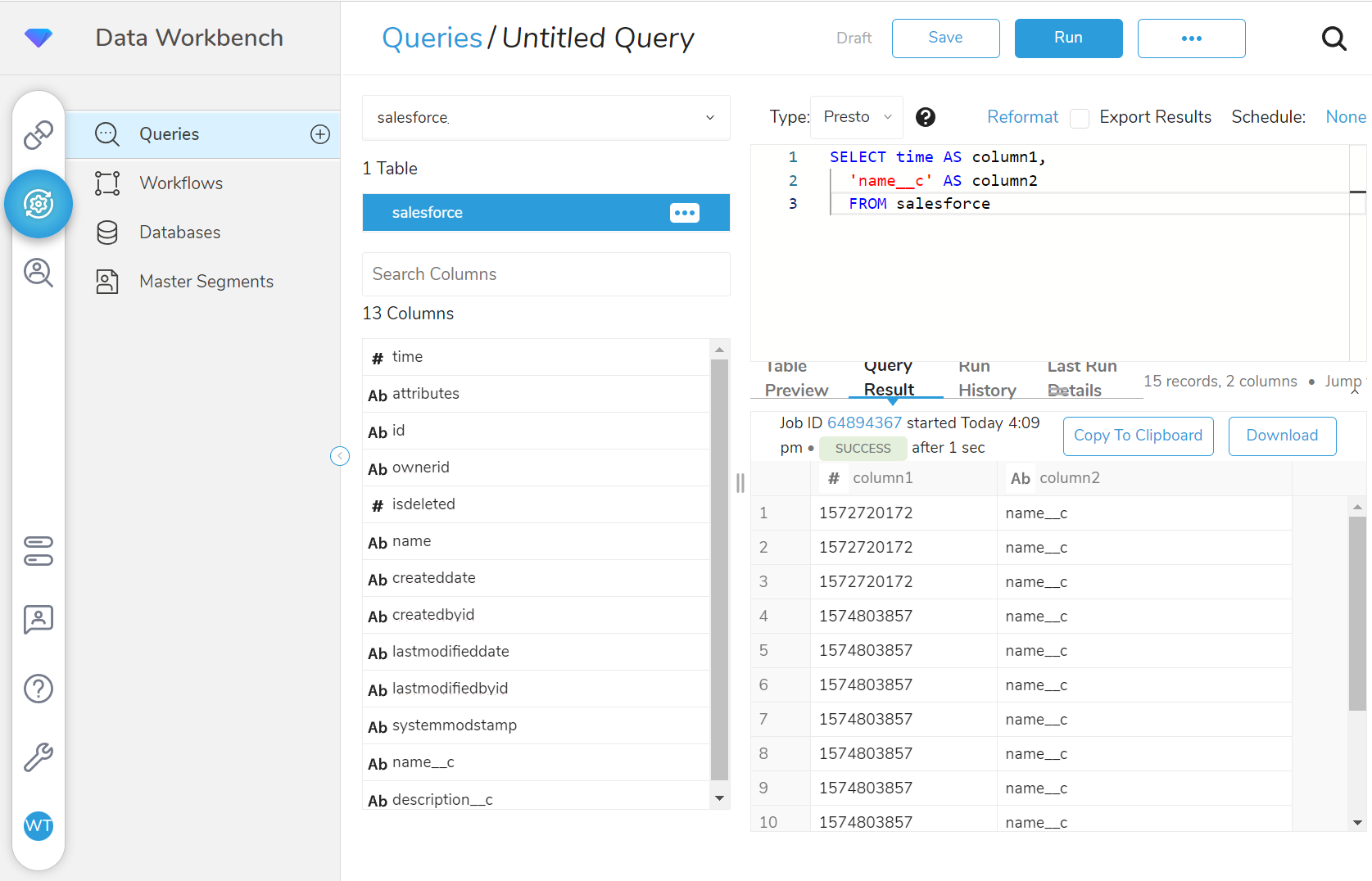

Navigate to Data Workbench > Queries.

Select New Query.

Run the query to validate the result set. For example, a query could look something like this:
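As a rough illustration of the column aliasing described above, the following Python sketch builds a query whose output column names match Salesforce field names. The table name `contacts`, the source column names, and the custom field `Loyalty_Tier__c` are hypothetical placeholders, and whether a quoted alias keeps its letter case depends on the query engine you use.

```python
# Hypothetical mapping: Treasure Data column name -> Salesforce field name.
FIELD_MAP = {
    "email": "Email",                    # default field: use the field name
    "last_name": "LastName",             # default field: use the field name
    "loyalty_tier": "Loyalty_Tier__c",   # custom field: use the API name (ends with __c)
}


def build_export_query(table: str, field_map: dict[str, str]) -> str:
    """Return a SELECT statement that aliases each column to its Salesforce name."""
    select_list = ",\n  ".join(
        f'{source} AS "{target}"' for source, target in field_map.items()
    )
    return f"SELECT\n  {select_list}\nFROM {table}"


print(build_export_query("contacts", FIELD_MAP))
```

Keeping the mapping in one place makes it easier to compare the aliases against the target object's field list before you run the export.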

- Select Export Results.

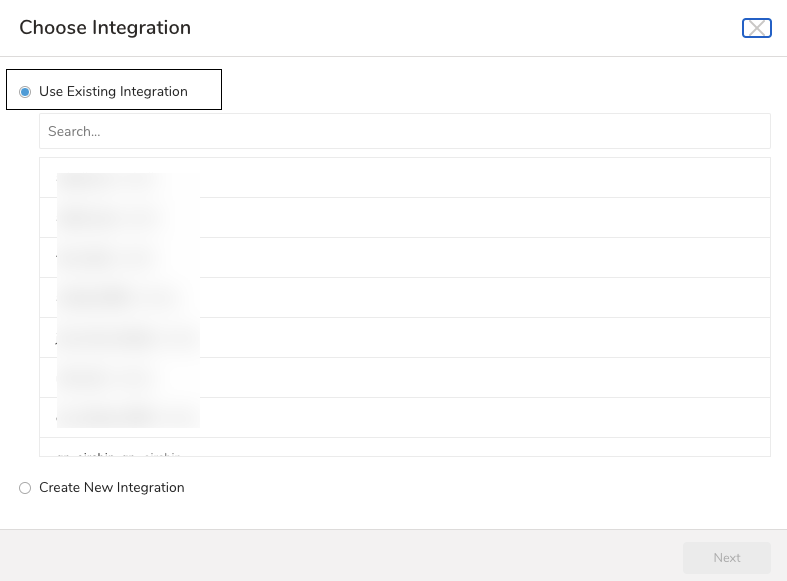

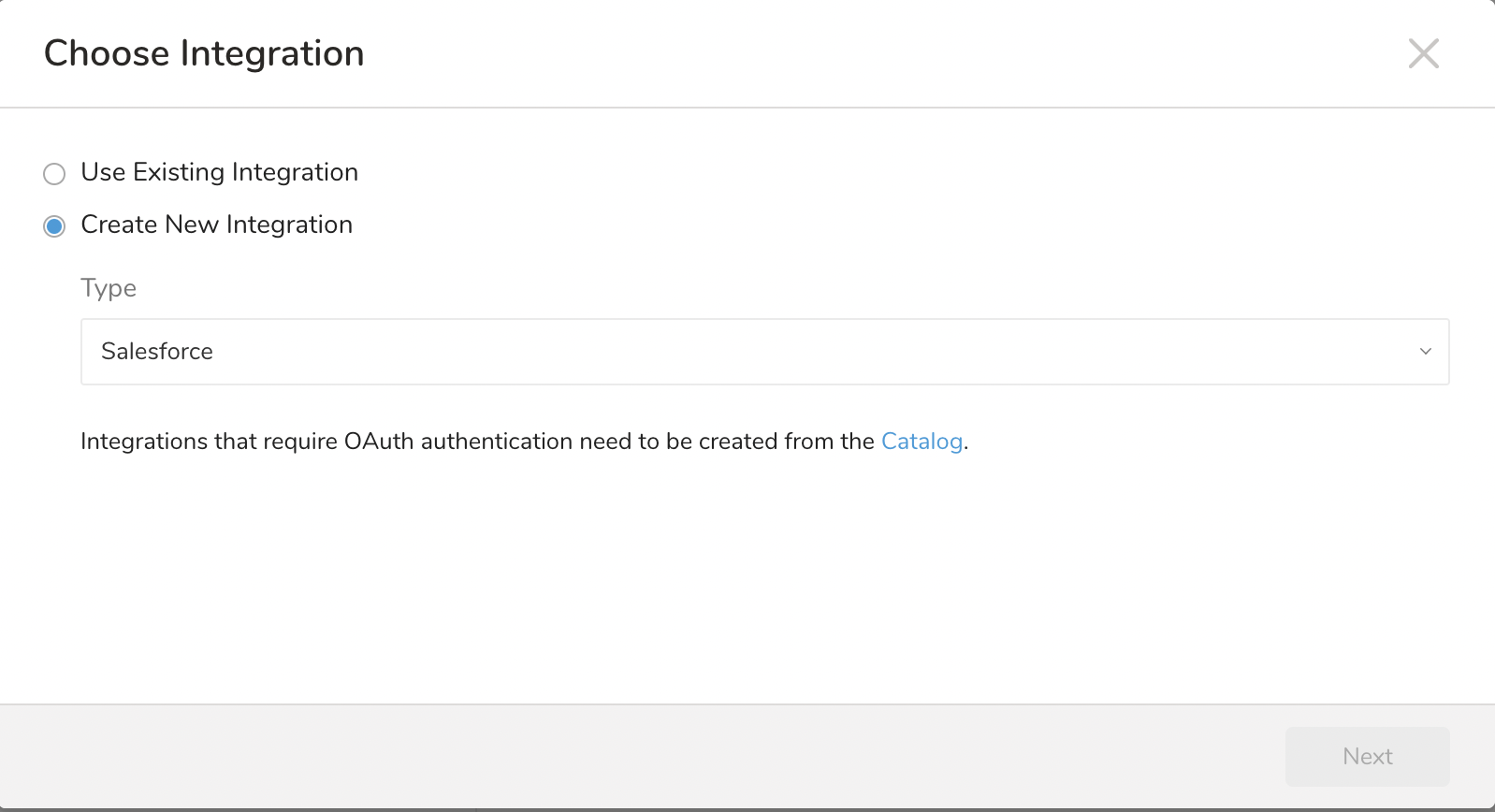

- You can select an existing authentication or create a new authentication for the external service to be used for output. Choose one of the following:

Use Existing Integration

Create New Integration: This option is not available for integrations that support OAuth. In that case, you are sent back to the Catalog to create the authentication there.

For Use Existing Integration

| Parameter | Description |

|---|---|

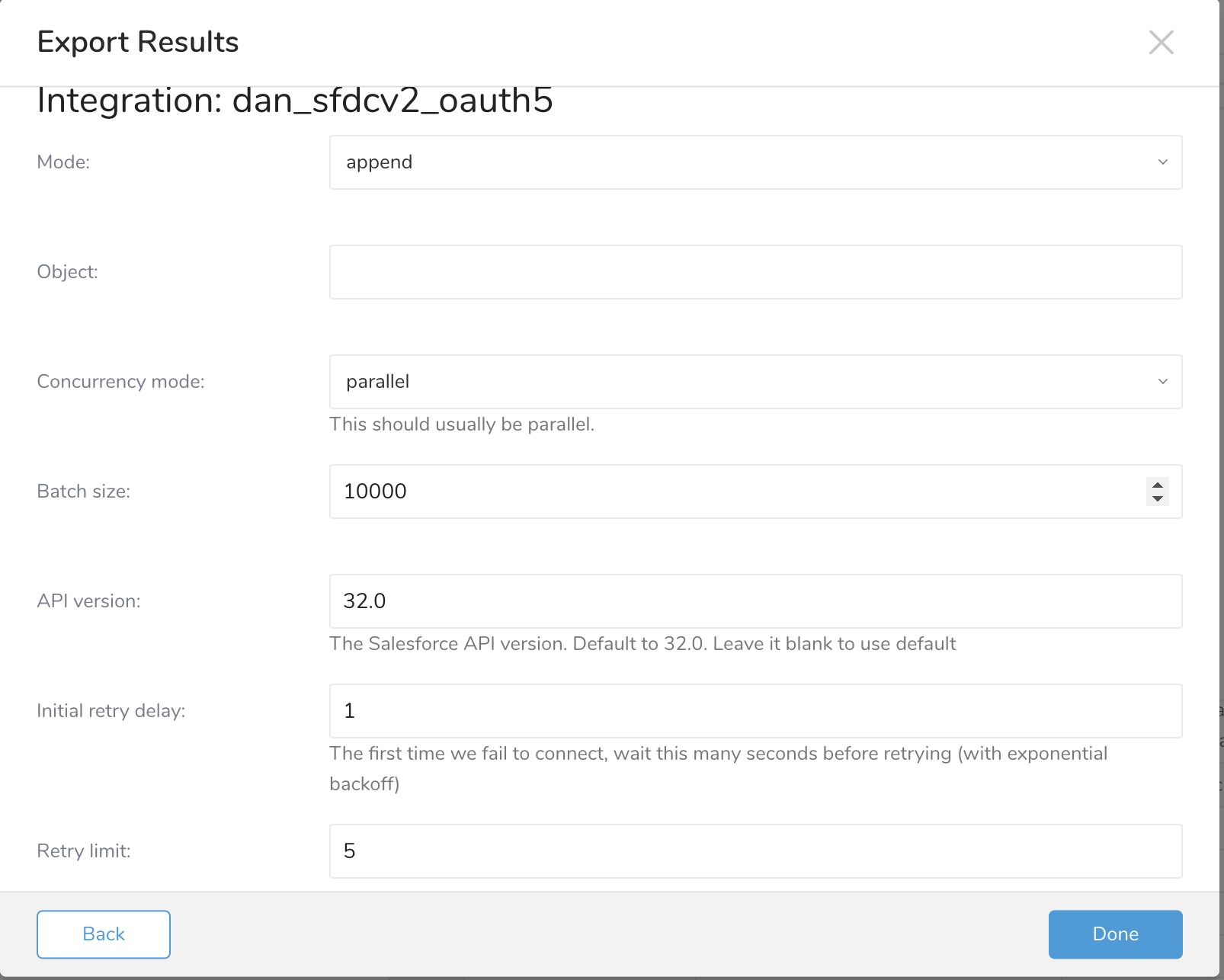

| Mode |

|

| Object | Salesforce.com Object |

| Upsert new records? | This option is available when mode is update. |

| Key | This option is available when mode is update. If Upsert new records? checkbox is checked, this field must be the externalId field in Salesforce. The externalId field can only set for a custom field. System fields—such as Id, CreatedDate, LastModifiedDate, CreatedByID, etc.—are not custom fields. |

| Hard delete? | This option is available when mode is truncate. |

| Concurrency mode | The concurrency_mode option controls how the data is uploaded to the Salesforce.com organization. The default mode is parallel. With the parallel method, data is uploaded in parallel. This is the most reliable and effective method for most situations. if you see an error message that says "UNABLE_TO_LOCK_ROW," try concurrency_mode=serial instead. |

| Batch size | By default, this splits the records in the result of a query into chunks of 10000 records and bulk uploads one chunk at a time. |

| API version | The Salesforce API version. Default is 32.0. |

| Initial retry delay | If the initial attempt to connect fails, wait this many seconds before retrying (with exponential backoff). |

| Retry limit | This option sets the number of attempts that should be made to write the result to the configured Salesforce.com destination if errors occur. If the export fails more than the set number of retries, the query fails. |
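To make the Batch size, Initial retry delay, and Retry limit parameters more concrete, here is a minimal Python sketch of chunked uploads and exponential backoff. It is not the connector's implementation: the function names are hypothetical, and the doubling factor is an assumption, because the parameter descriptions only say that the backoff is exponential.

```python
from typing import Iterator, Sequence


def chunk_records(records: Sequence[dict], batch_size: int = 10000) -> Iterator[Sequence[dict]]:
    """Yield consecutive slices of at most `batch_size` records."""
    for start in range(0, len(records), batch_size):
        yield records[start:start + batch_size]


def retry_delays(initial_delay: float, retry_limit: int, factor: float = 2.0) -> list[float]:
    """Return the wait time before each retry; `factor` is an assumed multiplier."""
    return [initial_delay * factor ** attempt for attempt in range(retry_limit)]


# 25,000 result rows with the default batch size are uploaded as three chunks.
rows = [{"Email": f"user{i}@example.com"} for i in range(25000)]
print([len(chunk) for chunk in chunk_records(rows)])

# Wait times in seconds for an initial delay of 1 and a retry limit of 4.
print(retry_delays(initial_delay=1, retry_limit=4))
```

Under these assumptions, a smaller batch size means more, shorter uploads, and a higher retry limit lengthens the total time a failing export can take before the query fails.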

- After you have entered the required information in the fields, select Done.

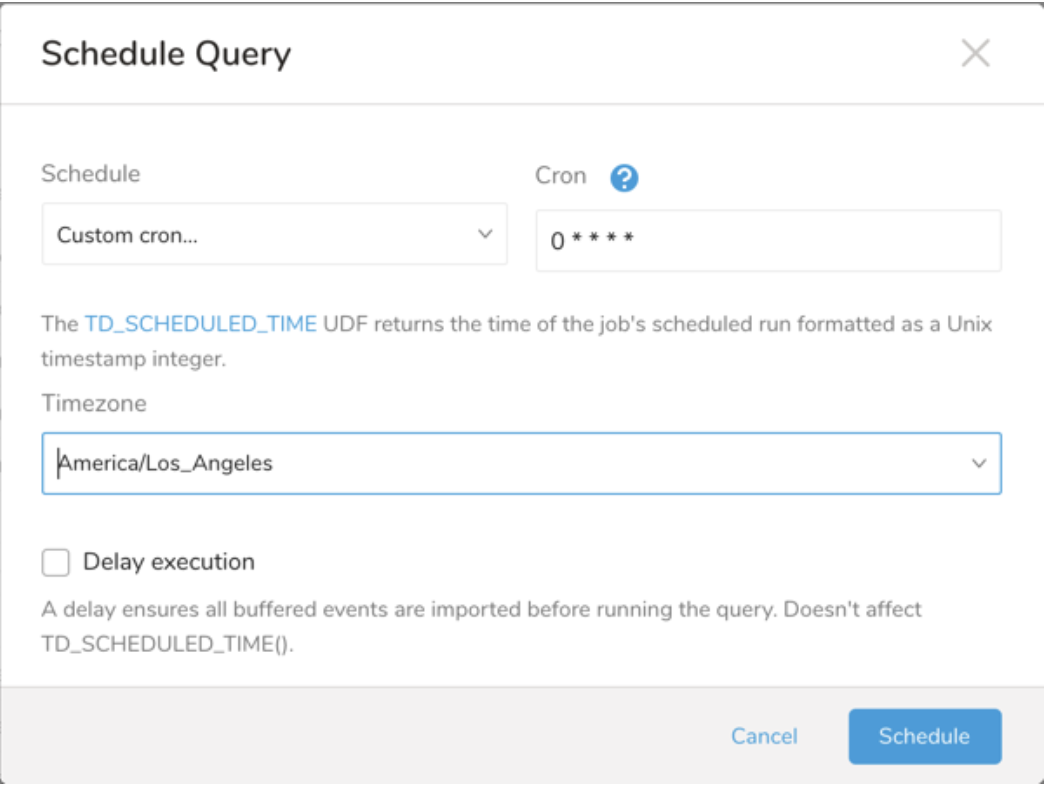

You can use Scheduled Jobs with Result Export to periodically write the output result to a target destination that you specify.

Treasure Data's scheduler feature supports periodic query execution to achieve high availability.

When two fields of a cron schedule conflict, the field that requests more frequent execution is followed and the other field is ignored.

For example, if the cron schedule is '0 0 1 * 1', then the 'day of month' specification and 'day of week' are discordant because the former specification requires it to run every first day of each month at midnight (00:00), while the latter specification requires it to run every Monday at midnight (00:00). The latter specification is followed.
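The comparison in this example can be checked with a short Python sketch that counts how often each field would trigger during one year. The year 2024 and the helper names are arbitrary choices for illustration, and the sketch only illustrates the rule as stated here; it does not reproduce the scheduler's logic.

```python
from datetime import date, timedelta


def cron_weekday(day: date) -> int:
    """Convert Python's weekday (Monday=0) to the cron convention (Sunday=0)."""
    return (day.weekday() + 1) % 7


def days_matching(year: int, predicate) -> int:
    """Count the days in `year` for which `predicate(day)` is true."""
    current = date(year, 1, 1)
    count = 0
    while current.year == year:
        if predicate(current):
            count += 1
        current += timedelta(days=1)
    return count


# Schedule '0 0 1 * 1': day of month = 1, day of week = 1 (Monday).
first_of_month = days_matching(2024, lambda d: d.day == 1)
mondays = days_matching(2024, lambda d: cron_weekday(d) == 1)

followed = "day of week" if mondays > first_of_month else "day of month"
print(first_of_month, mondays, followed)  # prints: 12 53 day of week
```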

Navigate to Data Workbench > Queries

Create a new query or select an existing query.

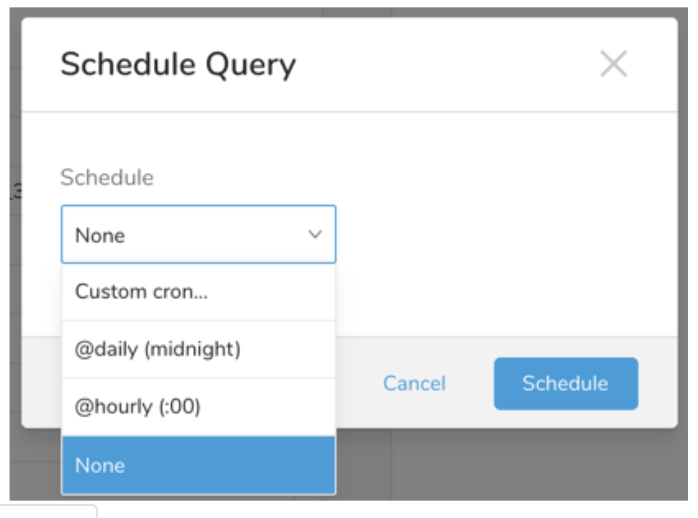

Next to Schedule, select None.

In the drop-down, select one of the following schedule options:

| Drop-down Value | Description |
|---|---|
| Custom cron... | Review Custom cron... details. |
| @daily (midnight) | Run once a day at midnight (00:00 am) in the specified time zone. |
| @hourly (:00) | Run every hour at 00 minutes. |
| None | No schedule. |

| Cron Value | Description |
|---|---|
| 0 * * * * | Run once an hour. |
| 0 0 * * * | Run once a day at midnight. |
| 0 0 1 * * | Run once a month at midnight on the morning of the first day of the month. |
| "" | Create a job that has no scheduled run time. |

    *    *    *    *    *
    -    -    -    -    -
    |    |    |    |    |
    |    |    |    |    +----- day of week (0 - 6) (Sunday=0)
    |    |    |    +---------- month (1 - 12)
    |    |    +--------------- day of month (1 - 31)
    |    +-------------------- hour (0 - 23)
    +------------------------- min (0 - 59)

The following named entries can be used:

- Day of Week: sun, mon, tue, wed, thu, fri, sat.

- Month: jan, feb, mar, apr, may, jun, jul, aug, sep, oct, nov, dec.

A single space is required between each field. The values for each field can be composed of:

| Field Value | Example | Example Description |
|---|---|---|
| A single value, within the limits displayed above for each field. | | |
| A wildcard '*' to indicate no restriction based on the field. | '0 0 1 * *' | Configures the schedule to run at midnight (00:00) on the first day of each month. |
| A range '2-5', indicating the range of accepted values for the field. | '0 0 1-10 * *' | Configures the schedule to run at midnight (00:00) on the first 10 days of each month. |
| A list of comma-separated values '2,3,4,5', indicating the list of accepted values for the field. | '0 0 1,11,21 * *' | Configures the schedule to run at midnight (00:00) every 1st, 11th, and 21st day of each month. |
| A periodicity indicator '*/5' to express how often, based on the field's valid range of values, a schedule is allowed to run. | '30 */2 1 * *' | Configures the schedule to run on the 1st of every month, every 2 hours starting at 00:30. '0 0 */5 * *' configures the schedule to run at midnight (00:00) every 5 days starting on the 5th of each month. |
| A comma-separated list of any of the above except the '*' wildcard, for example '2,*/5,8-10'. | '0 0 5,*/10,25 * *' | Configures the schedule to run at midnight (00:00) every 5th, 10th, 20th, and 25th day of each month. |
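The field rules above can be sketched as a small Python parser that splits a cron expression into its five fields and expands single values, wildcards, ranges, lists, and named entries into explicit values. This is a simplified illustration with hypothetical function names, not the scheduler's parser. Periodicity indicators such as '*/5' are deliberately rejected, because their starting offset is specific to the scheduler.

```python
DAY_NAMES = {name: i for i, name in enumerate(
    ["sun", "mon", "tue", "wed", "thu", "fri", "sat"])}
MONTH_NAMES = {name: i for i, name in enumerate(
    ["jan", "feb", "mar", "apr", "may", "jun",
     "jul", "aug", "sep", "oct", "nov", "dec"], start=1)}

# (field name, lowest value, highest value, named entries)
FIELDS = [
    ("min", 0, 59, {}),
    ("hour", 0, 23, {}),
    ("day of month", 1, 31, {}),
    ("month", 1, 12, MONTH_NAMES),
    ("day of week", 0, 6, DAY_NAMES),
]


def _to_int(token, names):
    """Translate a named entry (for example 'mon') or a numeric token to an int."""
    token = token.lower()
    return names[token] if token in names else int(token)


def expand_field(spec, low, high, names):
    """Expand one cron field into a sorted list of the values it accepts."""
    if spec == "*":
        return list(range(low, high + 1))
    values = set()
    for part in spec.split(","):  # a '*' inside a list fails in _to_int
        if "/" in part:
            raise ValueError("periodicity such as */5 is not handled in this sketch")
        if "-" in part:
            start, end = (_to_int(p, names) for p in part.split("-"))
            values.update(range(start, end + 1))
        else:
            values.add(_to_int(part, names))
    if any(v < low or v > high for v in values):
        raise ValueError(f"value outside {low}-{high} in field: {spec}")
    return sorted(values)


def parse_cron(expr):
    """Split a cron expression on single spaces and expand each of its five fields."""
    parts = expr.split(" ")
    if len(parts) != 5:
        raise ValueError("expected five fields separated by single spaces")
    return {name: expand_field(part, low, high, names)
            for part, (name, low, high, names) in zip(parts, FIELDS)}


schedule = parse_cron("0 0 1,11,21 * *")
print(schedule["day of month"])  # prints: [1, 11, 21]
```

Expanding a schedule this way before saving it is a quick check that the expression selects the days and hours you intended.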

- (Optional) You can delay the start time of a query by enabling Delay execution.

Save the query with a name and run, or just run the query. Upon successful completion of the query, the query result is automatically exported to the specified destination.

Scheduled jobs that continuously fail due to configuration errors may be disabled on the system side after several notifications.

You can also send segment data to the target platform by creating an activation in the Audience Studio.

- Navigate to Audience Studio.

- Select a parent segment.

- Open the target segment, right-click, and then select Create Activation.

- In the Details panel, enter an Activation name and configure the activation according to the previous section on Configuration Parameters.

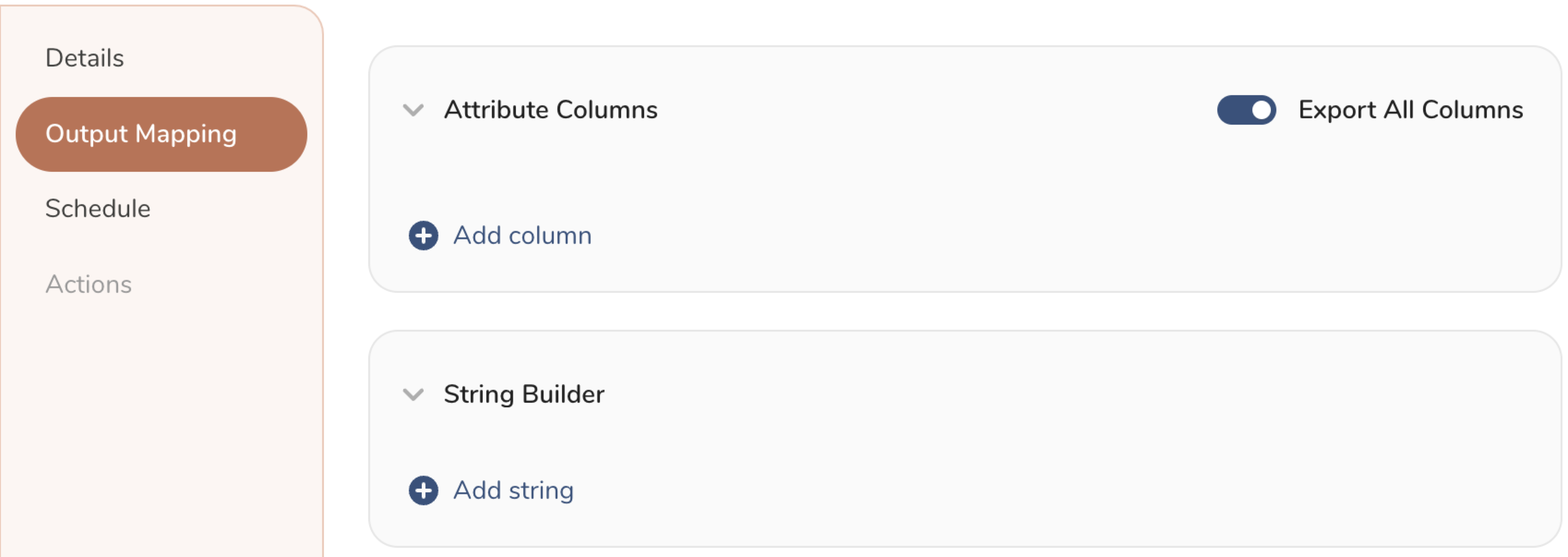

- Customize the activation output in the Output Mapping panel.

  - Attribute Columns

    - Select Export All Columns to export all columns without making any changes.

    - Select + Add Columns to add specific columns for the export. The Output Column Name pre-populates with the same Source column name. You can update the Output Column Name. Continue to select + Add Columns to add new columns for your activation output.

  - String Builder

    - Select + Add string to create strings for export. Select from the following values:

      - String: Choose any value; use text to create a custom value.

      - Timestamp: The date and time of the export.

      - Segment Id: The segment ID number.

      - Segment Name: The segment name.

      - Audience Id: The parent segment number.

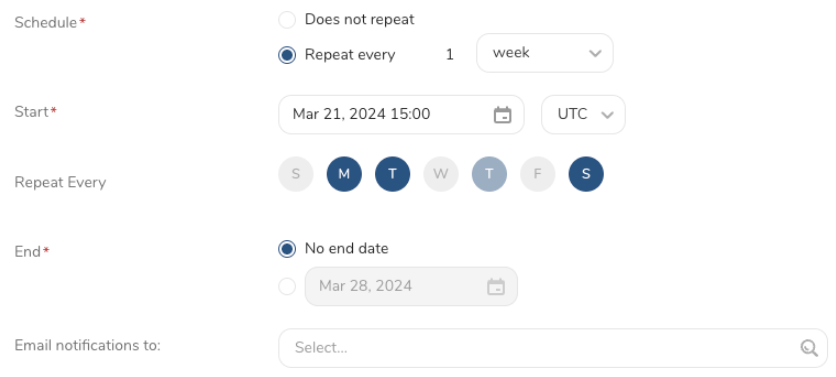

- Set a Schedule.

- Select the values to define your schedule and optionally include email notifications.

- Select Create.

If you need to create an activation for a batch journey, review Creating a Batch Journey Activation.