PUSHCODE is a popular Japanese web push service that delivers the most relevant content at the right time to individual site visitors.

This import integration for PUSHCODE enables you to import the following data provided by PUSHCODE from Google Cloud Storage:

- Subscriber List

- Segment List

- Push Result

- Basic Knowledge of Treasure Data

- Basic knowledge of PUSHCODE

- Basic Knowledge of Import Connector for Google Cloud Storage

- PUSHCODE account

- Enable Treasure Data Integration setting in PUSHCODE

- Files of Subscriber List / Segment List / Push Result are created every day.

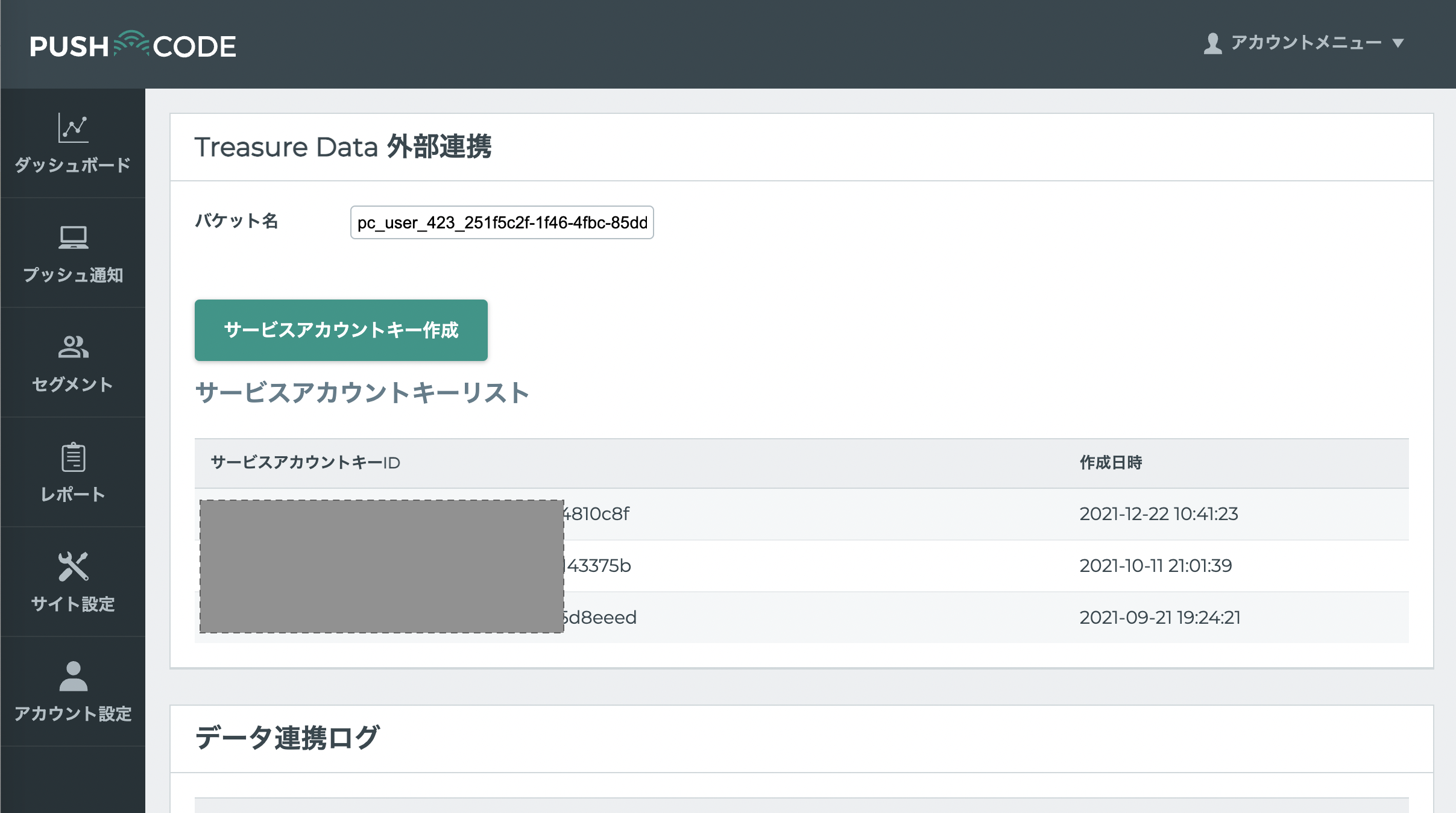

- Navigate to PUSHCODE Console > Account Settings.

- Enable the Treasure Data integration.

Once you configure the Treasure Data integration, the following information is provided.

- Allocated Google Cloud Storage Bucket Name

- Google Cloud Storage service account key (JSON file)

Keep both available; you need them for the settings in TD Console.
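Before uploading the JSON key file, it can help to confirm that it is intact. The following is a minimal sketch, assuming the key uses the standard Google service account key layout; the function name `check_service_account_key` and the list of checked fields are illustrative choices, not part of PUSHCODE or Treasure Data.

```python
import json

# Fields assumed to be present in a standard Google service account key.
REQUIRED_FIELDS = ("type", "project_id", "private_key", "client_email")


def check_service_account_key(text):
    """Return a list of problems found in a service account key JSON string."""
    try:
        key = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(key, dict):
        return ["top-level value is not a JSON object"]
    # Report every required field that is absent or empty.
    problems = [f"missing field: {name}" for name in REQUIRED_FIELDS if not key.get(name)]
    if key.get("type") and key["type"] != "service_account":
        problems.append(f"unexpected type: {key['type']}")
    return problems


if __name__ == "__main__":
    # Placeholder values for illustration only; a real key comes from PUSHCODE.
    demo = json.dumps({
        "type": "service_account",
        "project_id": "example-project",
        "private_key": "PLACEHOLDER",
        "client_email": "example@example-project.iam.gserviceaccount.com",
    })
    print(check_service_account_key(demo))
```

An empty list means that none of the checked fields is missing. It does not prove that the key is authorized for the bucket.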

The PUSHCODE integration uses the Google Cloud Storage integration. Follow the steps in the Google Cloud Storage Import Integration to create an authentication using a JSON key file.

Open TD Console.

Navigate to Integrations Hub > Authentications.

Locate your new authentication and select New Source.

| Parameter | Description |
|---|---|
| Data Transfer Name | A name of your choice for the transfer. |
| Authentication | The name of the authentication used for the transfer. |

Type a source name in the Data Transfer Name field.

Select Next.

The Create Source page displays with the Source Table tab selected.

- Select Next.

- The Source Table dialog opens. Edit the following parameters based on the target PUSHCODE data.

| Import Target List | Parameter | Description |
|---|---|---|
| Subscriber List | Bucket | The bucket name shown in the PUSHCODE console |
| | Path Prefix | "subscriber_list/subscriber_list_" |
| | Path Regex | "subscriber_list/subscriber_list_[0-9]{12}.csv.gz$" |
| | Start after path | To import only the latest file, specify 'subscriber_list/subscriber_list_YYYYMMDD0000.csv.gz', where YYYY is the year, MM is the month, and DD is the day of the month (zero-padded). To import all past data, leave it blank. |
| | Incremental? | Check this if you want incremental loading. |
| Segment List | Bucket | The bucket name shown in the PUSHCODE console |
| | Path Prefix | "segment_list/segment_list_" |
| | Path Regex | "segment_list/segment_list_[0-9]{12}.csv.gz$" |
| | Start after path | To import only the latest file, specify 'segment_list/segment_list_YYYYMMDD0000.csv.gz', where YYYY is the year, MM is the month, and DD is the day of the month (zero-padded). To import all past data, leave it blank. |
| | Incremental? | Checked |
| Push Result | Bucket | The bucket name shown in the PUSHCODE console |
| | Path Prefix | "push_result/push_result_" |
| | Path Regex | "push_result/push_result_[0-9]{12}.csv.gz$" |
| | Start after path | To import only the latest file, specify 'push_result/push_result_YYYYMMDD0000.csv.gz', where YYYY is the year, MM is the month, and DD is the day of the month (zero-padded). To import all past data, leave it blank. |
| | Incremental? | Checked |
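The path settings in the table above can be checked locally before you enter them. The sketch below builds a daily file path and tests it against the Path Regex values. It also illustrates one reading of Start after path: that only objects whose names sort after the given path are imported. That lexicographic reading is an assumption, and the helper names `daily_path` and `files_after` are hypothetical.

```python
import re
from datetime import date

# Path Regex values copied from the table above.
PATH_REGEX = {
    "subscriber_list": r"subscriber_list/subscriber_list_[0-9]{12}.csv.gz$",
    "segment_list": r"segment_list/segment_list_[0-9]{12}.csv.gz$",
    "push_result": r"push_result/push_result_[0-9]{12}.csv.gz$",
}


def daily_path(target, day):
    """Build the object path for one day's file (YYYYMMDD followed by 0000)."""
    return f"{target}/{target}_{day:%Y%m%d}0000.csv.gz"


def files_after(paths, start_after):
    """Keep paths that sort after start_after (assumed lexicographic comparison)."""
    return sorted(p for p in paths if p > start_after)


if __name__ == "__main__":
    # Three consecutive daily files, using made-up dates.
    objects = [daily_path("push_result", date(2024, 1, d)) for d in (1, 2, 3)]
    for path in objects:
        assert re.search(PATH_REGEX["push_result"], path)
    # Under the assumption above, only the file for the third day remains.
    print(files_after(objects, daily_path("push_result", date(2024, 1, 2))))
```

Because the date in the file name is zero-padded, string order matches date order, which is what makes a path-based cutoff workable.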

The imported file formats are as follows. You don't need to manually define data settings as the connector automatically guesses these formats.

| Import Target List | Name | Description | Type |
|---|---|---|---|
| Subscriber List | domain_id | Your configured site ID | int |
| | domain | Your configured site domain | string |
| | user_id | ID of the user who subscribed | int |
| | browser_id | ID of the browser where the user subscribed | int |
| | user_agent | Browser user agent | string |
| | subscribe_time | When the user subscribed | string |
| | status | 0: Subscribed / 1: Denied | int |
| | unsubscribe_time | When the user denied (omitted if the user has not denied) | string |
| Segment List | list_id | User list ID | int |
| | list_name | User list name | string |
| | user_id | ID of the user who is delivered | string |
| Push Result | domain_id | Your configured site ID | int |
| | domain | Your configured site domain | string |
| | push_scenario_inst_i | Push notification ID | int |
| | browser_id | ID of the browser where the user subscribed | int |
| | user_id | ID of the user who subscribed | int |
| | push_create_time | When PUSHCODE registered a push notification | string |
| | push_sent_time | When PUSHCODE sent a push notification | string |
| | push_view_time | When the user viewed a push notification | int |
| | push_click_time | When the user clicked a push notification | string |
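To inspect a downloaded file locally, the column types listed above can be applied by hand. The following is a rough sketch for the Subscriber List file. It assumes the file is UTF-8 and has a header row whose names match the table; neither is confirmed here. The function `read_subscribers` and the sample row are made up for illustration.

```python
import csv
import gzip
import io

# Column types as listed in the table above for the Subscriber List file.
SUBSCRIBER_COLUMNS = {
    "domain_id": int,
    "domain": str,
    "user_id": int,
    "browser_id": int,
    "user_agent": str,
    "subscribe_time": str,
    "status": int,
    "unsubscribe_time": str,
}


def read_subscribers(raw):
    """Decode a gzip-compressed CSV and cast each known column.

    Assumes a header row and UTF-8 text; empty or absent cells become None.
    """
    text = gzip.decompress(raw).decode("utf-8")
    rows = []
    for record in csv.DictReader(io.StringIO(text)):
        row = {}
        for name, cast in SUBSCRIBER_COLUMNS.items():
            value = record.get(name)
            row[name] = cast(value) if value not in (None, "") else None
        rows.append(row)
    return rows


if __name__ == "__main__":
    # Made-up sample data; the timestamp format is a placeholder.
    sample = (
        "domain_id,domain,user_id,browser_id,user_agent,subscribe_time,status\n"
        "1,example.com,10,20,ExampleAgent/1.0,2024-01-01 00:00:00,0\n"
    )
    print(read_subscribers(gzip.compress(sample.encode("utf-8"))))
```

The time columns are kept as strings, matching the table, so no timestamp format is assumed.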

Select Next.

You can see a preview of your data before running the import by selecting Generate Preview. Data preview is optional and you can safely skip to the next page of the dialog if you choose to.

- Select Next. The Data Preview page opens.

- If you want to preview your data, select Generate Preview.

- Verify the data.

For data placement, select the target database and table where you want your data placed and indicate how often the import should run.

Select Next. Under Storage, create a new database or select an existing one, then create a new table or select an existing one, to hold the imported data.

Select a Database > Select an existing or Create New Database.

Optionally, type a database name.

Select a Table > Select an existing or Create New Table.

Optionally, type a table name.

Choose the method for importing the data.

- Append (default): Data import results are appended to the table. If the table does not exist, it is created.

- Always Replace: Replaces the entire content of an existing table with the import results. If the table does not exist, a new table is created.

- Replace on New Data: Replaces the entire content of an existing table with the import results only when there is new data.
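The difference between the three methods is easiest to see on a toy table. The sketch below models a table as a list of rows. The mode strings and the function `apply_import` are hypothetical labels for this illustration, not TD Console identifiers or API names.

```python
def apply_import(existing, incoming, mode):
    """Return the table rows after one import under the given mode."""
    if mode == "append":
        # Keep the old rows and add the new ones.
        return list(existing) + list(incoming)
    if mode == "always_replace":
        # Discard the old rows, even when nothing new arrived.
        return list(incoming)
    if mode == "replace_on_new_data":
        # Discard the old rows only when the import produced data.
        return list(incoming) if incoming else list(existing)
    raise ValueError(f"unknown mode: {mode}")


if __name__ == "__main__":
    table = ["row1", "row2"]
    for mode in ("append", "always_replace", "replace_on_new_data"):
        print(mode, apply_import(table, ["row3"], mode), apply_import(table, [], mode))
```

Under this model, the two replace methods differ only when an import run returns no rows: Always Replace empties the table, while Replace on New Data leaves it unchanged.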

Select the Timestamp-based Partition Key column. To use a partition key seed other than the default, specify a long or timestamp column as the partitioning time. By default, the time column is upload_time with the add_time filter.

Select the Timezone for your data storage.

Under Schedule, choose when and how often to run the import.

- Select Off.

- Select Scheduling Timezone.

- Select Create & Run Now.

- Select On.

- Select the Schedule. The UI provides these four options: @hourly, @daily, @monthly, or custom cron.

- You can also select Delay Transfer and specify a delay before execution.

- Select Scheduling Timezone.

- Select Create & Run Now.

After your transfer has run, you can see the results of your transfer in Data Workbench > Databases.

You can import data from PUSHCODE by using the td_load> workflow operator. If you have already created a source, you can run it. If you don't want to create a source, you can import the data using a YAML file.

Identify your source.

To obtain a unique ID, open the Source list and search for the source you created.

Open the menu and select "Copy Unique ID".

- Define a workflow task using the td_load> operator.

      +load:
        td_load>: unique_id_of_your_source
        database: ${td.dest_db}
        table: ${td.dest_table}

- Run the workflow.

Identify your yml file. If you need to create the yml file, review Google Cloud Storage Import Integration for reference.

Define a workflow task using the td_load> operator.

    +load:
      td_load>: config/daily_load.yml
      database: ${td.dest_db}
      table: ${td.dest_table}

- Run the workflow.

Visit Treasure Boxes for sample workflow code.

Before setting up the connector, install the most current TD Toolbelt.

The PUSHCODE integration with the CLI uses the Google Cloud Storage integration. Follow the steps in the Google Cloud Storage Import Integration to create an authentication using a JSON key file.

See Define Data Settings to obtain required parameters.