As data protection laws continue to arrive all over the world, ensuring compliance is a priority. OneTrust is a privacy management and marketing compliance company whose services organizations use to comply with global regulations such as GDPR.

The OneTrust input integration collects your customers' consent data from OneTrust and loads it into Treasure Data (TD). Access to OneTrust data on the Treasure Data platform enables your marketing team to enrich your data.

- Basic knowledge of Treasure Data

- Basic knowledge of OneTrust

- (Optional) The GUID of a single Collection Point, if you want to limit the data to that Collection Point. If it is not provided, data is fetched from all Collection Points.
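The Collection Point GUID appears in the Collection Point page URL. If you copy the URL rather than the GUID itself, a small helper can pull the GUID out. This is a minimal sketch that assumes the GUID uses the common 8-4-4-4-12 hexadecimal layout; the helper name and the sample URL path are hypothetical.

```python
import re
from typing import Optional

# Assumption: Collection Point GUIDs use the common 8-4-4-4-12 hexadecimal layout.
GUID_PATTERN = re.compile(
    r"[0-9a-fA-F]{8}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{12}"
)


def guid_from_url(url: str) -> Optional[str]:
    """Return the first GUID-shaped substring of `url`, or None if there is none."""
    match = GUID_PATTERN.search(url)
    return match.group(0) if match else None


if __name__ == "__main__":
    # Hypothetical URL; the real path of a Collection Point page may differ.
    sample = "https://app.onetrust.com/consent/collection-points/3f2b9c1e-8d4a-4e6b-9f0c-1a2b3c4d5e6f"
    print(guid_from_url(sample))
```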

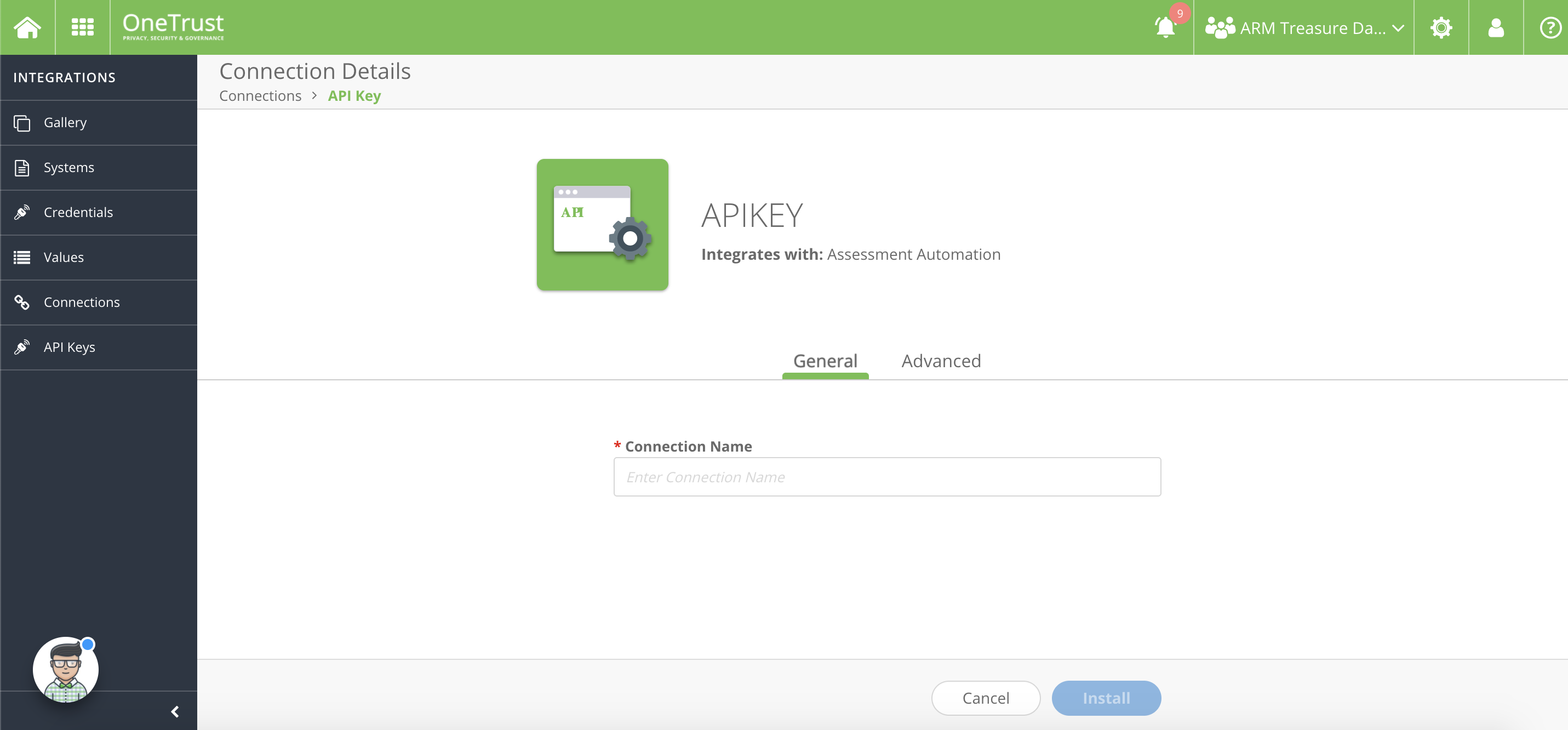

Navigate to https://app.onetrust.com/integrations/api-keys.

Sign in to the OneTrust application if necessary.

Select Add New.

Type a name for the connection in the Connection Name field.

Select Install.

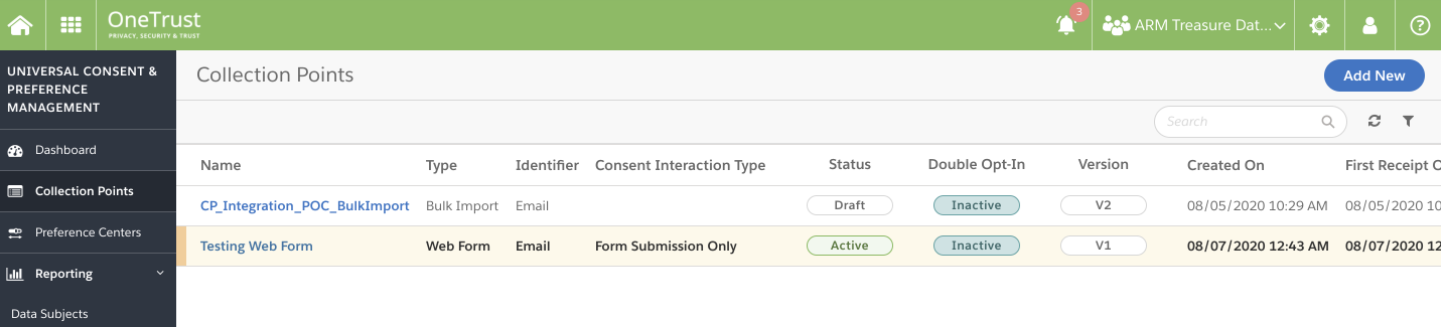

Navigate to https://app.onetrust.com/consent/collection-points. The Collection Point screen displays.

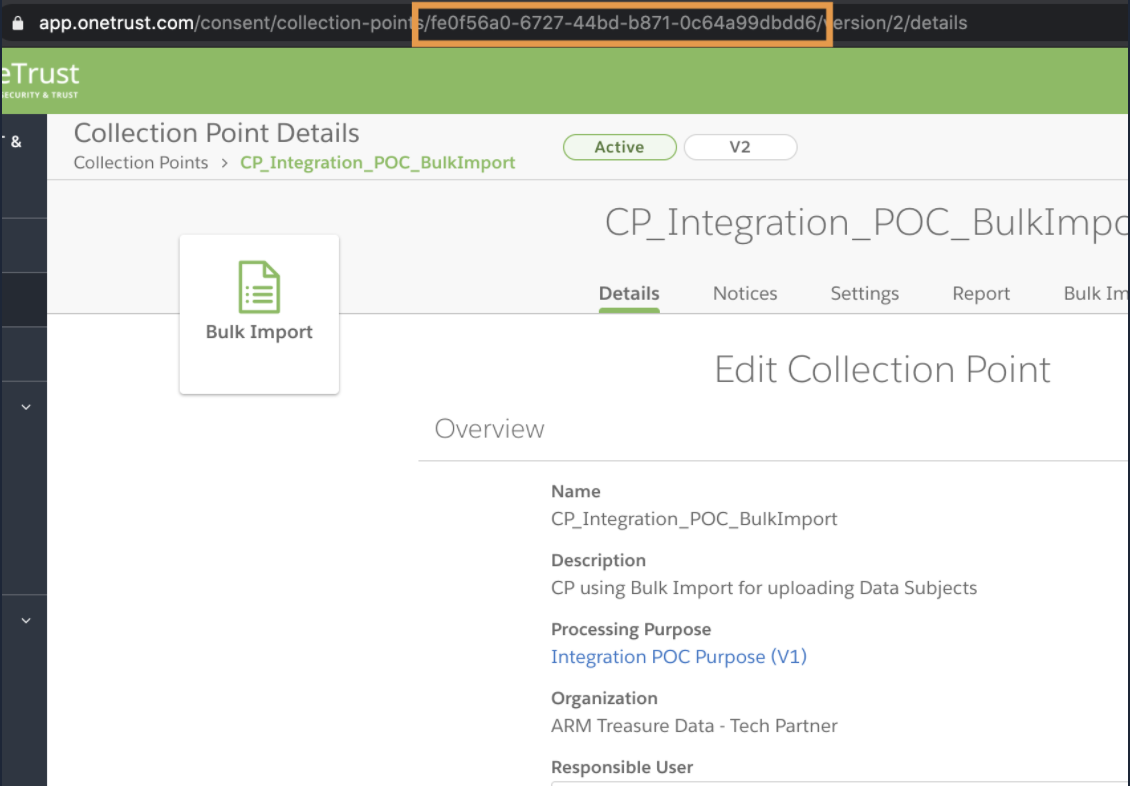

Select the corresponding Collection Point. The GUID is in the page URL. For example:

Create a OneTrust token to store the Client ID and Secret. This is a short-lived token.

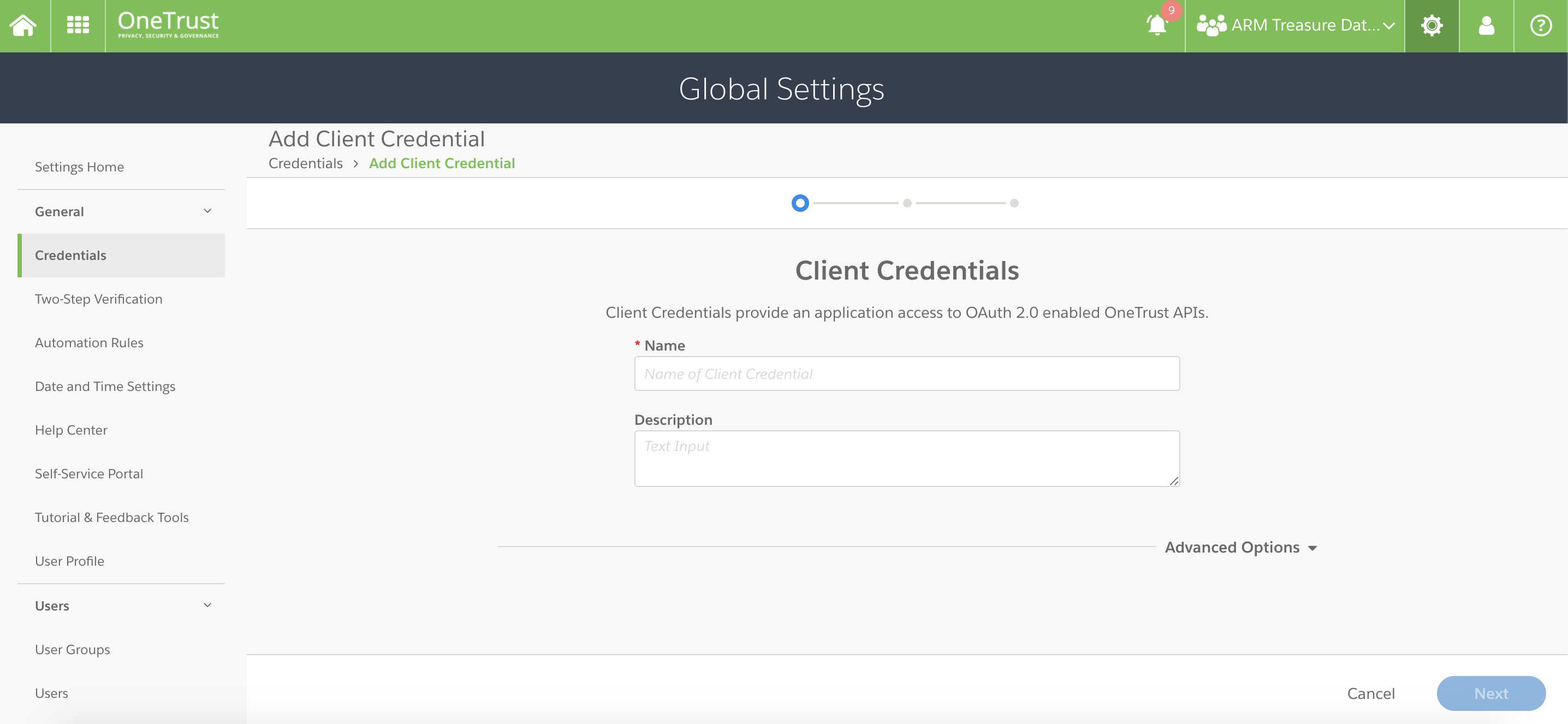

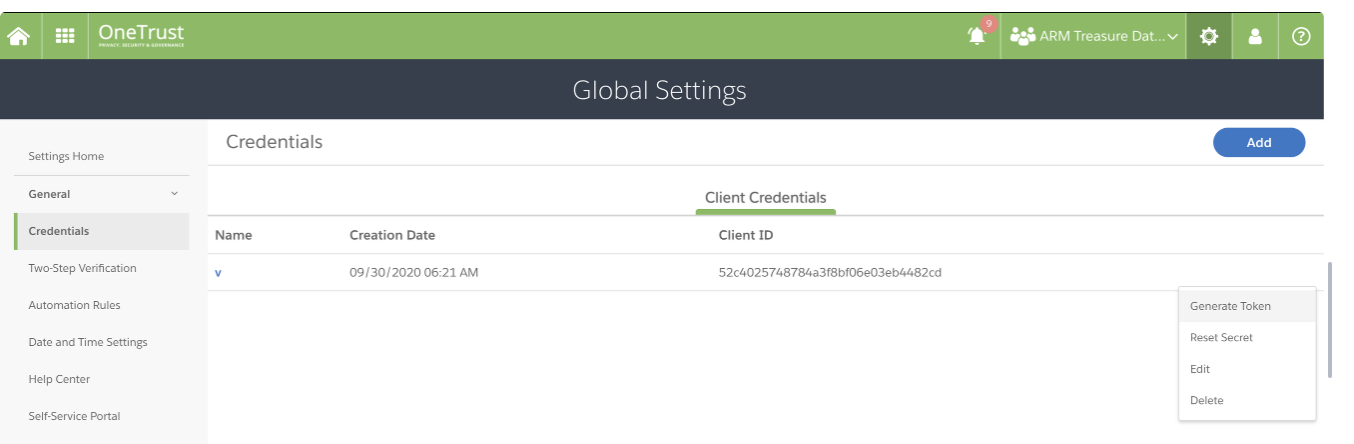

Navigate to https://app.onetrust.com/settings/client-credentials/list.

Select Add.

Type a name and a description for your token.

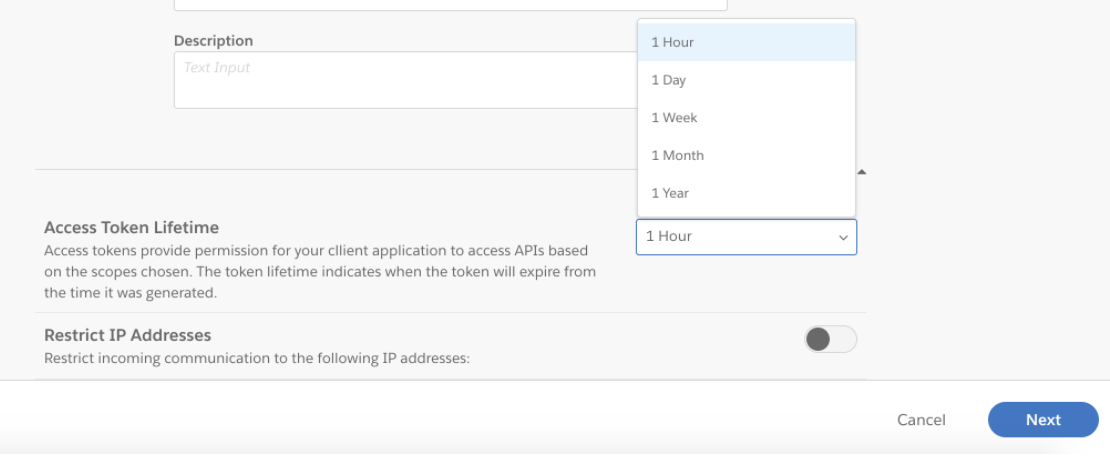

Select an appropriate Access Token Lifetime. The default lifetime is one hour.

Navigate to https://app.onetrust.com/settings/client-credentials/list.

Select your credential.

Select Generate Token.
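Because the generated access token is short-lived, it can help to track when it expires, for example before you create or edit the authentication in TD. This is a minimal sketch that assumes the lifetime counts from the moment you select Generate Token; the helper names and the five-minute safety margin are hypothetical choices.

```python
from datetime import datetime, timedelta, timezone

# Default Access Token Lifetime from the steps above.
DEFAULT_LIFETIME = timedelta(hours=1)


def token_expires_at(generated_at: datetime, lifetime: timedelta = DEFAULT_LIFETIME) -> datetime:
    """Assumes the lifetime counts from the moment the token is generated."""
    return generated_at + lifetime


def needs_new_token(generated_at: datetime, now: datetime,
                    lifetime: timedelta = DEFAULT_LIFETIME,
                    margin: timedelta = timedelta(minutes=5)) -> bool:
    """True if the token has expired or will expire within `margin` (an arbitrary buffer)."""
    return now >= token_expires_at(generated_at, lifetime) - margin


if __name__ == "__main__":
    generated = datetime.now(timezone.utc)
    print("Token expires at", token_expires_at(generated).isoformat())
```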

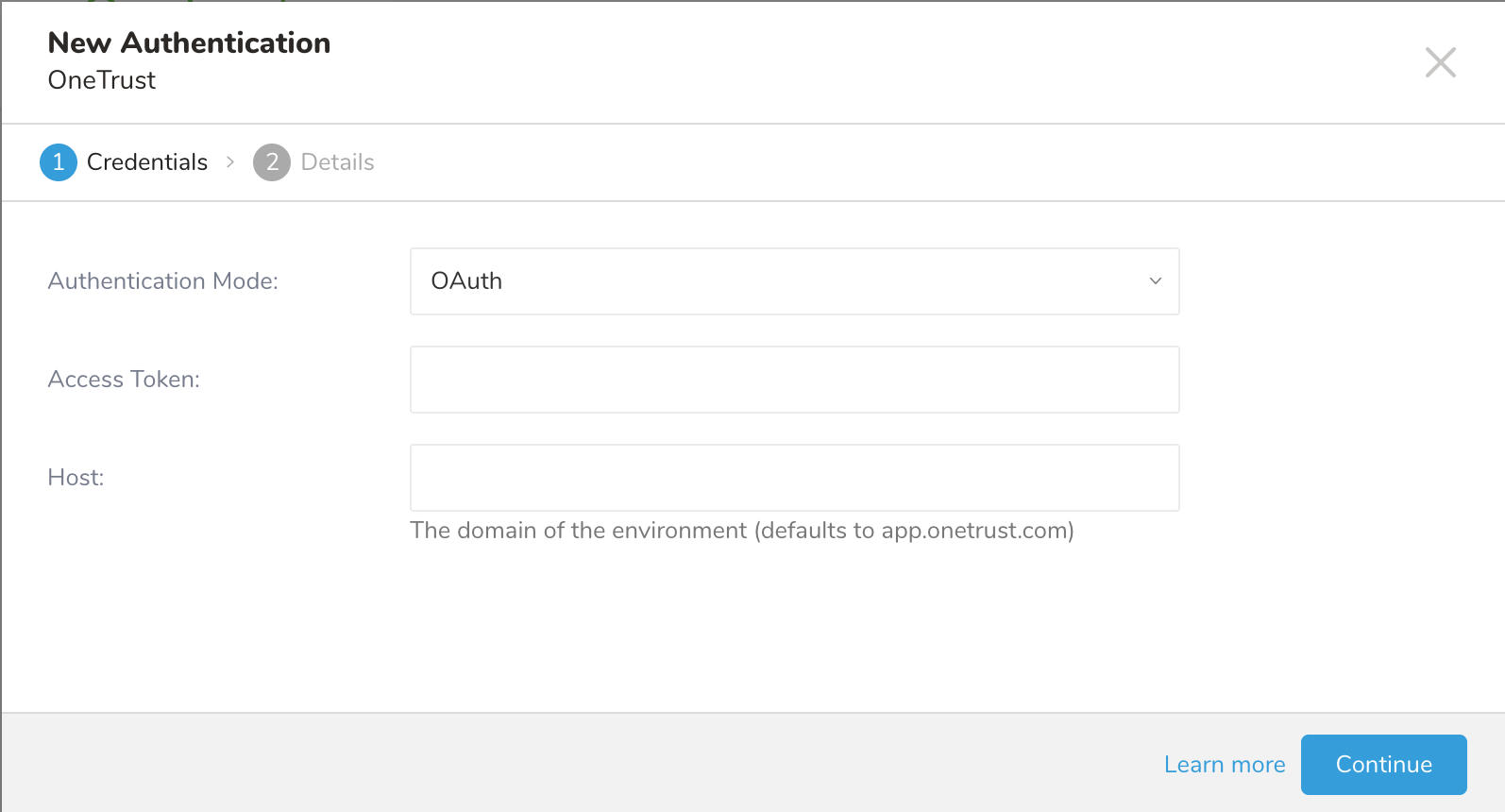

When you configure a data connection, you provide authentication to access the integration. In Treasure Data, you configure the authentication and specify the source information.

Open TD Console.

Navigate to Integrations Hub > Catalog.

Search for and select OneTrust.

Type the access token that you generated in the OneTrust application.

Select Continue.

Type a name for your connection.

Select Done.

After creating the authenticated connection, you are automatically taken to Authentications.

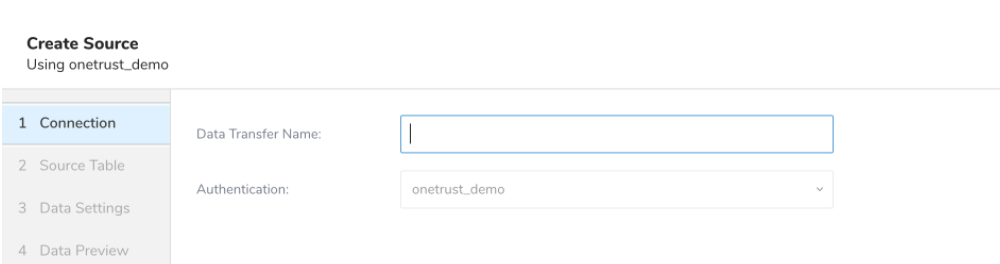

Search for the connection you created.

Select New Source.

Type a name for the data transfer.

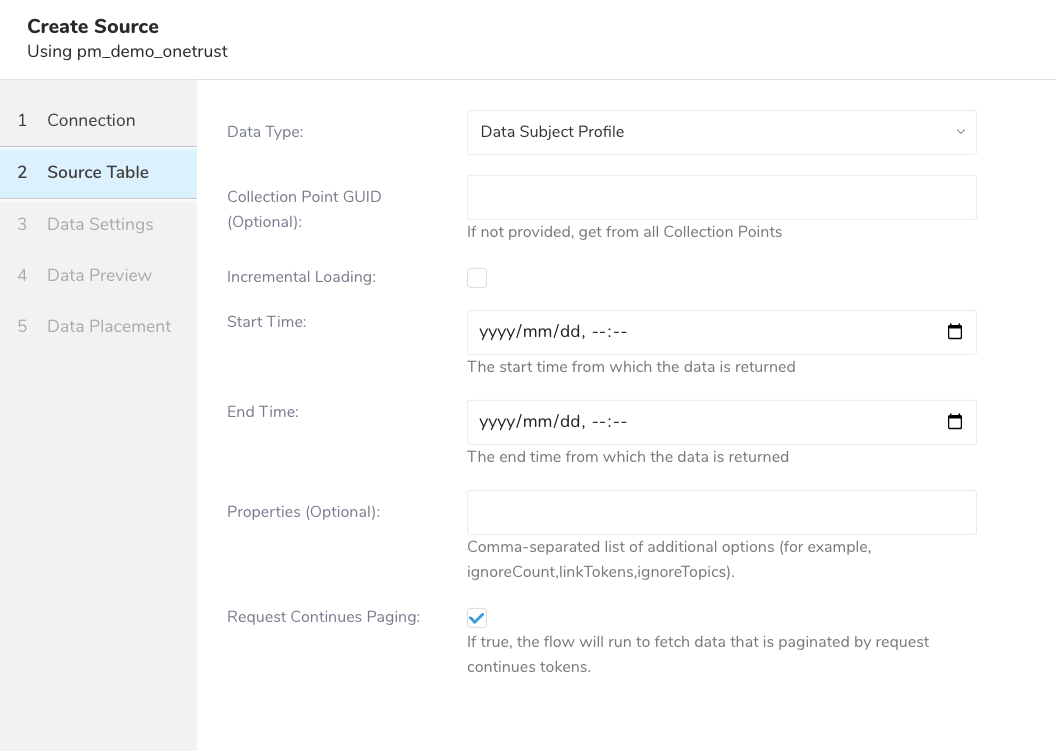

Select Next. The Source Table dialog opens.


Edit the following parameters:

| Parameters | Description |
|---|---|
| Data Type | The Data Type that you want to fetch from OneTrust. |
| Collection Point GUID (Optional) | GUID of a single Collection Point to limit the data to. If it is not provided, data is fetched from all Collection Points. |
| Incremental Loading | Enables incremental loading, in which the Start Time of each subsequent run is calculated automatically. For example, if you start incremental loading with Start Time 2014-10-02T15:01:23Z and End Time 2014-10-03T15:01:23Z, the Start Time of the next job run will be 2014-10-03T15:01:23Z. |
| Start Time (Required when Incremental Loading is selected, and for every API V4 Data Type) | In the UI, you can pick the date and time with your browser's date picker, or type a date in the format your browser expects. For example, Chrome displays a calendar for selecting the year, month, day, hour, and minute; in Safari, you type text such as 2020-10-25T00:00. For CLI configuration, specify a timestamp in RFC 3339 UTC ("Zulu") format, accurate to nanoseconds, for example "2014-10-02T15:01:23Z". |
| Incremental By Modifications of | |
| End Time (Required when Incremental Loading is selected, and for every API V4 Data Type) | In the UI, you can pick the date and time with your browser's date picker, or type a date in the format your browser expects. For example, Chrome displays a calendar for selecting the year, month, day, hour, and minute; in Safari, you type text such as 2020-10-25T00:00. For CLI configuration, specify a timestamp in RFC 3339 UTC ("Zulu") format, accurate to nanoseconds, for example "2014-10-02T15:01:23Z". |
| Properties (Optional) | Comma-separated list of properties to add to the properties query parameter when fetching the Data Subject Profile Data Type. This field is shown only when the Data Subject Profile Data Type is selected. |
| Request Continues Paging (Optional) | If checked, the ingestion process fetches data that is paginated with request continuation tokens. This option is useful when the data volume is large. |
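For CLI configuration, Start Time and End Time are RFC 3339 UTC ("Zulu") timestamps, and in incremental mode the next run starts where the previous one ended (as in the 2014-10-02 to 2014-10-03 example above). The following is a minimal Python sketch of both ideas; the helper names are hypothetical, and this formatter emits whole seconds only, even though the connector accepts up to nanosecond precision.

```python
from datetime import datetime, timezone


def to_zulu(dt: datetime) -> str:
    """Format a timezone-aware datetime as an RFC 3339 UTC ("Zulu") timestamp (whole seconds)."""
    if dt.tzinfo is None:
        raise ValueError("datetime must be timezone-aware")
    return dt.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")


def parse_zulu(value: str) -> datetime:
    """Parse e.g. 2014-10-02T15:01:23Z; fractional seconds up to microseconds are accepted."""
    if not value.endswith("Z"):
        raise ValueError(f"expected a trailing 'Z' (UTC): {value!r}")
    return datetime.fromisoformat(value[:-1] + "+00:00")


def next_start_time(start_time: str, end_time: str) -> str:
    """Incremental loading as described above: the next Start Time is this run's End Time."""
    start, end = parse_zulu(start_time), parse_zulu(end_time)
    if end <= start:
        raise ValueError("End Time must be later than Start Time")
    return to_zulu(end)


if __name__ == "__main__":
    print(next_start_time("2014-10-02T15:01:23Z", "2014-10-03T15:01:23Z"))
```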

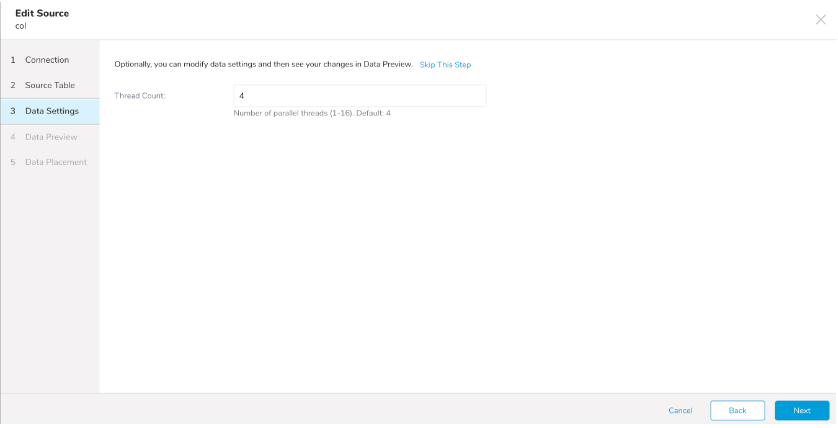

- Select Next. The Data Settings page opens.

- Skip this page of the dialog.

You can see a preview of your data before running the import by selecting Generate Preview. Data preview is optional, and you can safely skip to the next page of the dialog if you choose to.

- Select Next. The Data Preview page opens.

- If you want to preview your data, select Generate Preview.

- Verify the data.

For data placement, select the target database and table where you want your data placed and indicate how often the import should run.

Select Next. Under Storage, you will create a new or select an existing database and create a new or select an existing table for where you want to place the imported data.

Select a Database > Select an existing or Create New Database.

Optionally, type a database name.

Select a Table > Select an existing or Create New Table.

Optionally, type a table name.

Choose the method for importing the data.

- Append (default): Data import results are appended to the table. If the table does not exist, it is created.

- Always Replace: Replaces the entire content of an existing table with the imported data. If the table does not exist, a new table is created.

- Replace on New Data: Replaces the entire content of an existing table with the imported data only when there is new data.
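The three import methods differ only in what happens to rows already in the table. The sketch below models them on an in-memory list of rows; it is a hypothetical illustration, not Treasure Data's implementation. The mode strings match the `out:` values used in load.yml (append, replace, replace_on_new_data).

```python
from typing import List, Optional


def apply_import(existing: Optional[List[dict]], incoming: List[dict],
                 mode: str = "append") -> List[dict]:
    """Return the table contents after an import.

    `existing` is None when the target table does not exist yet; it is then
    treated as an empty table.
    """
    current = existing if existing is not None else []
    if mode == "append":
        return current + incoming
    if mode == "replace":
        return list(incoming)
    if mode == "replace_on_new_data":
        # Keep the old contents when the import produced no new rows.
        return list(incoming) if incoming else current
    raise ValueError(f"unknown mode: {mode!r}")
```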

Select the Timestamp-based Partition Key column. If you want to use a partition key seed other than the default, you can specify a long or timestamp column as the partitioning time. By default, the time column is upload_time, added by the add_time filter.

Select the Timezone for your data storage.

Under Schedule, you can choose when and how often you want to run this transfer.

To run the transfer only once:

- Select Off.

- Select Scheduling Timezone.

- Select Create & Run Now.

To run the transfer on a repeating schedule:

- Select On.

- Select the Schedule. The UI provides four options: @hourly, @daily, @monthly, or custom cron.

- Optionally, select Delay Transfer and add a delay to the execution time.

- Select Scheduling Timezone.

- Select Create & Run Now.

After your transfer has run, you can see the results of your transfer in Data Workbench > Databases.

Before setting up the integration, install the latest version of the TD Toolbelt.

Create a configuration file named load.yml. For example:

```yaml
in:
  type: onetrust
  base_url: ***************
  auth_method: oauth
  access_token: ***************
  data_type: data_subject_profile
  incremental: false
  start_time: 2025-01-30T00:49:04Z
  end_time: 2025-02-28T17:00:00.000Z
  thread_count: 5
out:
  mode: replace
```

This example gets a list of Data Subject Profile objects. The start_time specifies the date and time to start getting data from. In this case, the import starts pulling data from January 30, 2025, at 00:49:04 UTC.
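If you generate load.yml from a script, it can help to check the `in:` section against the requirements in the Parameters Reference before running td connector:preview. The following is a minimal, hypothetical helper (not part of the TD Toolbelt) that works on the `in:` section as a Python dict, so it needs no YAML library; it checks only a subset of the documented rules.

```python
from typing import Dict, List

# API V4 Data Types, which require both start_time and end_time.
V4_DATA_TYPES = {"data_subject_profile_v4", "link_token_api_v4", "purpose_api_v4"}


def validate_in_section(cfg: Dict[str, object]) -> List[str]:
    """Return human-readable problems found in the `in:` section (empty list = none found)."""
    problems: List[str] = []
    if cfg.get("type") != "onetrust":
        problems.append('type must be "onetrust"')

    auth = cfg.get("auth_method", "oauth")  # "oauth" is the documented default
    if auth == "oauth":
        if not cfg.get("access_token"):
            problems.append('access_token is required when auth_method is "oauth"')
    elif auth == "api_key":
        if not cfg.get("api_key"):
            problems.append('api_key is required when auth_method is "api_key"')
    else:
        problems.append(f"unsupported auth_method: {auth!r}")

    data_type = cfg.get("data_type")
    if not data_type:
        problems.append("data_type is required")

    # Incremental loading and every API V4 Data Type need a time range.
    if cfg.get("incremental") is True or data_type in V4_DATA_TYPES:
        for key in ("start_time", "end_time"):
            if not cfg.get(key):
                problems.append(f"{key} is required for incremental loading and API V4 Data Types")
    return problems


if __name__ == "__main__":
    example = {
        "type": "onetrust",
        "auth_method": "oauth",
        "access_token": "***",  # placeholder, as in the example above
        "data_type": "data_subject_profile",
        "incremental": False,
        "start_time": "2025-01-30T00:49:04Z",
        "end_time": "2025-02-28T17:00:00.000Z",
    }
    print(validate_in_section(example))
```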

Parameters Reference

| Name | Description | Value | Default Value | Required |
|---|---|---|---|---|
| type | The source of the import. | "onetrust" | | Yes |
| base_url | Base URL of the OneTrust server. | String | "app.onetrust.com" | Yes |
| auth_method | Authentication method: "oauth" or "api_key". | String | "oauth" | Yes |
| access_token | OAuth access token. Required when auth_method is "oauth". | String | | Yes, when auth_method is "oauth" |
| api_key | API key. Required when auth_method is "api_key". | String | | Yes, when auth_method is "api_key" |
| data_type | The Data Type that you want to fetch from OneTrust. | String. Supported values include data_subject_api, collection_point, data_subject_profile, data_subject_profile_v4, link_token_api_v4, and purpose_api_v4. | | Yes |
| collection_point_guid | (Optional) GUID of a single Collection Point to limit the data to. If it is not provided, data is fetched from all Collection Points. Shown when the Data Type is Data Subject Profile or Purpose (API V4). | String | | No |
| incremental | Enables incremental loading, in which the Start Time of each subsequent run is calculated automatically. For example, if you start incremental loading with Start Time 2014-10-02T15:01:23Z and End Time 2014-10-03T15:01:23Z, the Start Time of the next job run will be 2014-10-03T15:01:23Z. | Boolean | false | Yes |
| start_time | In the UI, pick the date and time with your browser's date picker (for example, a calendar in Chrome) or type it in the format your browser expects (for example, 2020-10-25T00:00 in Safari). For CLI configuration, specify a timestamp in RFC 3339 UTC ("Zulu") format, accurate to nanoseconds, for example "2014-10-02T15:01:23Z". | Timestamp | | Yes, when Incremental Loading is selected. No for API V1 Data Types (data_subject_api and collection_point). Yes for API V4 Data Types (data_subject_profile_v4, link_token_api_v4, and purpose_api_v4). |
| incremental_type | The time type that you want to use when fetching data from OneTrust. | String | "data_subject_profile" | Yes, when Incremental Loading is selected for the data_subject_profile Data Type |
| data_subject_properties | (Optional) Comma-separated list of properties to add to the properties query parameter when fetching the Data Subject Profile Data Type. Shown only when the Data Type is Data Subject Profile. | String | | No |
| end_time | In the UI, pick the date and time with your browser's date picker (for example, a calendar in Chrome) or type it in the format your browser expects (for example, 2020-10-25T00:00 in Safari). For CLI configuration, specify a timestamp in RFC 3339 UTC ("Zulu") format, accurate to nanoseconds, for example "2014-10-02T15:01:23Z". | Timestamp | | No for API V1 Data Types (data_subject_api and collection_point). Yes for API V4 Data Types (data_subject_profile_v4, link_token_api_v4, and purpose_api_v4). |
| request_continues_paging | If true, the ingestion process fetches data that is paginated with request continuation tokens. This option is useful when the data volume is large. | Boolean | false | No |
| ingest_duration_minutes | Sets the time range, in minutes, of the ingestion duration when data_type is data_subject_profile and request_continues_paging is true. | Integer | 1440 | No |
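The reference does not spell out how ingest_duration_minutes is applied. One plausible reading, assumed here and not confirmed by this document, is that the start_time to end_time range is processed in consecutive windows of that many minutes (the default of 1440 minutes is one day). A minimal sketch of that windowing, with a hypothetical function name:

```python
from datetime import datetime, timedelta
from typing import Iterator, Tuple


def ingestion_windows(start: datetime, end: datetime,
                      duration_minutes: int = 1440) -> Iterator[Tuple[datetime, datetime]]:
    """Split [start, end) into consecutive windows of at most duration_minutes each."""
    if duration_minutes <= 0:
        raise ValueError("duration_minutes must be positive")
    step = timedelta(minutes=duration_minutes)
    cursor = start
    while cursor < end:
        window_end = min(cursor + step, end)  # the last window may be shorter
        yield cursor, window_end
        cursor = window_end


if __name__ == "__main__":
    for window in ingestion_windows(datetime(2025, 1, 1), datetime(2025, 1, 3, 12)):
        print(window)
```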

To preview the data, use the td connector:preview command.

```
$ td connector:preview load.yml
```

Submit the load job with the td connector:issue command. It might take a couple of hours, depending on the size of the data. Be sure to specify the Treasure Data database and table where the data should be stored. Treasure Data also recommends specifying the --time-column option because Treasure Data’s storage is partitioned by time (see data partitioning). If this option is not provided, the data connector chooses the first long or timestamp column as the partitioning time. The column specified by --time-column must be of type long or timestamp.

If your data doesn’t have a time column, you can add a time column by using the add_time filter option. For more details, see the documentation for the add_time Filter Function.
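The rules for choosing the partitioning time column can be summarized as a small decision function. This is a simplified, hypothetical model: it takes a schema as a column-name-to-type mapping in column order, and the priority it gives to a field named time (which, per this document, removes the need for --time-column) over other long or timestamp columns is an assumption.

```python
from typing import Dict, Optional

TIME_TYPES = ("long", "timestamp")


def pick_partition_column(schema: Dict[str, str],
                          time_column: Optional[str] = None) -> Optional[str]:
    """Return the column used as partitioning time, or None if there is none.

    schema maps column name -> type name, in column order (dicts keep insertion order).
    None means you would need to add a time column, for example with the add_time filter.
    """
    if time_column is not None:
        # An explicit --time-column must name a long or timestamp column.
        if schema.get(time_column) not in TIME_TYPES:
            raise ValueError(f"--time-column {time_column!r} must be a long or timestamp column")
        return time_column
    if "time" in schema:
        # A field called time is used without --time-column.
        return "time"
    for name, col_type in schema.items():
        if col_type in TIME_TYPES:
            return name  # first long or timestamp column
    return None
```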

```
$ td connector:issue load.yml --database td_sample_db --table td_sample_table \
    --time-column created_at
```

The connector:issue command assumes that you have already created a database (td_sample_db) and a table (td_sample_table). If the database or the table does not exist in TD, this command fails. Create the database and table manually, or use the --auto-create-table option with the td connector:issue command to create them automatically.

```
$ td connector:issue load.yml --database td_sample_db --table td_sample_table \
    --time-column created_at --auto-create-table
```

The data connector does not sort records on the server side. To use time-based partitioning effectively, sort the records in your files beforehand.
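If you drive the import from a script, you can assemble the td connector:issue command line from the options used in the commands above. This is a minimal sketch with a hypothetical function name; it only builds the argument list, which you would pass to `subprocess.run` to actually execute td.

```python
import shlex
from typing import List, Optional


def connector_issue_args(config: str, database: str, table: str,
                         time_column: Optional[str] = None,
                         auto_create_table: bool = False) -> List[str]:
    """Build the argv for `td connector:issue` using only the options shown above."""
    args = ["td", "connector:issue", config, "--database", database, "--table", table]
    if time_column:
        args += ["--time-column", time_column]
    if auto_create_table:
        args.append("--auto-create-table")
    return args


if __name__ == "__main__":
    argv = connector_issue_args("load.yml", "td_sample_db", "td_sample_table",
                                time_column="created_at", auto_create_table=True)
    # To run it: subprocess.run(argv, check=True)
    print(shlex.join(argv))
```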

If you have a field called time, you don’t have to specify the --time-column option.

```
$ td connector:issue load.yml --database td_sample_db --table td_sample_table
```

Specify the import mode in the out: section of the load.yml file. The out: section controls how data is imported into a Treasure Data table. For example, you may choose to append data to or replace data in an existing table.

| Mode | Description | Examples |
|---|---|---|
| Append | Records are appended to the target table. | `mode: append` in the `out:` section |
| Always Replace | Replaces data in the target table. Any manual schema changes made to the target table remain intact. | `mode: replace` in the `out:` section |
| Replace on new data | Replaces data in the target table only when there is new data to import. | `mode: replace_on_new_data` in the `out:` section |

You can schedule periodic data connector execution for incremental file import. The Treasure Data scheduler is optimized to ensure high availability.

For the scheduled import, you can import all files that match the specified prefix and one of these conditions:

- If use_modified_time is disabled, the last path is saved for the next execution. On the second and subsequent runs, the integration only imports files that come after the last path in alphabetical order.

- Otherwise, the time that the job is executed is saved for the next execution. On the second and subsequent runs, the connector only imports files that were modified after that execution time in alphabetical order.
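The two incremental conditions above can be sketched as a filter over a listing of file paths and modification times. This is a hypothetical model, not the connector's implementation; it assumes "alphabetical order" means plain string ordering of paths and that the saved last path or last execution time is passed in by the caller.

```python
from datetime import datetime
from typing import Dict, List, Optional


def files_for_next_run(files: Dict[str, datetime], prefix: str, use_modified_time: bool,
                       last_path: Optional[str] = None,
                       last_run: Optional[datetime] = None) -> List[str]:
    """Return the paths to import on this run, in alphabetical (string) order.

    files maps each path to its last-modified time. On the first run,
    last_path / last_run are None and every matching file is selected.
    """
    candidates = sorted(path for path in files if path.startswith(prefix))
    if not use_modified_time:
        # Saved-last-path mode: only paths that sort after the saved one.
        return [p for p in candidates if last_path is None or p > last_path]
    # Modified-time mode: only files changed after the saved execution time.
    return [p for p in candidates if last_run is None or files[p] > last_run]
```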

A new schedule can be created using the td connector:create command.

```
$ td connector:create daily_import "10 0 * * *" \
    td_sample_db td_sample_table load.yml
```

Treasure Data also recommends specifying the --time-column option because Treasure Data’s storage is partitioned by time (see data partitioning).

```
$ td connector:create daily_import "10 0 * * *" \
    td_sample_db td_sample_table load.yml \
    --time-column created_at
```

The cron parameter also accepts three special options: @hourly, @daily, and @monthly.
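The schedule "10 0 * * *" uses the standard five-field cron layout (minute, hour, day of month, month, day of week), so it runs at 00:10 every day in the schedule's timezone. The sketch below labels those fields and expands the three special options into the cron expressions they conventionally stand for; that these expansions match the Treasure Data scheduler exactly is an assumption, and the function name is hypothetical.

```python
from typing import Dict

# Conventional cron equivalents of the special options (assumed to match TD's meaning).
SPECIAL_SCHEDULES = {
    "@hourly": "0 * * * *",
    "@daily": "0 0 * * *",
    "@monthly": "0 0 1 * *",
}
FIELD_NAMES = ("minute", "hour", "day_of_month", "month", "day_of_week")


def describe_cron(expr: str) -> Dict[str, str]:
    """Expand a special option if needed and label the five cron fields."""
    expanded = SPECIAL_SCHEDULES.get(expr.strip(), expr)
    fields = expanded.split()
    if len(fields) != 5:
        raise ValueError(f"expected 5 cron fields, got {len(fields)}: {expr!r}")
    return dict(zip(FIELD_NAMES, fields))


if __name__ == "__main__":
    print(describe_cron("10 0 * * *"))
```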

By default, the schedule is set up in the UTC timezone. You can set the schedule in a different timezone by using the -t or --timezone option. The --timezone option supports only extended timezone names such as Asia/Tokyo and America/Los_Angeles. Timezone abbreviations such as PST and CST are not supported and might lead to unexpected schedules.