This article describes how to use the data connector for MySQL, which allows you to directly import data from MySQL to Treasure Data.

For sample workflows on importing data from MySQL, view Treasure Boxes.

Before you begin, you need:

- Basic knowledge of Treasure Data

- Basic knowledge of MySQL

- A MySQL instance that is reachable from Treasure Data

If you are using MySQL Community Server 5.6 or 5.7 and want to use SSL, set the connection parameter `enabledTLSProtocols` to `TLSv1.2`. This resolves a compatibility issue with the connector's underlying library. For other MySQL versions, the connector automatically tries to use the highest available TLS version.
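The version rule above can be written as a small helper. This is a minimal sketch that assumes you already know the server version string (for example, from `SELECT VERSION()`). The function name `tls_options_for` is hypothetical. The helper checks only the version number, so it cannot distinguish Community Server from other editions.

```python
from typing import Dict


def tls_options_for(server_version: str, use_ssl: bool) -> Dict[str, str]:
    """Return extra connection options for the MySQL 5.6/5.7 TLS workaround.

    Hypothetical helper: it checks only the major.minor version, not the edition.
    """
    if not use_ssl:
        return {}
    # "5.7.44-log" -> "5.7"
    major_minor = ".".join(server_version.split(".")[:2])
    if major_minor in ("5.6", "5.7"):
        return {"enabledTLSProtocols": "TLSv1.2"}
    # Other versions: no override, so the highest TLS version is negotiated.
    return {}


print(tls_options_for("5.7.44-log", use_ssl=True))
```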

When you configure a data connection, you provide authentication to access the integration. In Treasure Data, you configure the authentication and then specify the source information.

Open TD Console.

Navigate to Integrations Hub > Catalog.

Search for and select MySQL.

Select Create.

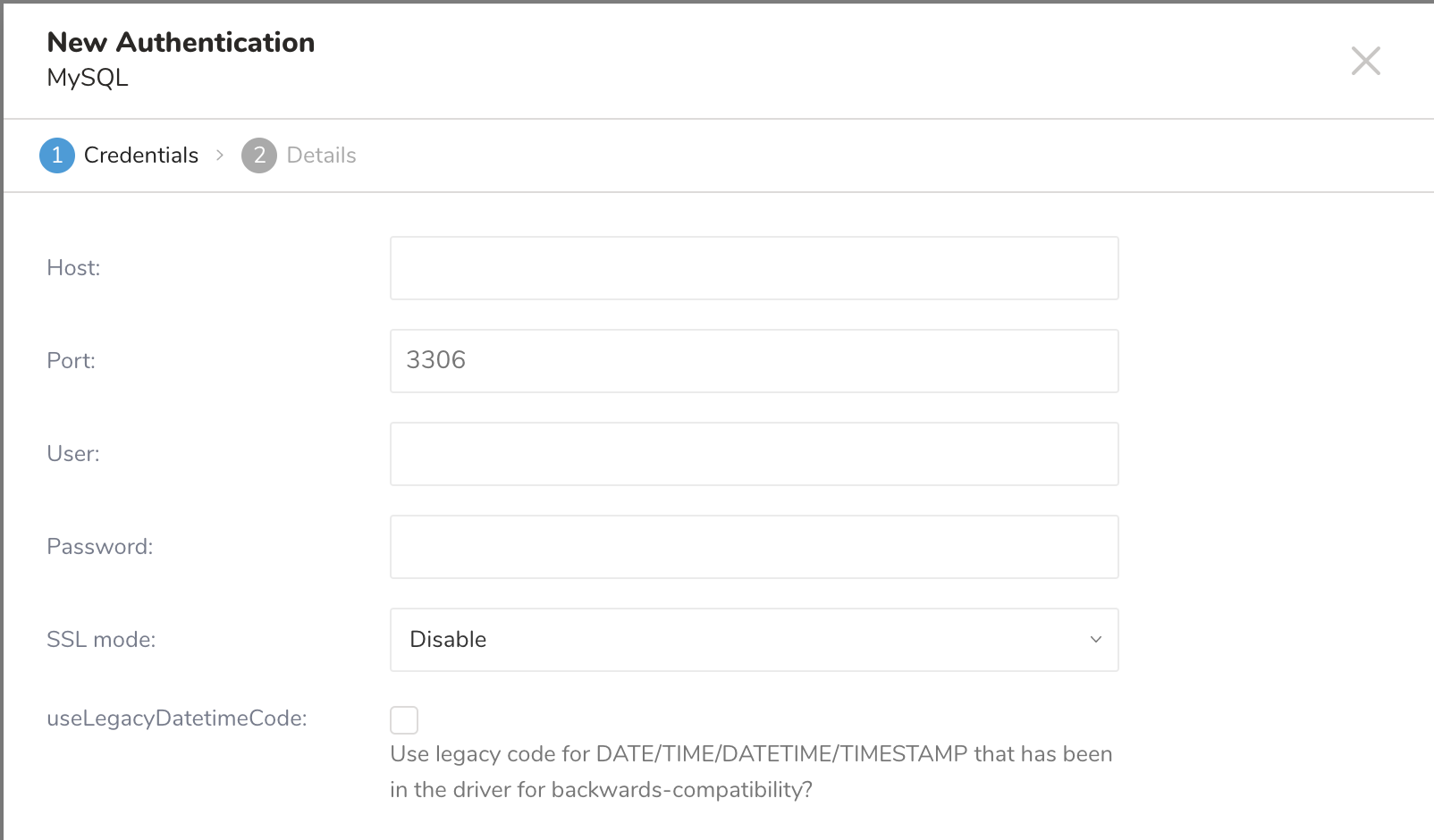

The following dialog opens.

Set the following parameters. Select Continue.

| Parameters | Description |

|---|---|

| Host | The host information of the remote database, eg. an IP address. |

| Port | The connection port on the remote instance,MySQL default is 3306. |

| Username | Username to connect to the remote database. |

| Password | Password to connect to the remote database. |

| SSL Mode | Learn more about SSL mode |

| useLegacyDatetimeCode | Learn more about useLegacyDatetimeCode |

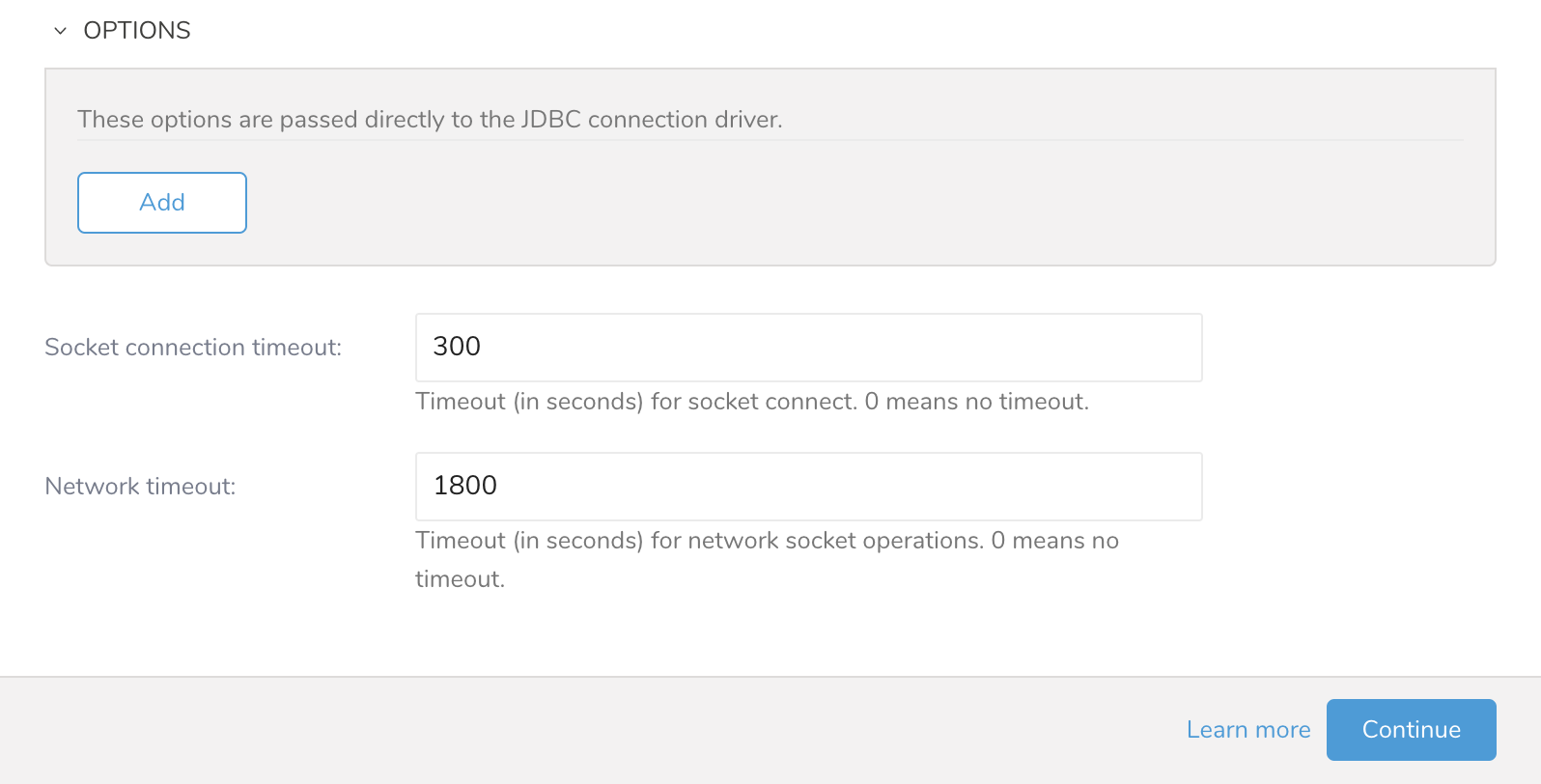

| OPTIONS | |

| JDBC Connection options | Any special JDBC connections required by remote database. |

| Socket connection timeout | Timeout (in seconds) for socket connection (default is 300). |

| Network timeout | Timeout (in seconds) for network socket operations. 0 means no timeout. |
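To help you get your bearings, the connection settings roughly correspond to a MySQL Connector/J style JDBC URL plus a few timeouts. The sketch below is illustrative only. The class and field names are hypothetical, and it assumes that JDBC Connection options are rendered as `key=value` URL parameters. You do not configure the connector this way; you enter these values in the dialog.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional
from urllib.parse import urlencode


@dataclass
class MySQLConnectionSettings:
    """Hypothetical model of the connection dialog fields."""
    host: str
    username: str
    password: str = field(repr=False)         # kept out of repr() and the URL
    port: int = 3306                          # MySQL default port
    jdbc_options: Dict[str, str] = field(default_factory=dict)
    socket_connect_timeout_s: int = 300       # Socket connection timeout default
    network_timeout_s: int = 0                # 0 means no timeout

    def jdbc_url(self, database: str = "") -> str:
        """Build a Connector/J style URL; options become query parameters."""
        url = f"jdbc:mysql://{self.host}:{self.port}/{database}"
        if self.jdbc_options:
            url += "?" + urlencode(self.jdbc_options)
        return url

    def network_timeout(self) -> Optional[int]:
        """Return None when the network timeout is disabled (0)."""
        return None if self.network_timeout_s == 0 else self.network_timeout_s


settings = MySQLConnectionSettings(
    host="db.example.com",
    username="td_user",
    password="secret",
    jdbc_options={"enabledTLSProtocols": "TLSv1.2"},
)
print(settings.jdbc_url("sales"))
```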

- Type a name for your connection.

- Select Done.

After creating the authenticated connection, you are automatically taken to Authentications.

- Search for the connection you created.

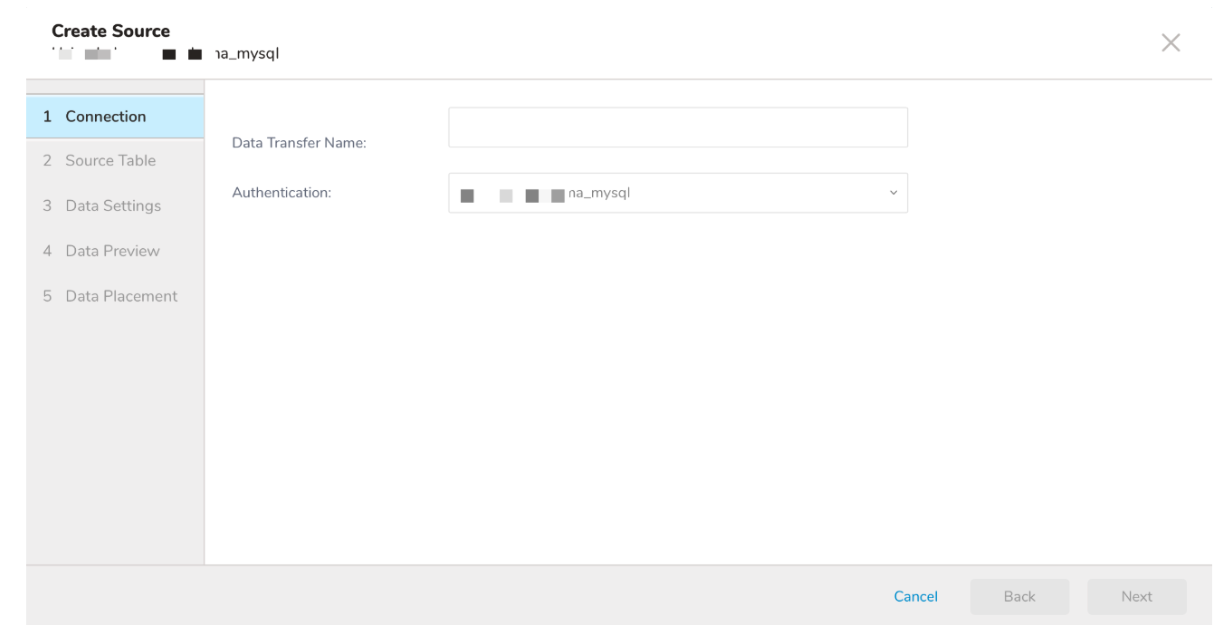

- Select New Source.

Type a name for your source in the Data Transfer field.

Provide the details of the database and table you would like to ingest data from.

- Select Next.

- Edit the following parameters.

| Parameters | Description |
|---|---|
| Database name | The name of the database you are transferring data from (for example, `your_database_name`). |
| Use custom SELECT query? | Select this if you need more than a simple `SELECT (columns) FROM table WHERE (condition)` query. |
| SELECT columns | The specific columns to import. If you leave this empty, all columns are transferred. |
| Table | The table from which you want to import data. |
| WHERE condition | An optional filter on the rows retrieved from the table, written as you would write it in a WHERE clause. |
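When you do not use a custom query, the Table, SELECT columns, and WHERE condition fields together describe a single query. The following sketch shows roughly how they combine. It assumes no identifier quoting or escaping, and the connector's actual generated SQL may differ. The function `build_select` is hypothetical.

```python
from typing import Optional, Sequence


def build_select(table: str,
                 columns: Optional[Sequence[str]] = None,
                 where: Optional[str] = None,
                 custom_query: Optional[str] = None) -> str:
    """Combine the source fields into one SELECT statement (illustrative only)."""
    if custom_query:
        # A custom SELECT query is used as-is instead of the other fields.
        return custom_query.strip()
    # Empty SELECT columns means all columns are transferred.
    column_list = ", ".join(columns) if columns else "*"
    sql = f"SELECT {column_list} FROM {table}"
    if where:
        # The WHERE condition is the body of the WHERE clause.
        sql += f" WHERE {where}"
    return sql


print(build_select("orders", ["id", "amount"], "amount > 100"))
```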

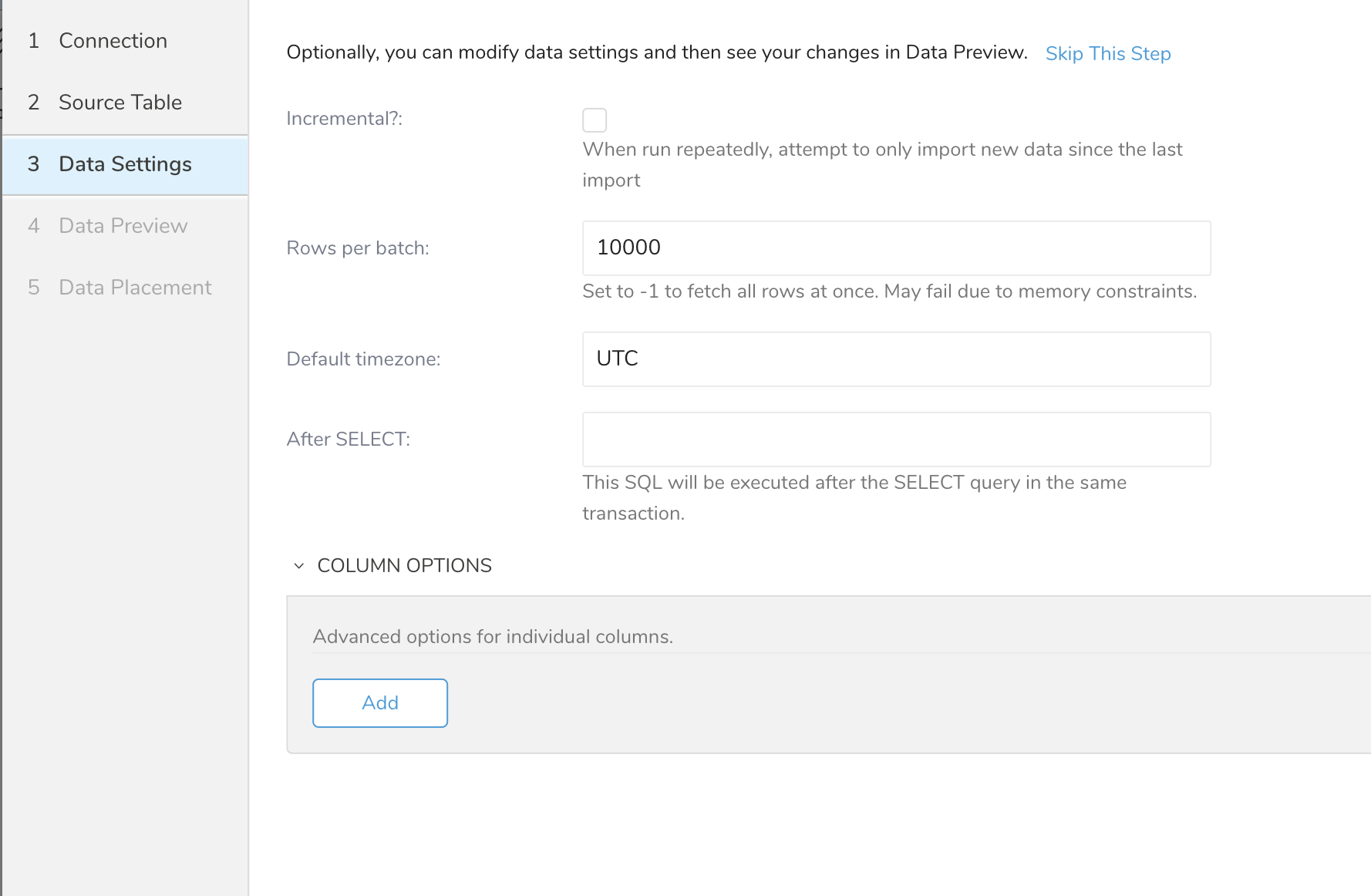

Select Next. The Data Settings page opens.

Optionally, edit the data settings or skip this page of the dialog.

| Parameters | Description |
|---|---|
| Incremental | If you run this transfer repeatedly, select this checkbox to import only the data added since the previous run. |
| Rows per batch | Extremely large datasets can cause memory issues and failed jobs. This setting breaks the import job into batches of the specified number of rows, which reduces the chance of memory issues and failed jobs. |
| Default timezone | The timezone used during the import. The default is UTC, but you can change it if needed. |
| After SELECT | SQL that is executed after the SELECT query, in the same transaction. |
| Column Options | If you need to change a column's type before importing it, select this option and enter the relevant column details. Select Save to save any advanced settings you have entered. |
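The Incremental and Rows per batch settings can be illustrated together. The sketch below uses Python's built-in `sqlite3` as a stand-in for MySQL so that it runs without a server. It assumes a hypothetical `events` table whose increasing `id` column records how far previous runs got. This is not the connector's implementation. It shows only the idea of remembering the last imported value and reading rows in fixed-size batches rather than all at once.

```python
import sqlite3
from typing import Iterator, List, Optional, Tuple


def fetch_in_batches(cursor: sqlite3.Cursor, rows_per_batch: int) -> Iterator[List[tuple]]:
    """Yield rows in batches of at most rows_per_batch."""
    while True:
        batch = cursor.fetchmany(rows_per_batch)
        if not batch:
            return
        yield batch


def incremental_import(conn: sqlite3.Connection, last_id: Optional[int],
                       rows_per_batch: int) -> Tuple[List[tuple], Optional[int]]:
    """Import only rows with id greater than last_id; return rows and new last_id."""
    sql = "SELECT id, name FROM events"
    params: tuple = ()
    if last_id is not None:
        sql += " WHERE id > ?"
        params = (last_id,)
    sql += " ORDER BY id"
    imported: List[tuple] = []
    for batch in fetch_in_batches(conn.execute(sql, params), rows_per_batch):
        imported.extend(batch)  # a real loader would upload each batch here
    new_last_id = imported[-1][0] if imported else last_id
    return imported, new_last_id


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO events VALUES (?, ?)", [(1, "a"), (2, "b"), (3, "c")])
rows, last_id = incremental_import(conn, None, rows_per_batch=2)     # first run: ids 1-3
conn.execute("INSERT INTO events VALUES (4, 'd')")
rows, last_id = incremental_import(conn, last_id, rows_per_batch=2)  # next run: id 4 only
```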

You can see a preview of your data before running the import by selecting Generate Preview. Data preview is optional and you can safely skip to the next page of the dialog if you choose to.

- Select Next. The Data Preview page opens.

- If you want to preview your data, select Generate Preview.

- Verify the data.

For data placement, select the target database and table where you want your data placed and indicate how often the import should run.

Select Next. Under Storage, create a new database or select an existing one, and create a new table or select an existing one, to hold the imported data.

Select a Database > Select an existing or Create New Database.

Optionally, type a database name.

Select a Table > Select an existing or Create New Table.

Optionally, type a table name.

Choose the method for importing the data.

- Append (default): Data import results are appended to the table. If the table does not exist, it is created.

- Always Replace: Replaces the entire content of an existing table with the result output of the query. If the table does not exist, a new table is created.

- Replace on New Data: Replaces the entire content of an existing table with the result output only when there is new data.
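The three import methods differ only in what ends up in the target table. The sketch below models a table as a Python list, with `None` meaning that the table does not exist yet. The mode names are hypothetical labels for the UI options. This article does not say what Replace on New Data does when the table is missing and there is no new data, so the empty-table result in that case is an assumption.

```python
from typing import Dict, List, Optional

Row = Dict[str, object]


def apply_import(existing: Optional[List[Row]], new_rows: List[Row], mode: str) -> List[Row]:
    """Return the table contents after an import (existing=None: table missing)."""
    if mode == "append":
        # Append: new rows are added; a missing table is created first.
        return (existing or []) + new_rows
    if mode == "always_replace":
        # Always Replace: the result replaces the table, even when it is empty
        # (implied by contrast with Replace on New Data).
        return list(new_rows)
    if mode == "replace_on_new_data":
        # Replace on New Data: replace only when the import produced rows.
        if new_rows:
            return list(new_rows)
        # Assumption: a missing table with no new data ends up empty.
        return existing if existing is not None else []
    raise ValueError(f"unknown mode: {mode}")


print(apply_import([{"id": 1}], [], "replace_on_new_data"))
```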

Select the Timestamp-based Partition Key column. If you want a partition key other than the default, you can specify a long or timestamp column as the partitioning time. By default, the time column is `upload_time`, added with the `add_time` filter.

Select the Timezone for your data storage.
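The partition key and timezone settings determine how each row's time value is computed. The sketch below assumes that a long column holds Unix seconds and that a timestamp without timezone information is interpreted in the selected timezone. When no column is selected, it falls back to the upload time, which mirrors the default `add_time` behavior. The function `partition_time` is hypothetical, and the example uses a fixed UTC+9 offset in place of a named timezone.

```python
from datetime import datetime, timedelta, timezone, tzinfo
from typing import Optional, Union


def partition_time(value: Optional[Union[int, datetime]],
                   tz: tzinfo,
                   upload_time: datetime) -> int:
    """Return the row's partition time in Unix seconds (hypothetical helper).

    value: the partition key column value (long or timestamp), or None.
    tz: timezone used for timestamps that carry no timezone information.
    upload_time: timezone-aware upload time, used as the default.
    """
    if value is None:
        # Default: the upload time, like the add_time filter.
        return int(upload_time.timestamp())
    if isinstance(value, int):
        # Assumption: long columns already hold Unix seconds.
        return value
    if value.tzinfo is None:
        # Naive timestamps are interpreted in the selected timezone.
        value = value.replace(tzinfo=tz)
    return int(value.timestamp())


UTC_PLUS_9 = timezone(timedelta(hours=9))  # fixed-offset stand-in for Asia/Tokyo
# 2024-01-01 00:00 at UTC+9 is 2023-12-31 15:00 UTC
print(partition_time(datetime(2024, 1, 1), UTC_PLUS_9, datetime.now(timezone.utc)))  # prints 1704034800
```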

Under Schedule, you can choose when and how often you want to run this query.

To run the transfer only once:

- Select Off.

- Select Scheduling Timezone.

- Select Create & Run Now.

To run the transfer on a recurring schedule:

- Select On.

- Select the Schedule. The UI provides four options: @hourly, @daily, @monthly, or custom cron.

- Optionally, select Delay Transfer and add a delay to the execution time.

- Select Scheduling Timezone.

- Select Create & Run Now.
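The preset schedules correspond to standard cron nicknames. The sketch below computes the next run time for each preset in the scheduling timezone and then adds an optional delay. It assumes that Delay Transfer shifts the start by a fixed number of seconds. Custom cron expressions are out of scope. The function `next_run` is hypothetical, and the real scheduler may behave differently.

```python
from datetime import datetime, timedelta, timezone

# Standard cron equivalents of the preset schedules.
CRON_FOR = {
    "@hourly": "0 * * * *",
    "@daily": "0 0 * * *",
    "@monthly": "0 0 1 * *",
}


def next_run(schedule: str, now: datetime, delay_seconds: int = 0) -> datetime:
    """Return the next preset boundary strictly after `now`, plus a delay.

    `now` should be timezone-aware and expressed in the scheduling timezone.
    """
    if schedule == "@hourly":
        base = now.replace(minute=0, second=0, microsecond=0) + timedelta(hours=1)
    elif schedule == "@daily":
        base = now.replace(hour=0, minute=0, second=0, microsecond=0) + timedelta(days=1)
    elif schedule == "@monthly":
        first_of_month = now.replace(day=1, hour=0, minute=0, second=0, microsecond=0)
        # Adding 32 days to the 1st always lands in the next month.
        base = (first_of_month + timedelta(days=32)).replace(day=1)
    else:
        raise ValueError(f"unsupported schedule in this sketch: {schedule}")
    return base + timedelta(seconds=delay_seconds)


now = datetime(2024, 5, 15, 10, 30, tzinfo=timezone.utc)
print(next_run("@daily", now, delay_seconds=600))  # prints 2024-05-16 00:10:00+00:00
```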

After your transfer has run, you can see the results of your transfer in Data Workbench > Databases.