This integration allows you to export TD job results into your existing MongoDB instance. For sample workflows, see Treasure Boxes.

- Basic knowledge of Treasure Data, including the TD Toolbelt.

- A MongoDB instance.

- The MongoDB user that Treasure Data connects as must have the privileges required to write to the target database.

A front-end application collects data into Treasure Data via the Treasure Agent. Treasure Data periodically runs jobs on the data, then writes the job results to your MongoDB collections.

Many social and mobile applications calculate a “top N of X” list (e.g., the top 5 movies watched today). Treasure Data already handles the raw data warehousing; with the “write-to-mongodb” feature, Treasure Data can also compute the “top N” results and write them to MongoDB for your application to serve.

If you are a data scientist, you likely need to track a range of metrics hourly, daily, or monthly and make them accessible through visualizations. The “write-to-mongodb” feature streamlines this process so that you can focus on your queries and on visualizing their results.

If your security policy requires IP allowlisting, you must add Treasure Data's IP addresses to your allowlist to ensure a successful connection.

The complete list of static IP addresses, organized by region, is available in the following document:

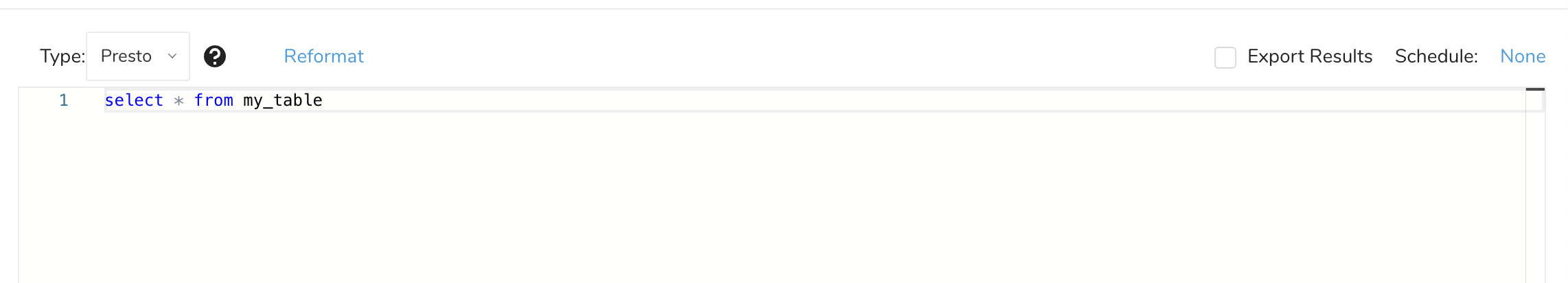

Navigate to Data Workbench > Queries.

Select New Query.

Run the query to validate the result set.

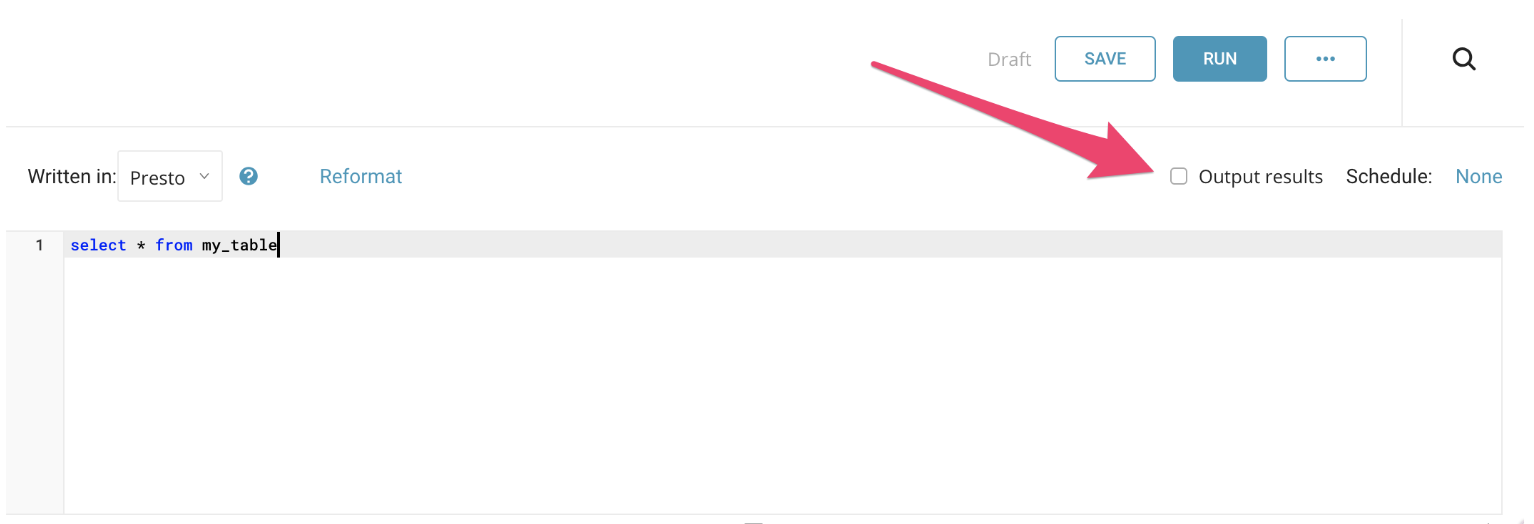

- Select Output Results.

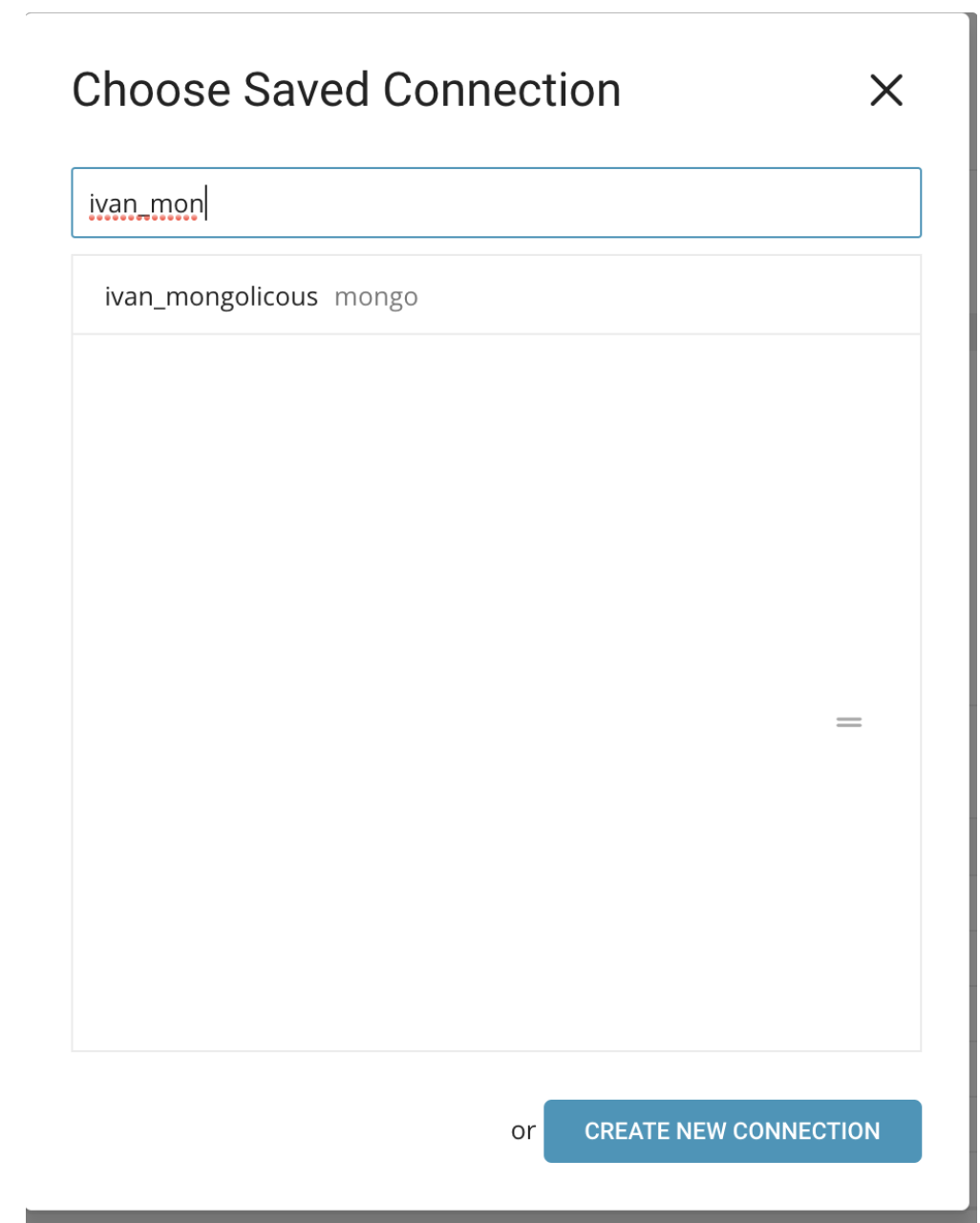

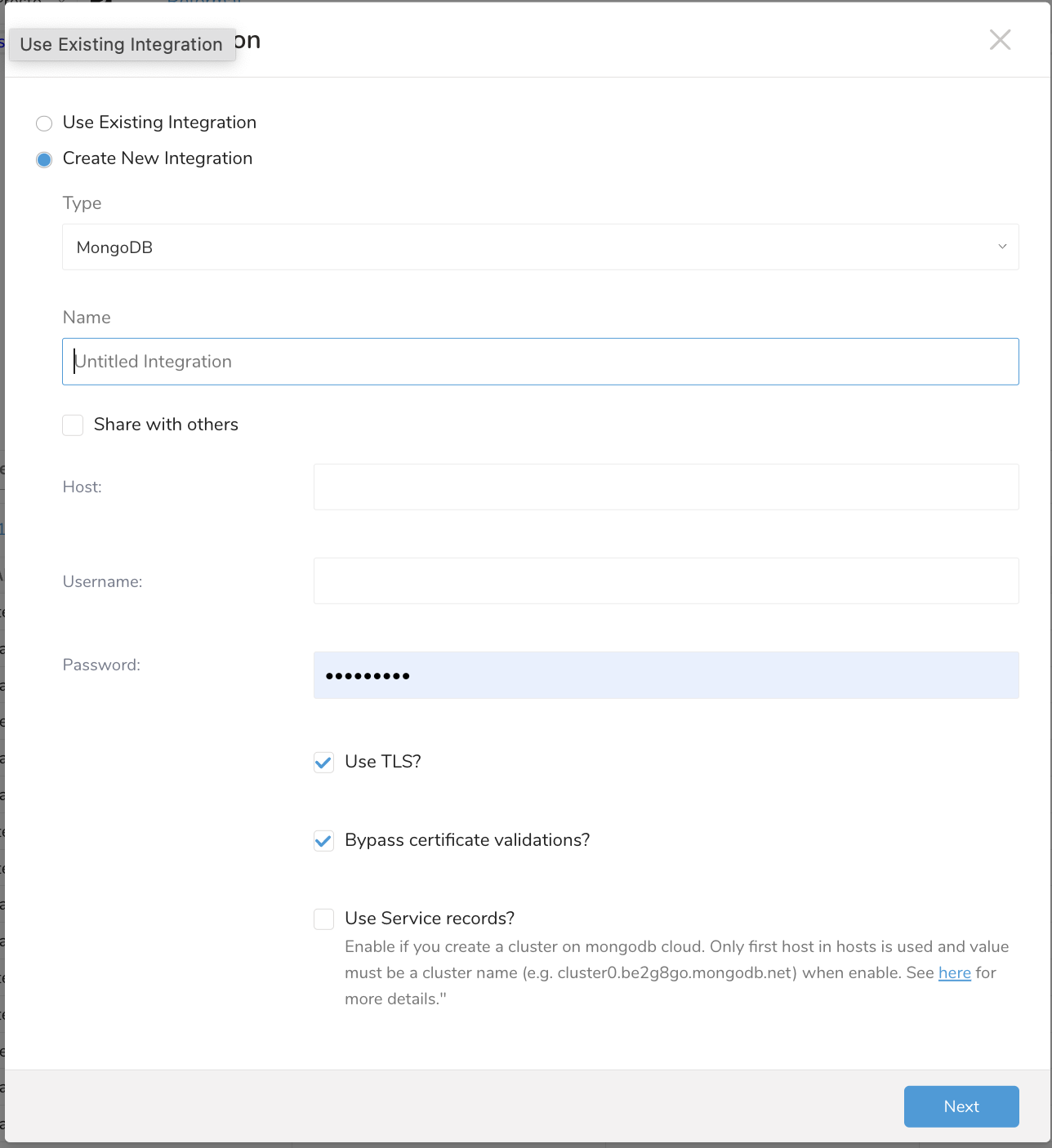

- You can select an existing authentication or create a new authentication for the external service to be used for output. Choose one of the following:

  - Use Existing Integration

  - Create a New Integration

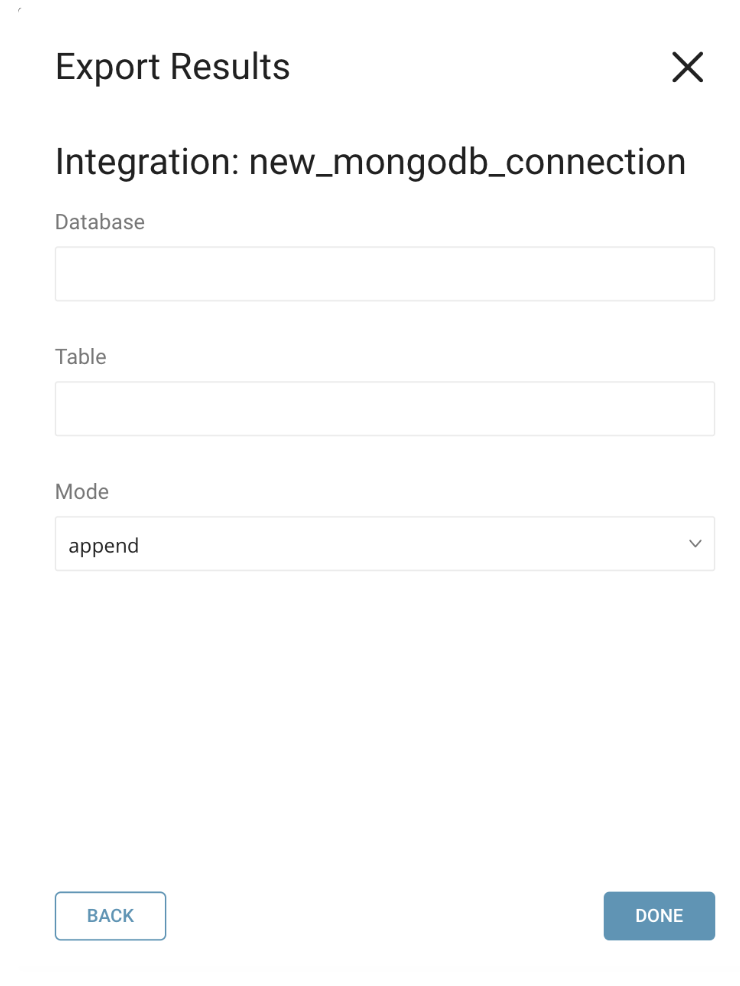

Specify information for Export to MongoDB.

| Field Name | Description |
|---|---|
| Host | The hostname or IP address of the remote server. You can add more than one host, depending on your MongoDB setup. |
| Username | Username to connect to the remote database. |
| Password | Password to connect to the remote database. |
| Use TLS? | Check this box to connect using TLS (SSL). |
| Bypass certificate validations? | Check this box to bypass all certificate validations. |
| Use Service records? | Enable this option if your cluster is hosted on MongoDB Cloud. When it is enabled, only the first host in Host is used, and that value must be the cluster name (e.g., cluster0.be2g8go.mongodb.net). |
| Database name | The name of the database to which you are transferring data (e.g., your_database_name). |
| Table Name | The name of the collection to which you are transferring data. |
| Mode | Append: Add the query results to the existing records in the collection. This mode is atomic. Replace: Replace the existing records with the query results. This mode is atomic. Truncate: Truncate the existing records. This mode is atomic. Update: Insert each row unless it would duplicate the values in the columns specified in the “keys” parameter, in which case the matching record is replaced. |
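The Host, Use TLS?, Bypass certificate validations?, and Use Service records? fields correspond to parts of a standard MongoDB connection string. The sketch below shows one plausible mapping, assuming the connector builds a `mongodb://` or `mongodb+srv://` URI from these settings; `build_mongodb_uri` and its parameters are hypothetical and not part of Treasure Data's product or API.

```python
from urllib.parse import quote_plus


def build_mongodb_uri(hosts, username, password, database,
                      use_tls=False, bypass_cert_validation=False,
                      use_srv=False):
    """Hypothetical helper: map the export form fields onto a MongoDB URI.

    This is an illustrative assumption, not how the connector is implemented.
    """
    # Usernames and passwords must be percent-encoded inside a URI.
    credentials = f"{quote_plus(username)}:{quote_plus(password)}@"
    if use_srv:
        # SRV mode: only the first host (the cluster name) is used; the
        # mongodb+srv scheme discovers the member hosts through DNS and
        # turns TLS on by default.
        scheme, host_part = "mongodb+srv", hosts[0]
    else:
        # Standard mode: every host is listed as a comma-separated seed list.
        scheme, host_part = "mongodb", ",".join(hosts)
    options = []
    if use_tls:
        options.append("tls=true")
        if bypass_cert_validation:
            # Skips certificate and hostname validation; avoid in production.
            options.append("tlsInsecure=true")
    query = "?" + "&".join(options) if options else ""
    return f"{scheme}://{credentials}{host_part}/{database}{query}"


if __name__ == "__main__":
    print(build_mongodb_uri(["cluster0.be2g8go.mongodb.net"], "td_user",
                            "p@ss", "your_database_name", use_srv=True))
    # mongodb+srv://td_user:p%40ss@cluster0.be2g8go.mongodb.net/your_database_name
```

Percent-encoding the credentials matters because characters such as `@` or `:` in a password would otherwise be read as URI delimiters.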

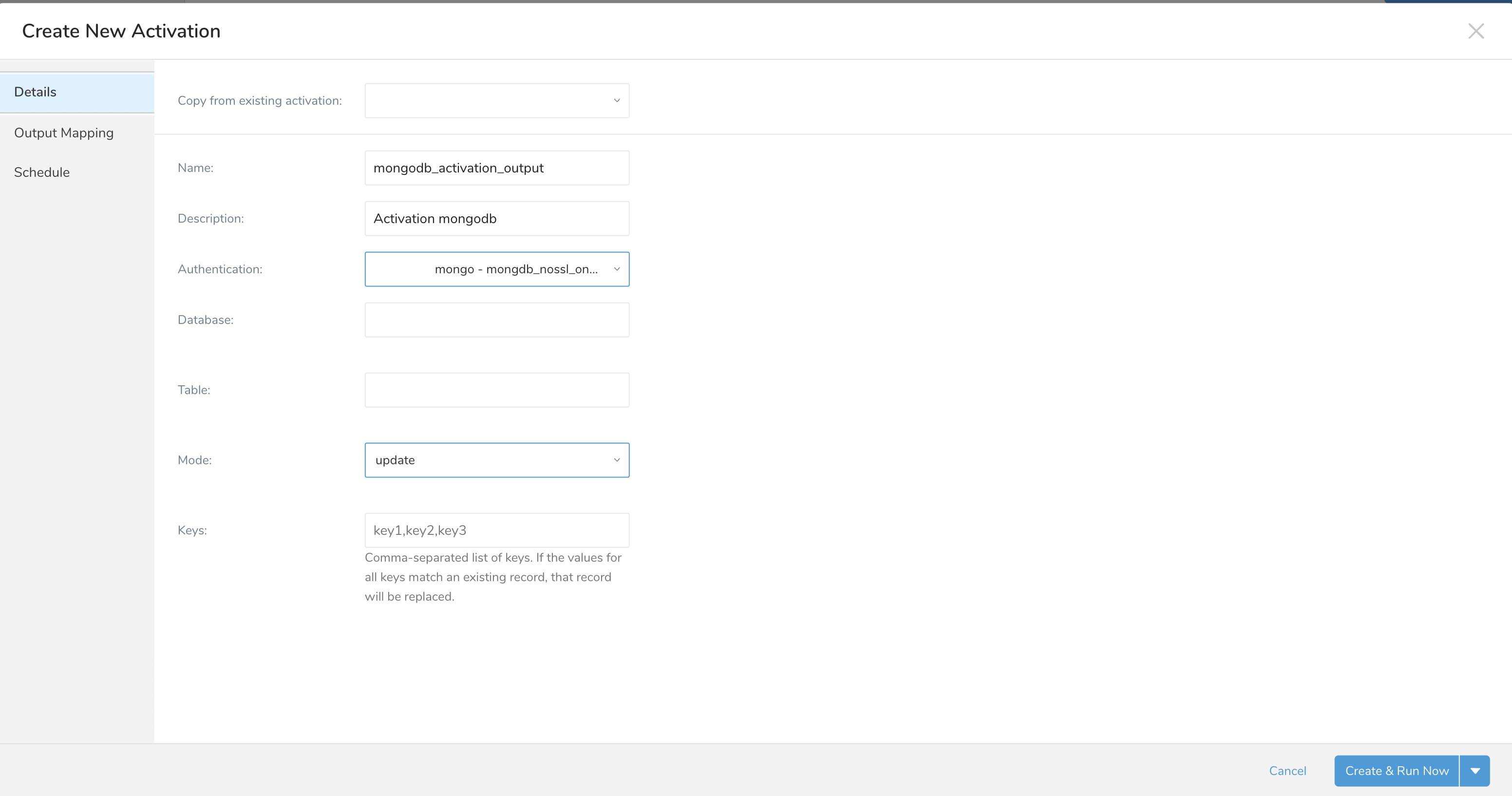

You can create an activation to export segment data or stages.

If you need to create an activation for a batch journey, review Creating a Batch Journey Activation.

- Navigate to Audience Studio.

- Select a segment.

- Select Create Activation.


| Field Name | Description |
|---|---|
| Database name | The name of the database to which you are transferring data (e.g., your_database_name). |
| Table Name | The name of the collection to which you are transferring data. |
| Mode | Append: Add the query results to the existing records in the collection. This mode is atomic. Replace: Replace the existing records with the query results. This mode is atomic. Truncate: Truncate the existing records. This mode is atomic. Update: Insert each row unless it would duplicate the values in the columns specified in the “keys” parameter, in which case the matching record is replaced. |
| Keys | Comma-separated list of keys. If the values for all keys match an existing record, that record is replaced. |
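To illustrate how Mode and Keys interact, the following sketch models Append, Replace, and Update on in-memory Python dictionaries, following the rules in the table: Update replaces a record when all key values match and inserts the row otherwise. It is a simplified model that assumes flat documents, not the connector's implementation; `apply_mode` is a hypothetical name, and Truncate is omitted because its exact behavior isn't detailed here.

```python
def apply_mode(collection, rows, mode, keys=()):
    """Return the collection's contents after exporting `rows` with `mode`.

    Hypothetical model of the documented rules, using lists of dicts.
    """
    rows = [dict(r) for r in rows]  # copy so the caller's data is untouched
    if mode == "append":
        # Keep every existing record and add the query results.
        return [dict(d) for d in collection] + rows
    if mode == "replace":
        # Discard the existing records; only the query results remain.
        return rows
    if mode == "update":
        if not keys:
            # This sketch requires at least one key for update mode.
            raise ValueError("update mode needs at least one key")
        result = [dict(d) for d in collection]
        for row in rows:
            row_key = tuple(row.get(k) for k in keys)
            for i, existing in enumerate(result):
                if tuple(existing.get(k) for k in keys) == row_key:
                    result[i] = row  # all keys match: replace the record
                    break
            else:
                result.append(row)  # no match: insert a new record
        return result
    raise ValueError(f"unsupported mode: {mode!r}")


existing = [{"movie": "A", "views": 10}, {"movie": "B", "views": 5}]
results = [{"movie": "B", "views": 7}, {"movie": "C", "views": 3}]
print(apply_mode(existing, results, "update", keys=["movie"]))
# [{'movie': 'A', 'views': 10}, {'movie': 'B', 'views': 7}, {'movie': 'C', 'views': 3}]
```

With `keys=["movie"]`, the row for movie B overwrites the existing B record, while movie C has no match and is inserted.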

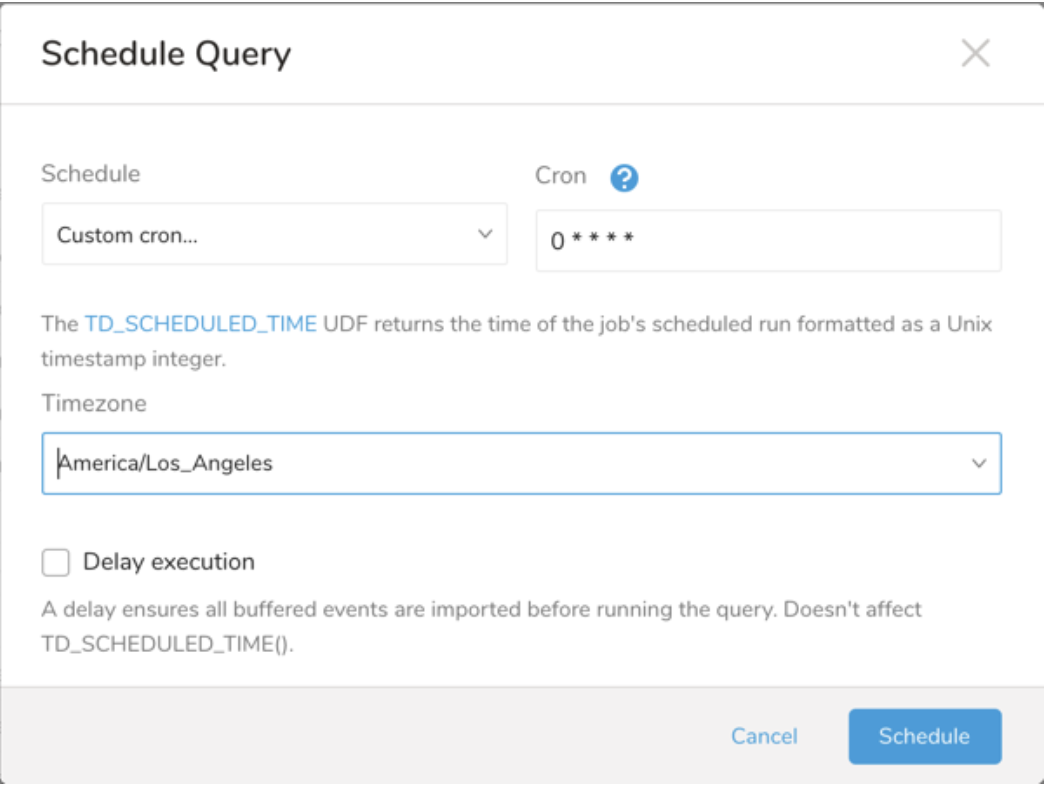

`SELECT * FROM my_table`

You can use Scheduled Jobs with Result Export to periodically write the output result to a target destination that you specify.

Treasure Data's scheduler feature supports periodic query execution to achieve high availability.

When two fields of a cron schedule specify conflicting schedules, the one that requests more frequent execution is followed, and the other is ignored.

For example, in the cron schedule '0 0 1 * 1', the day-of-month and day-of-week fields conflict: the former requires the job to run at midnight (00:00) on the first day of each month, while the latter requires it to run every Monday at midnight (00:00). Because the day-of-week field requests more frequent runs, it is followed.
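The conflict rule above can be modeled by counting how often each field would fire. The sketch below is a hypothetical illustration of the documented rule, not Treasure Data's scheduler code: it assumes the field values are already expanded into sets of numbers, counts matching days over one calendar year (2024 by default, an arbitrary choice), and keeps day of month on a tie, a case the rule above does not cover.

```python
from datetime import date, timedelta


def followed_spec(days_of_month, days_of_week, year=2024):
    """Return which conflicting field the documented rule would follow.

    Hypothetical helper: `days_of_month` holds values 1-31 and
    `days_of_week` holds cron values 0-6 with Sunday=0.
    """
    dom_runs = dow_runs = 0
    day = date(year, 1, 1)
    while day.year == year:
        if day.day in days_of_month:
            dom_runs += 1
        # Python's weekday() numbers Monday as 0; cron numbers Sunday as 0.
        if (day.weekday() + 1) % 7 in days_of_week:
            dow_runs += 1
        day += timedelta(days=1)
    # The field that requests more frequent runs wins; ties keep day of month.
    return "day of week" if dow_runs > dom_runs else "day of month"


# '0 0 1 * 1': the 1st of each month (12 runs) vs. every Monday (52+ runs).
print(followed_spec({1}, {1}))  # day of week
```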

Navigate to Data Workbench > Queries.

Create a new query or select an existing query.

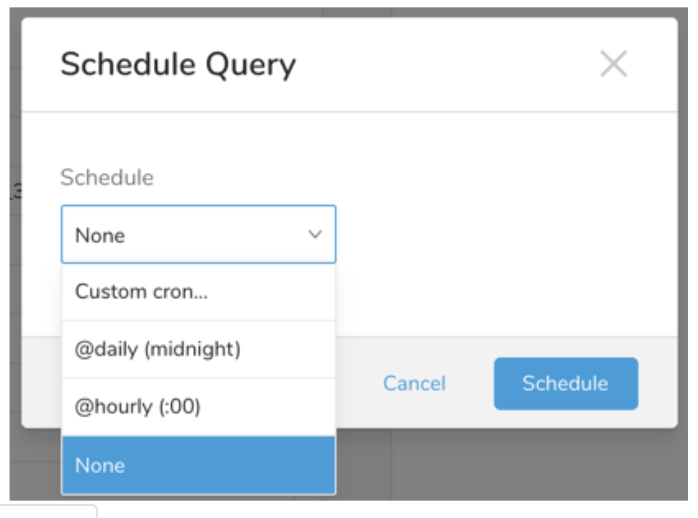

Next to Schedule, select None.

In the drop-down, select one of the following schedule options:

| Drop-down Value | Description |
|---|---|
| Custom cron... | Review Custom cron... details. |
| @daily (midnight) | Run once a day at midnight (00:00) in the specified time zone. |
| @hourly (:00) | Run every hour at 00 minutes. |
| None | No schedule. |

| Cron Value | Description |
|---|---|
| `0 * * * *` | Run once an hour. |
| `0 0 * * *` | Run once a day at midnight. |
| `0 0 1 * *` | Run once a month at midnight on the first day of the month. |
| `""` | Create a job that has no scheduled run time. |

A cron expression consists of five fields, `* * * * *`, in the following order:

| Position | Field | Allowed values |
|---|---|---|
| 1 | min | 0 - 59 |
| 2 | hour | 0 - 23 |
| 3 | day of month | 1 - 31 |
| 4 | month | 1 - 12 |
| 5 | day of week | 0 - 6 (Sunday=0) |

The following named entries can be used:

- Day of Week: sun, mon, tue, wed, thu, fri, sat.

- Month: jan, feb, mar, apr, may, jun, jul, aug, sep, oct, nov, dec.

A single space is required between each field. The values for each field can be composed of:

| Field Value | Example | Example Description |
|---|---|---|
| A single value, within the limits displayed above for each field. | | |
| A wildcard `*` to indicate no restriction based on the field. | `0 0 1 * *` | Configures the schedule to run at midnight (00:00) on the first day of each month. |
| A range `2-5`, indicating the range of accepted values for the field. | `0 0 1-10 * *` | Configures the schedule to run at midnight (00:00) on the first 10 days of each month. |
| A list of comma-separated values `2,3,4,5`, indicating the list of accepted values for the field. | `0 0 1,11,21 * *` | Configures the schedule to run at midnight (00:00) on the 1st, 11th, and 21st day of each month. |
| A periodicity indicator `*/5`, expressing how often, within the field's valid range of values, the schedule is allowed to run. | `30 */2 1 * *` | Configures the schedule to run on the 1st of every month, every 2 hours starting at 00:30. `0 0 */5 * *` configures the schedule to run at midnight (00:00) every 5 days starting on the 5th of each month. |
| A comma-separated list of any of the above except the `*` wildcard, such as `2,*/5,8-10`. | `0 0 5,*/10,25 * *` | Configures the schedule to run at midnight (00:00) on the 5th, 10th, 20th, and 25th day of each month. |
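The field syntax in the table can be sketched as a small parser that expands one field into the values it accepts. This is a hypothetical helper, not Treasure Data's scheduler: it assumes `*/n` accepts the values in the field's range that are divisible by n, which matches the `*/2` hour and `*/5` day-of-month examples above. Other cron implementations, such as Vixie cron, step from the field's lower bound instead (so `*/5` on day of month gives the 1st, 6th, 11th, and so on), so confirm against your job's actual run history.

```python
DAY_NAMES = {"sun": 0, "mon": 1, "tue": 2, "wed": 3, "thu": 4, "fri": 5, "sat": 6}
MONTH_NAMES = {name: number for number, name in enumerate(
    ["jan", "feb", "mar", "apr", "may", "jun",
     "jul", "aug", "sep", "oct", "nov", "dec"], start=1)}


def expand_field(spec, low, high, names=None):
    """Return the sorted values one cron field accepts (hypothetical helper)."""
    names = names or {}

    def to_number(token):
        # Named entries such as "mon" or "jan" map to their numeric value.
        key = token.lower()
        number = names[key] if key in names else int(token)
        if not low <= number <= high:
            raise ValueError(f"{token!r} is outside {low}-{high}")
        return number

    if spec == "*":
        return list(range(low, high + 1))  # wildcard: no restriction
    accepted = set()
    for part in spec.split(","):
        if part == "*":
            raise ValueError("'*' is not allowed inside a list")
        if part.startswith("*/"):
            step = int(part[2:])
            if step <= 0:
                raise ValueError("step must be positive")
            # Assumption: '*/n' accepts every value divisible by n.
            accepted.update(v for v in range(low, high + 1) if v % step == 0)
        elif "-" in part:
            start, end = (to_number(t) for t in part.split("-", 1))
            accepted.update(range(start, end + 1))
        else:
            accepted.add(to_number(part))
    return sorted(accepted)


print(expand_field("*/5", 1, 31))                # [5, 10, 15, 20, 25, 30]
print(expand_field("mon-fri", 0, 6, DAY_NAMES))  # [1, 2, 3, 4, 5]
```

Named entries are resolved before range checks, so `mon-fri` and `1-5` expand to the same day-of-week values.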

- (Optional) You can delay the start time of a query by enabling Delay execution.

Save the query with a name and run it, or just run the query. When the query completes successfully, the query result is automatically exported to the specified destination.

Scheduled jobs that continuously fail due to configuration errors may be disabled on the system side after several notifications.


Within Treasure Workflow, you can specify the use of this data connector to export data.

Learn more at Using Workflows to Export Data with the TD Toolbelt.

```yaml
# Example workflow
_export:
  result_connector_name: mongodb_connector
  target_database_name: mongodb_database
  target_collection_name: mongodb_collection

+export_to_mongodb:
  td>: your_query.sql
  result_connection: ${result_connector_name}
  result_settings:
    database: ${target_database_name}
    table: ${target_collection_name}
    mode: append  # one of: append, replace, truncate, update
```