The Data Connector for Mixpanel allows you to back up the event data in Mixpanel on Treasure Data. Use cases include:

| Use Case | Description |

|---|---|

| One-time migration | The organization is migrating away from Mixpanel and wants to keep a raw copy of the data |

| Incremental daily backup | The organization needs to have more granular access with SQL to event data inside Mixpanel |

For sample workflows on how to import event data from Mixpanel, view Treasure Boxes.

- Navigate to Integrations Hub > Catalog

- Search and select Mixpanel.

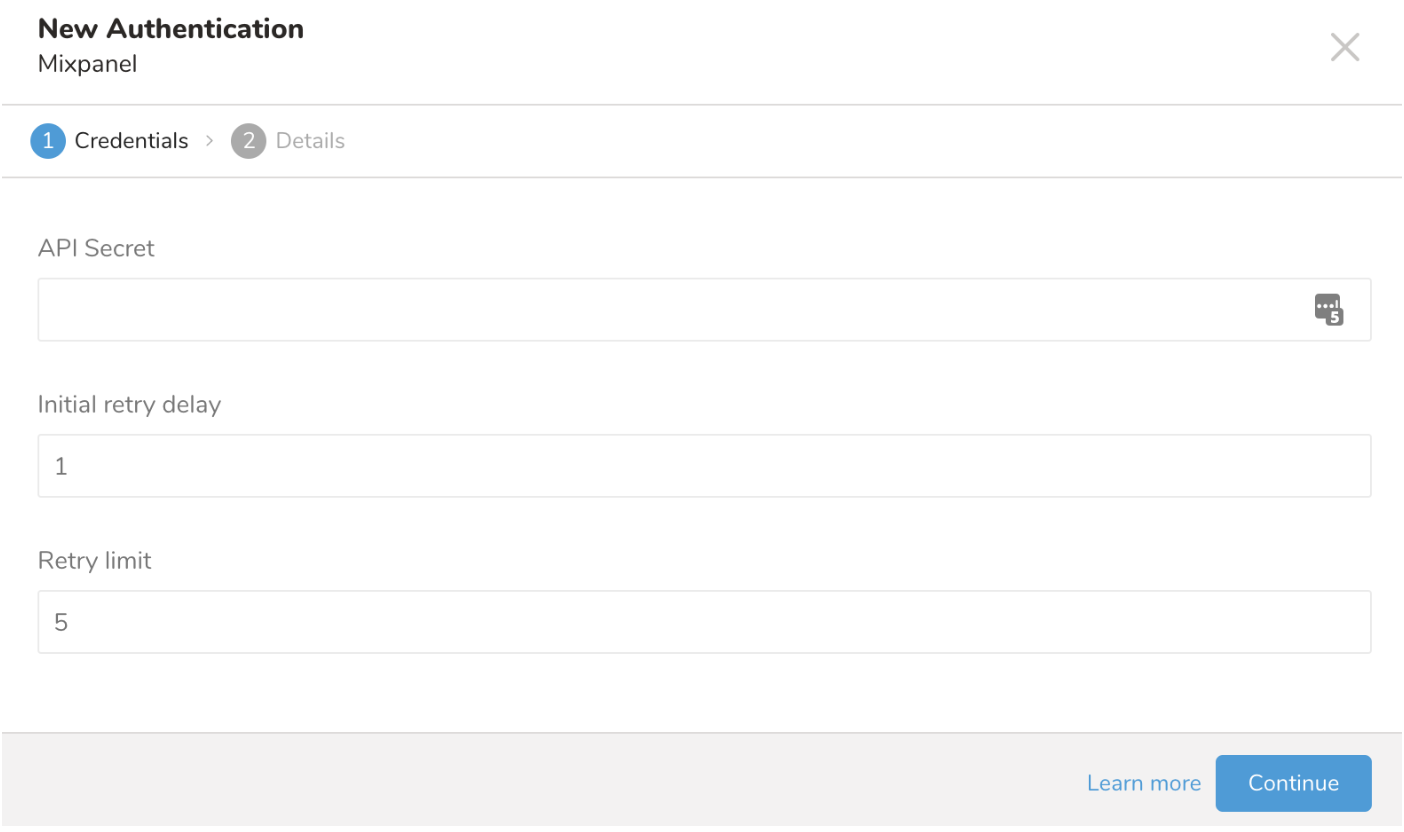

- The following dialog opens. Edit the Mixpanel credential details.

- Select Continue and provide a name for this connection.

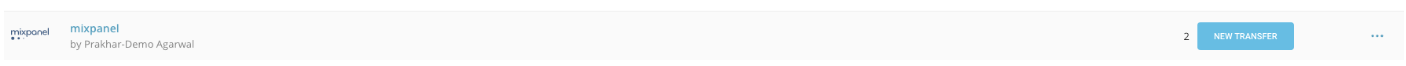

After creating the connection, you are automatically taken to the Authentications page. Look for the connection you created and select New Source.

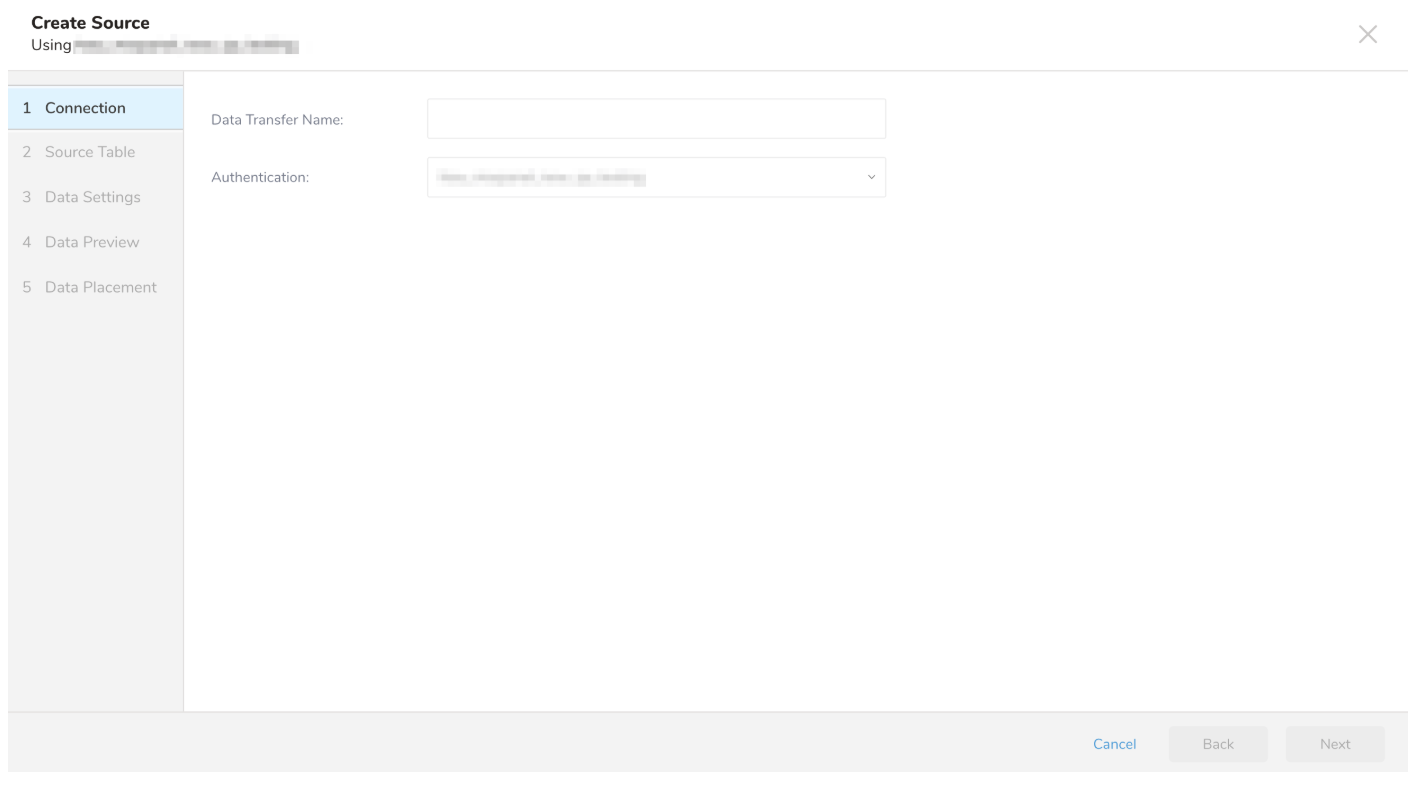

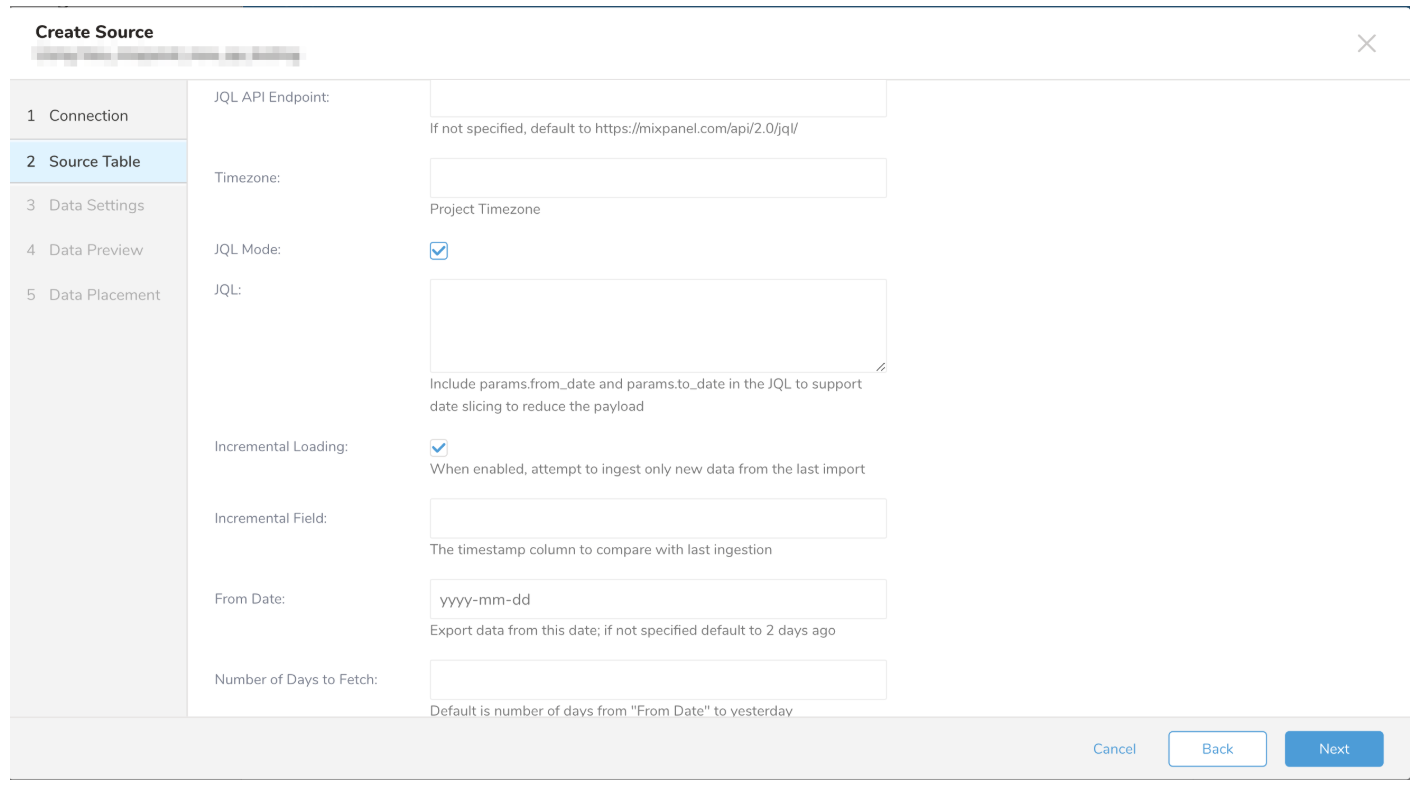

The following dialog opens.

- Name the data transfer and select Next.

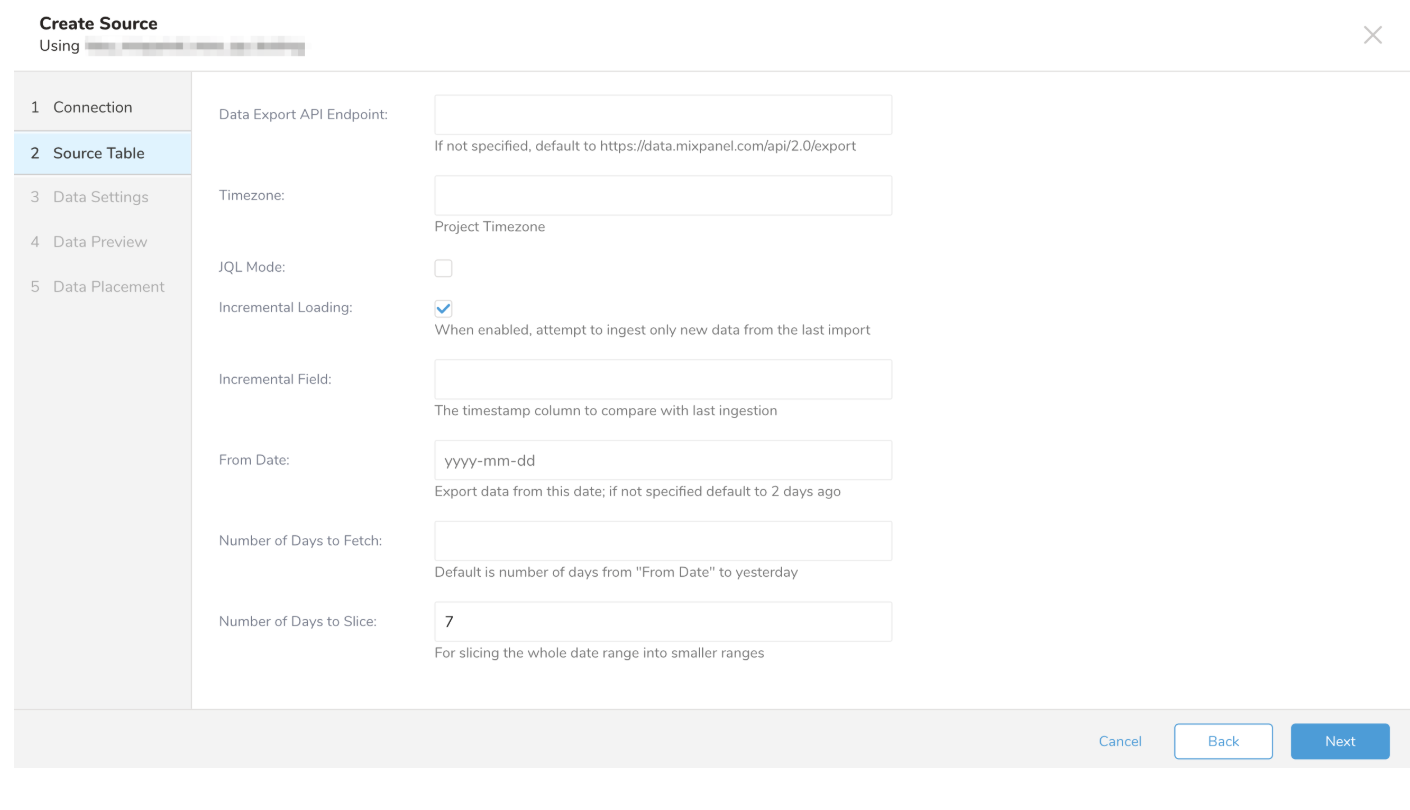

The following dialog opens

- Edit your source parameters and select Next.

| Parameters | Description |

|---|---|

| Data Export API Endpoint: | The endpoint you are using for the Export API call. If you leave it empty the default value is https://data.mixpanel.com/api/2.0/export/ |

| JQL API Endpoint | The endpoint using for JQL API call. If you leave it empty the default value is https://mixpanel.com/api/2.0/jql/ |

| Timezone | Your project timezone. It could be found in PROJECT SETTINGS >> YOUR PROJECT >> OVERVIEW. |

| JQL Mode | Using JQL endpoint or just export endpoint. The default value is false |

| JQL | JQL script. It only effects when JQL Mode is true |

| Incremental Loading | Run your transfer in incremental mode or not |

| Incremental Field | which field is used as an index for incremental. The default value is time |

| From date | start date of the incremental |

| Number of Days to Fetch | total number of days to fetch data in one time |

| Number of Days to Slice | number of days that one request fetch |

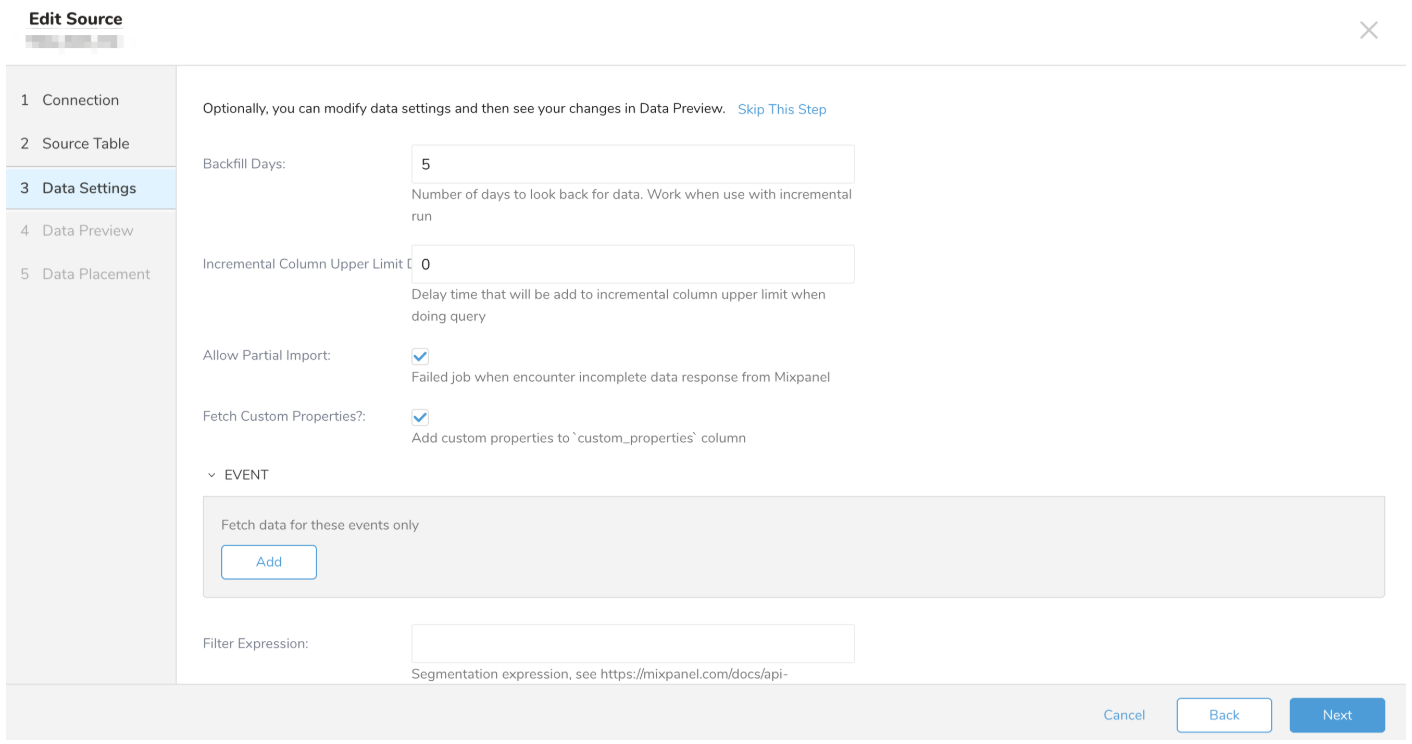

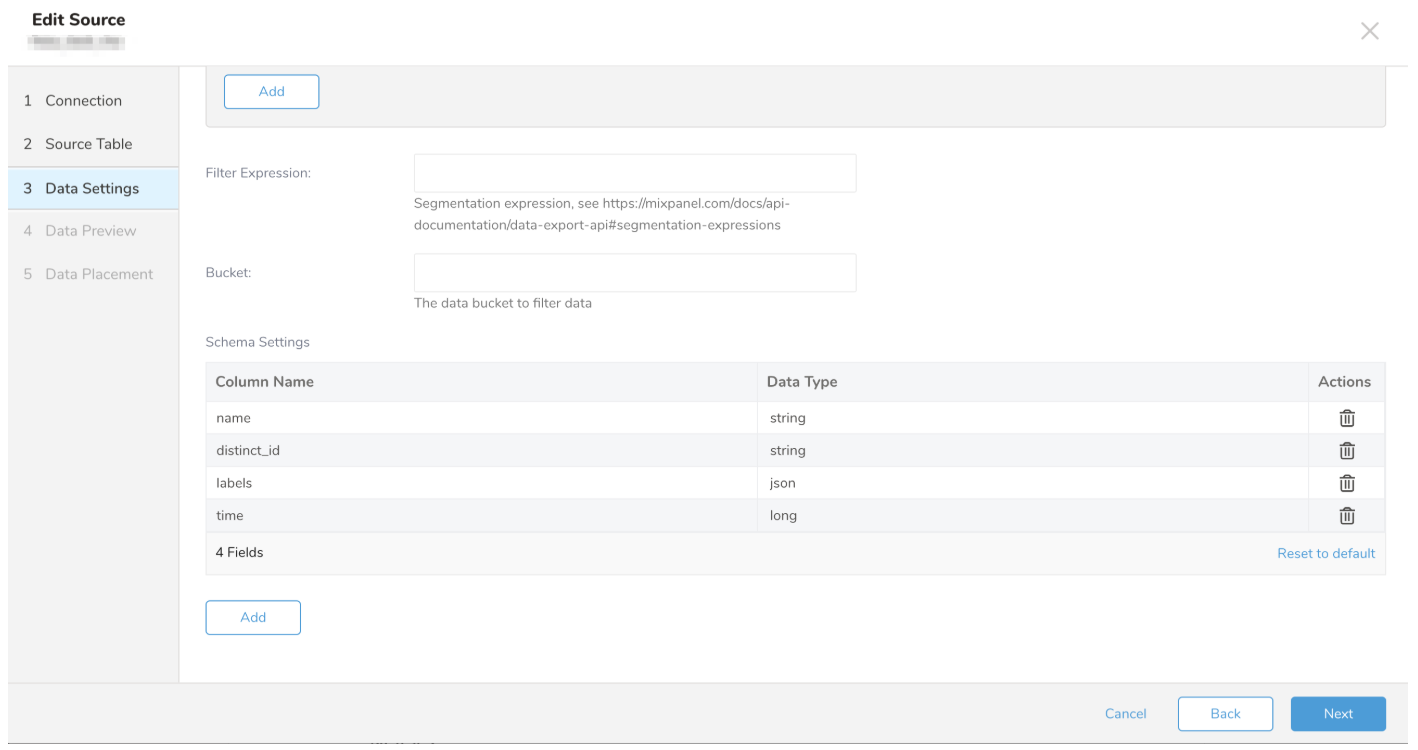

Your data settings can be edited on this page.

- Edit the data settings or optionally, skip this page of the dialog.

| Parameters | Description |

|---|---|

| Backfill Days | The amount of time that will be subtracted from from\_date to calculate the final from\_date that is used for the API Request. This is due to the Mixpanel caching data on user devices before sending it to the Mixpanel server. It only affects when Incremental is true and Incremental Field is specified. The default value is 5 |

| Incremental Column Upper Limit Delay In Seconds | The upper limit of the incremental column. When using export with the incremental column, the plugin will lock the upper limit of the incremental column query with the job start time. This is to support when Mixpanel has a delay in their processing. It only affects Export endpoint. The default value is 0. |

| Allow Partial Import | Allows the plugin to skip errors in the import. It only affects Export endpoint. The default value is true. |

| Fetch Custom Properties | Allows the plugin to import all custom properties for Export endpoint only. The default value is false. |

| Events | The events for filtering data for Export endpoint only |

| Filter Expression | The segmentation expression for Export endpoint only |

| Bucket | The data bucket to filter data for Export endpoint only |

| Schema | Your schema with columns names and types, which are stored in the TD table. |

You can see a preview of your data before running the import by selecting Generate Preview. Data preview is optional and you can safely skip to the next page of the dialog if you choose to.

- Select Next. The Data Preview page opens.

- If you want to preview your data, select Generate Preview.

- Verify the data.

For data placement, select the target database and table where you want your data placed and indicate how often the import should run.

Select Next. Under Storage, you will create a new or select an existing database and create a new or select an existing table for where you want to place the imported data.

Select a Database > Select an existing or Create New Database.

Optionally, type a database name.

Select a Table> Select an existing or Create New Table.

Optionally, type a table name.

Choose the method for importing the data.

- Append (default)-Data import results are appended to the table. If the table does not exist, it will be created.

- Always Replace-Replaces the entire content of an existing table with the result output of the query. If the table does not exist, a new table is created.

- Replace on New Data-Only replace the entire content of an existing table with the result output when there is new data.

Select the Timestamp-based Partition Key column. If you want to set a different partition key seed than the default key, you can specify the long or timestamp column as the partitioning time. As a default time column, it uses upload_time with the add_time filter.

Select the Timezone for your data storage.

Under Schedule, you can choose when and how often you want to run this query.

- Select Off.

- Select Scheduling Timezone.

- Select Create & Run Now.

- Select On.

- Select the Schedule. The UI provides these four options: @hourly, @daily and @monthly or custom cron.

- You can also select Delay Transfer and add a delay of execution time.

- Select Scheduling Timezone.

- Select Create & Run Now.

After your transfer has run, you can see the results of your transfer in Data Workbench > Databases.

Install the newest Treasure Data Toolbelt.

Log in to your Mixpanel account, go to “Account” then “Projects” to look up your API key and API secret.

Create a file called seed.yml as follows.

in:

type: mixpanel

api_key: MIXPANEL_API_KEY

api_secret: MIXPANEL_API_SECRET

timezone: 'UTC'

from_date: "2015-10-28"

fetch_days: 1

out:

mode: appendThis seed file is used to “guess” the full configuration file with column names. In this example, one day’s worth of data on Oct 28, 2015, is exported from Mixpanel to Treasure Data.

For more details on available out modes, see the Appendix below.

Based on seed.yml, generate load.yml with the connector:guess command.

$ td connector:guess seed.yml -o load.ymlYour column names and types depend on how you configure Mixpanel. The load.yml file should look as follows:

in:

type: mixpanel

api_key: MIXPANEL_API_KEY

api_secret: MIXPANEL_API_SECRET

timezone: UTC

from_date: '2015-10-28'

fetch_days: 1

columns:

- name: event

type: string

- name: "$browser"

type: string

- name: "$browser_version"

type: long

- name: "$city"

type: string

- name: "$current_url"

type: string

- name: "$initial_referrer"

type: string

- name: "$initial_referring_domain"

type: string

- name: "$lib_version"

type: string

- name: "$os"

type: string

- name: "$referrer"

type: string

- name: "$referring_domain"

type: string

- name: "$region"

type: string

- name: "$screen_height"

type: long

- name: "$screen_width"

type: long

- name: ENV

type: string

- name: RAILS_ENV

type: string

- name: distinct_id

type: long

- name: from

type: string

- name: job_id

type: long

- name: mp_country_code

type: string

- name: mp_lib

type: string

- name: name

type: string

- name: time

type: long

filters:

- type: rename

rules:

- rule: upper_to_lower

- rule: character_types

pass_types: ["a-z", "0-9"]

pass_characters: "_"

replace: "_"

- rule: first_character_types

pass_types: ["a-z"]

pass_characters: "_"

prefix: "_"

- rule: unique_number_suffix

max_length: 128

out:

mode: append

exec: {}For more details on the rename filter, see rename filter plugin for Data Connector.

The Data Connector picked up fields such as:

- ENV

- RAILS_ENV

- $current_url

Column names are normalized in the type: rename filter section.

Use connector:preview command to preview the data load.

td connector:preview load.ymlIf the data looks good, then create the database and the table on Treasure Data where you wish to import Mixpanel data:

td db:create mixpanel_historical

td table:create mixpanel_historical app_nameSubmit the load job. It may take a couple of hours depending on the size of the data. Users need to specify the database and table where their data is stored.

It’s also recommended to specify --time-column option because Treasure Data’s storage is partitioned by time (see architecture) If the option is not provided, the data connector chooses the first long or timestamp column as the partitioning time. The type of the column specified by --time-column must be either of long and timestamp type.

td connector:issue load.yml --database mixpanel_historical --table app_name --time-column timeThe preceding command assumes you have already created database(td_sample_db) and table(td_sample_table). If the database or the table do not exist in TD this command fails, so create the database and table manually or use --auto-create-table option with td connector:issue command to auto-create the database and table:

td connector:issue load.yml \

--database td_sample_db --table td_sample_table \

--time-column created_at --auto-create-tableYou can assign Time Format column to the "Partitioning Key" by "--time-column" option.

You can check that the data is loaded by running a SQL query directly against your data in Treasure Data:

td query -T presto -w -d mixpanel_historical 'SELECT COUNT(1) FROM app_name'Unless you are migrating off of Mixpanel completely, Mixpanel data must be incrementally loaded into Treasure Data regularly. The Data Connector’s scheduling function comes in handy for this purpose.

After scheduling, the Mixpanel Data Connector’s successive runs increment the from_date parameter by fetch_days. For example, if the initial run in load.yml was

from_date: '2015-10-28'

fetch_days: 1Then, the next run is as follows:

from_data: '2015-10-29'

fetch_days: 1You do not need to update load.yml after it is uploaded since from_date field is automatically updated on the server side.

Suppose you wish to schedule a daily upload. Then, make sure that the initial from_date is at least two days ago and set fetch_days: 1 in load.yml. Then, the following command creates a daily job called “daily_mixpanel_import” which loads historical data to mixpanel_historical.app_name on Treasure Data every day.

$ td connector:create \

daily_mixpanel_import \

"10 0 * * *" \

mixpanel_historical \

app_name \

load.yml \

--time-column time # optionalThe historical runs of the import can be seen with td connector:history name, e.g., td connector:history daily_mixpanel_import.

Certain Mixpanel account has project set up with additional field added to indicate the time data get processed by Mixpanel. For example: mp_processing_time_ms

User can add an additional parameter to Mixpanel Input Plugin incremental_column. The max incremental_column value of a run session will store and use as a filter for next run, Example: where incremental_column > previous_run_max_value.

Example:

in:

type: mixpanel

api_key: MIXPANEL_API_KEY

api_secret: MIXPANEL_API_SECRET

timezone: 'UTC'

from_date: "2015-10-28"

incremental_column: mp_processing_time_ms

fetch_days: 1

out:

mode: appendFor devices data like phone, tablets… Mixpanel can keep data in user’s device for a while before send them to Mixpanel Server. So data appear in query can be delayed up to 5 days. When incremental import data from Mixpanel, we can missed data that are cached in user devices. To solve this issue we can set back_fill_days parameter(Default to 5). Plugin looks back for a number of days(from_date - back_fill_days). Due to performance issue, this feature only work with incremental_column.

For some cases, the data return could be too big, data return in 1 query could exceed Mixpanel limitation and cause job to failed. In that case, we can split the big range query into smaller one using slice_range configuration parameter. This parameter are optional and default to 7.

Example:

in:

type: mixpanel

api_key: MIXPANEL_API_KEY

api_secret: MIXPANEL_API_SECRET

timezone: 'UTC'

from_date: "2015-10-20"

incremental_column: mp_processing_time_ms

fetch_days: 6

slice_range: 2

out:

mode: appendThe above configuration produces a query with the following range: [2015-10-20,2015-10-21],[2015-10-22,2015-10-23],[2015-10-24,2015-10-25] instead of [2015-10-20,2015-10-25]

You can specify file import mode in out section of seed.yml.

- append (default)

This is the default mode and records are appended to the target table.

in:

...

out:

mode: append- replace (In td 0.11.10 and later)

This mode replaces data in the target table. Manual schema changes made to the target table remains intact with this mode.

in:

...

out:

mode: replace