Complete the following steps to migrate from the legacy Salesforce data connector to the new Salesforce v2 connector. The legacy data connector uses only the REST API to import data. The new Salesforce v2 data connector lets you use both Bulk import and the REST API.

When migrating data from one place or version to another, be aware of how that data might be transformed. The following sections outline some important characteristics to keep in mind.

You can ingest more than 50 assets.

| Column | Old Data Type | New Data Type |
|---|---|---|
| createdDate | string | timestamp |
| modifiedDate | string | timestamp |

| Column | Old Data Type | New Data Type |
|---|---|---|
| createdDate | string | ISO 8601 string |

Other date time values are converted to UTC.
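As a rough illustration of the date-time changes above, the following sketch converts an ISO 8601 string that carries a UTC offset into an ISO 8601 string in UTC, using only Python's standard library. It assumes the source value is ISO 8601 with an explicit offset and treats values without an offset as already being UTC. Both are assumptions made for this example; it is not the connector's internal logic.

```python
from datetime import datetime, timezone


def to_utc_iso8601(value: str) -> str:
    """Convert an ISO 8601 date-time string to an ISO 8601 string in UTC."""
    parsed = datetime.fromisoformat(value)
    if parsed.tzinfo is None:
        # Assumption for this sketch: values without an offset are already UTC.
        parsed = parsed.replace(tzinfo=timezone.utc)
    return parsed.astimezone(timezone.utc).isoformat()


if __name__ == "__main__":
    # A value recorded at UTC+09:00 becomes 00:30 UTC on the same day.
    print(to_utc_iso8601("2023-05-01T09:30:00.000+09:00"))
    # prints 2023-05-01T00:30:00+00:00
```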

Ingestion is limited to:

- root and system-defined data
- one-to-one and one-to-many relationships
  - one-to-one relationships are saved as a single JSON object
  - one-to-many relationships are saved as a JSON array

Other attributes must be ingested using the Treasure Data ingestion feature.
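The relationship handling described in the list above can be sketched as follows: a nested object (one-to-one) is stored as a single JSON object and a nested list (one-to-many) is stored as a JSON array, while scalar fields stay as they are. The contact record and its field names are hypothetical, and the function is an illustration of the stated behavior, not the connector's implementation.

```python
import json
from typing import Any, Dict


def serialize_relationships(record: Dict[str, Any]) -> Dict[str, Any]:
    """Store nested relationship values as JSON text, leaving scalar fields unchanged."""
    row: Dict[str, Any] = {}
    for key, value in record.items():
        if isinstance(value, (dict, list)):
            # dict -> one-to-one (single JSON object); list -> one-to-many (JSON array)
            row[key] = json.dumps(value, separators=(",", ":"))
        else:
            row[key] = value
    return row


# Hypothetical contact record; the field names are illustrative only.
contact = {
    "contactKey": "C-001",
    "address": {"city": "Tokyo", "country": "JP"},
    "emails": [{"address": "a@example.com"}, {"address": "b@example.com"}],
}

row = serialize_relationships(contact)
print(row["address"])  # {"city":"Tokyo","country":"JP"}
print(row["emails"])   # [{"address":"a@example.com"},{"address":"b@example.com"}]
```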

Contact attributes are collected for root and system data; you cannot limit which attributes are ingested.

The number of records per page uses the default value of 2000.

Ingestion is limited to:

- one data extension at a time

| Old Column Name | New Column Name |
|---|---|
| data-extension-column-name | column-name |

| Column | Old Data Type | New Data Type | Format of Data |
|---|---|---|---|
| any-datetime | string | timestamp | UTC |

TD-generated properties are prefixed with an underscore (`_`) so that they can be easily identified.
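Because TD-generated properties start with an underscore, you can separate them from source columns with a simple prefix check. This is a minimal sketch. The column list, including the `_example_td_column` name, is hypothetical and stands in for whatever columns your table actually contains.

```python
from typing import List, Tuple


def split_td_generated(columns: List[str]) -> Tuple[List[str], List[str]]:
    """Split column names into TD-generated ("_"-prefixed) and source columns."""
    generated = [name for name in columns if name.startswith("_")]
    source = [name for name in columns if not name.startswith("_")]
    return generated, source


# Hypothetical column list; "_example_td_column" stands in for a TD-generated property.
generated, source = split_td_generated(["_example_td_column", "email", "createdDate"])
print(generated)  # ['_example_td_column']
print(source)     # ['email', 'createdDate']
```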

The number of records per page uses the default value of 2500.

Ingestion excludes subscribers associated with an event.

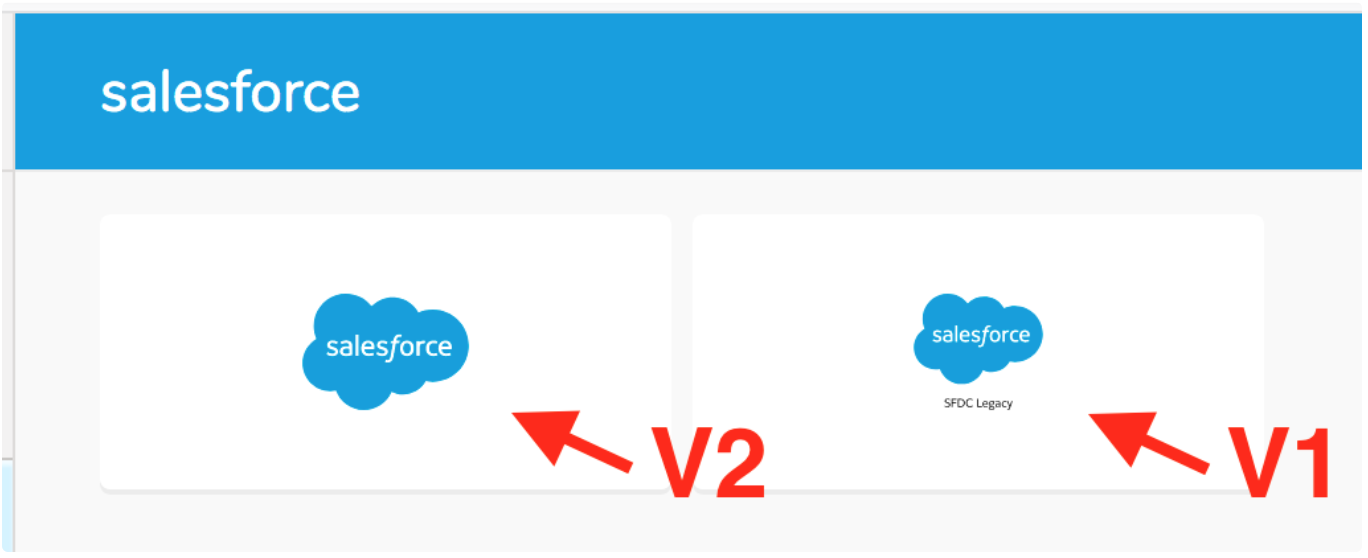

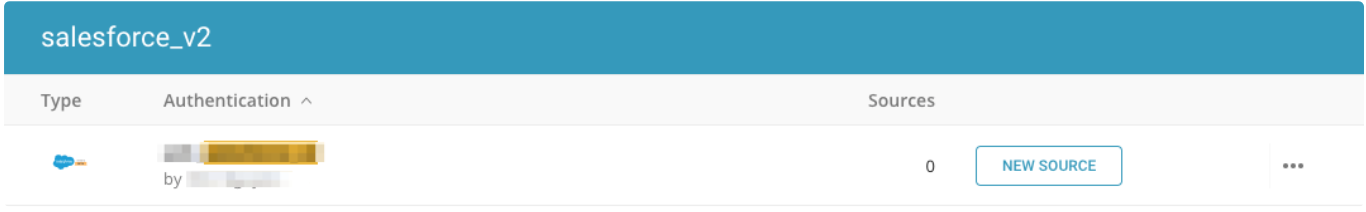

Go to Treasure Data Catalog, then search for and select Salesforce v2.

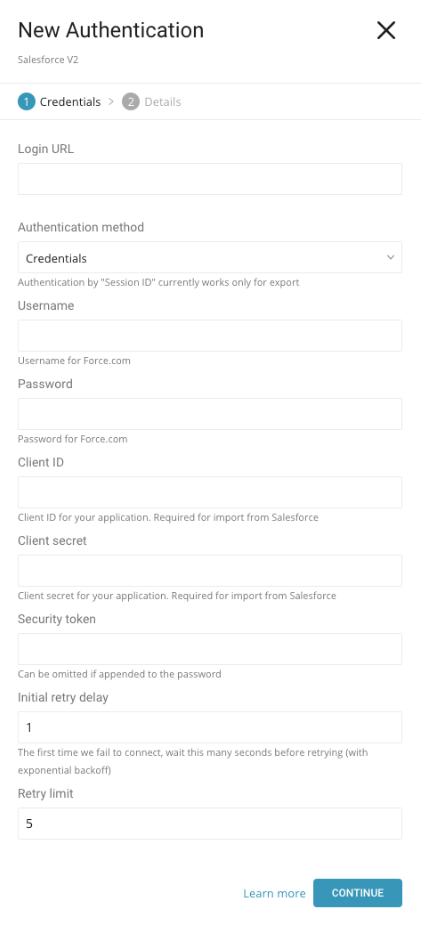

In the dialog box, enter the same values that you used in your legacy Salesforce connector.

The Salesforce v2 connector requires that you remove any extra characters, such as query parameters, from the Login URL. For example, instead of `https://login.salesforce.com/?locale=jp`, use `https://login.salesforce.com/`.
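If you keep login URLs in scripts or configuration files, the cleanup above can be sketched with Python's `urllib.parse`. The sketch assumes that the query string and fragment are the only "extra" parts to drop; it keeps the scheme, host, and path unchanged.

```python
from urllib.parse import urlsplit, urlunsplit


def normalize_login_url(url: str) -> str:
    """Drop the query string and fragment from a Salesforce login URL."""
    parts = urlsplit(url)
    # Keep scheme, host, and path; discard query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))


print(normalize_login_url("https://login.salesforce.com/?locale=jp"))
# prints https://login.salesforce.com/
```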

Enter your username (your email) and password, as well as your Client ID, Client Secret and Security Token.

You can save your legacy settings from TD Console or from the CLI.


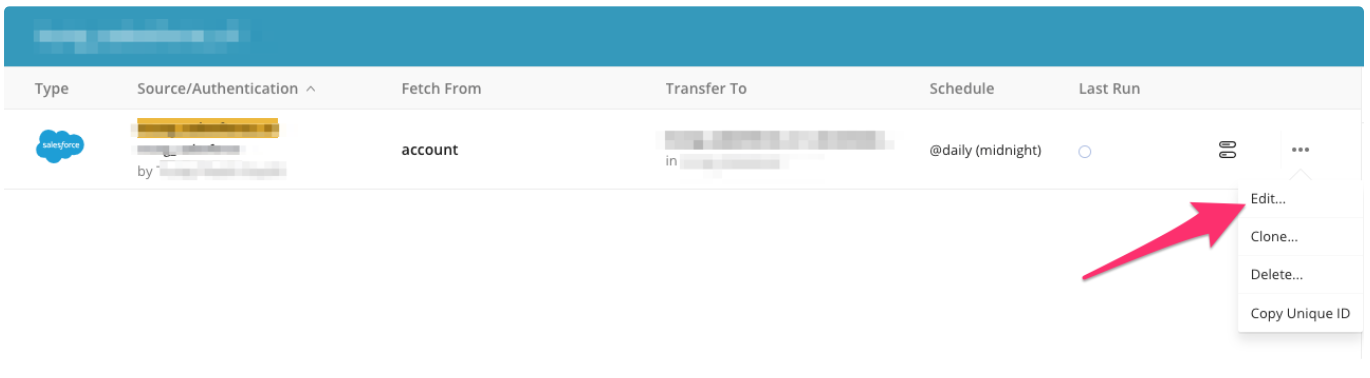

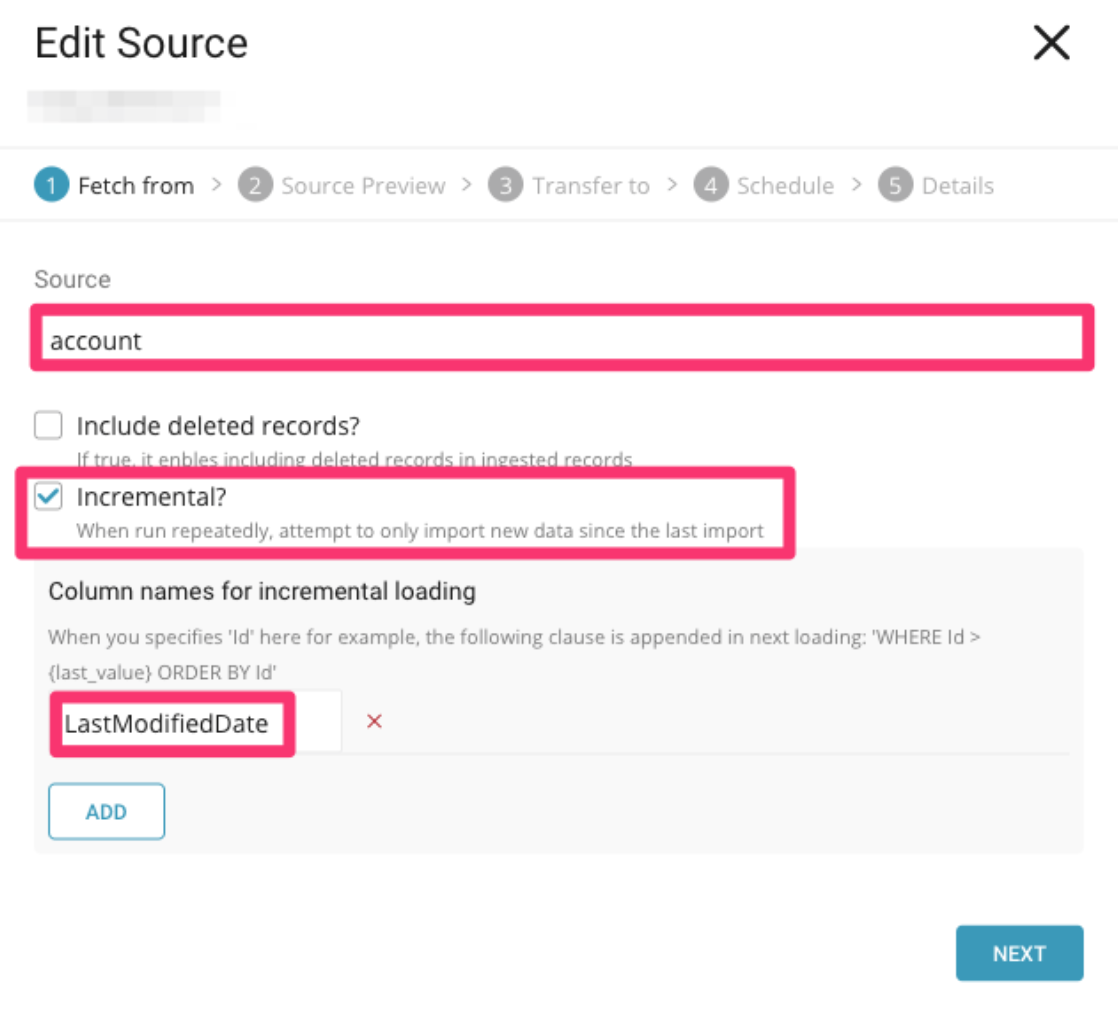

Go to Integration Hub > Sources. Search for your scheduled Salesforce source, select the source and select Edit.

In the dialog box, copy the settings to use later:

Also copy any advanced settings:

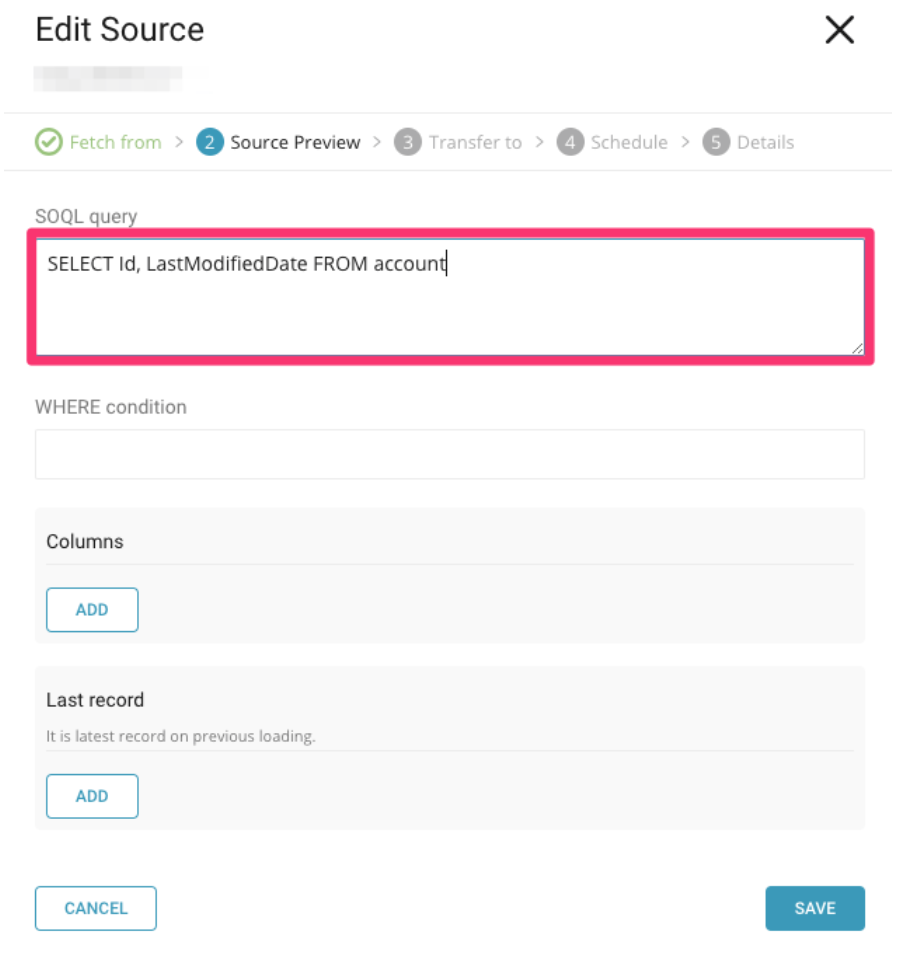

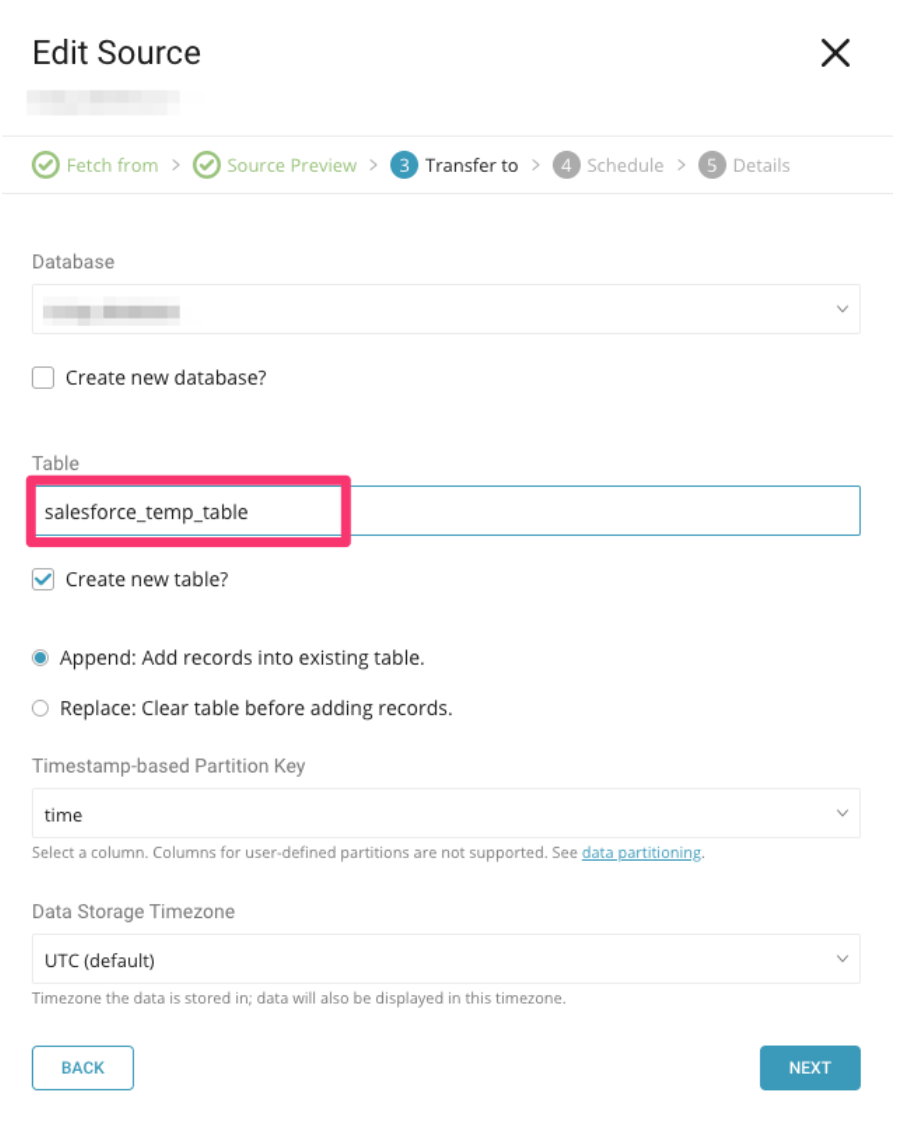

Next, you configure one final run with the legacy data connector to create a temporary table against which you can run a config-diff. You use the diff to identify and confirm the latest data imported into Treasure Data.

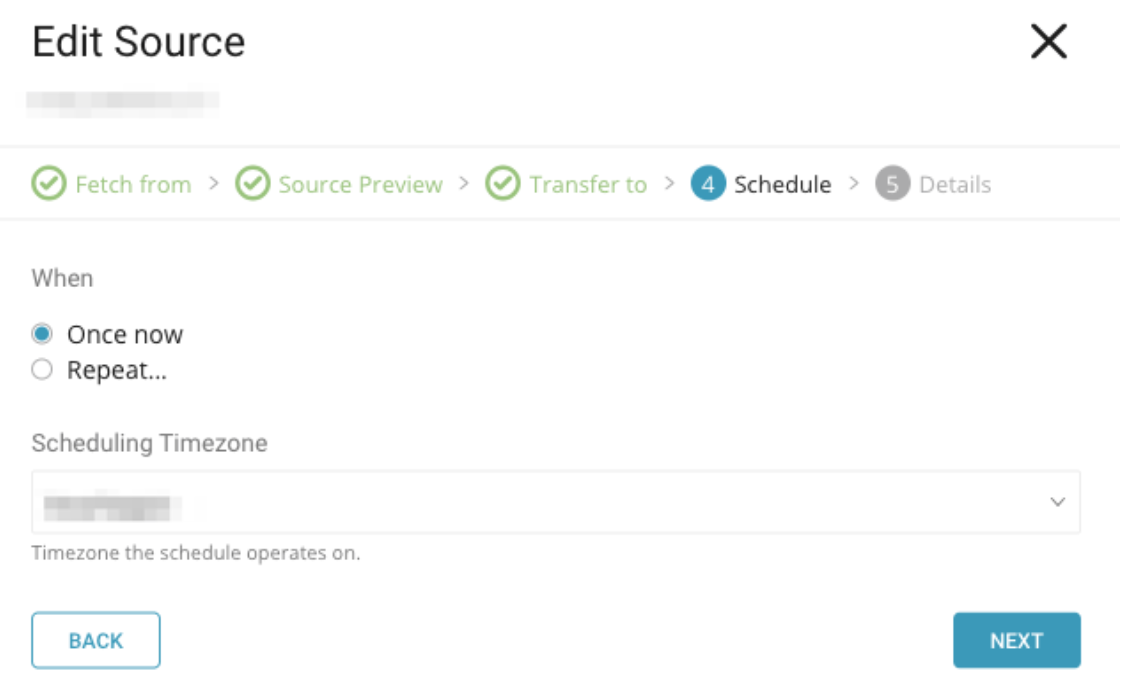

Before running the final import with the legacy connector, make sure that you change the schedule to one run only:

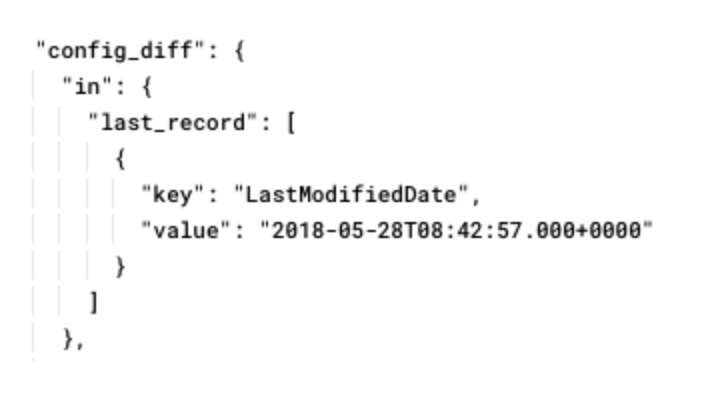

After the job completes, find config_diff in the job's query information and copy it somewhere to use later.

Go to Integration Hub > Authentication. Search for the new Salesforce v2 connection that you created:

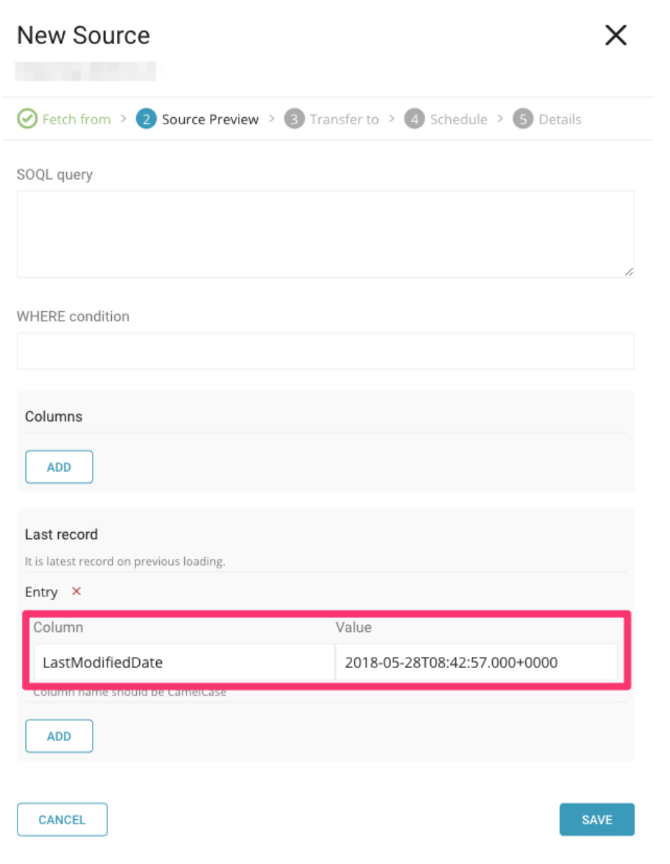

Select New Source. Fill in all the basic and advanced settings that you copied in the preceding steps. If you want the new source to continue ingesting from the point where the legacy connector left off, fill in the Last Record field with the config_diff information that you copied from the final legacy job.

After completing the settings, choose the database and table to populate with data, then schedule the job and provide a name for your new data connector. Select Save, and then run the new data connector.

Update `in: type` in your YAML configuration from `sfdc` to `sfdc_v2`.
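If you manage many configurations programmatically, the `in: type` change can be sketched as a small function over the parsed configuration. This assumes you have already loaded the YAML into a Python dictionary with a YAML library of your choice; the dictionary below is a trimmed, hypothetical version of a legacy configuration. Only `in.type` changes, and credentials and `target` are carried over unchanged.

```python
import copy
from typing import Any, Dict


def upgrade_input_config(config: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of the config with in.type changed from sfdc to sfdc_v2."""
    upgraded = copy.deepcopy(config)  # leave the legacy config untouched
    section = upgraded.get("in", {})
    if section.get("type") == "sfdc":
        section["type"] = "sfdc_v2"
    return upgraded


# Hypothetical, trimmed legacy configuration (as parsed from YAML).
legacy = {
    "in": {
        "type": "sfdc",
        "username": "${secret:sfdc.username}",
        "target": "Lead",
    },
    "out": {},
    "exec": {},
    "filters": [],
}

upgraded = upgrade_input_config(legacy)
print(upgraded["in"]["type"])  # sfdc_v2
```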

For example, your existing workflow configuration might look something like this:

```yaml
in:
  type: sfdc
  username: ${secret:sfdc.username}
  password: ${secret:sfdc.password}
  client_id: ${secret:sfdc.client_id}
  client_secret: ${secret:sfdc.client_secret}
  security_token: ${secret:sfdc.security_token}
  login_url: ${secret:sfdc.login_url}
  target: Lead
out: {}
exec: {}
filters: []
```

Your new workflow configuration would look like this:

```yaml
in:
  type: sfdc_v2
  username: ${secret:sfdc.username}
  password: ${secret:sfdc.password}
  client_id: ${secret:sfdc.client_id}
  client_secret: ${secret:sfdc.client_secret}
  security_token: ${secret:sfdc.security_token}
  login_url: ${secret:sfdc.login_url}
  target: Lead
out: {}
exec: {}
filters: []
```

The SFDC connection is shared between the data connector and result output. Although the result output settings themselves do not change, if you use SFDC for result output, you should upgrade that connection too.

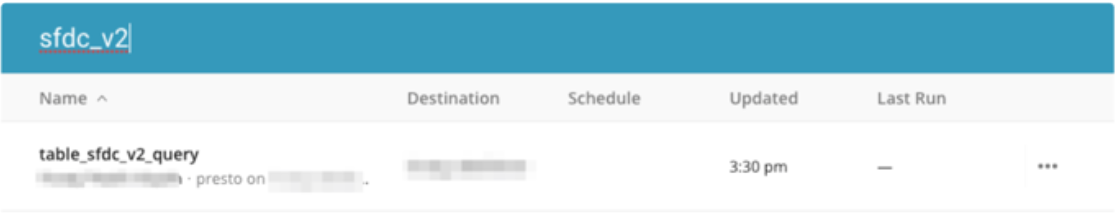

In TD Console, go to Query Editor and open the query that uses SFDC for its connection.

Select the SFDC connector, then copy and save the details of the existing connection to use later.

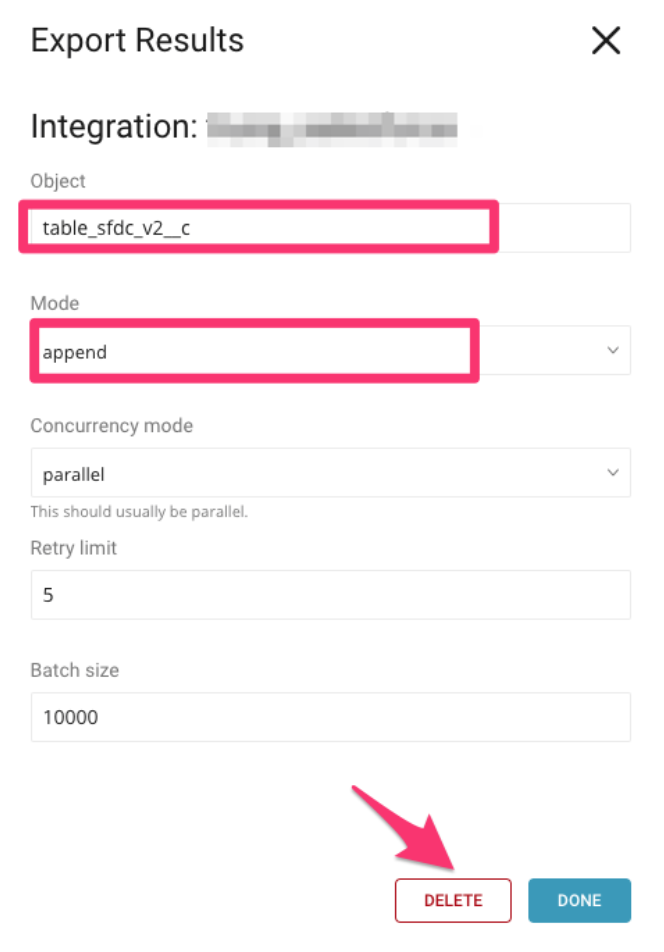

Select DELETE to remove the legacy connection.

In the query, select Output Results. Next, set up the SFDC v2 connector by finding and selecting the SFDC v2 export connector that you created.

In the Configuration pane, specify the fields that you saved in the previous step, then select Done.

Check Output results to... to verify that you are using the created output connection. Select Save.

| It is strongly recommended to create a test target and use it for the first data export to verify that exported data looks as expected and the new export does not corrupt existing data. In your test case, choose an alternate “Object” for your test target. |

The result type protocol needs to be updated from `sfdc` to `sfdc_v2`. For example, change:

```
sfdc://username:passwordsecurity_token@hostname/object_name
```

to:

```
sfdc_v2://username:passwordsecurity_token@hostname/object_name
```

If you have a workflow that uses SFDC, you can keep your result settings the same, but you need to update `result_connection` to the new connection name.
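If result URLs are stored in scripts, the protocol change can be sketched as a plain prefix rewrite. The sketch uses string handling rather than `urllib.parse`: the underscore in `sfdc_v2` is not a valid URL scheme character under RFC 3986, so a standard URL parser would not recognize `sfdc_v2` as the scheme. The function name and error handling are illustrative choices, not part of any Treasure Data tool.

```python
LEGACY_PREFIX = "sfdc://"
NEW_PREFIX = "sfdc_v2://"


def upgrade_result_url(url: str) -> str:
    """Rewrite a legacy sfdc:// result URL to use the sfdc_v2:// protocol."""
    if url.startswith(NEW_PREFIX):
        return url  # already upgraded
    if not url.startswith(LEGACY_PREFIX):
        raise ValueError(f"not an sfdc:// result URL: {url!r}")
    # Only the protocol changes; credentials, host, and object name are kept as-is.
    return NEW_PREFIX + url[len(LEGACY_PREFIX):]


print(upgrade_result_url("sfdc://username:passwordsecurity_token@hostname/object_name"))
# prints sfdc_v2://username:passwordsecurity_token@hostname/object_name
```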

An example of old workflow result output settings is as follows:

```yaml
+td-result-output-sfdc:
  td>: queries/sample.sql
  database: sample_datasets
  result_connection: your_old_connection_name
  result_settings:
    object: object_name
    mode: append
    concurrency_mode: parallel
    retry: 2
    split_records: 10000
```

An example of new workflow result output settings is as follows:

```yaml
+td-result-output-sfdc:
  td>: queries/sample.sql
  database: sample_datasets
  result_connection: your_new_connection_name
  result_settings:
    object: object_name
    mode: append
    concurrency_mode: parallel
    retry: 2
    split_records: 10000
```