This Treasure Data integration empowers digital sales organizations with modern remote collaboration capabilities for exceptional teamwork and frictionless engagement:

- Find and build stronger relationships.

- Improve productivity and performance.

- Get a single view of customers.

The import integration allows you to ingest contact and transactional data (including quotes and sales orders) from MS Dynamics 365 to Treasure Data.

- Basic Knowledge of Treasure Data.

- Client Credentials authentication: Administration privileges to access Azure Active Directory and Dynamics CRM Security settings.

- OAuth authentication: A tenant administrator or a user with access to Azure "Enterprise applications" to grant consent.

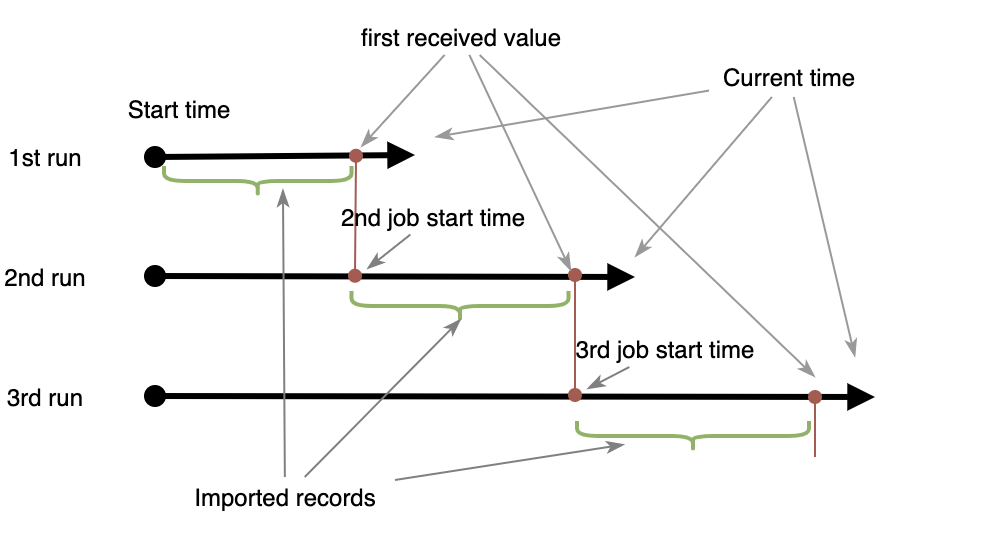

- When incremental loading is enabled, the query issued to the Dynamics API contains the `$filter` and `$orderby` statements. `$filter` selects the data that matches the criteria, and `$orderby` sorts it in descending order.

- The first value received is kept as the reference for the next job's filter. The next job's `$filter` excludes the data from the previous job and fetches new data only.

- The process repeats for subsequent executions.

- When incremental loading is enabled, leave End Time blank (by default, it is set to the current time).

- The filter column (`modifiedon` by default) must not contain null or empty values.

The following is an example of when incremental loading is enabled:

- Assumption/condition

- Start Time = 2021-01-01T00:03:01Z

- Job scheduled to run daily

- 1st job, current time = 2021-01-15T00:03:01Z: `$filter = modifiedon > 2021-01-01T00:03:01Z and modifiedon <= 2021-01-15T00:03:01Z`, `$orderby = modifiedon desc`. The first record in the result has modifiedon = 2021-01-10T00:00:00Z.

- 2nd job, current time = 2021-01-16T00:03:01Z: `$filter = modifiedon > 2021-01-10T00:00:00Z and modifiedon <= 2021-01-16T00:03:01Z`, `$orderby = modifiedon desc`. The first record in the result has modifiedon = 2021-01-16T00:03:01Z.

- 3rd job, current time = 2021-01-17T00:03:01Z: `$filter = modifiedon > 2021-01-16T00:03:01Z and modifiedon <= 2021-01-17T00:03:01Z`, `$orderby = modifiedon desc`. The first record in the result has modifiedon = 2021-01-17T00:00:01Z.

- ...
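The way the filter window advances between jobs can be sketched in Python. This is only an illustration of the behavior described above, not the connector's implementation: `build_query` and `run_job` are hypothetical names, the list of timestamps stands in for the Dynamics API, and the filter string mirrors the notation of the example rather than the exact request that is sent.

```python
from datetime import datetime, timezone

FMT = "%Y-%m-%dT%H:%M:%SZ"


def parse_utc(text):
    """Parse a UTC timestamp written as YYYY-MM-DDThh:mm:ssZ."""
    return datetime.strptime(text, FMT).replace(tzinfo=timezone.utc)


def build_query(column, start, end):
    """Build the filter (exclusive start, inclusive end) and the descending sort."""
    window = (f"{column} > {start.strftime(FMT)} "
              f"and {column} <= {end.strftime(FMT)}")
    return {"$filter": window, "$orderby": f"{column} desc"}


def run_job(column, start, now, records):
    """Simulate one job; `records` is a stand-in list of filter-column values."""
    query = build_query(column, start, now)
    # Newest first, as $orderby ... desc would return them.
    matched = sorted((r for r in records if start < r <= now), reverse=True)
    # The first (newest) value becomes the next job's start; keep the old one if empty.
    next_start = matched[0] if matched else start
    return query, matched, next_start


records = [parse_utc("2021-01-10T00:00:00Z"), parse_utc("2021-01-16T00:03:01Z")]
start = parse_utc("2021-01-01T00:03:01Z")
for now in ("2021-01-15T00:03:01Z", "2021-01-16T00:03:01Z"):
    query, matched, start = run_job("modifiedon", start, parse_utc(now), records)
    print(query["$filter"], "->", len(matched), "record(s)")
```

Each job picks up exactly one of the two sample records, because the second job starts from the newest `modifiedon` value seen by the first.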

These values are necessary to connect using the Client Credentials authentication option. They are optional if you expect to use the OAuth option to authenticate.

Follow the Microsoft documentation to create your client application and get your client ID and client secret:

You should create a custom security role with minimal permission for your registered application. See:

- https://docs.microsoft.com/en-us/previous-versions/dynamicscrm-2016/administering-dynamics-365/dn531130(v=crm.8)

- https://docs.microsoft.com/en-us/previous-versions/dynamicscrm-2016/administering-dynamics-365/dn531090(v=crm.8)

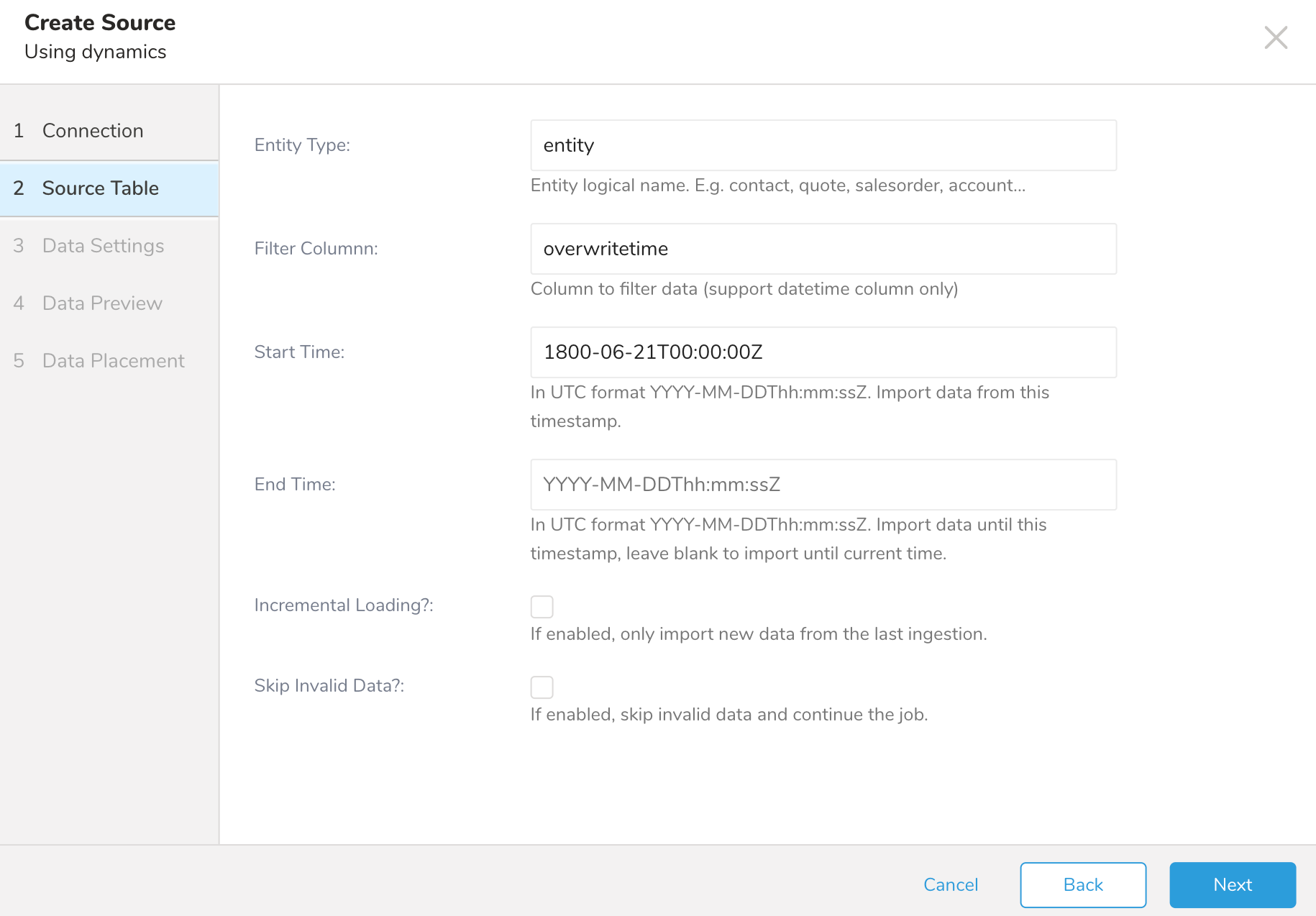

You can fetch all available entities by importing:

- Entity Type = entity

- Filter Column = overwritetime

- Start Time = 1800-06-21T00:00:00Z

This integration supports almost all entities through its generic configuration settings. If you notice an entity that requires specific settings, contact support.

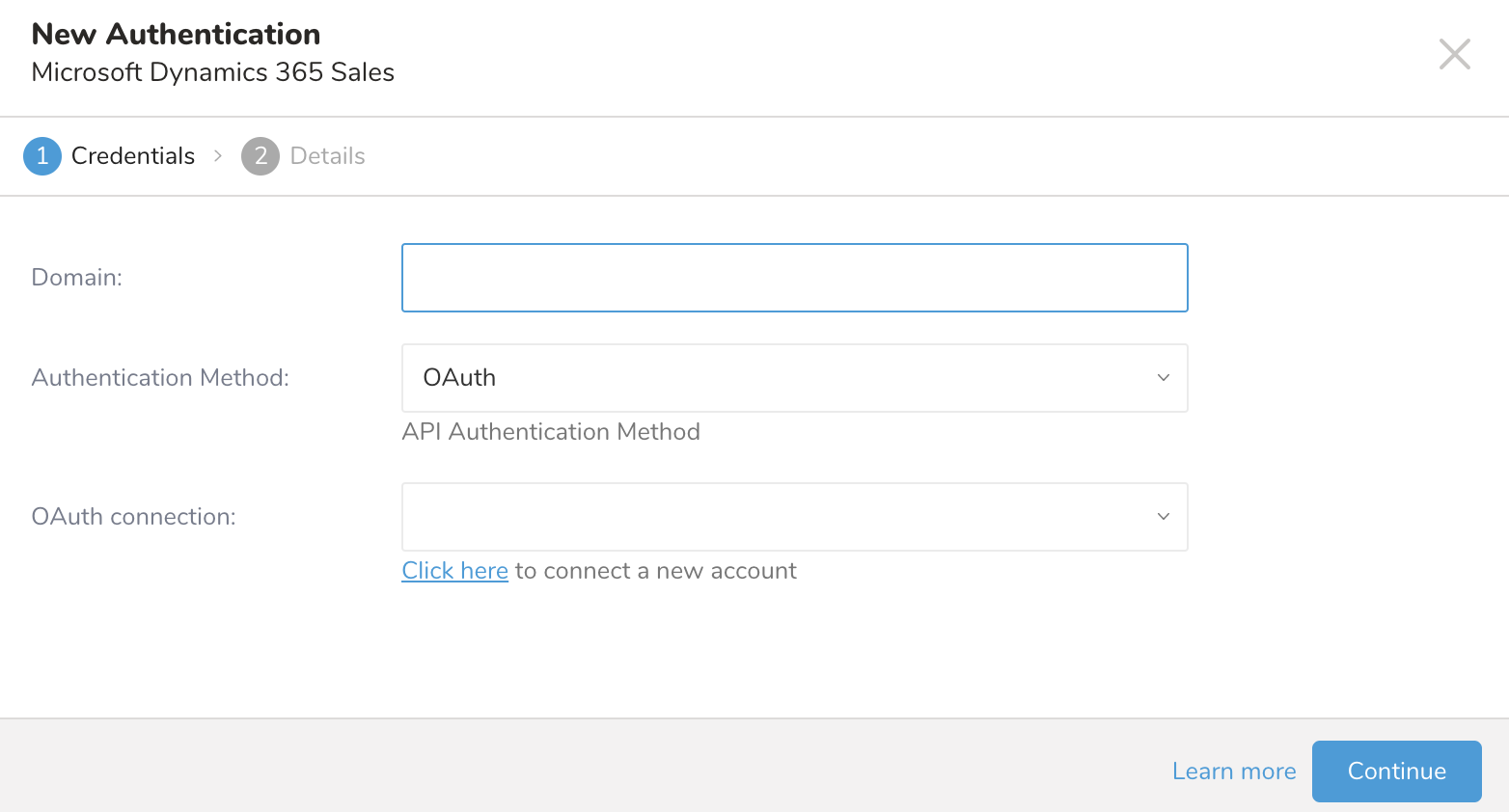

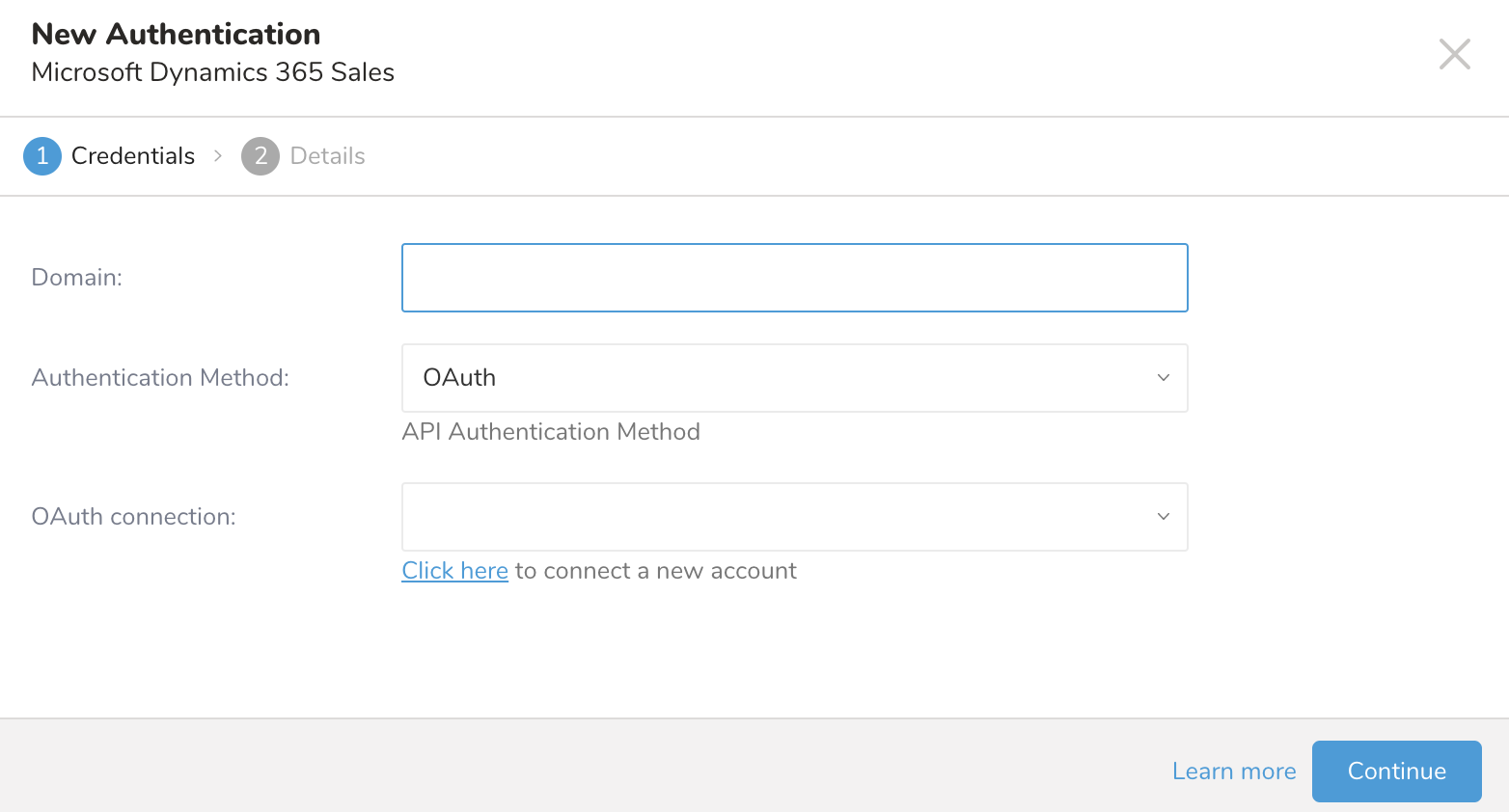

You must create and configure the data connection in Treasure Data before running your query. As part of the data connection, you provide authentication to access the integration.

Open TD Console.

Navigate to Integrations Hub > Catalog.

Search for and select Microsoft Dynamics 365 Sales.

- Select Create Authentication.

Type your MS Dynamics domain name.

Choose one of the following authentication methods:

Select OAuth.

Type the credentials to authenticate.

Optionally, select Click here and log in to Microsoft Dynamics 365 to grant consent.

Return to Integrations Hub > Catalog.

Search for and select Microsoft Dynamics 365 Sales.

Type the value of your Domain.

Select OAuth Authentication Method.

Select your newly created OAuth connection.

Review the OAuth connection field definition.

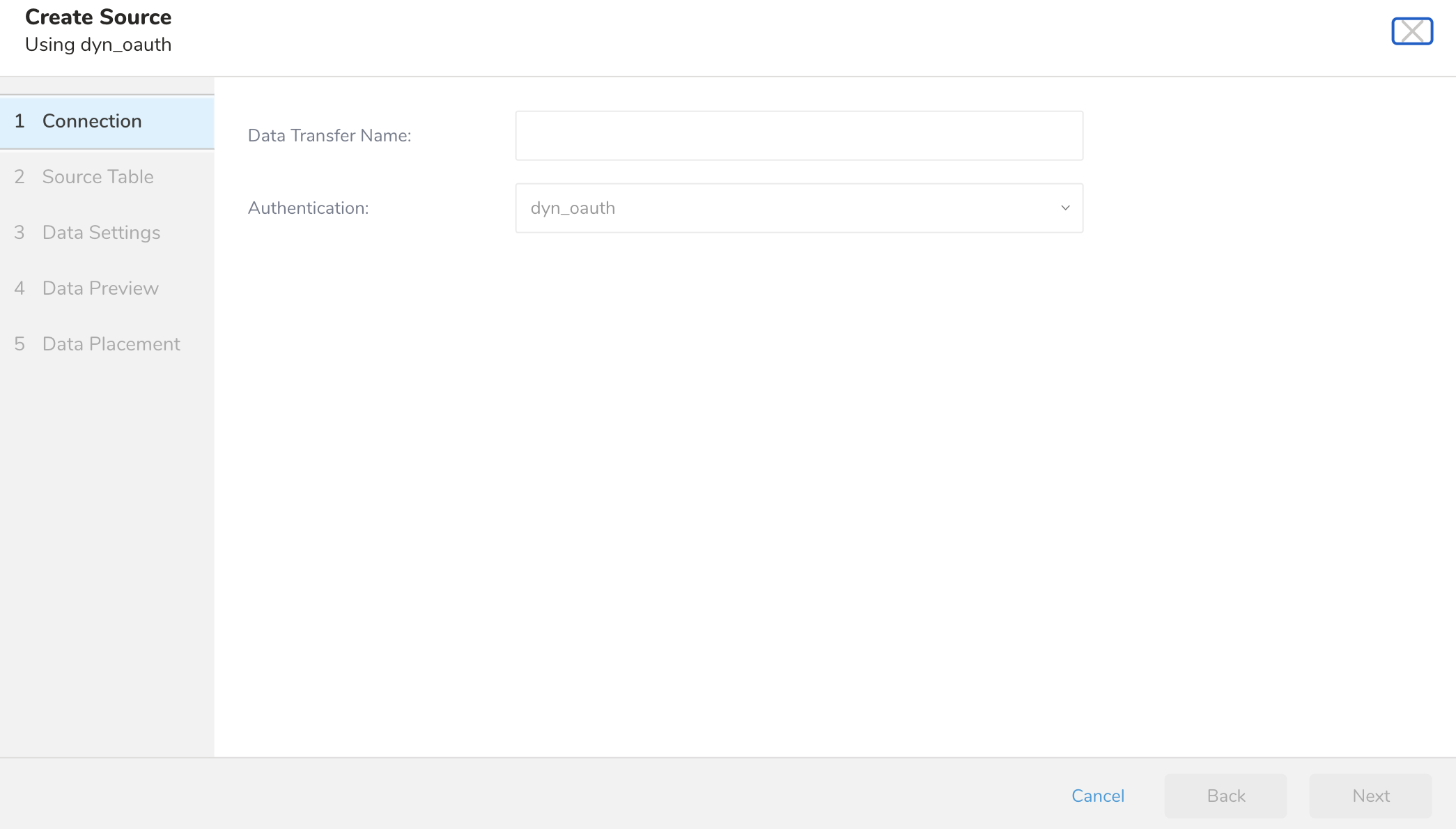

After creating the authenticated connection, you are automatically taken to Authentications.

Search for the connection you created.

Select New Source.

Type a name for your source in the Data Transfer field.

- Select Next.

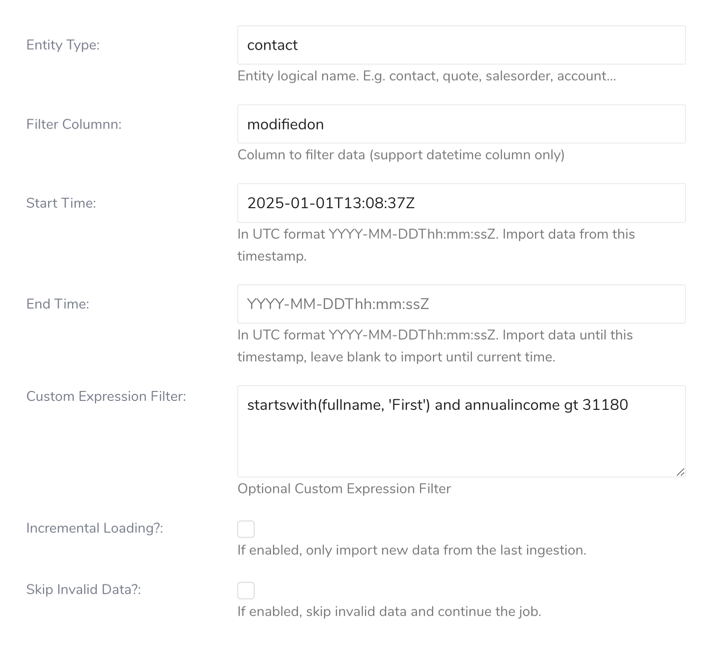

The Source Table dialog opens.

Edit the following parameters:

| Parameter | Description | Required |
|---|---|---|
| Entity Type | Entity logical name, for example contact, sales_order, or account. Use Entity Type = entity to fetch all available entities. | Yes |
| Filter Column | Column used to filter data (supports datetime columns only). | No |
| Start Time | In UTC format YYYY-MM-DDThh:mm:ssZ. Only data modified after this timestamp is imported. Start Time is exclusive: records whose value equals it are not downloaded. To include them, set Start Time one second earlier. End Time, by contrast, is inclusive. | No |
| End Time | In UTC format YYYY-MM-DDThh:mm:ssZ. Only data modified up to and including this timestamp is imported. If not specified, the current time is used. Leave this field blank when incremental loading is enabled. | No |
| Custom Expression Filter | A text area for custom filter expressions that refine the import. The expression is combined with the time-based filter using the AND operator. Leave it empty if you only need time-based filtering. See the section "Building Custom Filter Expressions" below for how to write the expression. | No |
| Incremental Loading? | If enabled, only data that is new since the last ingestion is imported. | No |
| Skip Invalid Data? | When a column value cannot be converted to its data type, the row is skipped. If more than 30% of processed rows are invalid, the job stops with a failed status. | No |
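Because Start Time is exclusive, including records stamped exactly at a given moment means entering a value one second earlier. A minimal Python sketch of that adjustment follows; `inclusive_start` is a hypothetical helper, not part of the integration.

```python
from datetime import datetime, timedelta, timezone

FMT = "%Y-%m-%dT%H:%M:%SZ"


def inclusive_start(first_wanted):
    """Return the Start Time to enter so that `first_wanted` itself is imported."""
    moment = datetime.strptime(first_wanted, FMT).replace(tzinfo=timezone.utc)
    # Start Time is exclusive, so step back one second.
    return (moment - timedelta(seconds=1)).strftime(FMT)


print(inclusive_start("2021-01-01T00:00:00Z"))  # 2020-12-31T23:59:59Z
```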

Basic Syntax

Custom filter expressions consist of:

- Field names (e.g., revenue, classification, status)

- Operators (e.g., eq, ne, gt)

- Values (must be properly formatted based on data type)

Operators

Comparison Operators

- `eq` - Equal to: `status eq 'active'`

- `ne` - Not equal to: `classification ne 'confidential'`

- `gt` - Greater than: `revenue gt 1000000`

- `ge` - Greater than or equal: `priority ge 2`

- `lt` - Less than: `completion lt 100`

- `le` - Less than or equal: `risk_level le 3`

Logical Operators

- `and` - Both conditions must be true: `status eq 'active' and revenue gt 1000000`

- `or` - Either condition can be true: `type eq 'commercial' or type eq 'residential'`

- `not` - Negates the condition: `not (classification eq 'confidential')`

Value Formatting

- Text: single quotes - `'active'`, `'confidential'`

- Numbers: no quotes - `1000000`, `2.5`

- Dates: `datetime'2024-01-08T00:00:00Z'`

- GUIDs: `guid'12345678-1234-1234-1234-123456789012'`
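If filter expressions are generated by a script, the formatting rules above can be captured in a small helper. The following Python sketch uses a hypothetical function, `to_literal`. Doubling an embedded single quote is the usual OData escaping convention and is an assumption here, since this document does not cover it.

```python
from datetime import datetime, timezone
from uuid import UUID


def to_literal(value):
    """Format a Python value as a filter literal following the rules above."""
    if isinstance(value, bool):
        # bool is a subclass of int; booleans are not covered by the rules above.
        raise TypeError("boolean values are not covered by this sketch")
    if isinstance(value, str):
        # Assumption: embedded single quotes are escaped by doubling them.
        return "'" + value.replace("'", "''") + "'"
    if isinstance(value, UUID):
        return f"guid'{value}'"
    if isinstance(value, datetime):
        if value.tzinfo is None:
            raise ValueError("pass a timezone-aware datetime")
        utc = value.astimezone(timezone.utc)
        return "datetime'" + utc.strftime("%Y-%m-%dT%H:%M:%SZ") + "'"
    if isinstance(value, (int, float)):
        return str(value)
    raise TypeError(f"unsupported value type: {type(value).__name__}")


print("status eq " + to_literal("active"))
print("createdon ge " + to_literal(datetime(2024, 1, 8, tzinfo=timezone.utc)))
```

The field name `createdon` in the usage lines is only an example; use the field names of your own entity.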

Functions

- `startswith(fieldname, 'value')` - Checks if the field starts with value

- `endswith(fieldname, 'value')` - Checks if the field ends with value

- `substringof('value', fieldname)` - Checks if the field contains value

Basic Filter

`revenue gt 1000000 and status eq 'active'`

Complex Filter

`(classification ne 'confidential' and building_type ne 'government') or (security_level eq 'public' and revenue gt 5000000)`

Using Functions

`not (startswith(customer_name, 'Gov')) and not (substringof('classified', description))`

- Test your filter using the Dynamics 365 API before using it in the import.

- Start with simple conditions and build up complexity.

- Use parentheses to group conditions clearly.

- Validate that field names match the Dynamics 365 field names exactly.

- Field names are case-sensitive.

- Text values must be in single quotes.

- Date values must be in the correct UTC format.
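Two of the most common mistakes, an unclosed parenthesis and an unterminated text value, can be caught before a job is submitted. The Python sketch below is a hypothetical pre-flight check (`check_filter`), plus a `combine` helper that illustrates how a custom expression is joined to the time-based filter with `and`. The exact string the connector builds is not documented here, so the wrapping parentheses are an assumption.

```python
def check_filter(expression):
    """Return a list of problems found; an empty list means none were detected."""
    problems = []
    depth = 0
    in_quote = False
    for ch in expression:
        if ch == "'":
            in_quote = not in_quote  # a doubled quote toggles twice, so it is a no-op
        elif not in_quote:
            if ch == "(":
                depth += 1
            elif ch == ")":
                depth -= 1
                if depth < 0:
                    problems.append("closing parenthesis without an opening one")
                    depth = 0
    if in_quote:
        problems.append("unterminated single-quoted text value")
    if depth > 0:
        problems.append("unclosed parenthesis")
    return problems


def combine(time_filter, custom_filter):
    """Join the time-based filter and the custom expression with AND."""
    if not custom_filter.strip():
        return time_filter
    return f"({time_filter}) and ({custom_filter})"


print(check_filter("revenue gt 1000000 and status eq 'active"))
print(combine("modifiedon gt datetime'2024-01-08T00:00:00Z'", "status eq 'active'"))
```

This check only looks at quoting and grouping. It does not validate field names or operators, so testing against the Dynamics 365 API is still needed.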

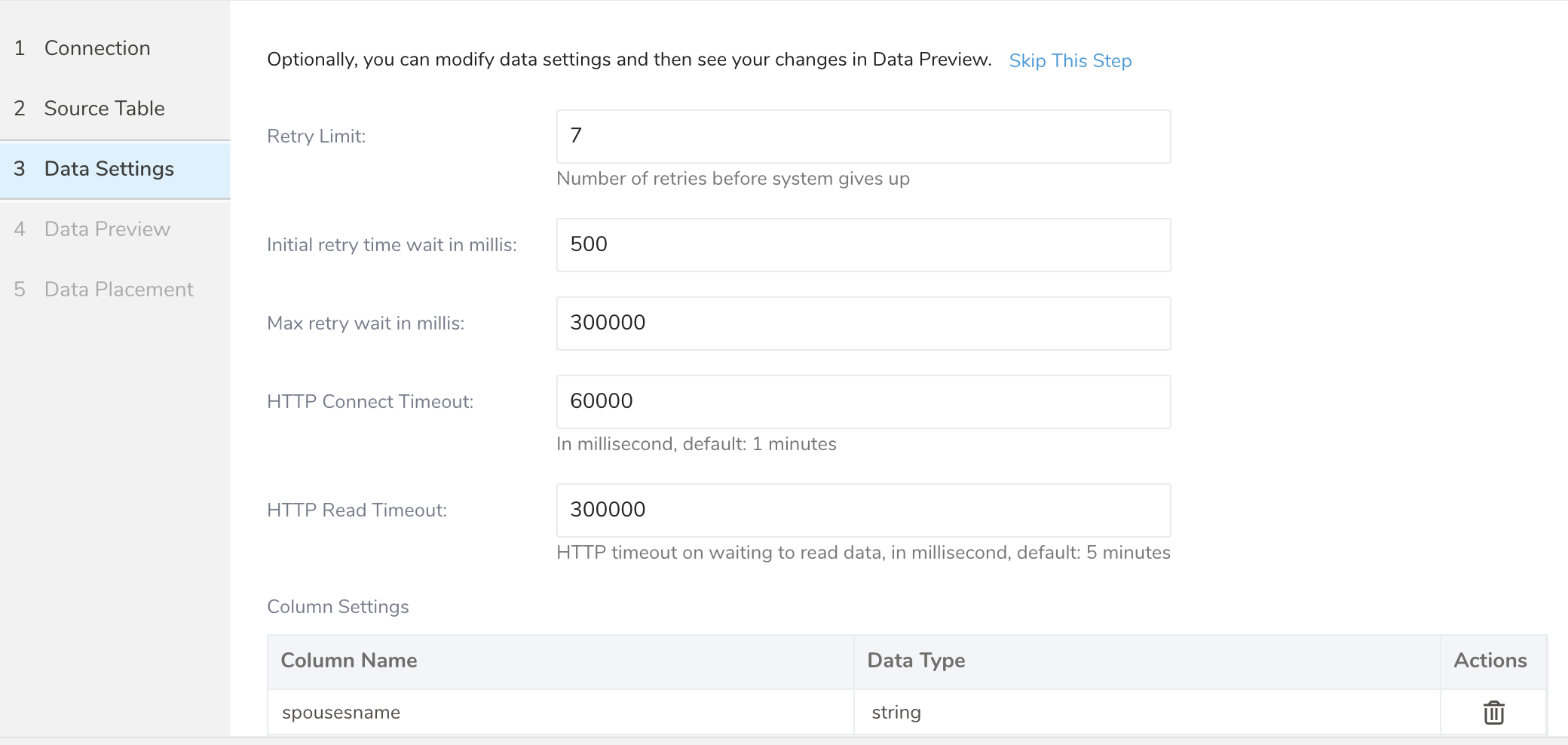

The Data Settings page can be modified to fit your needs, or you can skip the page.

Optionally, edit the following parameters:

| Parameter | Description | Required |
|---|---|---|
| Retry Limit | Maximum number of retries for each API call. | No |
| Initial retry time wait in millis | Wait time before the first retry (in milliseconds). | No |
| Max retry wait in mills | Maximum wait time for an API call before it gives up (in milliseconds). | No |
| HTTP Connect Timeout | The amount of time before the connection times out when making API calls. | No |
| HTTP Read Timeout | The amount of time to wait for data to be read from the response when making API calls. | No |
| Column Settings | You can remove a column from the result or define its data type. Do not change the column name, because doing so results in a null value for that column. | No |
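To show how the three retry settings might interact, here is a Python sketch of a retry loop. It assumes the wait doubles after each failed attempt and is capped at the maximum wait. This document does not state the actual backoff strategy, so treat the doubling as an assumption. `call_with_retries` is a hypothetical name.

```python
import time


def call_with_retries(request, retry_limit, initial_wait_ms, max_wait_ms,
                      sleep=time.sleep):
    """Call `request()` and retry on failure, up to `retry_limit` retries."""
    wait_ms = initial_wait_ms
    retries = 0
    while True:
        try:
            return request()
        except Exception:  # sketch only: real code should catch transient errors
            if retries >= retry_limit:
                raise
            # Assumed strategy: wait, then double the wait up to the cap.
            sleep(min(wait_ms, max_wait_ms) / 1000)
            wait_ms *= 2
            retries += 1


attempts = {"count": 0}


def flaky_call():
    """Stand-in for an API call that fails twice and then succeeds."""
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise RuntimeError("temporary failure")
    return "ok"


waits = []
print(call_with_retries(flaky_call, 7, 500, 800, sleep=waits.append), waits)
```

Passing `waits.append` as the `sleep` argument records the waits instead of actually sleeping, which makes the behavior easy to inspect.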

- Select Next.

You can see a preview of your data before running the import by selecting Generate Preview. Data preview is optional and you can safely skip to the next page of the dialog if you choose to.

- Select Next. The Data Preview page opens.

- If you want to preview your data, select Generate Preview.

- Verify the data.

For data placement, select the target database and table where you want your data placed and indicate how often the import should run.

Select Next. Under Storage, create a new database or select an existing one, and create a new table or select an existing one, to hold the imported data.

Select a Database > Select an existing or Create New Database.

Optionally, type a database name.

Select a Table > Select an existing or Create New Table.

Optionally, type a table name.

Choose the method for importing the data.

- Append (default): Data import results are appended to the table. If the table does not exist, it is created.

- Always Replace: Replaces the entire content of an existing table with the result output of the query. If the table does not exist, a new table is created.

- Replace on New Data: Replaces the entire content of an existing table with the result output only when there is new data.

Select the Timestamp-based Partition Key column. If you want to set a different partition key seed than the default key, you can specify the long or timestamp column as the partitioning time. As a default time column, it uses upload_time with the add_time filter.

Select the Timezone for your data storage.

Under Schedule, you can choose when and how often you want to run this query.

- To run the transfer once:

  - Select Off.

  - Select Scheduling Timezone.

  - Select Create & Run Now.

- To run the transfer on a recurring schedule:

  - Select On.

  - Select the Schedule. The UI provides these four options: @hourly, @daily, @monthly, or custom cron.

  - You can also select Delay Transfer and add a delay of execution time.

  - Select Scheduling Timezone.

  - Select Create & Run Now.

After your transfer has run, you can see the results of your transfer in Data Workbench > Databases.

You can import data from MS Dynamics 365 Sales by using the `td_load>:` workflow operator. If you have already created a source, you can run it with this operator.

Identify your source.

To obtain a unique ID, open the Source list and then filter by MS Dynamics 365 Sales.

Open the menu and select Copy Unique ID.

- Define a workflow task using the `td_load>` operator.

```yaml
+load:
  td_load>: unique_id_of_your_source
  database: ${td.dest_db}
  table: ${td.dest_table}
```

- Run the workflow.