With its columnar data format, Parquet is particularly beneficial for analytics workloads in which queries often access only a subset of a dataset's columns, which makes it a preferred option in many data pipeline strategies.

This TD export integration lets you generate Parquet files from Treasure Data job results and upload them directly to Azure Data Lake Storage.

- Basic knowledge of Treasure Data.

- Basic knowledge of Azure Data Lake Storage.

- Azure Data Lake Storage Account configured with Hierarchical Namespace enabled.

- An Azure Data Lake access key or Shared Access Signature. For more details, refer to Obtain Azure Data Lake Credentials.

- The export integration supports only account-level or container-level Shared Access Signatures. Folder-level or directory-level Shared Access Signatures are not supported.
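
The Shared Access Signature restriction above can be checked before a token is pasted into TD Console. The following is a minimal sketch, not part of the integration: it assumes the standard Azure SAS query parameters (`ss` and `srt` on account-level tokens, `sr=c` for a container and `sr=d` for a directory on service-level tokens), and the function names are hypothetical.

```python
from urllib.parse import parse_qs


def sas_scope(sas_token: str) -> str:
    """Classify a SAS token as 'account', 'container', 'directory', or 'other'."""
    # Tokens copied from the portal may start with '?'; strip it before parsing.
    params = parse_qs(sas_token.lstrip("?"))
    # Assumption: account-level tokens carry signed services (ss)
    # and signed resource types (srt).
    if "ss" in params and "srt" in params:
        return "account"
    # Assumption: service-level tokens carry a signed resource (sr),
    # where c = container and d = directory.
    resource = params.get("sr", [""])[0]
    return {"c": "container", "d": "directory"}.get(resource, "other")


def is_supported_for_export(sas_token: str) -> bool:
    """Return True only for the account- and container-level scopes."""
    return sas_scope(sas_token) in {"account", "container"}
```

A directory-level token is classified as `directory` and rejected, which matches the limitation stated in the prerequisites.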

On TD Console, you must create and configure the data connection before running your query. As part of the data connection, you provide authentication to access the integration by following the steps below.

- Open TD Console

- Navigate to Integrations Hub > Catalog

- Search for your integration and select Azure Data Lake

- Select Create Authentication, and enter credential information for the integration

The Authentication fields

| Parameter | Description |
|---|---|
| Authentication Mode | Choose one of the two supported authentication modes: Access Key or Shared Access Signature. |
| Account Name | The name of the Azure storage account that has hierarchical namespace enabled. |
| Account Key | The account key obtained from the Azure platform. Used when Authentication Mode is Access Key. See Obtain Azure Data Lake Credentials for more details. |
| SAS Token | The SAS token obtained from the Azure platform. Used when Authentication Mode is Shared Access Signature. See Obtain Azure Data Lake Credentials for more details. |
| Proxy Type | Select HTTP if the integration connects to Azure Data Lake Storage through an HTTP proxy. |
| Proxy Host | The proxy host, when Proxy Type is HTTP. |
| Proxy Port | The proxy port, when Proxy Type is HTTP. |
| Proxy Username | The proxy username, when Proxy Type is HTTP. |
| Proxy Password | The proxy password, when Proxy Type is HTTP. |
| On Premises | Ignore this parameter; it does not apply to the export integration. |

- Select Continue.

- Enter a name for the Authentication, and select Done.

You can also send segment data to the target platform by creating an activation in the Audience Studio.

- Navigate to Audience Studio.

- Select a parent segment.

- Open the target segment, right-click, and then select Create Activation.

- In the Details panel, enter an Activation name and configure the activation according to the previous section on Configuration Parameters.

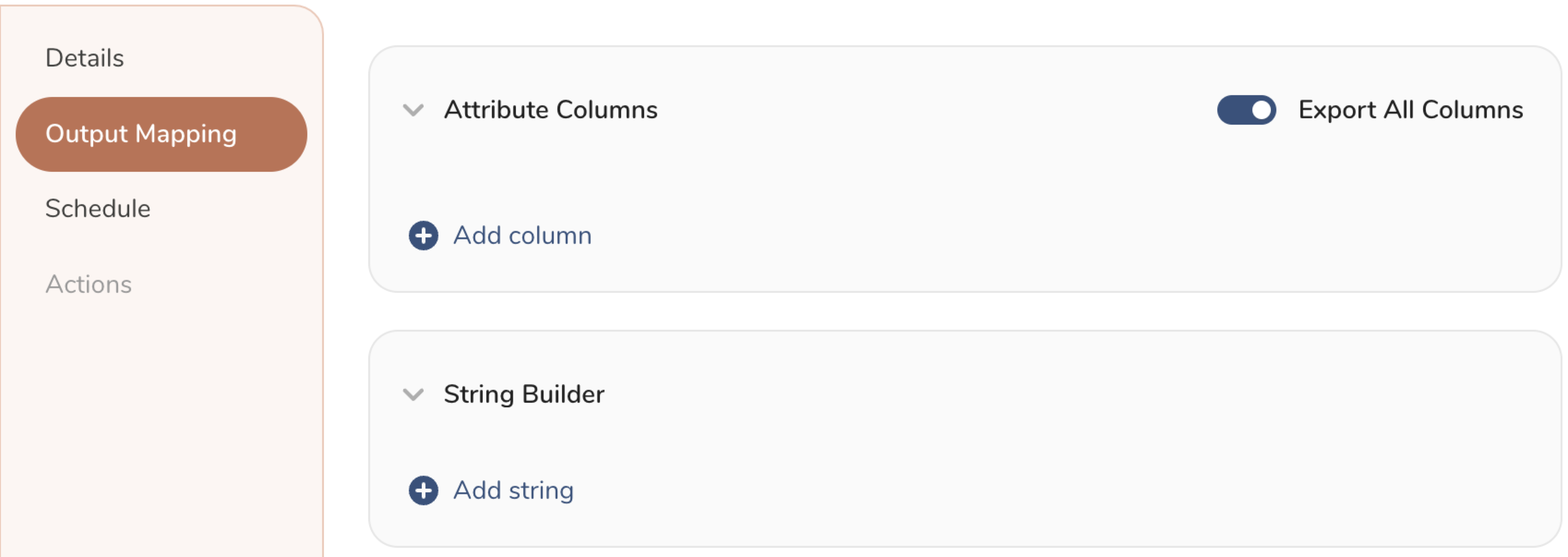

- Customize the activation output in the Output Mapping panel.

  - Attribute Columns

    - Select Export All Columns to export all columns without making any changes.

    - Select + Add Columns to add specific columns for the export. The Output Column Name is pre-populated with the Source column name, and you can update it. Continue to select + Add Columns to add more columns to your activation output.

  - String Builder

    - Select + Add string to create strings for export. Choose from the following values:

      - String: Choose any value; use text to create a custom value.

      - Timestamp: The date and time of the export.

      - Segment Id: The segment ID number.

      - Segment Name: The segment name.

      - Audience Id: The parent segment number.

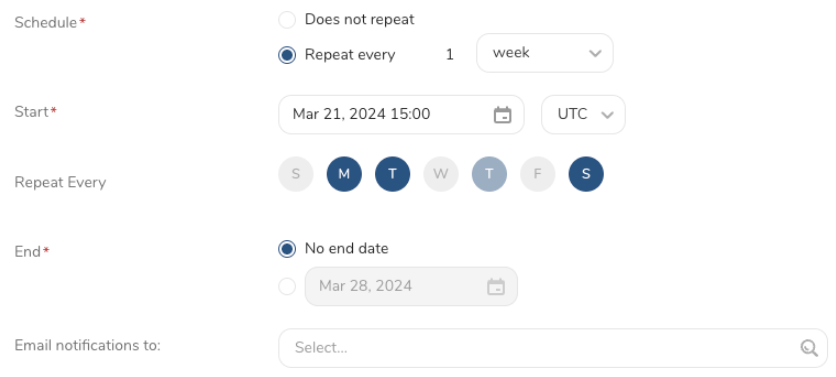

- Set a Schedule.

- Select the values to define your schedule and optionally include email notifications.

- Select Create.

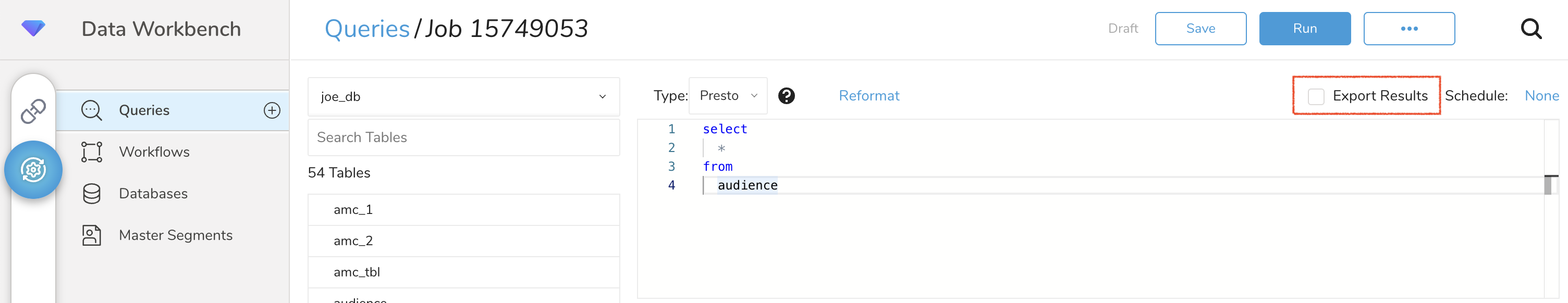

TD Console supports multiple ways to export data. Perform the following steps to export data from the Data Workbench.

- Navigate to Data Workbench > Queries.

- Select New Query, and define your query.

- Select Export Results to configure the data export.

- Select an existing authentication, or create a new one as described in the section above.

- Configure the export parameters, and select Done.

- Optionally, configure a schedule to export data periodically to the specified destination. For more details, see Scheduling Jobs on TD Console.

The Configuration fields

| Field | Description |
|---|---|
| Container name | The name of an existing Azure storage container. |
| File path | The export location in Azure Storage, including a file name and the parquet extension, for example, "folder/file.parquet". |
| Overwrite existing file? | If checked, an existing file at the same path is overwritten. |
| Parquet compression | The supported Parquet compression types: Uncompressed, Gzip, or Snappy. |
| Row group size (MB) | Parquet compression algorithms are applied per row group, so the larger the row group size, the more opportunities to compress the data. Use this field to fine-tune the export based on the actual data schema. Min: 10 MB, max: 1024 MB, default: 128 MB. |
| Page size (KB) | The smallest unit that must be read fully to access a single record. If this value is too small, compression deteriorates. Use this field to fine-tune the export based on the actual data schema. Min: 8 KB, max: 2048 KB, default: 1024 KB. |
| Single file? | When enabled, a single file is created; otherwise, multiple files are generated based on the row group size. Note that the output file size varies if compression is enabled. |
| Advanced configuration | Enable this option to adjust the advanced configuration below. |
| Timestamp Unit | The timestamp unit, either milliseconds or microseconds. Default: milliseconds. |
| Enable Bloom filter | A Bloom filter is a space-efficient structure that approximates set membership, offering a definitive "no" or a probable "yes" answer. Enable this option to include a Bloom filter in the generated Parquet files. |
| Retry limit | The maximum number of retries. Min: 1, max: 10, default: 5. |
| Retry Wait (second) | The time to wait before retrying. Min: 1 second, max: 300 seconds, default: 3 seconds. |
| Number of concurrent threads | The number of concurrent requests to the Azure Storage service. Min: 1, max: 8, default: 4. |
| Part size (MB) | Azure Storage supports uploading files in small chunks; this is the request buffer size. Min: 1 MB, max: 100 MB, default: 8 MB. |
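
The numeric limits in the table can be checked before an export is submitted. The following is a minimal sketch that copies the minimum, maximum, and default values from the table; the helper names `check_settings` and `with_defaults` are hypothetical, and the dictionary keys are the field labels from the table, not option names accepted by the integration.

```python
# (min, max, default) for each numeric field, copied from the table above.
LIMITS = {
    "Row group size (MB)": (10, 1024, 128),
    "Page size (KB)": (8, 2048, 1024),
    "Retry limit": (1, 10, 5),
    "Retry Wait (second)": (1, 300, 3),
    "Number of concurrent threads": (1, 8, 4),
    "Part size (MB)": (1, 100, 8),
}


def with_defaults(settings: dict) -> dict:
    """Fill in the documented default for any field the caller left out."""
    merged = {field: default for field, (_lo, _hi, default) in LIMITS.items()}
    merged.update(settings)
    return merged


def check_settings(settings: dict) -> list:
    """Return a list of problems; an empty list means every value is in range."""
    problems = []
    for field, value in settings.items():
        if field not in LIMITS:
            problems.append(f"{field}: not a known numeric field")
            continue
        low, high, _default = LIMITS[field]
        if not low <= value <= high:
            problems.append(f"{field}: {value} is outside {low}-{high}")
    return problems
```

For example, `check_settings({"Page size (KB)": 4})` reports one problem, because 4 KB is below the documented 8 KB minimum.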

You can also use the CLI (Toolbelt) to export query results to Azure Data Lake in Parquet format. Specify the export settings for your Azure Data Lake Storage in the --result option of the td query command. For details about the td query command, see this article.

The option value is JSON, with the following general structure.

{"type": "azure_datalake", "authentication_mode": "account_key", "account_name": "demo_account_name", "proxy_type": "none", "container_name": "demo_container_name", "file_path": "joetest/test-3.parquet", "overwrite_file": "true", "compression": "uncompressed", "row_group_size": "128", "page_size": 1024, "single_file": "false", "advanced_configuration": "false"}

Example for Usage
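
When the --result value is generated by a script rather than typed by hand, serializing a dictionary avoids JSON quoting mistakes. The following is a minimal sketch: the keys and values are copied from the td query example in this section, the function names are hypothetical, and the command is only assembled as a string, not executed.

```python
import json
import shlex


def build_result_option(account_name: str, account_key: str,
                        container_name: str, file_path: str) -> str:
    """Serialize the export settings into the JSON string passed to --result."""
    settings = {
        "type": "azure_datalake",
        "authentication_mode": "account_key",
        "account_name": account_name,
        "account_key": account_key,
        "proxy_type": "none",
        "container_name": container_name,
        "file_path": file_path,
        "overwrite_file": "false",
        "compression": "uncompressed",
        "row_group_size": 128,
        "page_size": 1024,
        "single_file": True,
        "advanced_configuration": False,
    }
    return json.dumps(settings)


def build_td_command(result_option: str, database: str, query: str) -> str:
    """Return a shell-quoted td query command line; nothing is run here."""
    args = ["td", "query", "--result", result_option,
            "-d", database, query, "-T", "presto"]
    return shlex.join(args)
```

`shlex.join` quotes the JSON and the SQL text so that the resulting line can be pasted into a POSIX shell as a single command.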

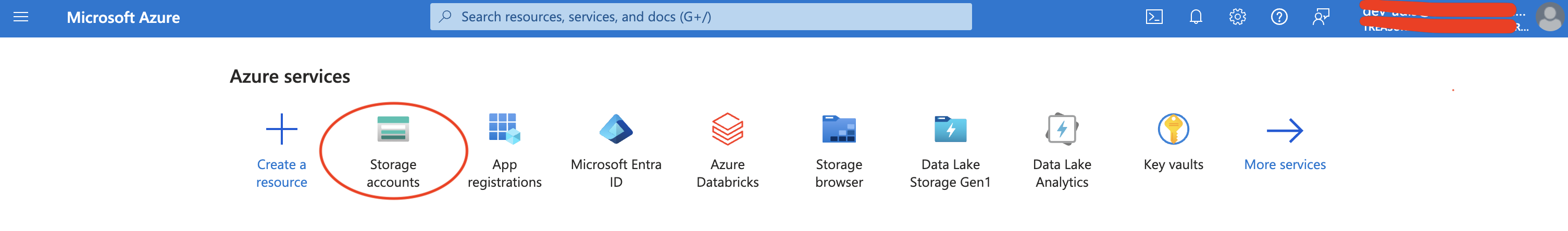

td query --result '{"type":"azure_datalake","authentication_mode":"account_key","account_name": "account name", "account_key": "account key", "proxy_type": "none", "container_name": "container", "file_path": "joe/test.parquet", "overwrite_file": "false", "compression": "uncompressed", "row_group_size": 128, "page_size": 1024, "single_file": true, "advanced_configuration": false}' -d sample_datasets "select * from sample_table" -T presto

Log in to the Microsoft Azure Portal and select Storage accounts.

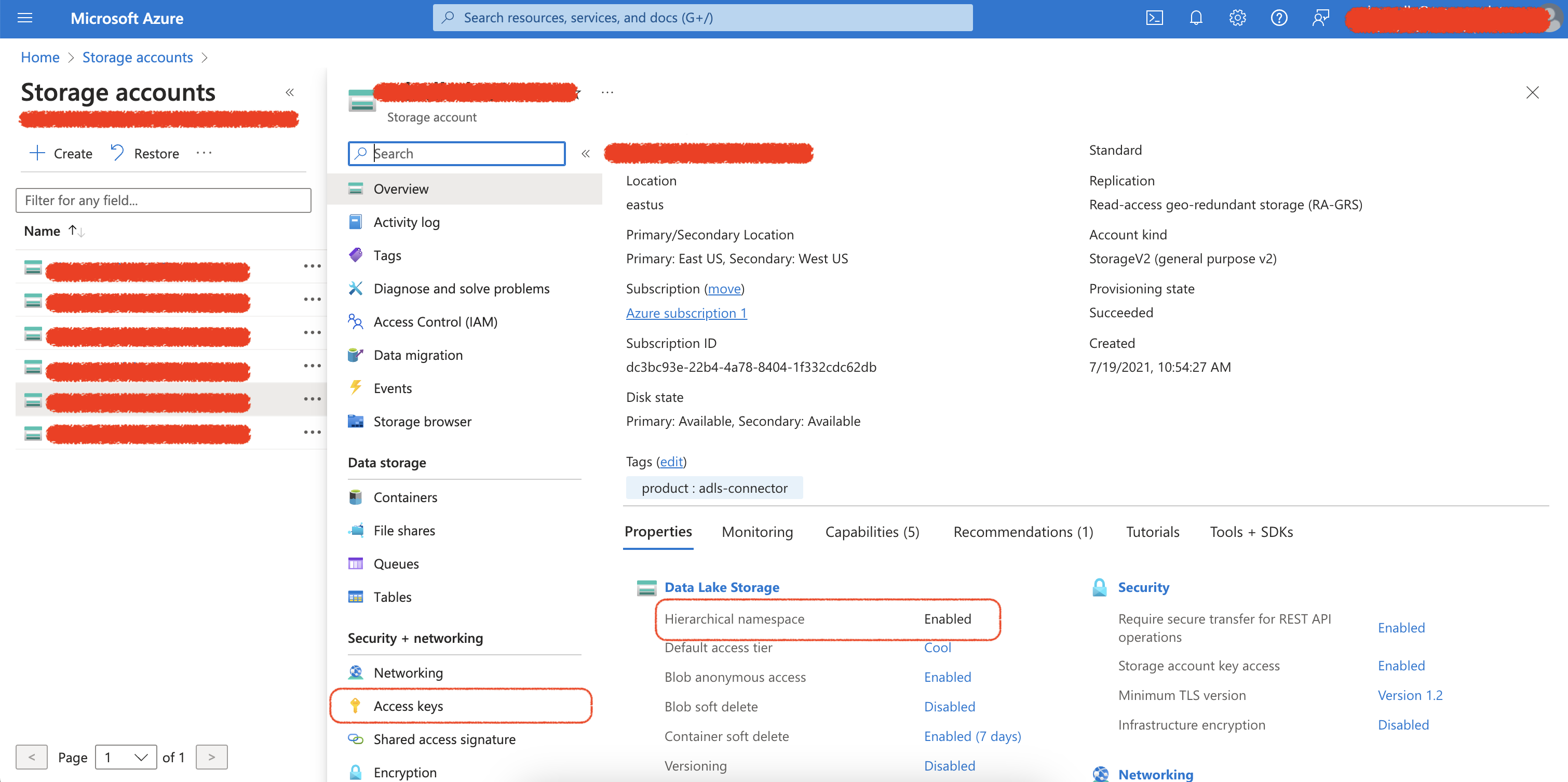

Select your storage account and make sure that the hierarchical namespace option is enabled.

To use the Access Key for the integration:

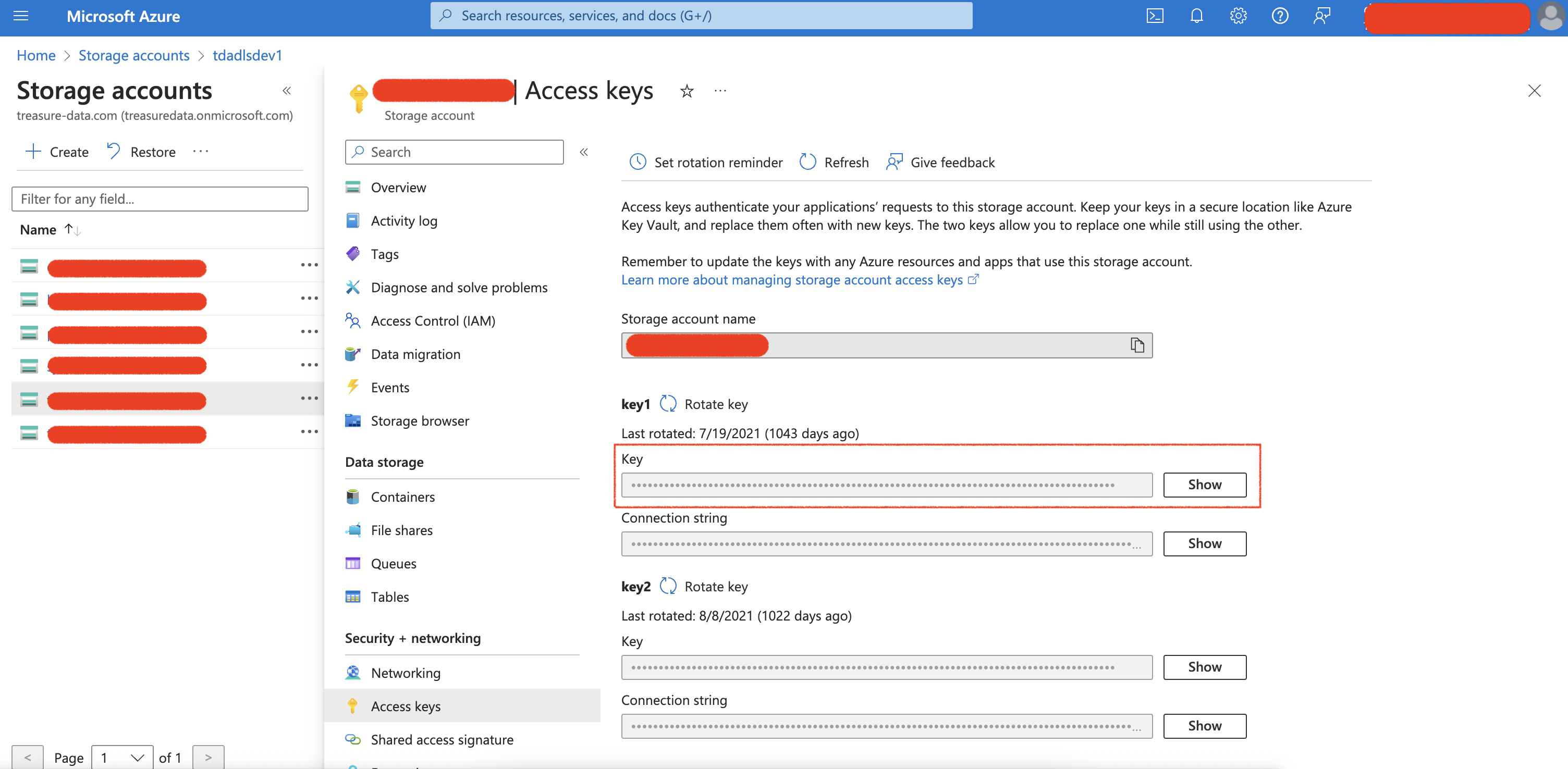

- Select Access keys from the Security + networking menu.

- Select the Show button and copy the access key.

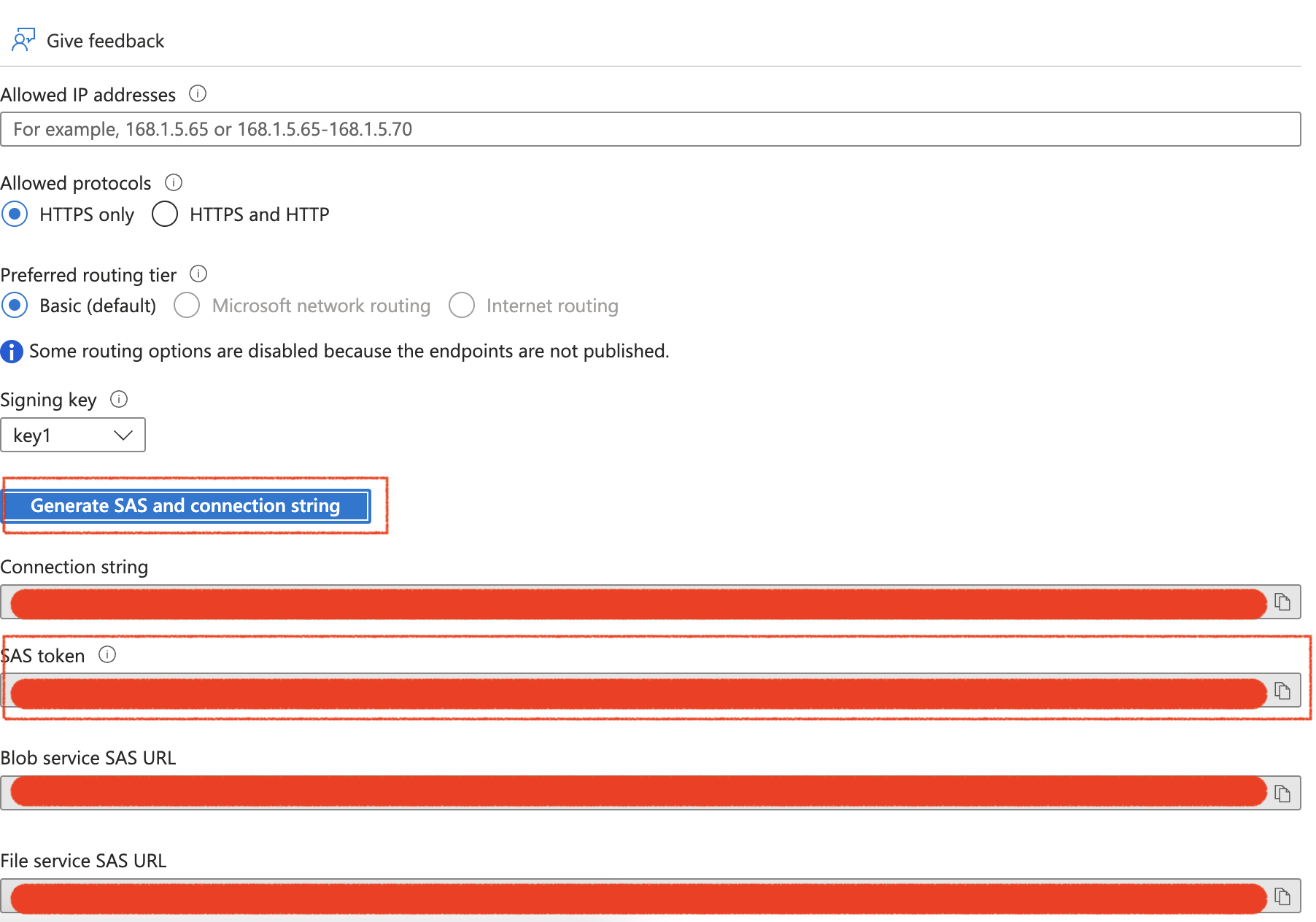

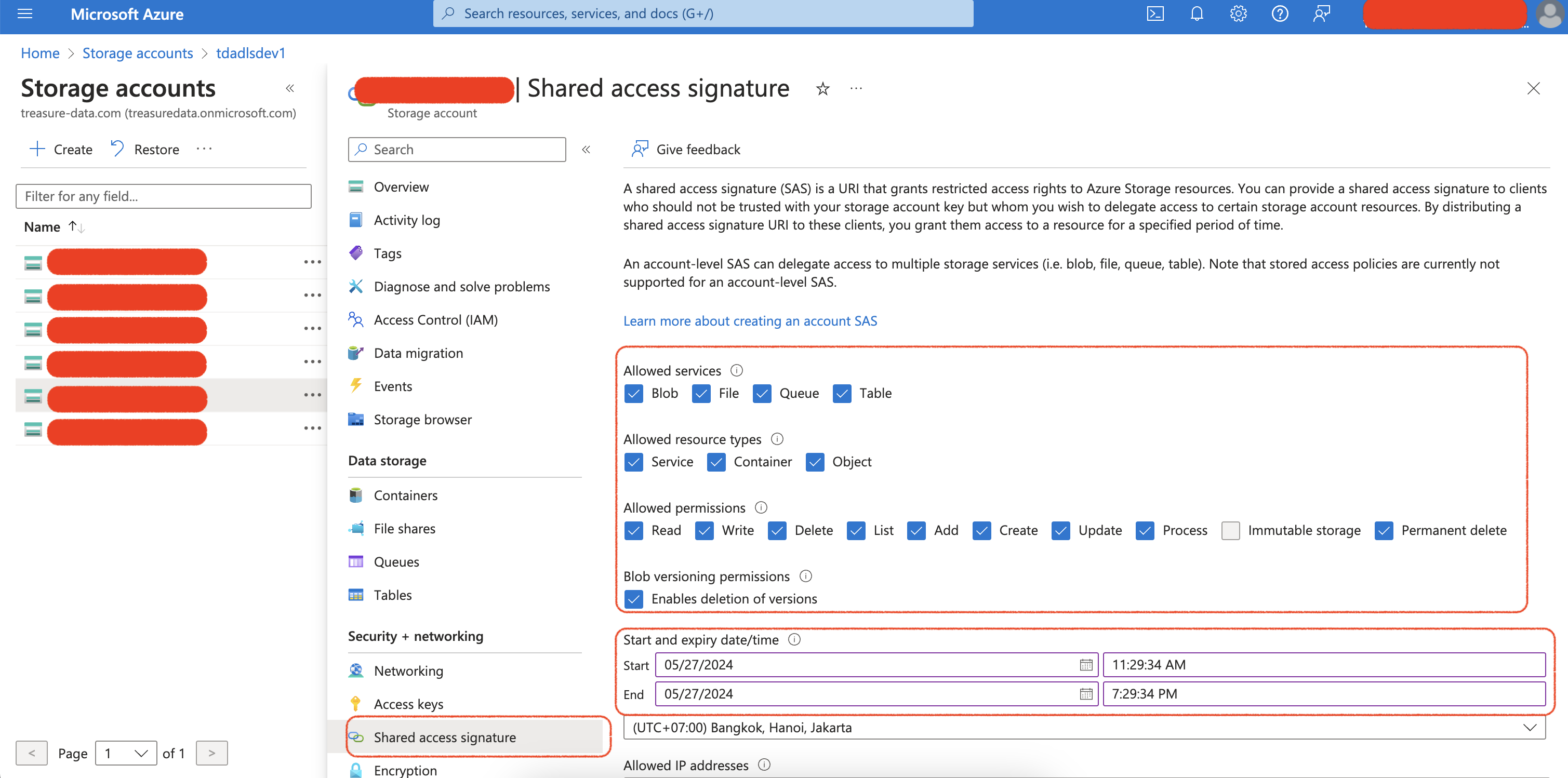

To use the Shared access signature for the integration:

- Select the Shared access signature from the Security + networking menu.

- Configure the permissions and expiration date for the shared access signature.

- Select the Generate SAS and connection string button, and copy the SAS token.
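
Because the expiration date is embedded in the token, a scheduled export can start failing once the token lapses. The following is a minimal sketch for reading that date from a copied token; it assumes the standard Azure SAS `se` (signed expiry) query parameter holding an ISO 8601 UTC timestamp, and the function names are hypothetical.

```python
from datetime import datetime, timezone
from typing import Optional
from urllib.parse import parse_qs


def sas_expiry(sas_token: str) -> Optional[datetime]:
    """Return the token's signed expiry (se) as a UTC datetime, or None if absent."""
    params = parse_qs(sas_token.lstrip("?"))
    raw = params.get("se", [None])[0]
    if raw is None:
        return None
    # Older Python versions reject a trailing 'Z', so rewrite it as an offset.
    parsed = datetime.fromisoformat(raw.replace("Z", "+00:00"))
    if parsed.tzinfo is None:
        # Assumption: a value without an offset is already in UTC.
        parsed = parsed.replace(tzinfo=timezone.utc)
    return parsed.astimezone(timezone.utc)


def is_expired(sas_token: str, now: Optional[datetime] = None) -> bool:
    """Return True if the token carries an expiry that is not in the future."""
    expiry = sas_expiry(sas_token)
    if expiry is None:
        return False
    current = now or datetime.now(timezone.utc)
    return current >= expiry
```

Passing an explicit `now` makes the check easy to test and lets a scheduler warn some days before the token actually expires.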