Learn more about Mailchimp Export Integration.

You can use this data connector to import List Members, Member Activity, Campaigns, and Lists data into Treasure Data.

You can use the same connection to create and update Mailchimp List with Treasure Data.

- Basic knowledge of Treasure Data, including the TD Toolbelt.

- A MailChimp account that can grant permissions to Treasure Data.

Each MailChimp user account is permitted up to 10 simultaneous HTTP connections. Currently, there are no options to raise this limit on a per-customer basis.

When running simultaneous jobs, ensure that the total number of HTTP connections for all simultaneous jobs doesn't exceed 10 HTTP connections. Otherwise, all jobs will fail. MailChimp flushes all HTTP connections of a user account when the 10 HTTP connections limit is exceeded.

Use Batch Operations to import Member Activity can reduce requests to MailChimp API server and thus reduce rate limit. See import Member Activity

Go to Integrations Hub -> Catalog and search and select the Mailchimp tile.

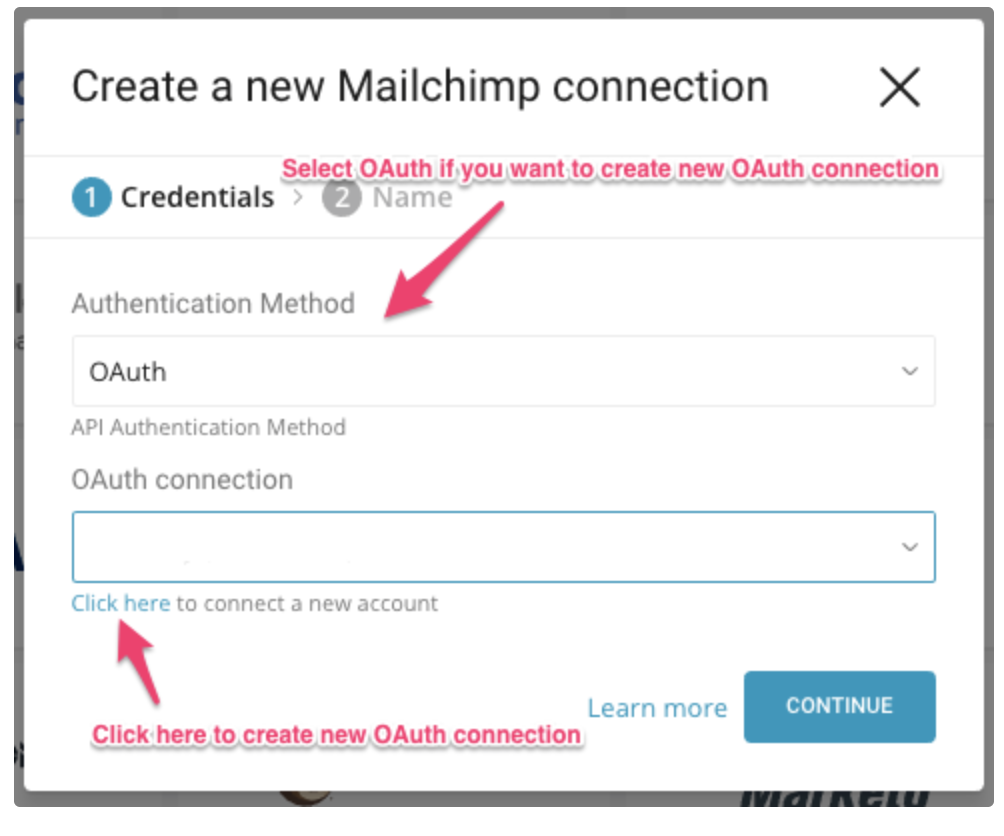

A dialog opens in which you provide the required credentials. Specify an Authentication Method.

The method you use to authenticate Treasure Data with MailChimp affects the steps you take to enable the data connector. You can choose to authenticate using:

- API Key

- OAuth

You can specify the MailChimp API Key and credential to authorize Treasure Data access. The API key grants full access to your Mailchimp account.

Note that OAuth method is currently not supported for JP and IDCF customers.

You can select an existing OAuth connection for MailChimp from the drop-down.

Or you can select the link under OAuth connection to create a new one.

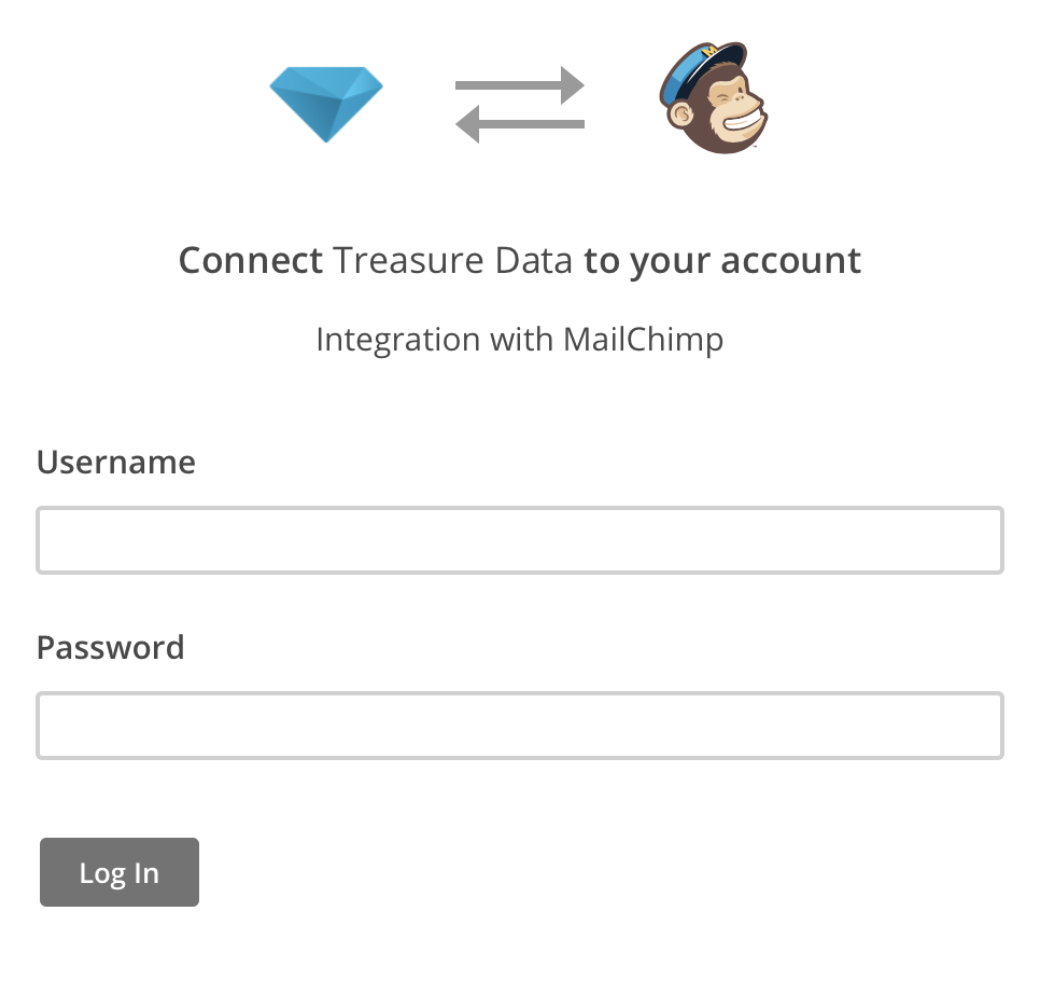

When you select Click here to connect a new account, you must sign into your MailChimp account in popup window

By signing into MailChimp, you are authenticating. The action of signing into Mailchimp generates an OAuth authentication.

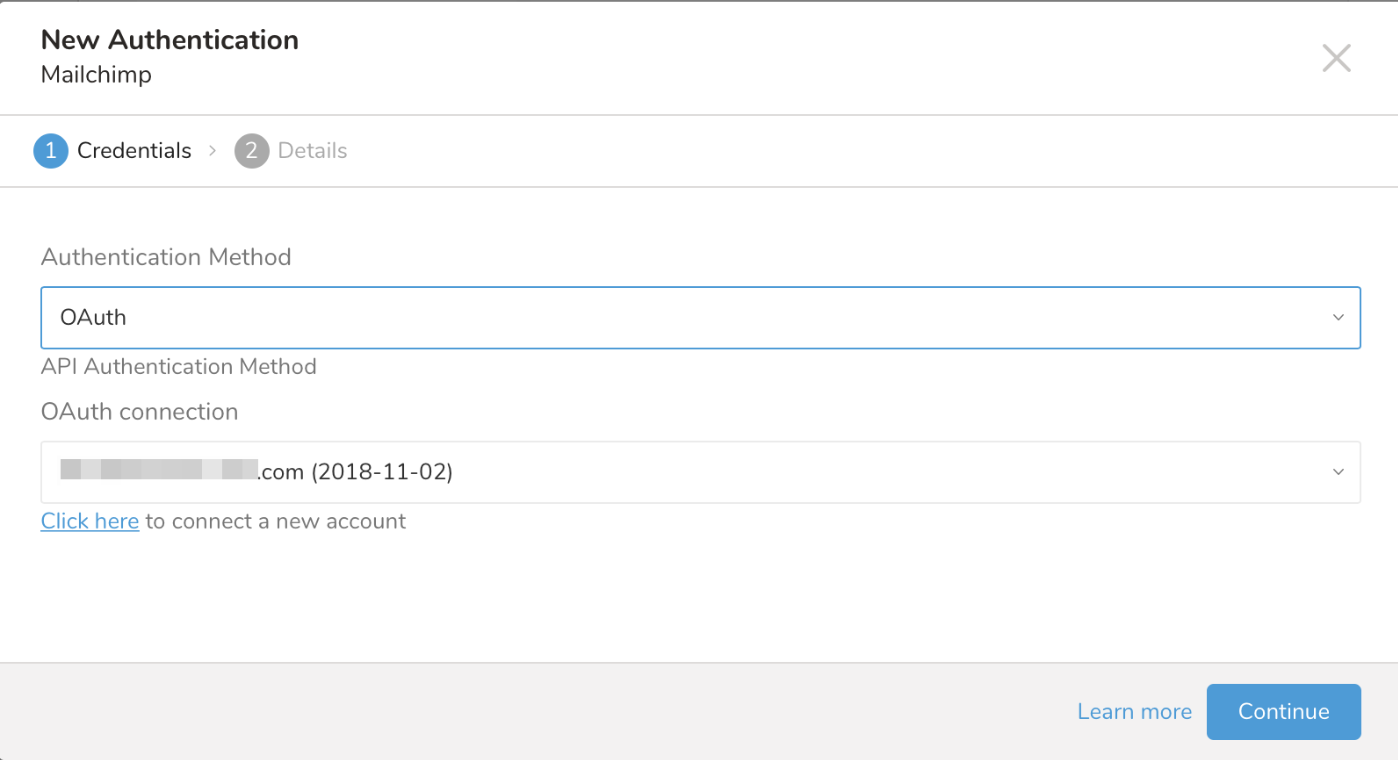

You are redirected back to the Treasure Data Catalog page. Repeat the first step (Create a New Connection) and choose your new OAuth connection, then finish creating your connection.

You now have an authenticated connector that you use to complete configuration for input (to Treasure Data) of data in the next step.

Select Continue. Provide a name for your connector. You have completed the first part of creating a connector.

After creating the authenticated connection, you are automatically taken to the Authentications tab. Look for the connection you created and select New Source.

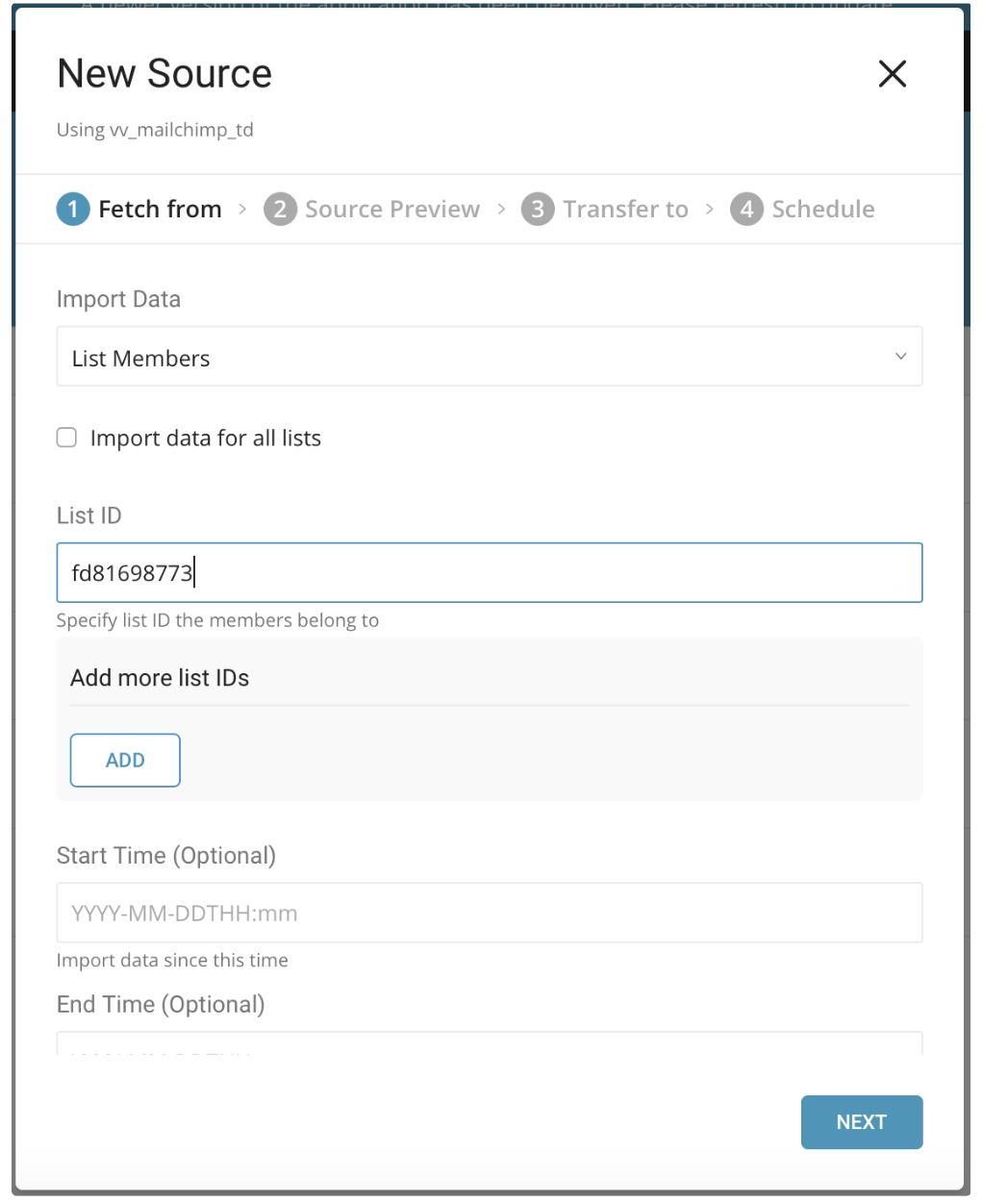

In the New Source dialog, choose List Members for the Import Data, choose whether to import data for all or specific lists, and enter the Start Time (Optional) and End Time (Optional), then select Next.

When Incremental loading option is checked, it incrementally imports members whose info updated since the last import.

| Parameter | Description |

|---|---|

| Import data for all lists | When the option is checked, import data for all lists |

| List ID | A valid MailChimp list id |

| Add more list IDs | Enter more list ids if needed |

| Start Time | Import members whose info updated since this time (Inclusive) |

| End Time | Import members whose info updated before this time (Exclusive) |

Next, you see a Preview of your list member data similar to the following dialog. Select Next.

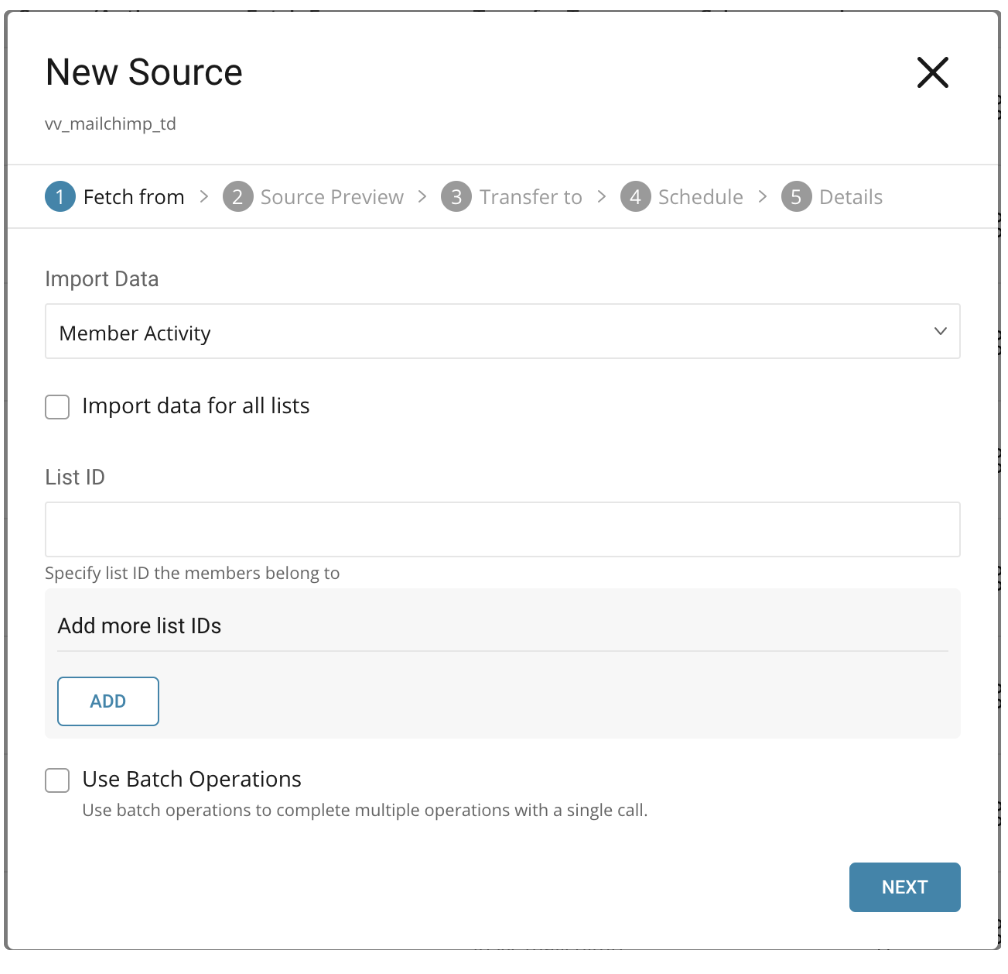

In the New Source dialog, choose Member Activity for the Import Data, and choose whether to import data for all or specific lists, and then select Next.

Only last 50 events of a member’s activity are available to be imported.

| Parameter | Description |

|---|---|

| Import data for all lists | When the option is checked, import data for all lists |

| List ID | A valid MailChimp list id |

| Add more list IDs | Enter more list ids if needed |

| Use Batch Operations | When import 10.000 member's activity, there will be 10.000 requests needed. User batch operations to group the requests and send them at once. |

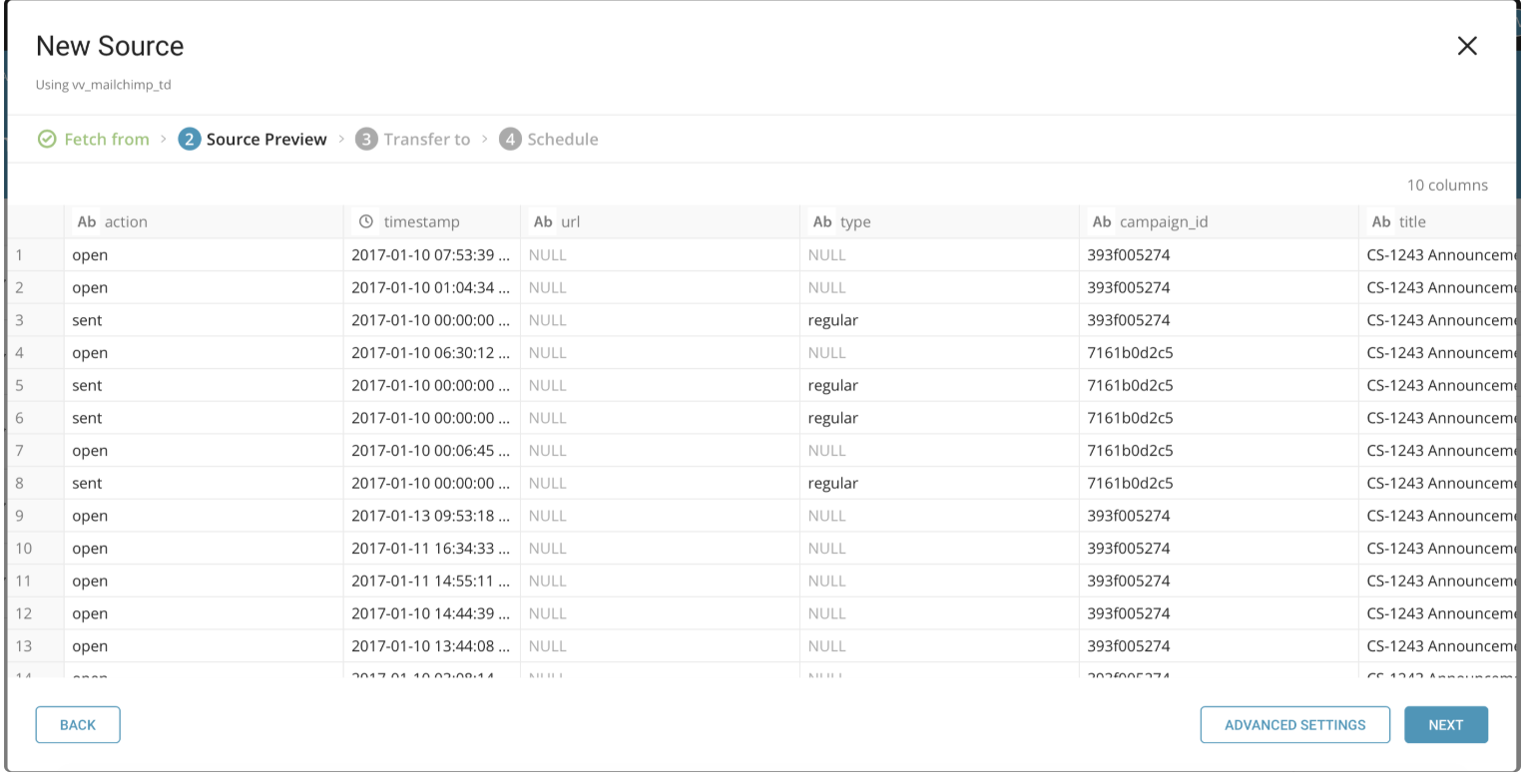

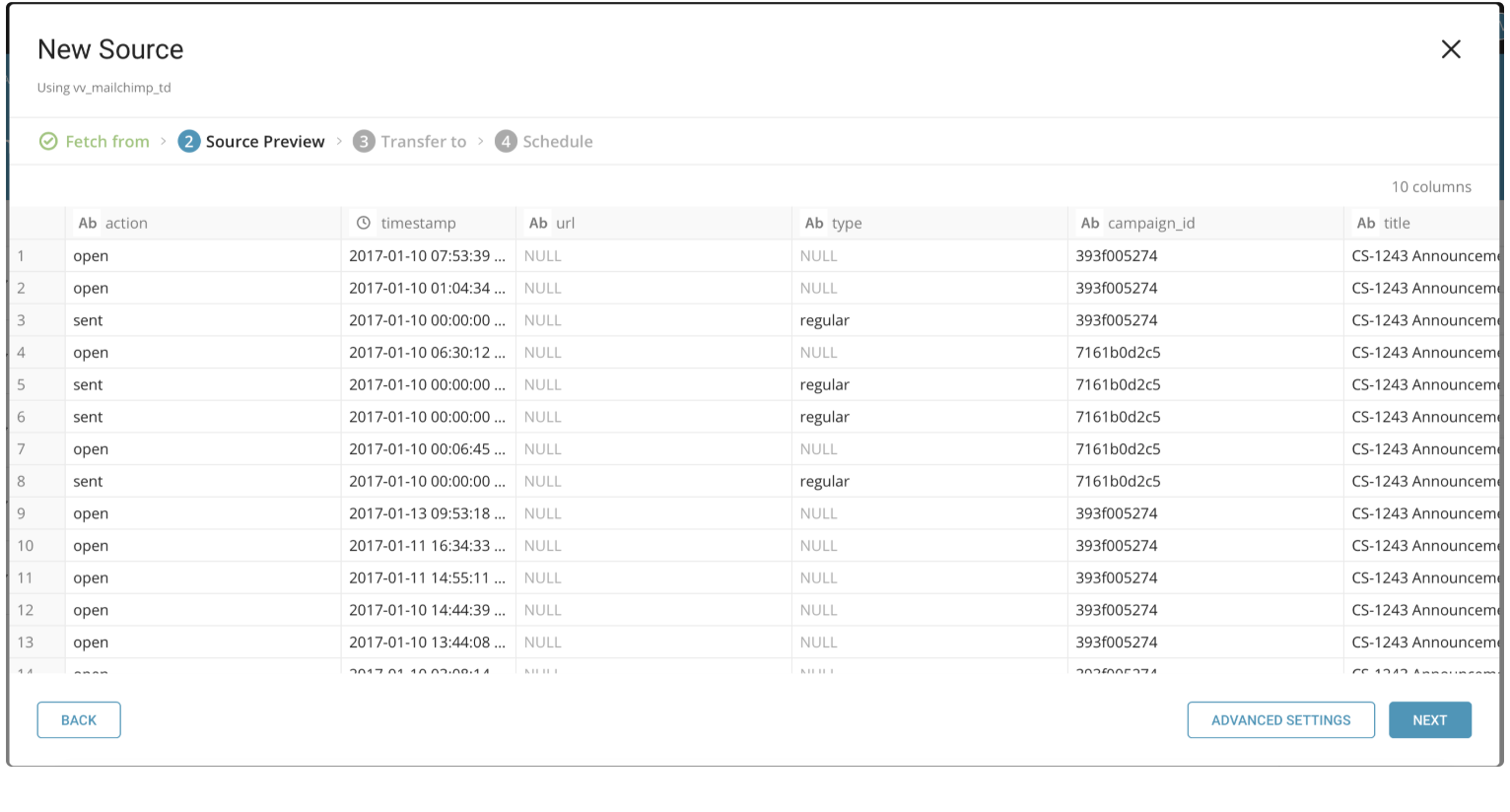

Next, you see a Preview of your member activity data similar to the following dialog. Select Next.

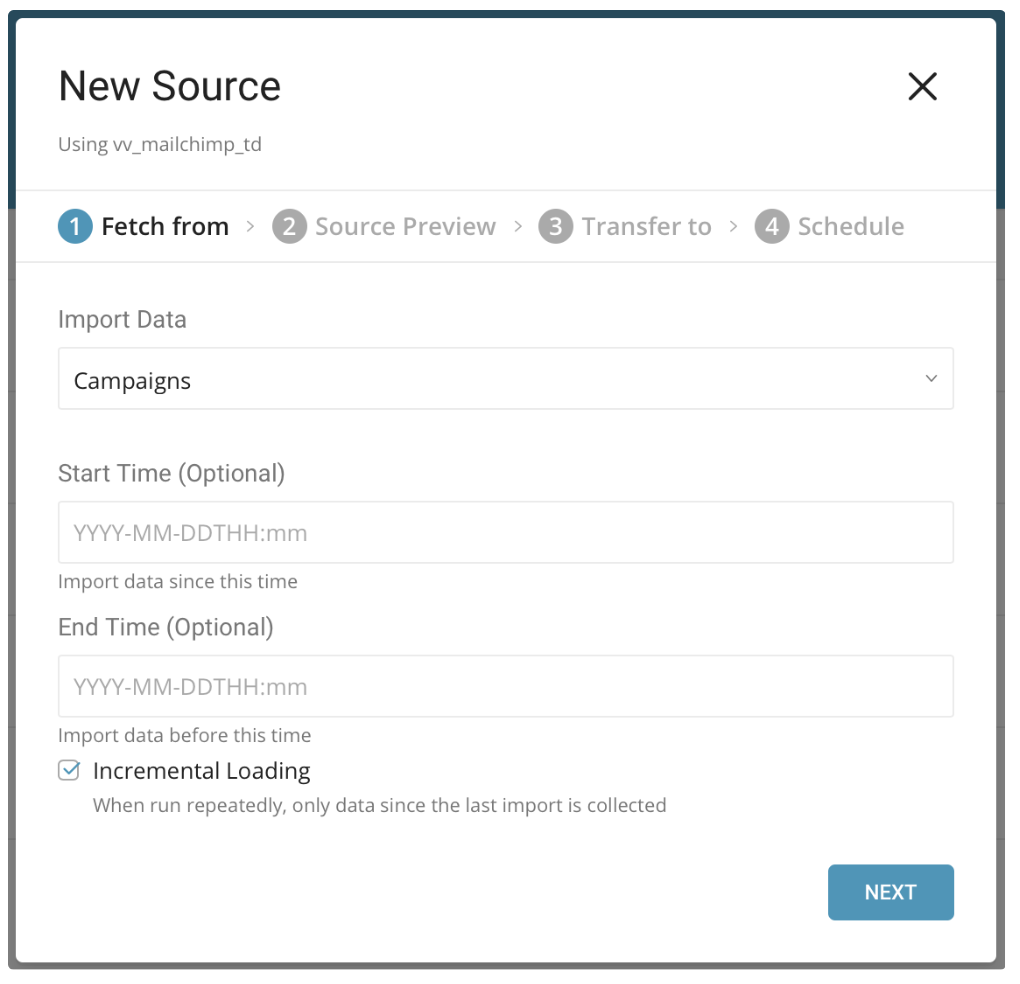

In the New Source dialog, choose Campaigns for the Import Data, and enter a Start Time (Optional) and End Time (Optional), then select Next.

When Incremental loading option is checked, it incrementally imports campaigns created since the last import.

| Parameter | Description |

|---|---|

| Start Time | Import campaigns created since this time (Inclusive) |

| End Time | Import campaigns created before this time (Exclusive) |

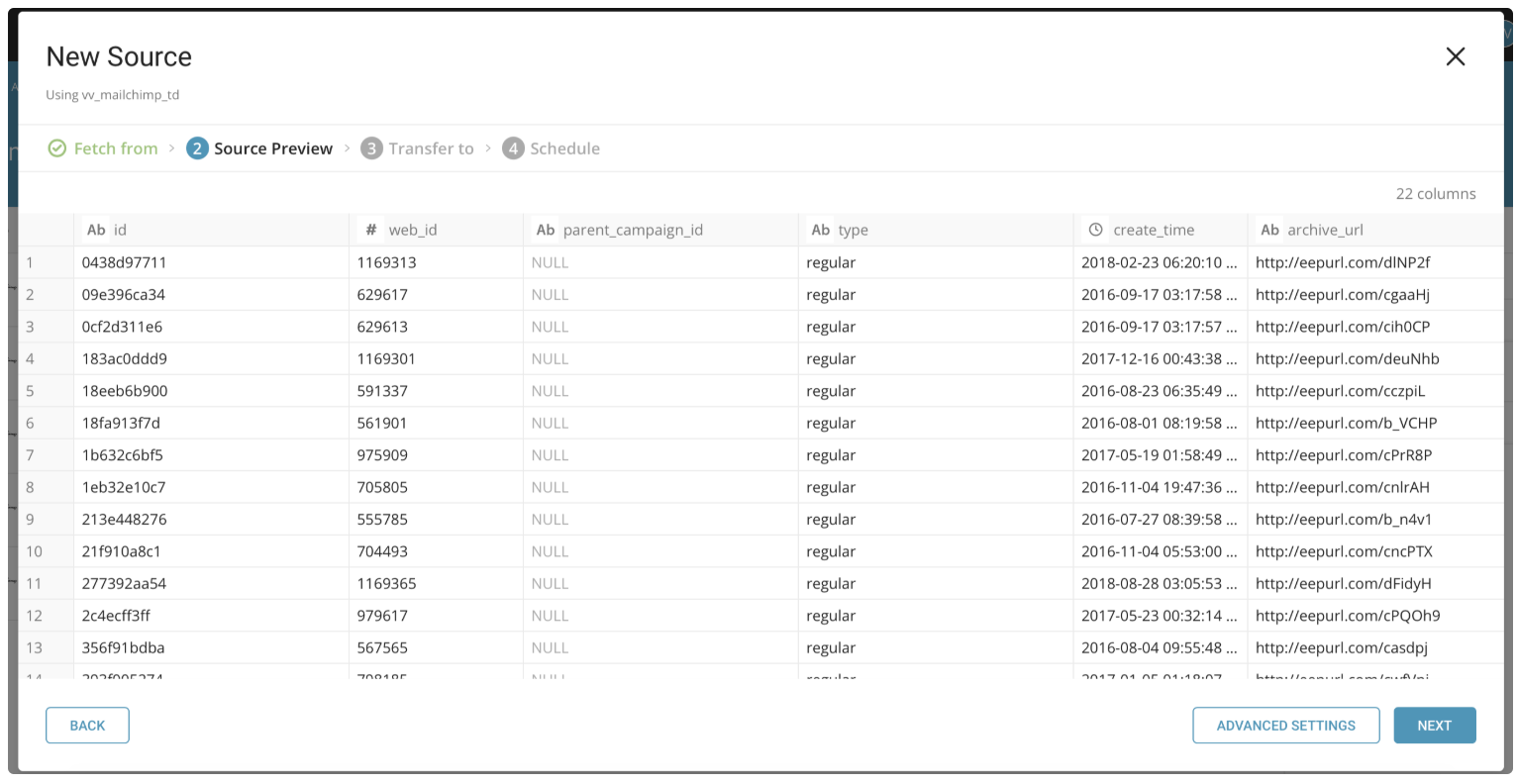

Next, you see a Preview of your campaign data similar to the following dialog. Select Next.

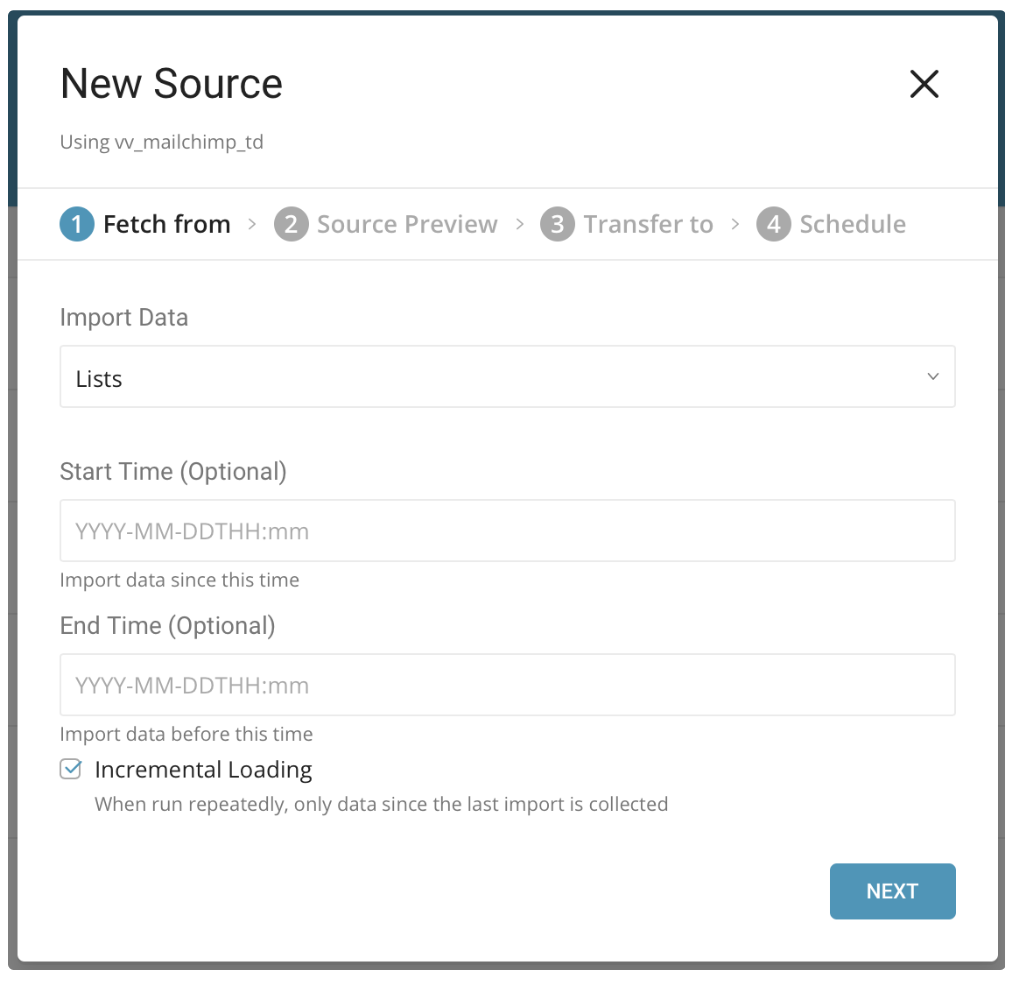

In the New Source dialog, choose Lists for the Import Data, and enter a Start Time (Optional) and End Time (Optional), then select Next.

When Incremental loading option is checked, it incrementally imports lists created since the last import.

| Parameter | Description |

|---|---|

| Start Time | Import lists created since this time (Inclusive) |

| End Time | Import lists created before this time (Exclusive) |

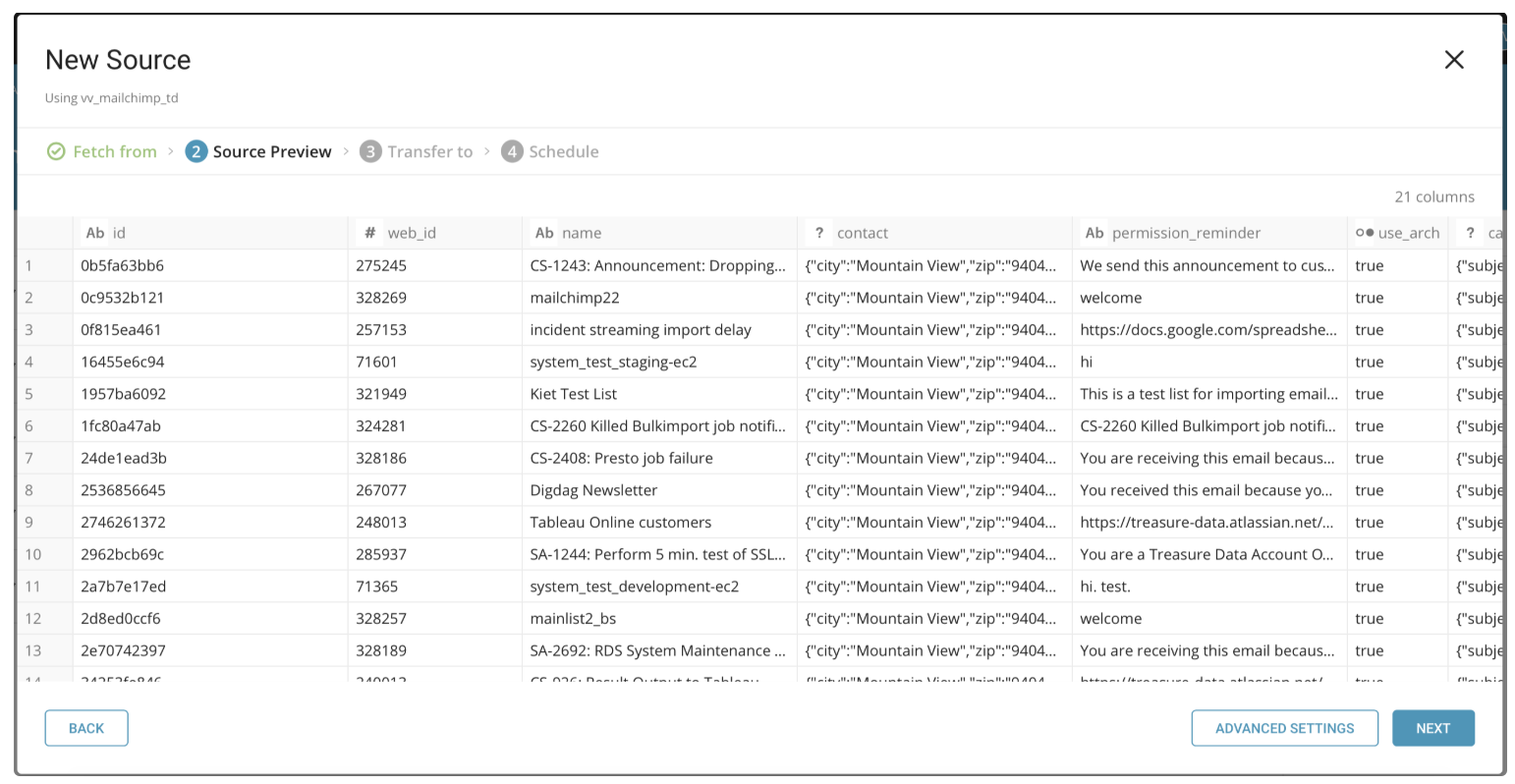

Next, you see a Preview of your list data similar to the following dialog. Select Next.

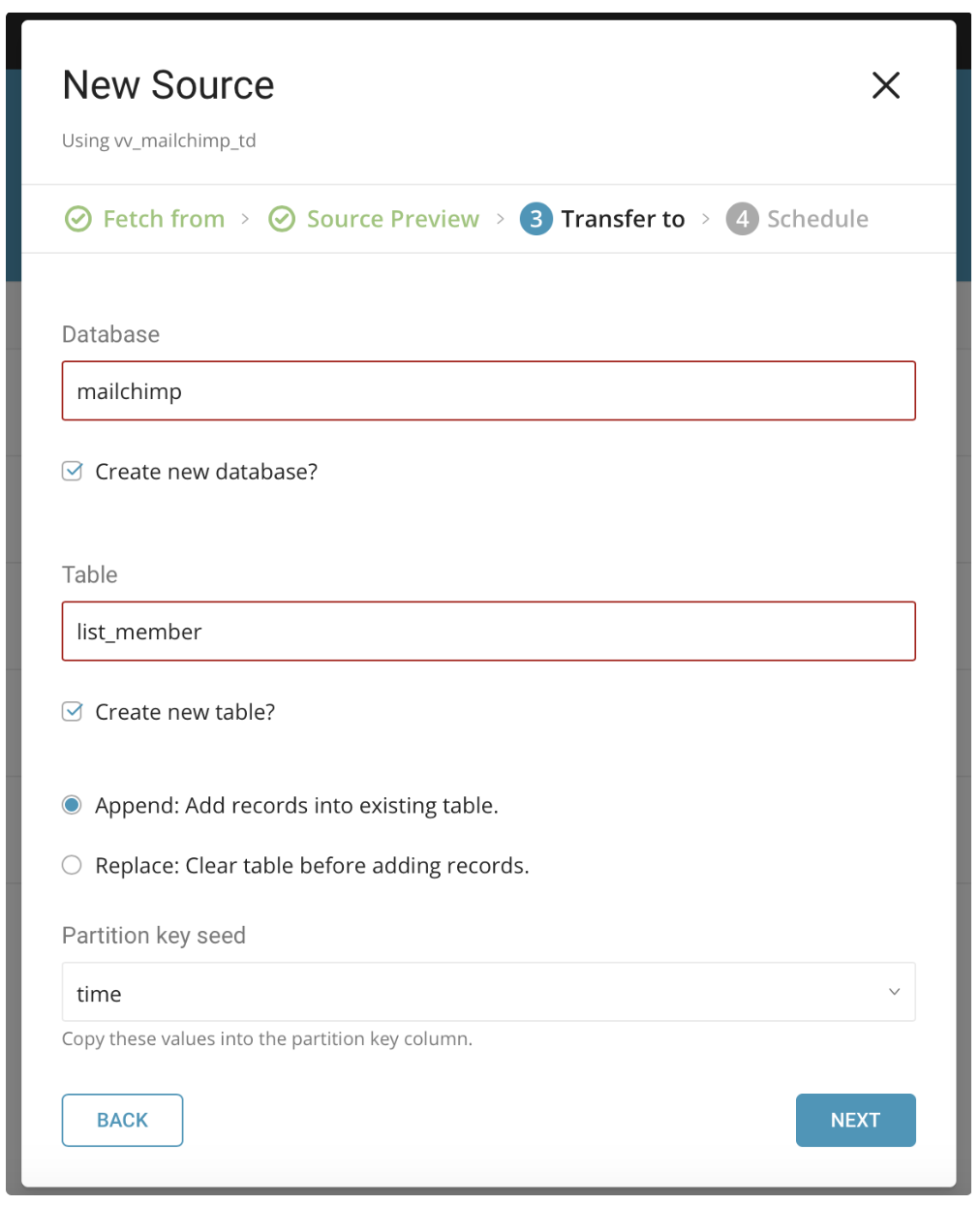

Select the database and table to where you want to transfer the data, as shown in the following dialog:

If you are creating a new database, check Create new database and give your database a name. Do the same with Create new table.

Select whether to append records to an existing table or replace your existing table.

If you want to set a different partition key seed than the default key, you can specify one using the popup menu.

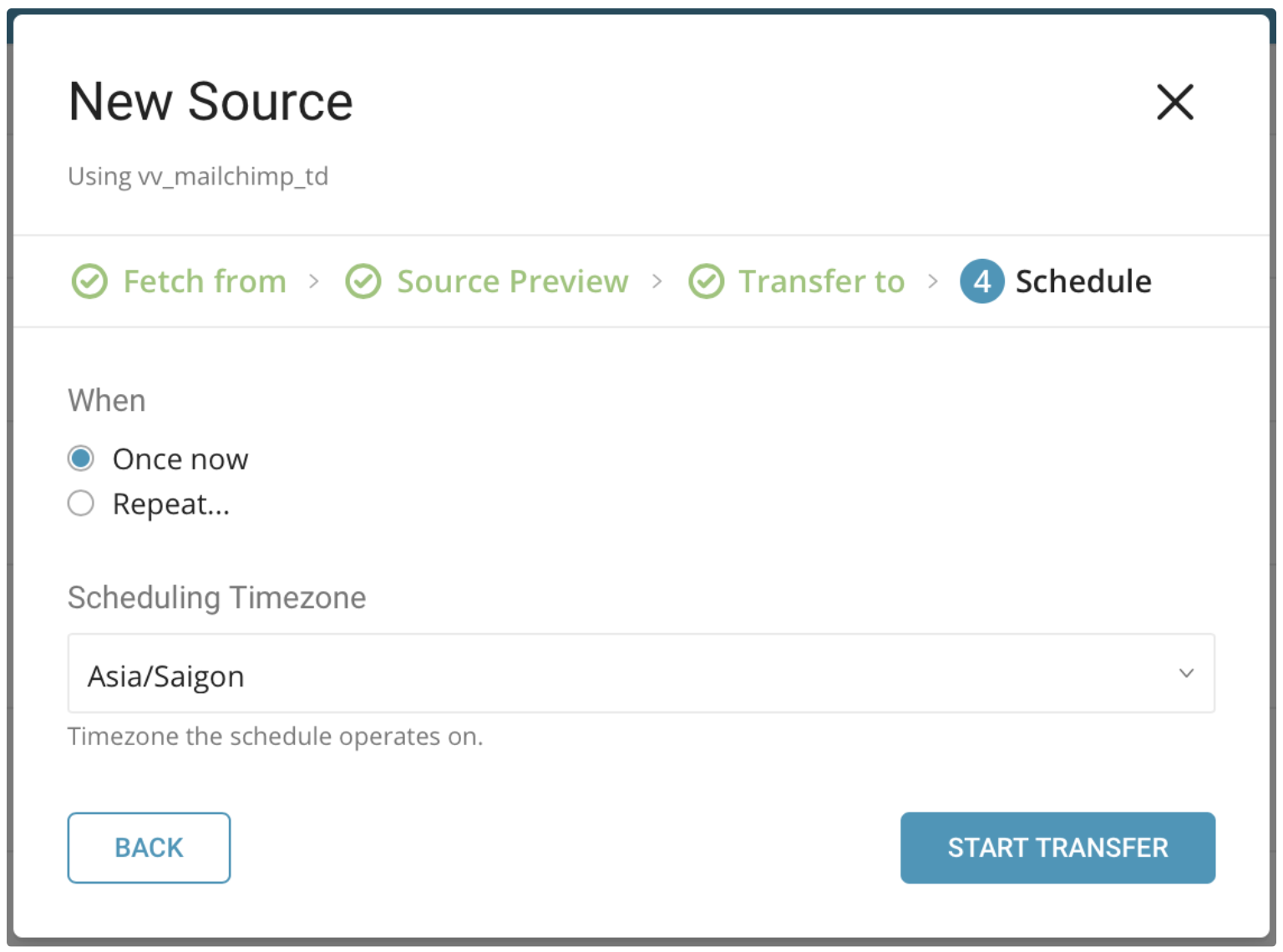

Finally, specify the schedule of the data transfer using the following dialog and select Start Transfer:

You see the new data transfer in progress listed under the My Input Transfers tab and a corresponding job is listed in the Jobs section.

In the When tab, you can specify this as a one-time transfer, or you can schedule an automated recurring transfer. If you selected Once now, select Start Transfer. If you selected Repeat… specify your schedule options, then select Schedule Transfer.

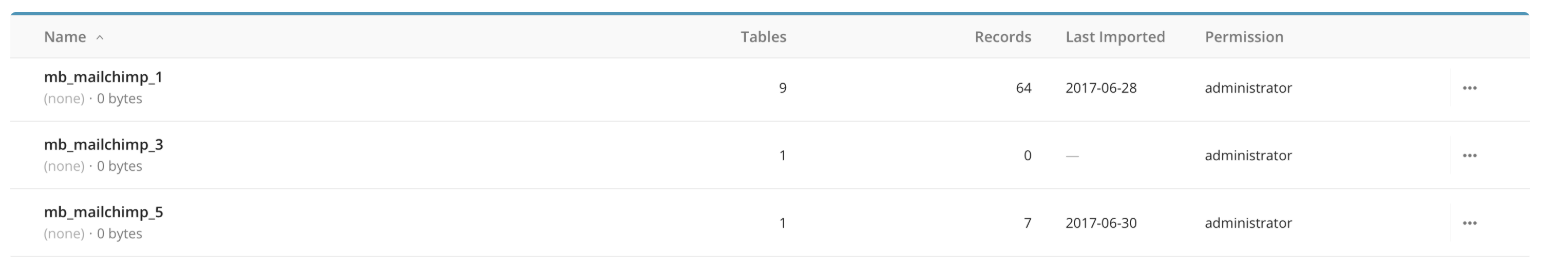

After your transfer runs, you can see the results of your transfer in the Databases tab.

You are ready to start analyzing your data.

You can install the latest TD Toolbelt.

$ td --version

0.15.8Prepare configuration file (for eg: load.yml) as shown in the following example, with your MailChimp credential and transfer information.

in:

type: mailchimp

apikey: xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx-dcxx

target: list_members

list_id: xxxxxxxxxxxxx

start_time: "2018-05-07T00:00:00Z"

end_time: "2018-05-08T00:00:00Z"

out:

mode: appendYou can preview data to be imported using the command td connector:preview.

$ td connector:preview load.ymlSubmit the load job. It may take a couple of hours depending on the data size.

$ td connector:issue load.yml \

--database td_sample_db \

--table td_sample_table \

--time-column last_changedYou must specify the database and table where the data is stored.

It is recommended to specify --time-column option, because Treasure Data’s storage is partitioned by time (see also data partitioning). If the option is not given, the data connector selects the first long or timestamp column as the partitioning time. The type of the column specified by --time-column must be either of long and timestamp type.

If your data doesn’t have a time column you can add it using add_time filter option. More details at add_time filter plugin.

You can assign a Time Format column to the "Partitioning Key" by "--time-column" option.

The td connector:issue command assumes that you have already created database(td_sample_db) and table(td_sample_table). If the database or the table do not exist in TD, then this command will not succeed, so create the database and table manually or use --auto-create-table option with td connector:issue command to auto create the database and table:

td connector:issue load.yml \

--database td_sample_db \

--table td_sample_table \

--time-column last_changed \

--auto-create-tableYou can schedule periodic data connector execution for periodic MailChimp import. We have configured our scheduler carefully to ensure high availability. By using this feature, you no longer need a cron daemon on your local data center.

A new schedule can be created using the td connector:create command. You must specify: the name of the schedule, a cron-style schedule, the database and table where the data will be stored, and the data connector configuration file.

td connector:create \

daily_mailchimp_import \

"9 0 * * *" \

td_sample_db \

td_sample_table \

load.ymlThe cron parameter also accepts these three options: @hourly, @daily and @monthly. | By default, schedule is setup in UTC timezone. You can set the schedule in a timezone using -t or --timezone option. The --timezone option only supports extended timezone formats like 'Asia/Tokyo', 'America/Los_Angeles' etc. Timezone abbreviations like PST, CST are *not* supported and may lead to unexpected schedules.

See the following table for more details on available in modes.

| Option name | Description | Type | Required? | Default value |

|---|---|---|---|---|

| auth_method | Auth Method. Valid values : api_key, oauth | string | yes | api_key |

| apikey | A valid MailChimp API Key | string | yes for api_key Auth Method | |

| access_token | A valid MailChimp access token | string | yes for oauth Auth Method | |

| target | Supported data targets : list_memebers, member_activity, campaigns, lists | string | yes | |

| list_id | A valid MailChimp list id. Import all lists when both list_id and more_list_ids is not presented. Only valid for list_members and member_activity target | string | no | |

| more_list_ids | Array of valid MailChimp list ids. Import all lists when both list_id and more_list_ids is not presented. Only valid for list_members and member_activity target | array | no | |

| start_time | Specify the date and time to fetch records from. Formatted yyyy-MM-dd'T'hh:mm:ss'Z' (e.g. ‘2018-05-07T00:00:00Z’). Inclusive | string | no | |

| end_time | Specify the allowable duration to fetch records. formatted yyyy-MM-dd'T'hh:mm:ss'Z' (e.g. ‘2018-05-08T00:00:00Z’). Exclusive | string | no | |

| incremental | true for “mode: append”, false for “mode: replace” (See below). | boolean | no | true |

| use_batch_request | Group all MailChimp API calls into batches, submit batches instead of using the regular API calls | boolean | no | false |

| Option name | Description | Type | Default value |

|---|---|---|---|

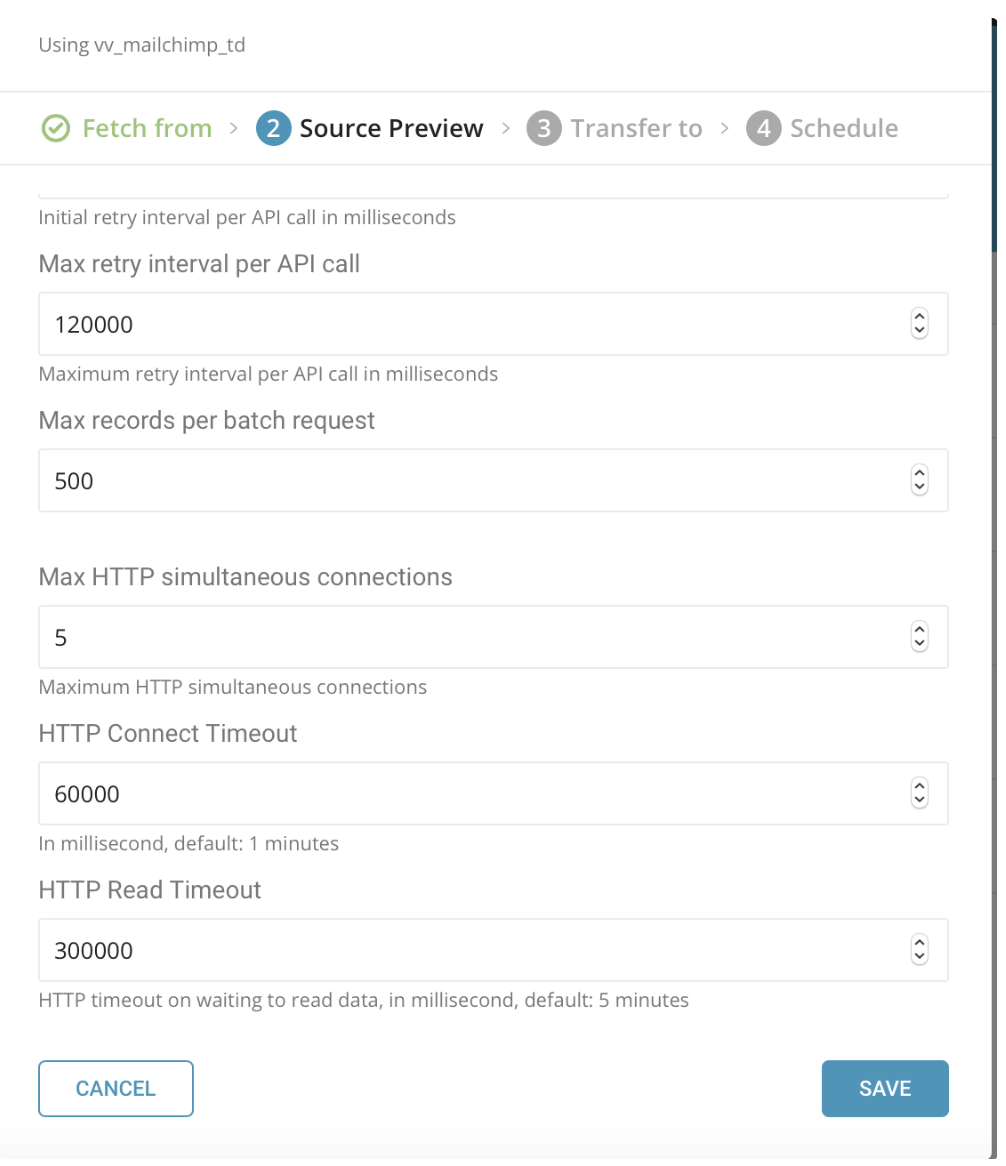

| http_max_connections | Maximum HTTP simultaneous connections (min: 1, max: 10) | integer | 5 |

| skip_on_invalid_records | false fail fast, true. Ignore invalid records/errors and continue loading other records | boolean | false |

| max_records_per_request | Max records per batch request (min: 10) | integer | 500 |

| max_requests_per_minute | Max requests per minute (min: 1, max: 300) | integer | 300 |

| maximum_retries | Maximum retry count per API call | integer | 7 |

| initial_retry_interval_millis | Initial retry interval per API call in milliseconds | integer | 1000 |

| maximum_retry_interval_millis | Maximum HTTP simultaneous connections | integer | 120000 |

| http_connect_timeout_millis | HTTP Connect Timeout | integer | 60000 |

| http_read_timeout_millis | HTTP Read Timeout | integer | 300000 |

http_max_connections is 5 for running jobs. When running more than 2 jobs at the same time, the parameter value should be reduced to ensure that total of http connections don't exceed MailChimp's rate limit of 10 connections.

http_max_connections can be changed by using the http\_max\_connections parameter for CLI or by using Advanced Settings >Preview step, in the TD Console.

- Config File

in:

http_max_connections: 5

out:

mode: append- TD Console

Due to MailChimp's internal implementation, use batch operations won't have performance gains compare to normal import (Sending synchronous requests). Its purpose is to reduce the number of requests made by the Connector. Consider using batch operations if you experience one of the following events:

- Your import failed or unable to success using non-batch operation.

- Your MailChimp job frequently gets rate limited due to too many requests made by the Connector.

- Your MailChimp jobs get errors 501, 503 Http status codes and reach retry limit for these errors.