Magento helps you create customized shopping experiences for your customers. Magento is an open-source e-commerce platform written in PHP.

With this integration, you can import Magento (v2) objects into Treasure Data.

- Basic knowledge of Treasure Data

- Basic knowledge of Magento 2 (Treasure Data supports v. 2.4.6)

When you configure a data connection, you provide authentication to access the integration. In Treasure Data, you configure the authentication and then specify the source information.

- Open the TD Console

- Navigate to Integrations Hub -> Catalog

- Search for Magento.

- Select Create Authentication.

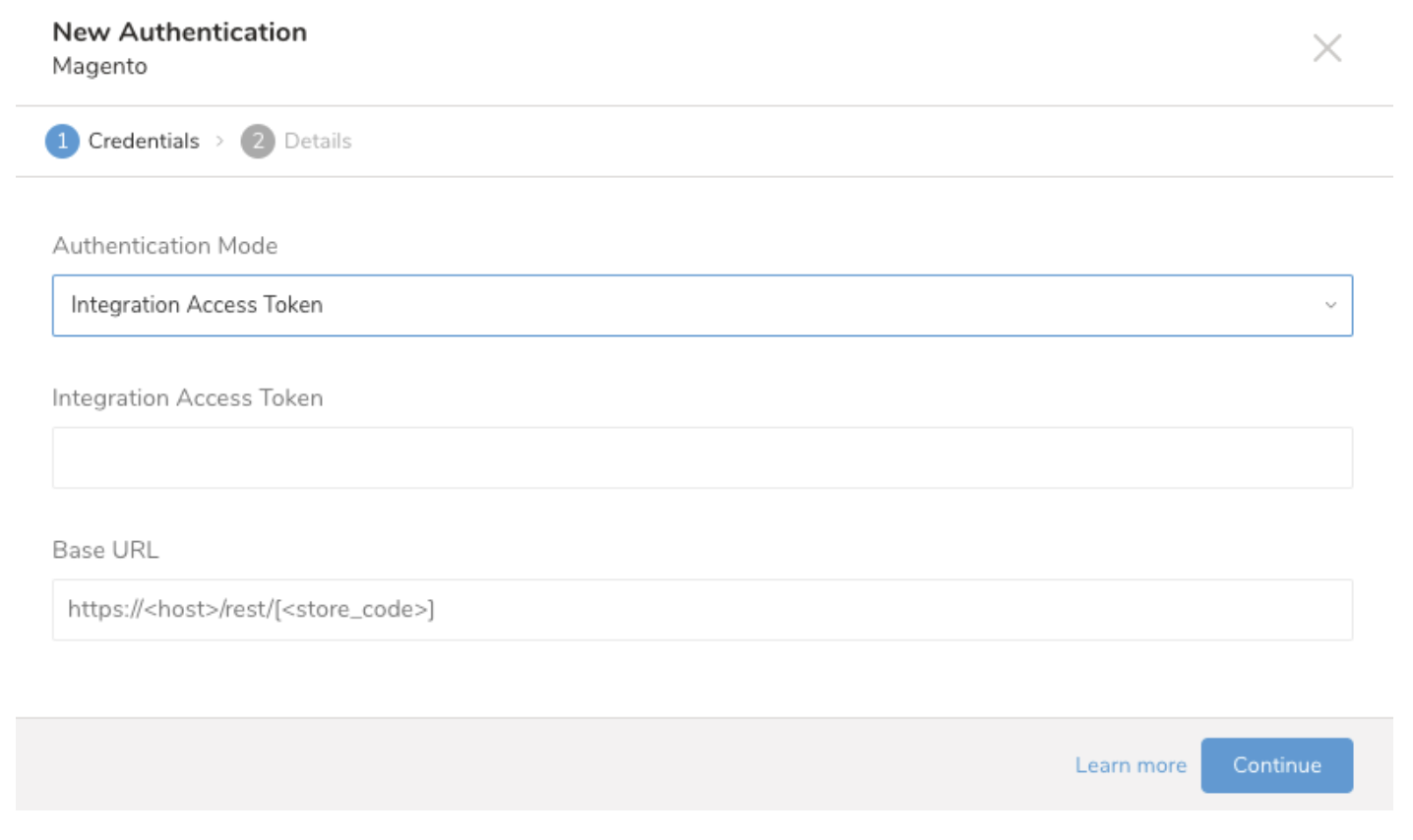

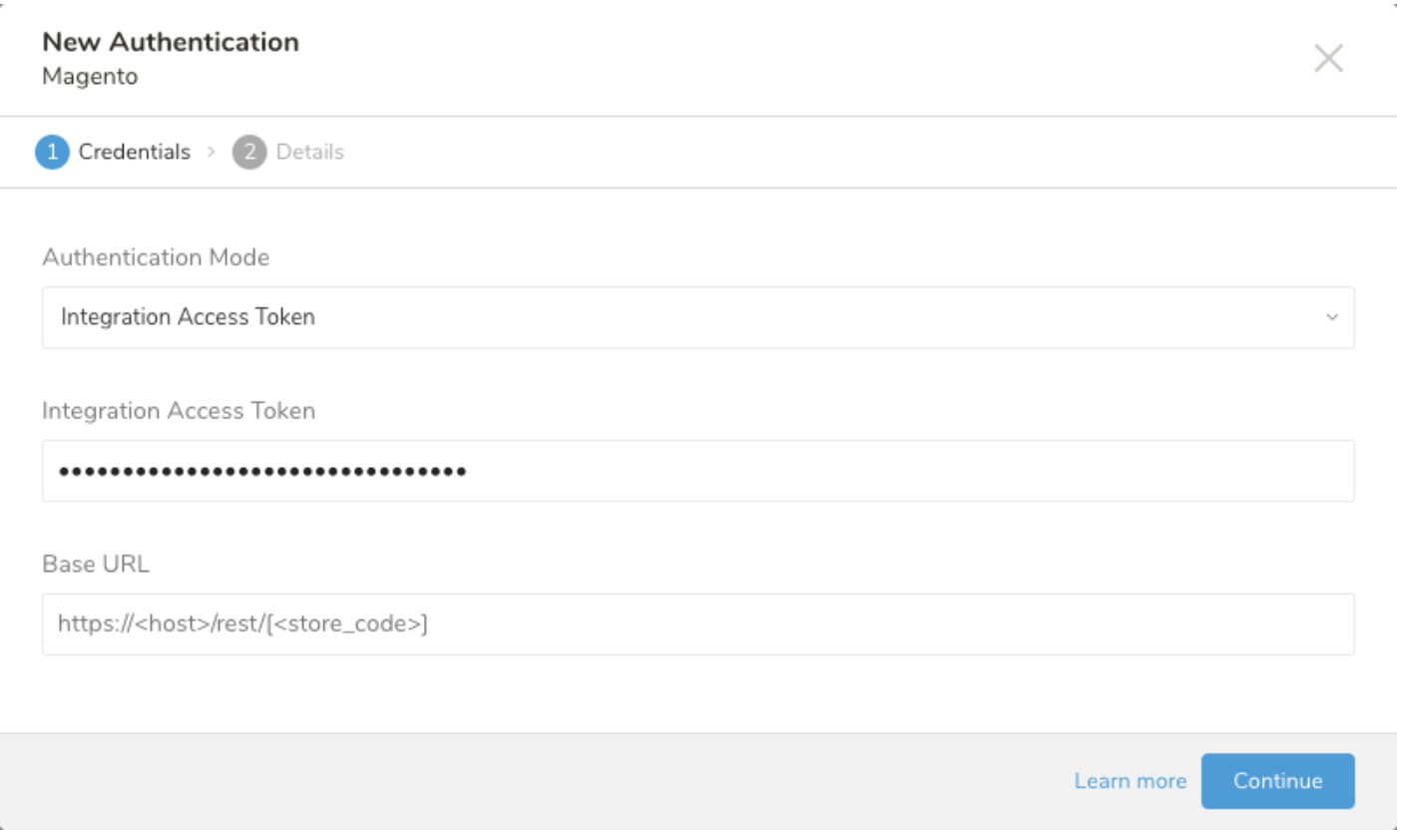

5. The following dialog opens. Choose one of the Authentication Modes. Edit the required credentials.

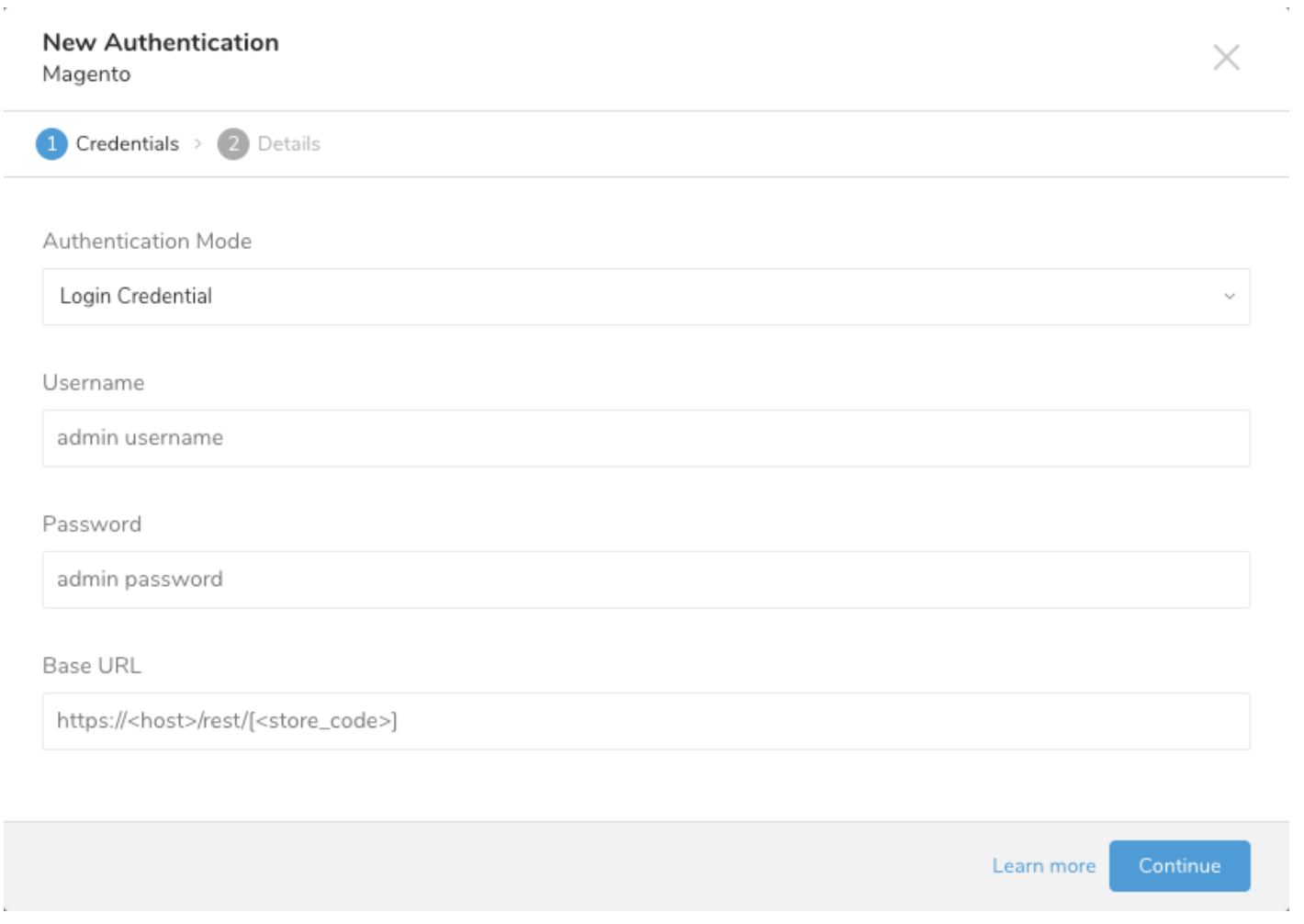

5. The following dialog opens. Choose one of the Authentication Modes. Edit the required credentials.

6. Select Continue

6. Select Continue

- Select Login Credential and enter your Admin-level user name and password.

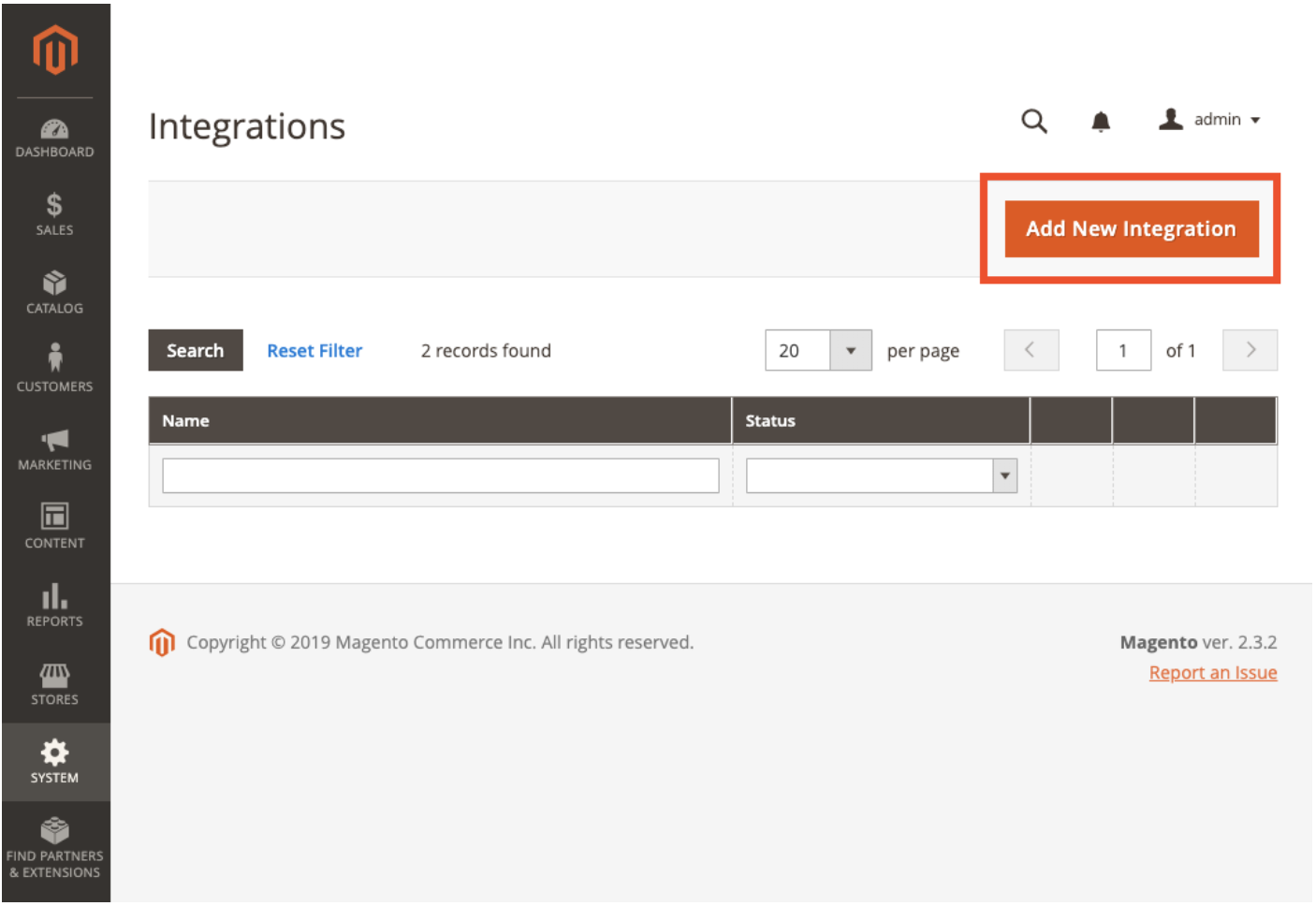

- To get the access token, in your Magento Admin view, go to System > Integrations > Add New Integration (or open an existing one).

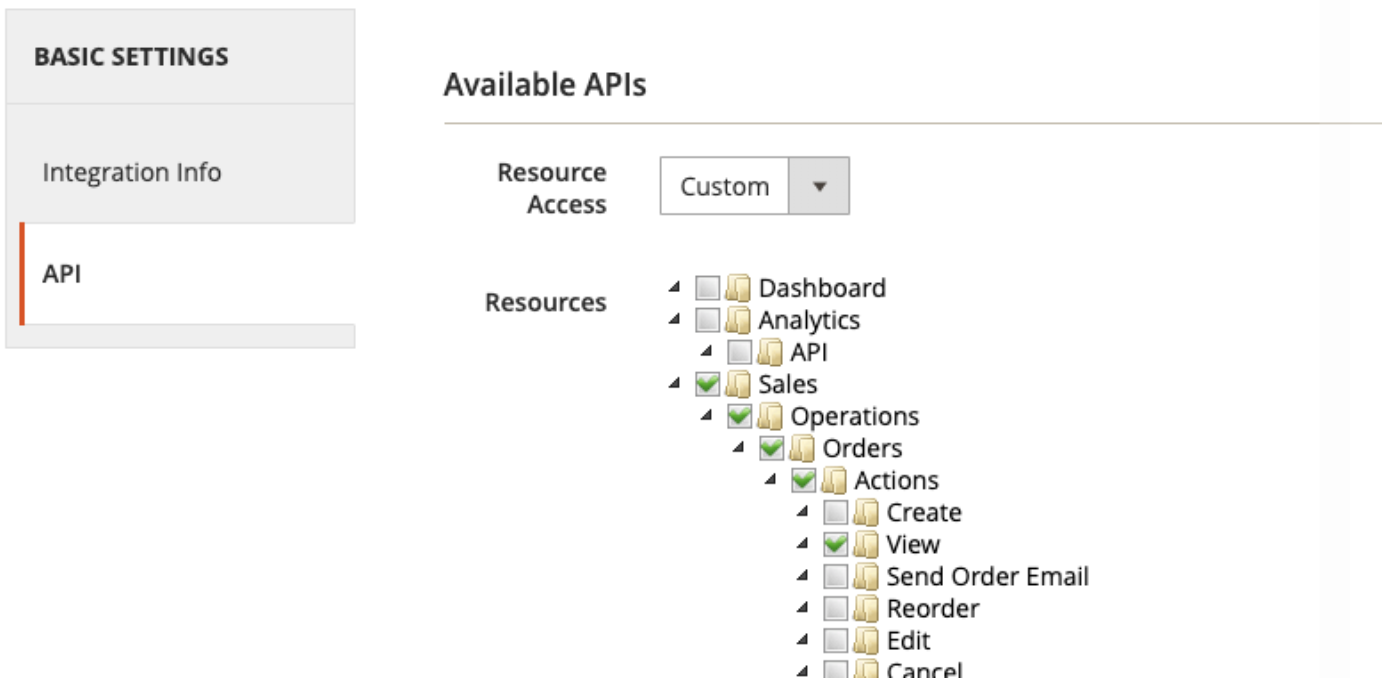

2. Select the appropriate permissions for your target resources.

2. Select the appropriate permissions for your target resources.

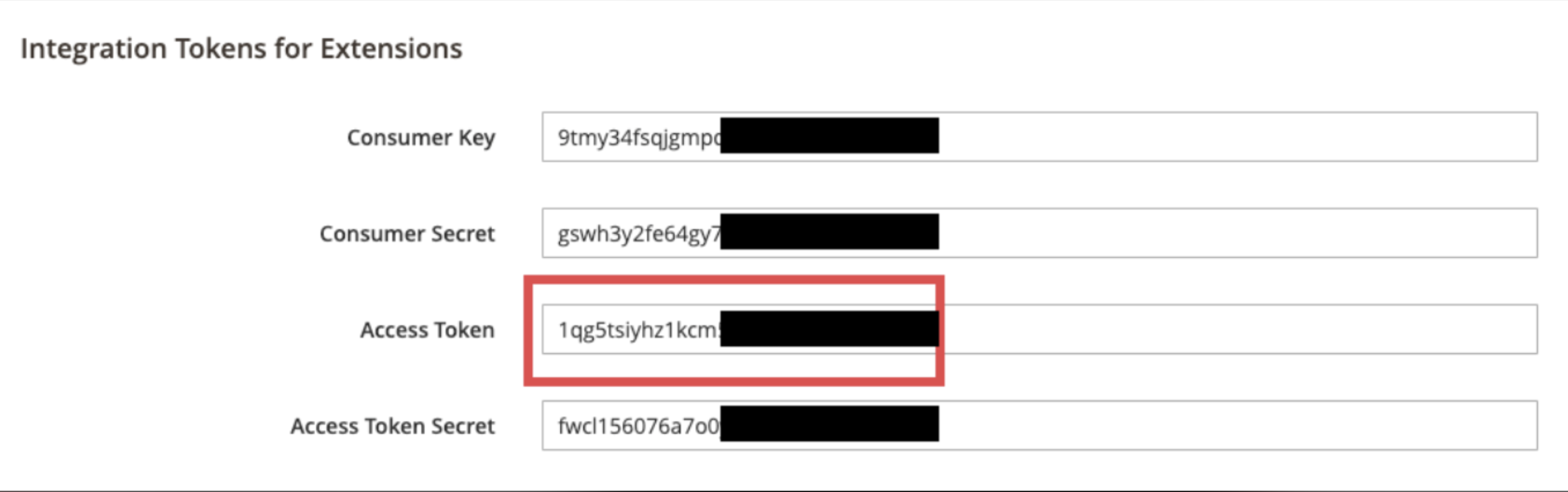

3. Activate the integration and then copy the Access Token.

3. Activate the integration and then copy the Access Token.

4. In Treasure Data, paste the name of the access token. For Base URL, set the value to be your Magento 2 REST API endpoint (for example,

4. In Treasure Data, paste the name of the access token. For Base URL, set the value to be your Magento 2 REST API endpoint (for example, http://my-magento-server/rest/)

5. Select Continue. 6. Name your Authentication. Click Done.

5. Select Continue. 6. Name your Authentication. Click Done.

After creating the authenticated connection, you are automatically taken to the Authentications tab. Look for the connection you created and click New Source.

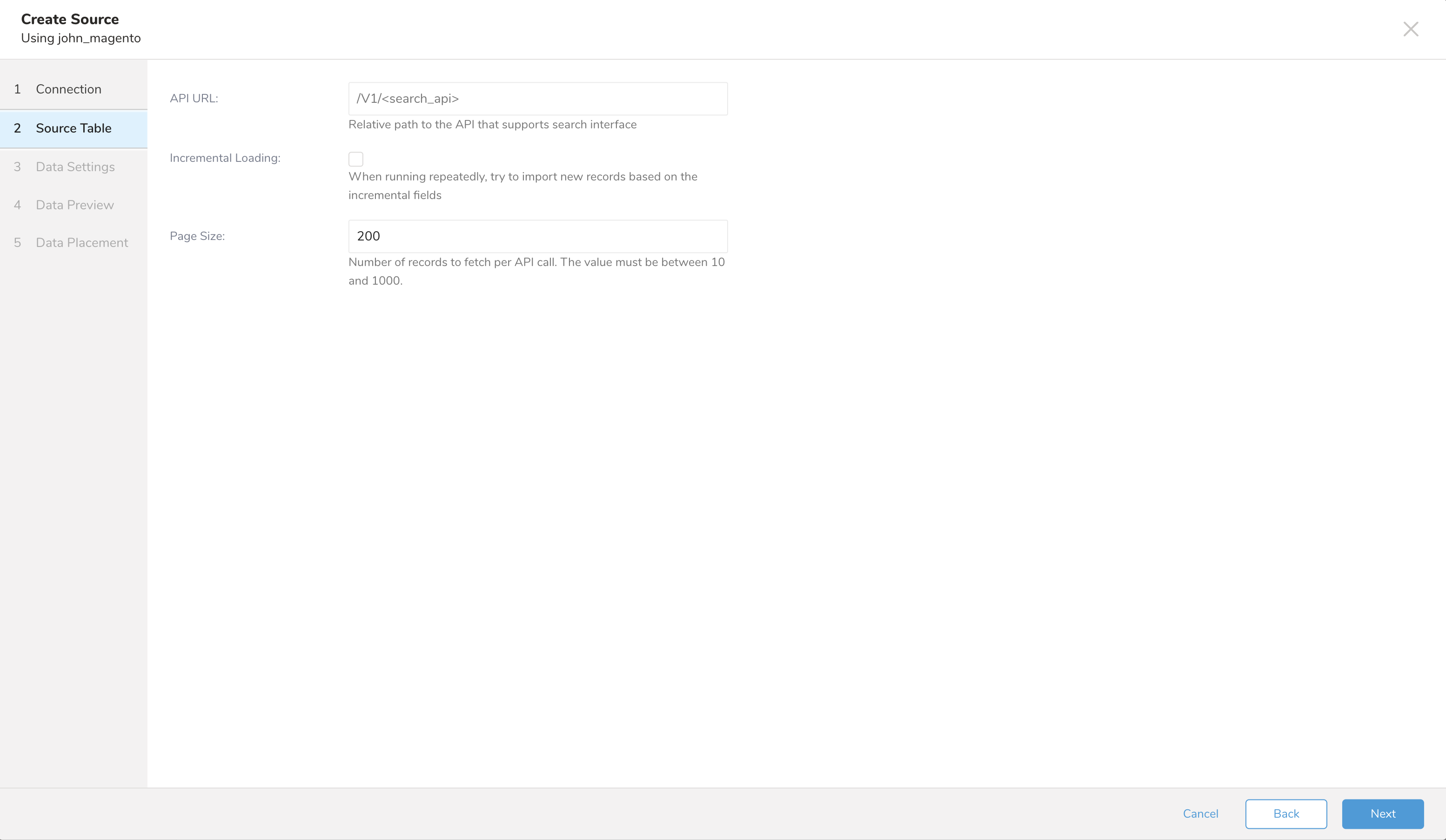

The following dialog opens.

Complete the details.

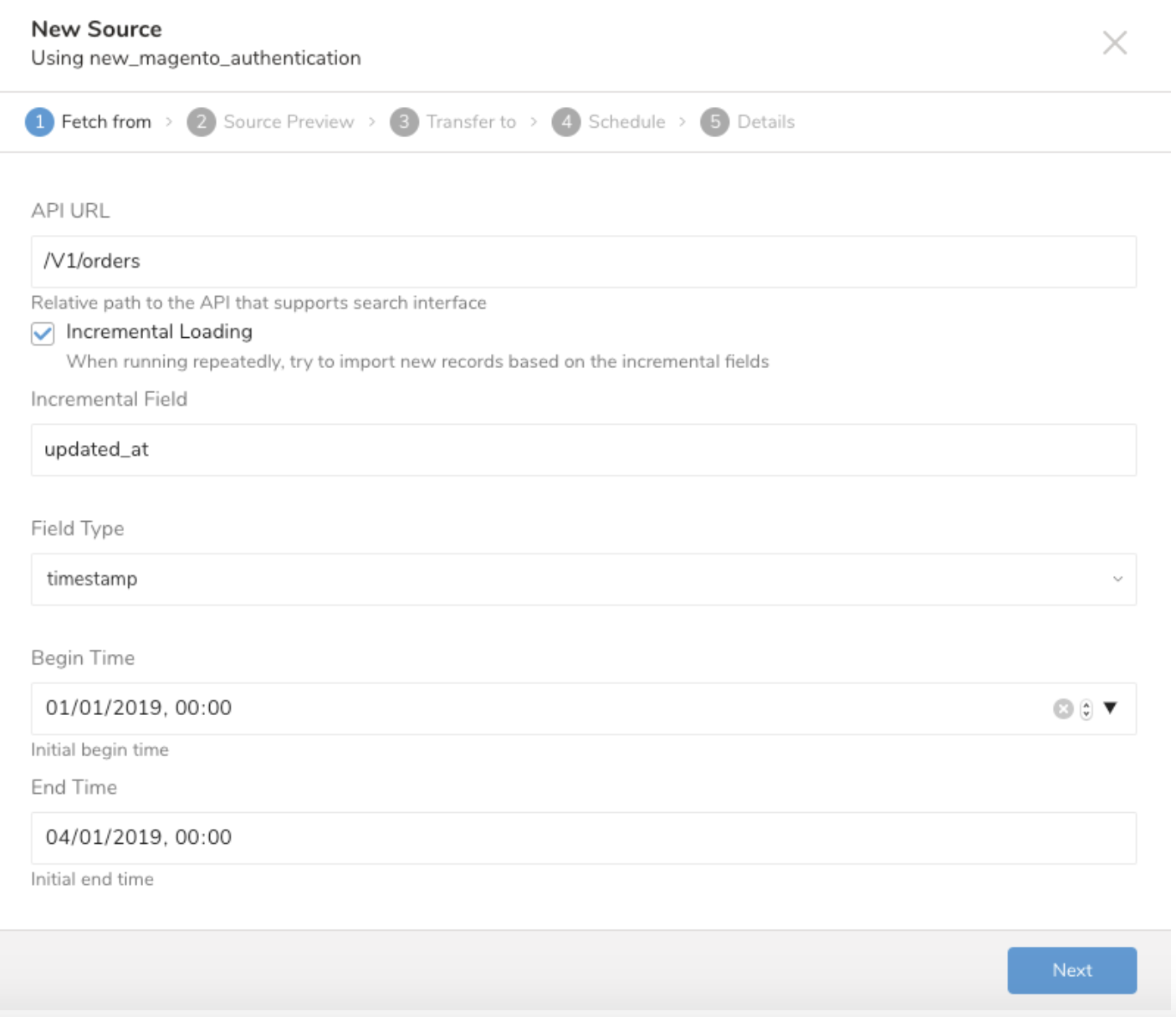

API URL: The desired resource (for example,

/V1/orders). The target API must conform to Magento Search API:Incremental: Specify columns and column type. Set a begin and end value. These values are used as the initial fetching range and are automatically increased while keeping the same range (or duration for timestamp type). When running on schedule, the time window of the fetched data automatically shifts forward on each run. For example, if the initial config is January 1, with ten days in duration, the first run fetches data modified from January 1 to January 10, the second run fetches from January 11 to January 20, and so on.

Review "How Incremental Loading Work: in the Appendix below.

Page Size: Number of records to fetch per API call.

Click Next to preview the data.

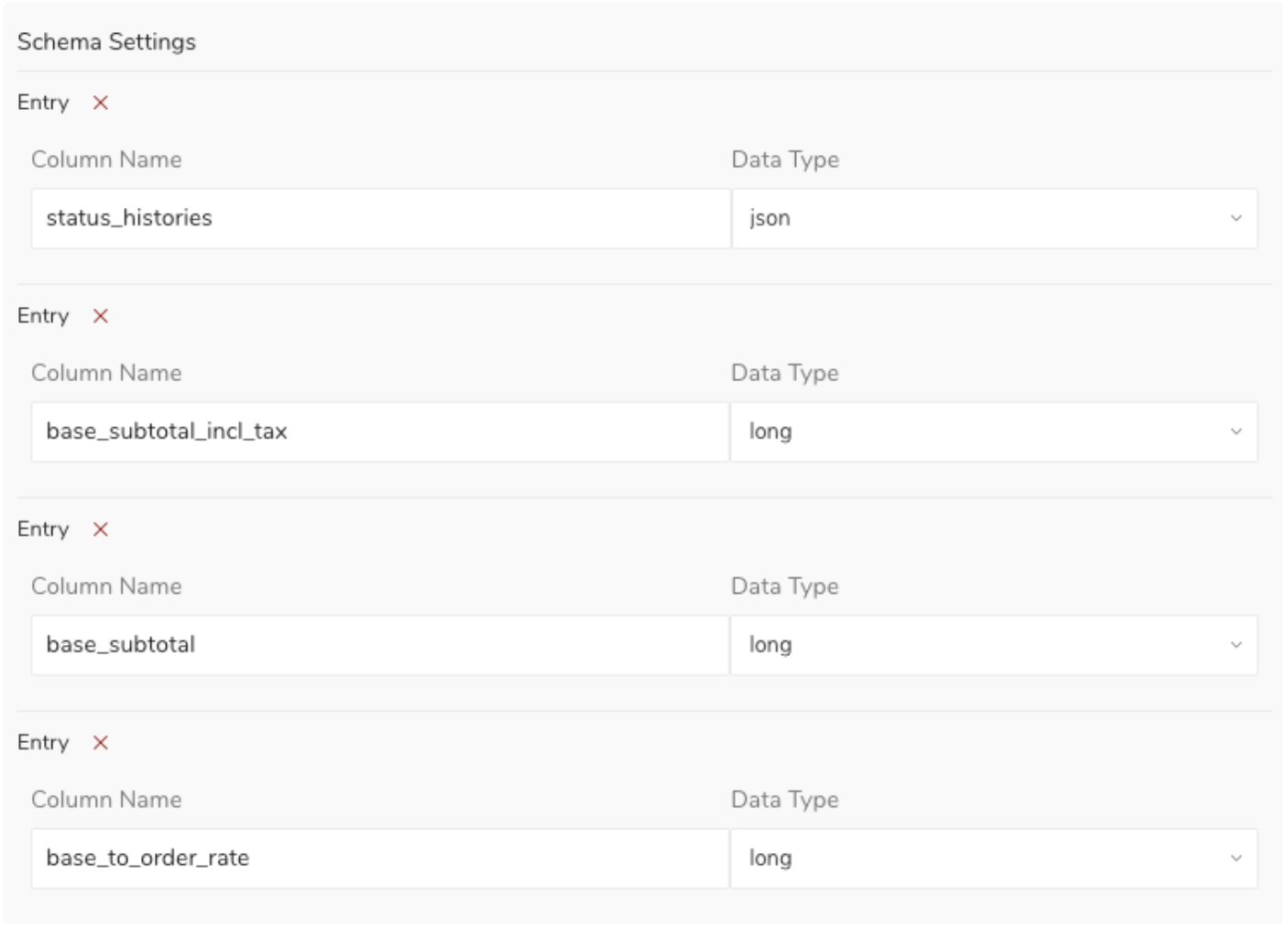

The Magento data connector must sample a response to guess for the resource's schema, and the Magento API potentially omits the decimal part of decimal numbers if they are rounded. The Magento data connector might incorrectly guess a column's data type as long instead of double and cause loss of precision. You can adjust to the correct types in Advance Settings.

Click Advanced Settings to see the schema settings.

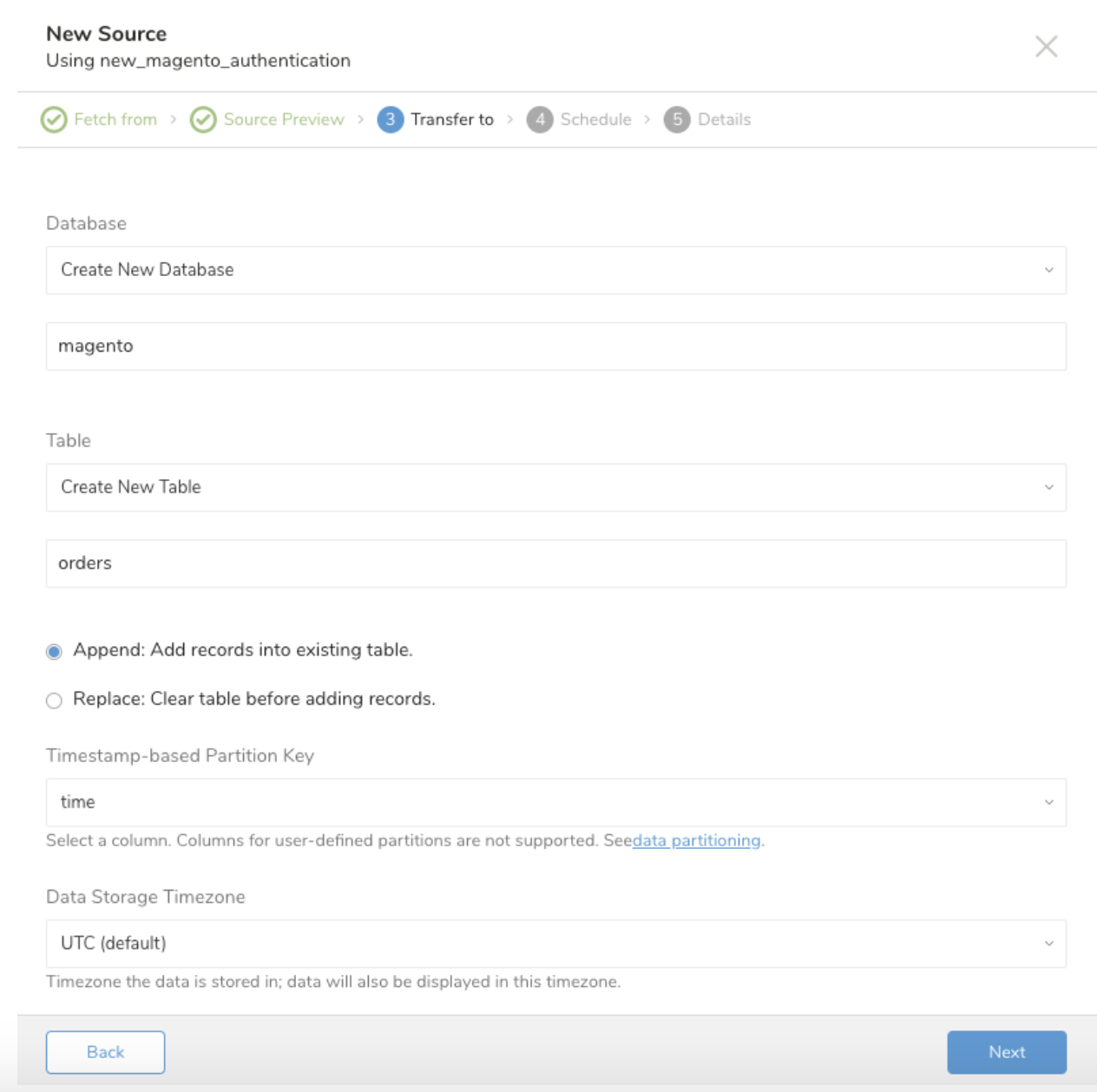

Choose an existing or create a new database and table where you want to transfer data to:

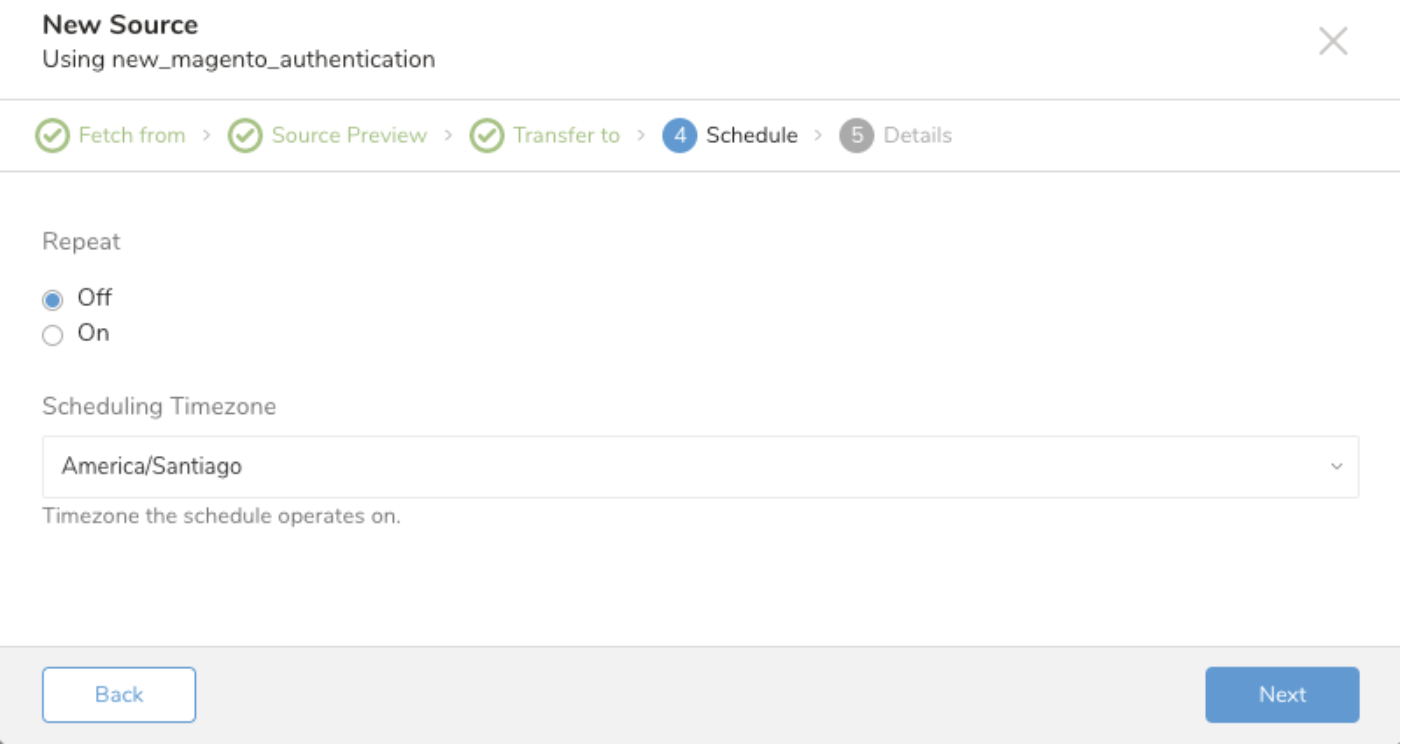

In the Schedule tab, you can specify a one-time transfer, or you can schedule an automated recurring transfer.

If you select Once now, click Start Transfer. If you select Repeat, specify your schedule options, then click Schedule Transfer.

After your transfer has run, you can see the results of your transfer in Data Workbench > Databases.

You can use the CLI to configure your connection.

Open a terminal and run the following command to install the newest Treasure Data Toolbelt.

$ td --version

0.16.3Prepare load.yml. The in: section is where you specify what comes into the connector from Magento and the out: section is where you specify what the connector puts out to the database in Treasure Data.

Provide your Magento account access information as follows:

in:

type: magento

auth_method: token

token: xxxxxxxxxxxxx

base_url: https://your-magento-server/rest/

resource_url: V1/products| Keys | Description |

|---|---|

| auth_method | Either password or token |

| token | The Access Token for auth_method: token |

| username | Admin username for auth_method: username |

| password | Admin password for auth_method: password |

| base_url | Magento REST API endpoint, example: https://my-magento-server/rest |

| resource_url | the target resource URL, example: /V1/products |

| incremental | should data import be continuous or once, default as false. |

| incremental_column | which column should be used as the cursor for incremental loading |

| incremental_column_type | the data type of incremental_column, either timestamp or number |

| incremental_start_timestamp | initial start value for incremental_column_type: timestamp |

| incremental_end_timestamp | initial end value for incremental_column_type: timestamp |

| incremental_start_number | initial start value for incremental_column_type: number |

| incremental_end_number | initial end value for incremental_column_type: number |

| page_size | Number of records to fetch per API call. Default 200. The value must be between 10 and 1000: number |

You can preview data to be imported using the command td connector:preview.

$ td connector:preview load.yml

+-----------------+-------------+----

| product_id:long | name:string | ...

+-----------------+-------------+----

| 1 | "Chocolate" |

| 2 | "Cookie" |

| 3 | "Bike" |

+-----------------+-------------+----Use td connector:issue to execute the job. Processing might take a couple of hours depending on the data size. The following are required:

- name of the schedule

- cron-style schedule

- database and table where their data will be stored

- the Data Connector configuration file

$ td connector:issue load.yml --database td_sample_db --table td_sample_table --time-column created_at \

daily_xxxx_import ...The preceding command assumes you have already created database(td_sample_db) and table(td_sample_table). If the database or the table do not exist in TD, this command will not succeed so create the database and table manually or use -auto-create-table option with td connector:issue command to auto create the database and table:

td connector:issue load.yml \

--database td_sample_db \

--table td_sample_table \

--time-column created_at \

--auto-create-tableIt is recommended to specify --time-column option, because Treasure Data’s storage is partitioned by time. If the option is not given, the data connector selects the first long or timestamp column as the partitioning time. The type of the column, specified by --time-column, must be either of long or timestamp type (use Preview results to check for the available column name and type. Generally, most data types have a last_modified_date column).

A time column is available at the end of the output.

td connector:issue load.yml \

--database td_sample_db \

--table td_sample_table \

--time-column created_atIf your data doesn’t have a time column you may add it using the add_time filter. You add the "time" column by adding the add_time filter to your configuration file as follows.

in:

type: xxxxx

...

filters:

- type: add_time

from_value:

mode: upload_time

to_column:

name: time

out:

type: tdMore details at add_time filter plugin.

If you have a field called time, you do not have to specify -time-column option.

You can schedule a periodic Data Connector execution for a periodic Magento import. We manage our scheduler to ensure high availability. By using this feature, you no longer need a cron daemon on your local data center.

A new schedule can be created using the td connector:create command. The name of the schedule, cron-style schedule, the database and table where their data will be stored, and the Data Connector configuration file are required.

$ td connector:create \

daily_magento_import \

"10 0 * * *" \

td_sample_db \

td_sample_table \

load.yml The cron parameter also accepts these three options: @hourly, @daily and @monthly. | By default, the schedule is setup in the UTC timezone. You can set the schedule in a timezone using -t or --timezone option. The --timezone option only supports extended timezone formats like 'Asia/Tokyo', 'America/Los_Angeles' etc. Timezone abbreviations like PST, CST are *not* supported and may lead to unexpected schedules.

You can load records incrementally by specifying columns in your table using incremental_column.

in:

type: magento

base_url: ...

api_url: ...

auth_method: token

token: ...

incremental_column: true

incremental_column_type: number

incremental_start_number: ...

incremental_end_number: ...

out:

mode: append

exec: {}Replace incremental_start_number and incrementa_end_number to incremental_start_timestamp and incremental_end_timestamp respectively if incremental_column_type: timestamp.

If you’re using scheduled execution, the connector automatically remembers the last value state and holds it internally. Then you can use it at the next scheduled execution. See Appendix: How Incremental Loading Works for details.

You can see the list of currently scheduled entries by td connector:list.

$ td connector:list

+-----------------------+--------------+----------+-------+--------------+-----------------+------------------------------+

| Name | Cron | Timezone | Delay | Database | Table | Config |

+-----------------------+--------------+----------+-------+--------------+-----------------+------------------------------+

| daily_magento_import | 10 0 * * * | UTC | 0 | td_sample_db | td_sample_table | {"type"=>"magento", ... } |

+-----------------------+--------------+----------+-------+--------------+-----------------+------------------------------+For those API that Magento 2 doesn't support off the shelf. Since TD Magento Connector simply requests from its REST API, you have to expose your own API and set the corresponding "Resource URL" (resource_url) configuration. Keep in mind that API have to implement SearchCriteriaInterface for Magento Connector to work.

Incremental loading requires a monotonically increasing unique column (incremental_column) to load records that were inserted (or updated) after the last execution.

This mode is useful when you want to fetch just the object targets that have changed since the previously scheduled run. For example, if incremental_column: updated_at option is set, the request is as follows:

GET https://your-magento-server/rest/V1/searchable-resource

&searchCriteria[filter_groups][0][filters][0][field]=updated_at

&searchCriteria[filter_groups][0][filters][0][value]=incremental_start_timestamp

&searchCriteria[filter_groups][0][filters][][condition_type]=gt

&searchCriteria[filter_groups][0][filters][0][field]=updated_at

&searchCriteria[filter_groups][0][filters][0][value]=incremental_end_timestamp

&searchCriteria[filter_groups][0][filters][][condition_type]=ltWhen bulk data loading finishes successfully, it outputs incremental_last_value and incremental_run_count(number of jobs ran, starting at 0) parameters as config-diff so that the next execution uses it. At the next execution, when those parameters are also set, this plugin uses incremental_last_value as the new lower bound, condition_type will also switch from gt to gteq. For upper bound, it is calculated by: incremental_start_<timestamp/number> + incremetal_run_count * (incremental_end_<timestamp/number> - incremental_start_<timestamp/number>), condition_type stays as lt.

GET https://your-magento-server/rest/V1/searchable-resource

&searchCriteria[filter_groups][0][filters][0][field]=updated_at

&searchCriteria[filter_groups][0][filters][0][value]=incremental_last_value

&searchCriteria[filter_groups][0][filters][][condition_type]=gteq

&searchCriteria[filter_groups][0][filters][0][field]=updated_at

&searchCriteria[filter_groups][0][filters][0][value]=increased_end_value_with_the_mentioned_formula

&searchCriteria[filter_groups][0][filters][][condition_type]=ltCurrently, only strings, timestamp and integers are supported as incremental_columns.

If incremental: false is set, the data connector fetches all the records of the specified Magento object type, regardless of when they were last updated. This mode is best combined with writing data into a destination table using ‘replace’ mode.

When you use Integration Access Token as Authentication Mode, you need to specify Yes to Allow OAuth Access Tokens to be used as standalone Bearer tokens option in Magento Admin. You can find this option via Stores > Configuration > Services > OAuth > Consumer Settings. If you don't do it, you will encounter unexpected 401 error.