Instagram Business lets you obtain insights about your Instagram media objects and user account activities. You can use Treasure Data's connector to bring Instagram insight data into Treasure Data.

The following user metric and media preset values are available:

- email_contacts-day

- follower_count-day

- get_directions_clicks-day

- impressions-day

- impressions-days_28

- impressions-week

- online_followers-lifetime

- phone_call_clicks-day

- profile_views-day

- reach-day

- reach-days_28

- reach-week

- text_message_clicks-day

- website_clicks-day

- engaged_audience_demographics-lifetime

- reached_audience_demographics-lifetime

- follower_demographics-lifetime

- media_all

- story_metrics

- carousel_album_metrics

- photo_and_videos_metrics

Enter the Facebook page ID that is linked to the Instagram Business account. When a user creates an Instagram business account or converts an existing account to a business account, the user must link the Instagram account to a Facebook page.

By default, a Facebook User Access token is short-lived.

To run a scheduled job on the Treasure Data platform, obtain a long-lived access token in one of the following ways:

- Follow the steps in this article to obtain a long-lived User Access token via the Facebook tool

- Follow these steps to obtain a long-lived token using Facebook API

If you create the job in TD Console, the connector exchanges the token for a long-lived access token for you.
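If you exchange the token yourself through the Facebook API, the exchange is a GET request to the Graph API `oauth/access_token` endpoint with `grant_type=fb_exchange_token`. The following Python sketch only builds that request URL and does not send it. The Graph API version (`v19.0`) and the placeholder app ID, app secret, and token values are assumptions; check Facebook's documentation for the version your app targets.

```python
from urllib.parse import urlencode

# Assumption: replace with the Graph API version your Facebook app targets.
GRAPH_API_VERSION = "v19.0"


def long_lived_token_url(app_id: str, app_secret: str, short_lived_token: str) -> str:
    """Build the URL that exchanges a short-lived User Access token
    for a long-lived one (grant_type=fb_exchange_token)."""
    params = {
        "grant_type": "fb_exchange_token",
        "client_id": app_id,
        "client_secret": app_secret,
        "fb_exchange_token": short_lived_token,
    }
    # urlencode percent-encodes the values so tokens are safe in the query string.
    return (
        f"https://graph.facebook.com/{GRAPH_API_VERSION}/oauth/access_token?"
        + urlencode(params)
    )


if __name__ == "__main__":
    # Hypothetical placeholder values for illustration only.
    print(long_lived_token_url("YOUR_APP_ID", "YOUR_APP_SECRET", "SHORT_LIVED_TOKEN"))
```

The JSON response to this request contains an `access_token` field holding the long-lived token, which is the value to use as `access_token` in the connector configuration. Keep the app secret out of source code and logs.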

The following permissions (scopes) are required:

- public_profile

- email

- manage_pages

- pages_show_list

- instagram_basic

- instagram_manage_insights

- instagram_manage_comments

- ads_management
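To confirm that a token carries these scopes before you configure a job, you can compare them with the Graph API `/me/permissions` response. The following Python sketch assumes that response has already been fetched and parsed into a dictionary shaped like `{"data": [{"permission": "email", "status": "granted"}, ...]}`; the helper name `missing_scopes` is hypothetical.

```python
# Scopes listed in this article as required by the connector.
REQUIRED_SCOPES = frozenset({
    "public_profile",
    "email",
    "manage_pages",
    "pages_show_list",
    "instagram_basic",
    "instagram_manage_insights",
    "instagram_manage_comments",
    "ads_management",
})


def missing_scopes(permissions_response: dict) -> set:
    """Return the required scopes that are not granted.

    Assumes the shape returned by GET /me/permissions:
    {"data": [{"permission": "email", "status": "granted"}, ...]}
    Declined or expired permissions do not count as granted.
    """
    granted = {
        entry.get("permission")
        for entry in permissions_response.get("data", [])
        if entry.get("status") == "granted"
    }
    return set(REQUIRED_SCOPES - granted)


if __name__ == "__main__":
    sample = {
        "data": [
            {"permission": scope, "status": "granted"}
            for scope in REQUIRED_SCOPES
            if scope != "ads_management"
        ]
    }
    print(missing_scopes(sample))
```

An empty result means every scope listed above is granted.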

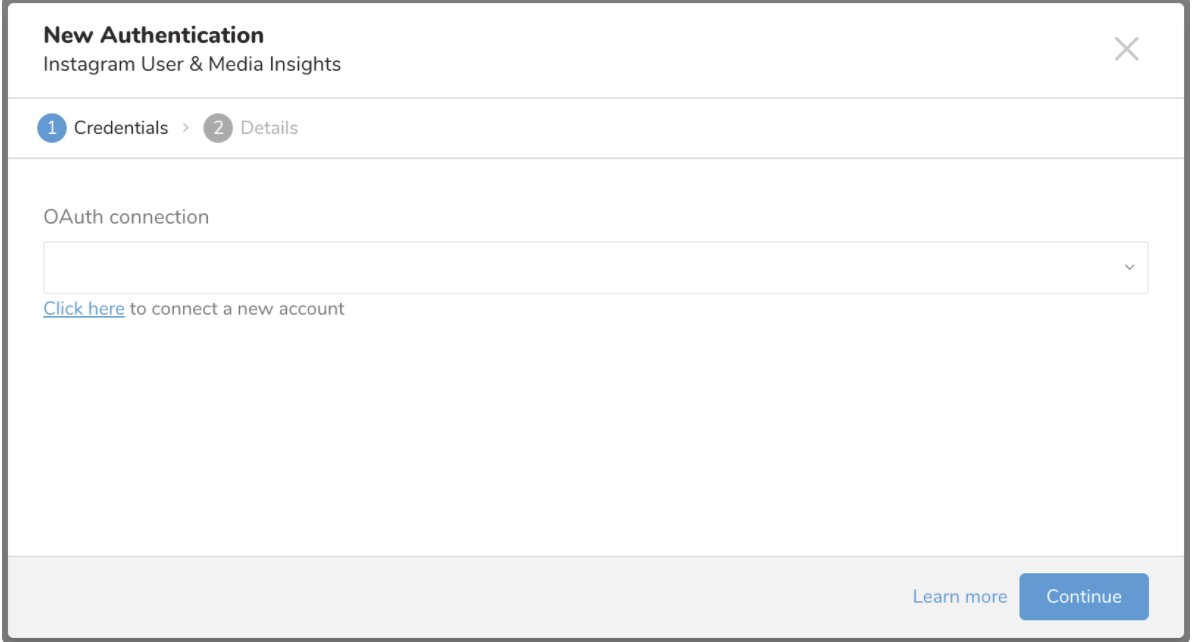

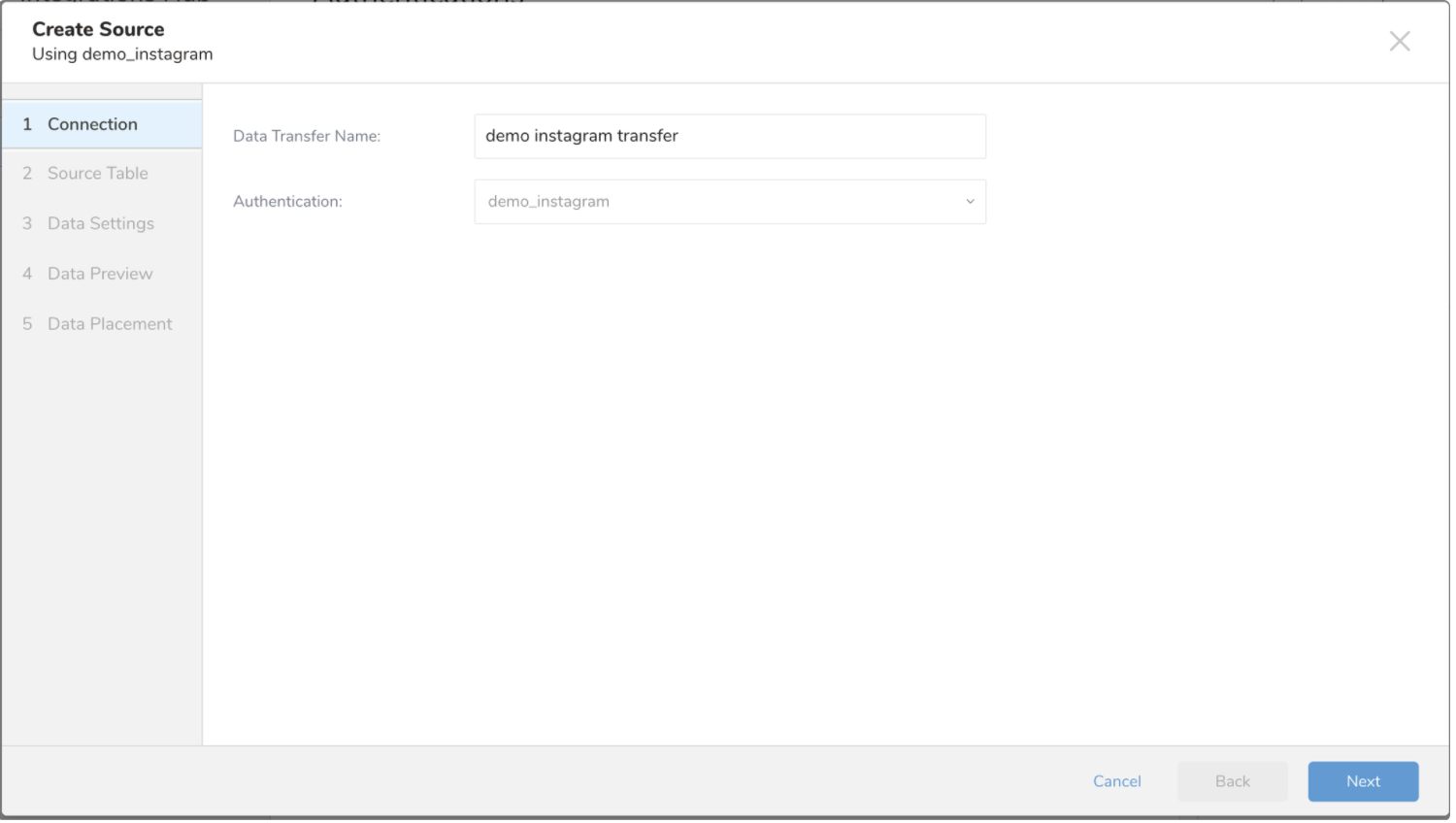

When you configure a data connection, you provide authentication to access the integration. In Treasure Data, you configure the authentication and then specify the source information.

Open TD Console.

Navigate to Integrations Hub > Catalog.

Search for and select Instagram User & Media Insights.

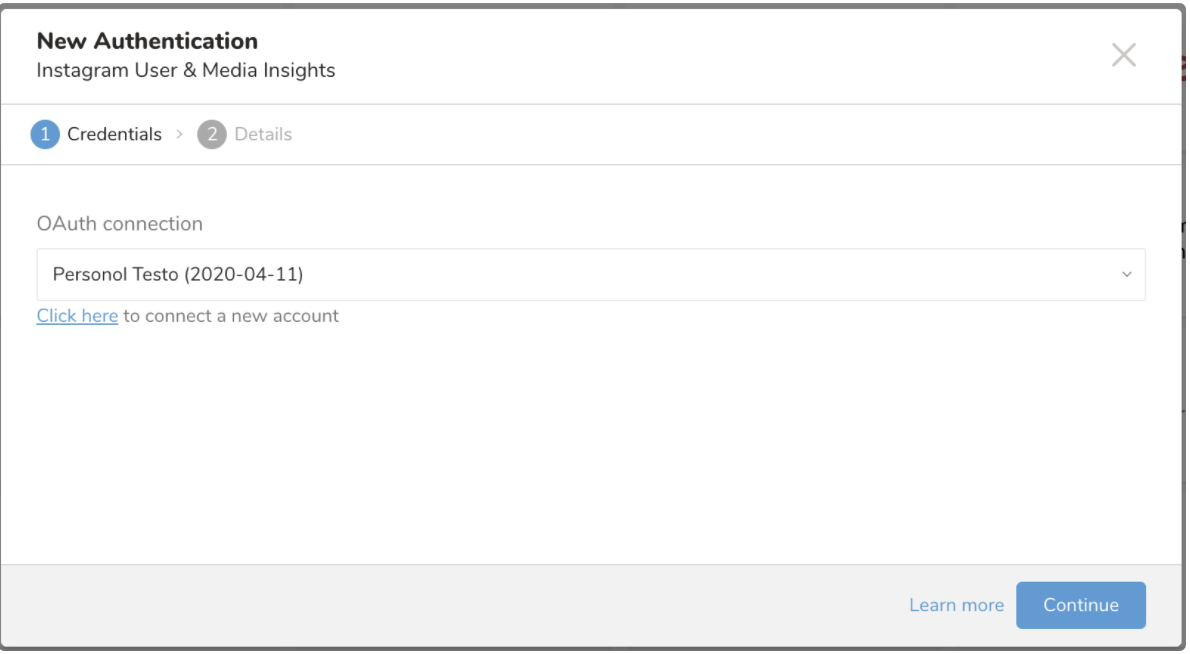

Select an existing OAuth connection for Instagram Insights, or select the link under OAuth connection to create a new one.

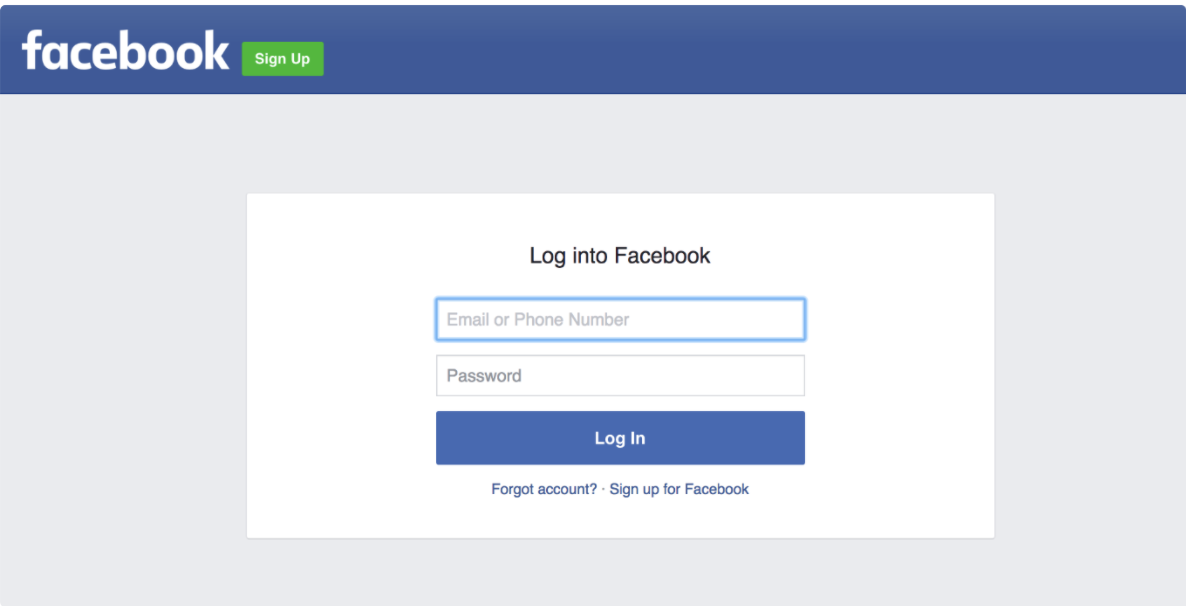

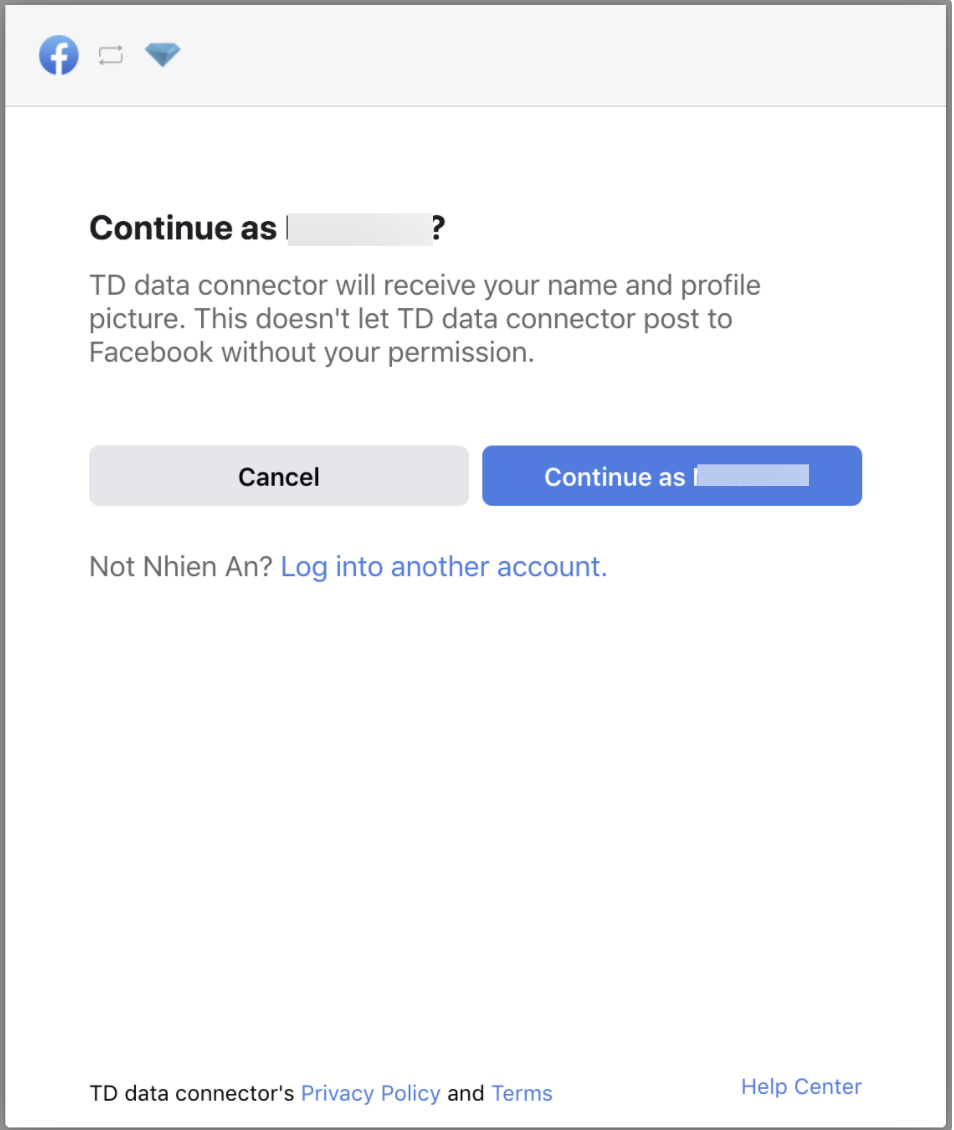

Instagram OAuth uses Facebook OAuth, so you are taken to the Facebook OAuth page.

Log in to your Facebook account.

Grant access to the Treasure Data app by selecting the Continue as button.

You are redirected back to the Catalog. Repeat the first step (Create a new authentication) and choose your new OAuth connection.

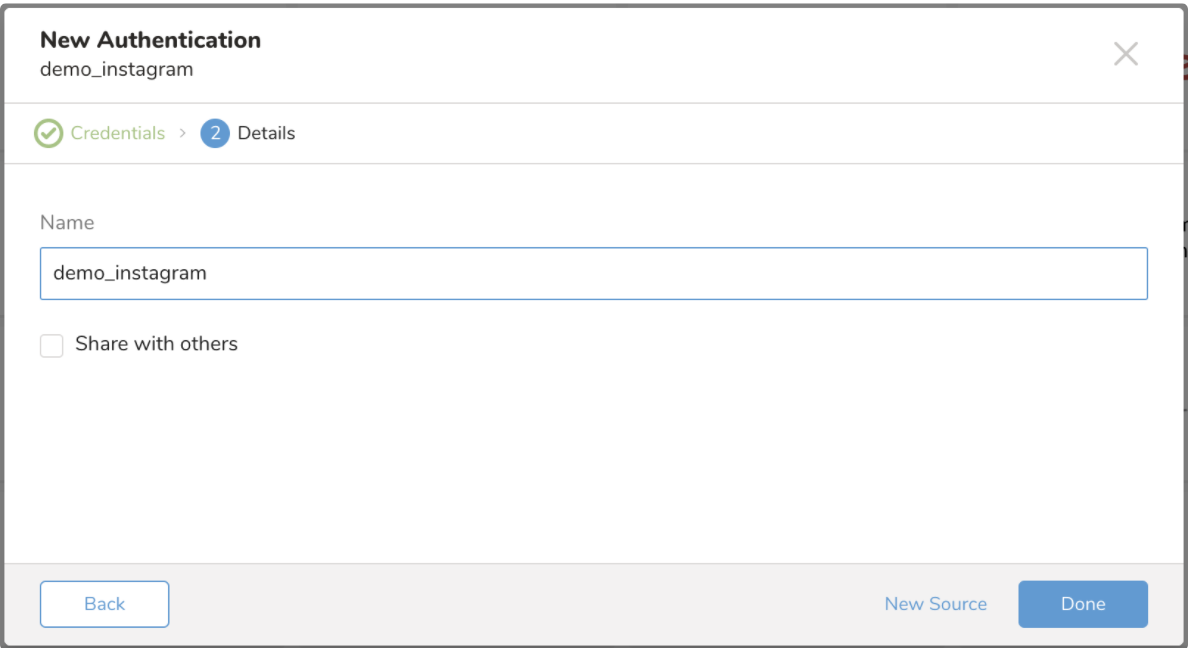

Name your new authentication. Select Done.

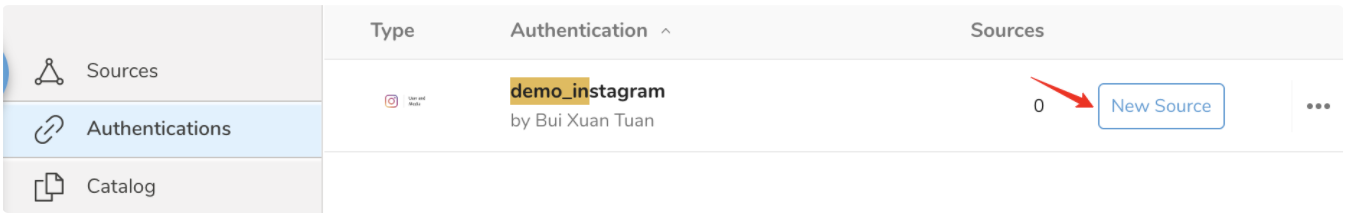

After creating the authenticated connection, you are automatically taken to Authentications.

- Search for the connection you created.

- Select New Source.

- The Connection page opens.

- Type a name for your Source in the Data Transfer field.

- Select Next. The Source Table page opens.

- Edit the following parameters in the Source Table.

| Parameter | Description |
|---|---|
| Facebook Page ID | Required. The Facebook page ID that is linked to the Instagram Business account. |
| Data type | Required. Select one of the following data types. |
| User | Insights are metrics collected about your Instagram Business account, such as impressions, follower_count*, website_clicks, and audience_locale. |
| Media | Insights are metrics collected from content posted to your Instagram Business account, such as engagement, impressions, reach, and saved. |
| Comments | Represents an organic Instagram comment. |
| Media List | Represents an Instagram photo, video, story, or album. |
| Tags | Represents a collection of IG Media objects in which an IG User has been tagged by another Instagram user. |
| Start Date & End Date | Required for the User data type. Both dates must use the format YYYY-MM-DD, for example 2017-11-21. The Start Date is inclusive and the End Date is exclusive. If not specified, the End Date defaults to the current time in the Instagram Business account's local time, and the Start Date defaults to 2 days before the End Date; these defaults are set by the Facebook API. Data is aggregated at the end of each day, so the insights for 2017-01-01 have end_time = 2017-01-02 00:00:00. |
| Use Individual Metrics | Select this option to choose which metrics to import. The dropdown lists all individual metrics for the selected data type, and you can select multiple metrics. Media metrics support only [period: lifetime]. User metrics support day, week, days_28, and lifetime. The engaged_audience_demographics, reached_audience_demographics, and follower_demographics metrics require these additional settings: breakdown, one or more comma-separated values from age, country, city, and gender; metric_type, select total_value; Timeframe, one of last_14_days, last_30_days, last_90_days, prev_month, this_month, or this_week. |
| Preset User Metrics | The default is Week metrics. You can select one of the following presets: Week metrics [period: week]; Days 28 metrics [period: days_28]; Day metrics [period: day]; Lifetime metrics [period: lifetime], which does not support incremental import. |
| Preset Media metrics | The default is All media metrics. You can select one of the following presets: All media metrics, which imports metrics for all content in the Instagram account; Story metrics, which imports metrics for Instagram Stories; Carousel metrics, which imports metrics for Instagram carousels; Photo and video metrics, which imports metrics only for Instagram images and videos* |
| Import media metrics | If a metric is supported by multiple media object types (for example IMAGE, VIDEO, and STORY), the metric values for all of those media objects are imported. |
| Import user metrics | User metrics support day, week, days_28, and lifetime. |
| Incremental Loading | You can schedule a job to run repeatedly. Each run covers a time range equal to the period between the Start Date and End Date, and new values are imported each time with no gap or duplication. For example, assume Start Date: 2017-10-01 and End Date: 2017-10-11. Each job covers the same time range, determined by the period between the start and end dates. Each transfer begins where the previous job ended, until the period would extend past the current date (the default end date). Further transfers are delayed until a complete period is available; the job then runs and pauses until the next period is available. 1st run: starting end_time 2017-10-01 07:00:00, ending end_time 2017-10-10 07:00:00. 2nd run: starting end_time 2017-10-11 07:00:00, ending end_time 2017-10-20 07:00:00. 3rd run: starting end_time 2017-10-21 07:00:00, ending end_time 2017-10-30 07 |

*As of May 9, 2021, according to the Facebook API changelog, the IG User follower_count metric returns a maximum of 30 days of data instead of 2 years, and its values align more closely with the corresponding values displayed in the Instagram app. As a result, when you select the user follower_count metric individually, the query period must fall within the last 30 days. When you import the entire User object and the query period is older than the last 30 days (excluding the current day), the User follower_count metric is returned as an empty value.

**Except for the online_followers metric, lifetime metrics require that the Incremental Loading checkbox is cleared and that the Start Date and End Date fields are empty.
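The date rules in the table and the notes above can be sketched in Python as three hypothetical helpers: applying the default range, computing consecutive incremental windows with no gap or duplication, and checking the 30-day follower_count limit. This is an illustration under stated assumptions, not the connector's implementation. It treats "current time" as a plain date, ignores the account's local time zone and the 07:00:00 end_time offsets shown in the example, and interprets "within the last 30 days" as a start no earlier than 30 days before today.

```python
from datetime import date, timedelta


def resolve_range(since, until, today):
    """Apply the documented defaults to an optional [since, until) range.

    until defaults to today (simplified from "current time in the account's
    local time"); since defaults to 2 days before until.
    """
    end = until if until is not None else today
    start = since if since is not None else end - timedelta(days=2)
    if start >= end:
        raise ValueError("Start Date must be earlier than End Date")
    return start, end


def incremental_windows(start, end, runs):
    """Return `runs` consecutive [start, end) windows of equal length.

    Each window starts exactly where the previous one ended, so there is
    no gap and no overlap between runs.
    """
    period = end - start
    return [(start + i * period, start + (i + 1) * period) for i in range(runs)]


def follower_count_range_ok(start, end, today):
    """Assumed reading of the 30-day rule: the range must start no earlier
    than 30 days before today, and its exclusive end must not pass today."""
    return start >= today - timedelta(days=30) and end <= today


if __name__ == "__main__":
    for window in incremental_windows(date(2017, 10, 1), date(2017, 10, 11), 3):
        print(window)
```

With the example dates, the windows are 2017-10-01 to 2017-10-11, 2017-10-11 to 2017-10-21, and 2017-10-21 to 2017-10-31, each ending where the next begins.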

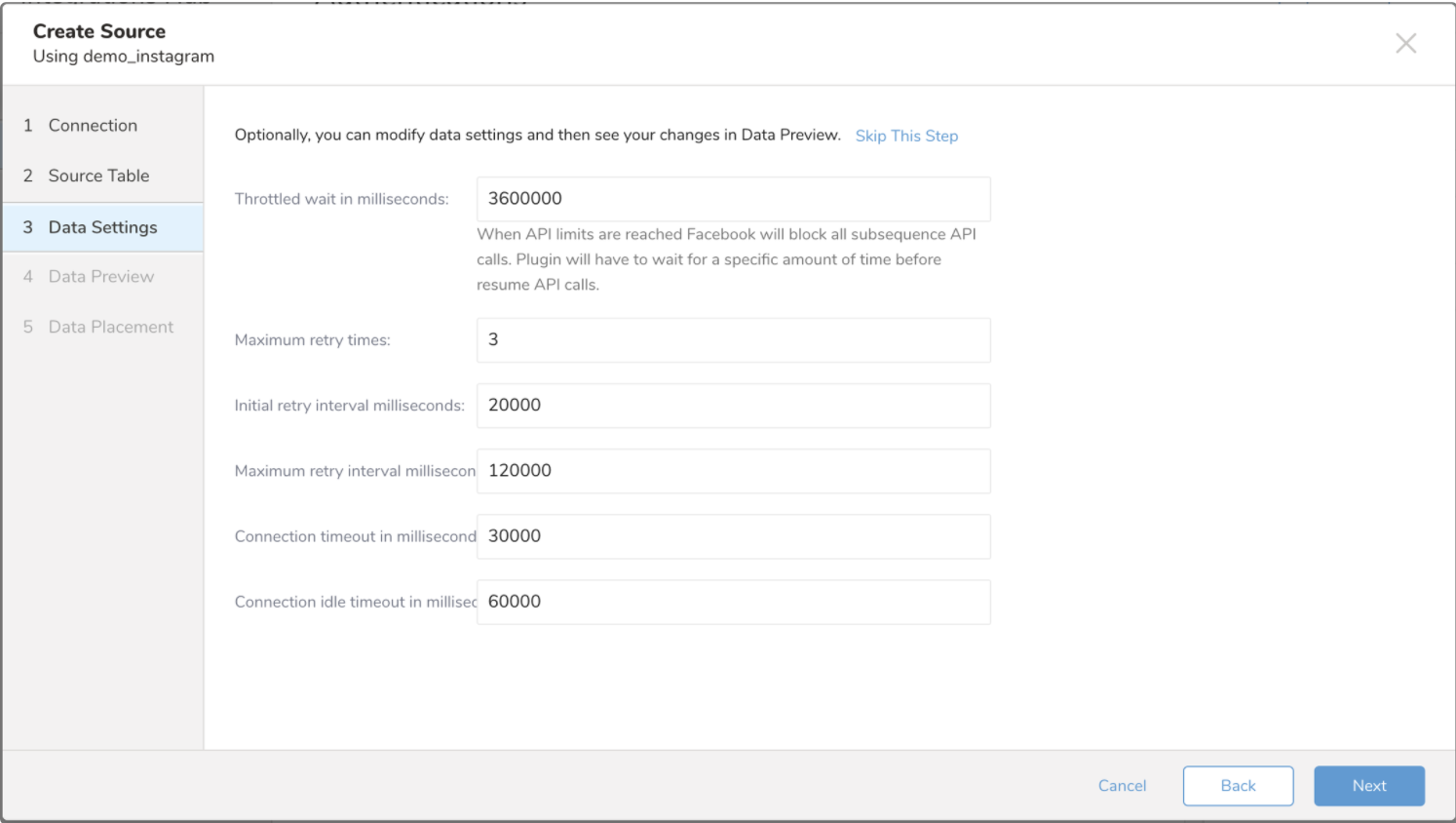

Data Settings allows you to customize the Transfer.

- Select Next. The Data Settings page opens.

- Optionally, edit the data settings or skip this page of the dialog.

You can see a preview of your data before running the import by selecting Generate Preview. Data preview is optional and you can safely skip to the next page of the dialog if you choose to.

- Select Next. The Data Preview page opens.

- If you want to preview your data, select Generate Preview.

- Verify the data.

For data placement, select the target database and table where you want your data placed and indicate how often the import should run.

Select Next. Under Storage, create a new database or select an existing one, and create a new table or select an existing one, for the imported data.

Select a Database > Select an existing or Create New Database.

Optionally, type a database name.

Select a Table > Select an existing or Create New Table.

Optionally, type a table name.

Choose the method for importing the data.

- Append (default): Data import results are appended to the table. If the table does not exist, it is created.

- Always Replace: Replaces the entire content of an existing table with the result output of the query. If the table does not exist, a new table is created.

- Replace on New Data: Replaces the entire content of an existing table with the result output only when there is new data.

Select the Timestamp-based Partition Key column. To use a partition key other than the default, specify a long or timestamp column as the partitioning time. By default, the time column is upload_time, added with the add_time filter.
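Conceptually, the default add_time filter stamps every imported record with the upload time in a `time` column, which is then used for partitioning. The following Python sketch mimics that behavior on plain dictionaries. It assumes that `time` holds Unix epoch seconds; the helper `add_upload_time` is hypothetical and does not reproduce the filter's actual implementation.

```python
from datetime import datetime, timezone


def add_upload_time(records, upload_time):
    """Return copies of `records` with a `time` column set to upload_time.

    Mimics the default add_time filter (from_value mode: upload_time,
    to_column name: time). Assumes `time` holds Unix epoch seconds.
    The input records are not modified.
    """
    if upload_time.tzinfo is None:
        raise ValueError("upload_time must be timezone-aware")
    epoch_seconds = int(upload_time.timestamp())
    return [{**record, "time": epoch_seconds} for record in records]


if __name__ == "__main__":
    rows = [{"metric": "impressions", "value": 42}]
    print(add_upload_time(rows, datetime(2020, 4, 10, tzinfo=timezone.utc)))
```

Because every record in one upload receives the same value, all rows from a single import land in the same time partition unless you choose a different partition key column.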

Select the Timezone for your data storage.

Under Schedule, you can choose when and how often you want to run this transfer.

- To run the transfer once, select Off, select the Scheduling Timezone, and then select Create & Run Now.

- To run the transfer on a schedule:

  - Select On.

  - Select the Schedule. The UI provides four options: @hourly, @daily, @monthly, or a custom cron expression.

  - Optionally, select Delay Transfer and add a delay to the execution time.

  - Select the Scheduling Timezone.

  - Select Create & Run Now.

After your transfer has run, you can see the results of your transfer in Data Workbench > Databases.

Install the newest Treasure Data Toolbelt, and then create a seed.yml configuration file like the following:

in:
  access_token: EAAZAB0rX...EOdIc5YqAZDZD
  type: instagram_insight
  facebook_page_name: '172411252833693'
  data_type: user
  incremental: true
  since: '2020-03-20'
  until: '2020-04-10'
  use_individual_metrics: true
  incremental_user_metrics:
    - value: email_contacts-day
    - value: impressions-days_28
out:
  mode: append

Configuration keys and descriptions are as follows:

| Config key | Type | Required | Description |
|---|---|---|---|
| access_token | string | yes | Facebook long-lived User Access token. |
| facebook_page_name | string | yes | Facebook page ID that is linked to the Instagram Business account. |
| data_type | string | yes | One of user, media, comments, media_list, or tags. |
| use_individual_metrics | boolean | no | Whether to choose the metrics to import manually. |
| incremental_user_preset_metric | string | no | User preset metrics to import when incremental is set to true. |
| incremental_user_metrics | array | no | User metrics to import when incremental is set to true. |
| non_incremental_user_preset_metric | string | no | User preset metrics to import when incremental is set to false. |
| non_incremental_user_metrics | array | no | User metrics to import when incremental is set to false. |
| media_metrics | array | no | Individual media metrics to transfer. |
| media_preset_metric | string | no | Media preset metrics to transfer. |
| incremental | boolean | no | When the job runs repeatedly, only the data since the last import is collected. |
| since | string | no | Import only data since this date; see Date Range Import. |
| until | string | no | Import only data until this date. |
| throttle_wait_in_millis | int | no | When API limits are reached, Facebook throttles (blocks) API calls, and API calls cannot resume until the specified amount of time has passed. Default: 3600000 |
| maximum_retries | int | no | Maximum number of retries for each API call. Default: 3 |
| initial_retry_interval_millis | int | no | Wait time before the first retry. Default: 20000 |
| maximum_retries_interval_millis | int | no | Maximum wait time between retries. Default: 120000 |
| connect_timeout_in_millis | int | no | Time before a connection attempt times out when calling the API. Default: 30000 |
| idle_timeout_in_millis | int | no | How long a connection can stay idle in the pool. Default: 60000 |
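Before running connector:guess, you can catch common mistakes in the `in:` section by checking it against the table above. The following Python sketch assumes the seed file has already been loaded into a dictionary (for example with a YAML library, not shown). The helper names are hypothetical, and it checks only the required keys, the data_type values, the string type of facebook_page_name, and the YYYY-MM-DD format and order of since and until.

```python
import re
from datetime import date

REQUIRED_KEYS = ("access_token", "facebook_page_name", "data_type")
DATA_TYPES = {"user", "media", "comments", "media_list", "tags"}
DATE_PATTERN = re.compile(r"\d{4}-\d{2}-\d{2}")


def parse_day(value):
    """Parse a strict YYYY-MM-DD string, raising ValueError otherwise."""
    if not isinstance(value, str) or not DATE_PATTERN.fullmatch(value):
        raise ValueError(f"expected YYYY-MM-DD, got {value!r}")
    # fromisoformat also rejects impossible dates such as 2020-02-30.
    return date.fromisoformat(value)


def validate_in_section(cfg):
    """Return a list of problems found in the `in:` section of a seed file."""
    errors = [f"missing required key: {key}" for key in REQUIRED_KEYS if not cfg.get(key)]
    # An unquoted numeric page ID is loaded as an integer, not a string.
    if "facebook_page_name" in cfg and not isinstance(cfg["facebook_page_name"], str):
        errors.append("facebook_page_name must be a string; quote the page ID")
    if cfg.get("data_type") and cfg["data_type"] not in DATA_TYPES:
        errors.append(f"unsupported data_type: {cfg['data_type']}")
    parsed = {}
    for key in ("since", "until"):
        if key in cfg:
            try:
                parsed[key] = parse_day(cfg[key])
            except ValueError as exc:
                errors.append(f"{key}: {exc}")
    if len(parsed) == 2 and parsed["since"] >= parsed["until"]:
        errors.append("since must be earlier than until")
    return errors


if __name__ == "__main__":
    seed_in = {
        "access_token": "EAAZAB0rX...EOdIc5YqAZDZD",
        "type": "instagram_insight",
        "facebook_page_name": "172411252833693",
        "data_type": "user",
        "since": "2020-03-20",
        "until": "2020-04-10",
    }
    print(validate_in_section(seed_in))
```

An empty list means none of these checks found a problem; it does not guarantee that the token or page ID is valid.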

For more details on available out modes, see Appendix.

Use connector:guess. This command automatically reads the target data and intelligently guesses the missing parts.

$ td connector:guess seed.yml -o load.yml

If you open the load.yml file, you see the guessed config.

---
in:
  access_token: EAAZA.....dIc5YqAZDZD
  type: instagram_insight
  facebook_page_name: '172411252833693'
  data_type: user
  incremental: true
  since: '2020-03-20'
  until: '2020-04-10'
  use_individual_metrics: true
  incremental_user_metrics:
  - {value: email_contacts-day}
  - {value: impressions-days_28}
out: {mode: append}
exec: {}
filters:
- from_value: {mode: upload_time}
  to_column: {name: time}
  type: add_time

Then you can preview the data by using the preview command.

$ td connector:preview load.yml

Submit the load job. It may take a couple of hours, depending on the data size. You must specify the database and table where the data is stored.

$ td connector:issue load.yml --database td_sample_db --table td_sample_table

The preceding command assumes that you have already created the database (td_sample_db) and table (td_sample_table). If the database or the table does not exist in TD, this command fails, so either create the database and table manually or use the --auto-create-table option with the td connector:issue command to create them automatically:

$ td connector:issue load.yml --database td_sample_db --table td_sample_table --time-column created_at --auto-create-table

Use the --time-column option to assign a time-format column as the partitioning key.

You can schedule periodic data connector execution. We configure our scheduler carefully to ensure high availability. With this feature, you no longer need a cron daemon in your local data center.

You create a new schedule with the td connector:create command. You must specify the name of the schedule, a cron-style schedule, the database and table where the data is stored, and the data connector configuration file.

$ td connector:create \
  daily_users_import \
  "10 0 * * *" \
  td_sample_db \
  td_sample_table \
  load.yml

The cron parameter also accepts three special options: @hourly, @daily, and @monthly.
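The five cron fields are minute, hour, day of month, month, and day of week, so "10 0 * * *" runs at 00:10 every day. The following Python sketch checks whether a given moment matches a simple cron expression. It supports only `*` and single integers per field, expands the special options to their conventional cron equivalents (an assumption about how the scheduler interprets them), and ignores the Scheduling Timezone. The helper `cron_matches` is hypothetical.

```python
from datetime import datetime

# Conventional cron equivalents of the special options (assumption).
SPECIAL = {
    "@hourly": "0 * * * *",
    "@daily": "0 0 * * *",
    "@monthly": "0 0 1 * *",
}


def cron_matches(expr, moment):
    """Return True if `moment` matches a five-field cron expression.

    Supports only '*' and single integers in each field: minute, hour,
    day of month, month, day of week (0 = Sunday). Ranges, lists, steps,
    and the day-of-month/day-of-week OR rule are not implemented.
    """
    expr = SPECIAL.get(expr, expr)
    fields = expr.split()
    if len(fields) != 5:
        raise ValueError(f"expected 5 fields, got {expr!r}")
    actual = (
        moment.minute,
        moment.hour,
        moment.day,
        moment.month,
        (moment.weekday() + 1) % 7,  # Python: Monday = 0; cron: Sunday = 0
    )
    return all(field == "*" or int(field) == value for field, value in zip(fields, actual))


if __name__ == "__main__":
    print(cron_matches("10 0 * * *", datetime(2020, 4, 10, 0, 10)))
    print(cron_matches("10 0 * * *", datetime(2020, 4, 10, 1, 10)))
```

The first call matches (00:10) and the second does not (01:10), which is why the schedule above runs once a day shortly after midnight.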

You can load records incrementally by setting the incremental option to true.

in:
  type: instagram_insight
  ...
  incremental: true
out:
  mode: append

If you're using scheduled execution, the connector automatically saves the last import time (the time_created value) and holds it internally. That value is then used at the next scheduled execution.

in:
  type: instagram_insight
  ...
out:
  ...

Config Diff
---
in:
  time_created: '2020-02-02T15:46:25Z'

You can see the list of scheduled entries by running td connector:list.

$ td connector:list

td connector:show shows the execution settings of a schedule entry.

$ td connector:show daily_users_import

td connector:history shows the execution history of a schedule entry. To investigate the results of an individual execution, use td job jobid.

td connector:delete removes the schedule.

$ td connector:delete daily_users_import

You can specify the import mode in the out section of the load.yml file.

The out: section controls how data is imported into a Treasure Data table. For example, you may choose to append data or replace data in an existing table in Treasure Data.

| Mode | Description | Examples |
|---|---|---|
| Append | Records are appended to the target table. | in: ... out: mode: append |
| Always Replace | Replaces data in the target table. Any manual schema changes made to the target table remain intact. | in: ... out: mode: replace |
| Replace on new data | Replaces data in the target table only when there is new data to import. | in: ... out: mode: replace_on_new_data |
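The difference between the three modes can be modeled in a few lines of Python. In this simplified sketch a table is a list of rows, `existing` is None when the target table does not exist yet, and schema handling (such as preserving manual schema changes under replace) is not modeled. The helper `apply_mode` is hypothetical.

```python
def apply_mode(existing, incoming, mode):
    """Return the table contents after an import in the given out mode.

    Simplified model of the documented semantics: `existing` is None when
    the target table does not exist yet, in which case it is created.
    """
    current = existing or []
    if mode == "append":
        # New records are added after the existing ones.
        return current + list(incoming)
    if mode == "replace":
        # The table always ends up holding only this import's records.
        return list(incoming)
    if mode == "replace_on_new_data":
        # Keep the old contents when the import brings no new records.
        return list(incoming) if incoming else list(current)
    raise ValueError(f"unknown mode: {mode}")


if __name__ == "__main__":
    table = [{"day": "2020-03-20"}]
    print(apply_mode(table, [], "replace"))
    print(apply_mode(table, [], "replace_on_new_data"))
```

The usage lines show the practical difference: with an empty import, replace leaves the table empty, while replace_on_new_data keeps the existing rows.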