You can install the newest TD Toolbelt.

    $ td --version
    0.15.0

Prepare a configuration file (for example, load.yml) as shown in the following example, with your HubSpot account access information.

    in:
      type: hubspot
      client_id: xxxxxxxxxxxxx
      client_secret: xxxxxxxxxxxxx
      refresh_token: xxxxxxxxxxxxx
      target: contacts
      additional_properties: prop_1, prop_2, prop_3
      retry_intial_wait_msec: 500
      retry_limit: 3
      max_retry_wait_msec: 30000
      from_date: 2016-09-01
      fetch_days: 2
      incremental: true
      connect_timeout_millis: 60000
      idle_timeout_millis: 60000
    out:
      mode: replace

This example dumps the HubSpot Contact object:

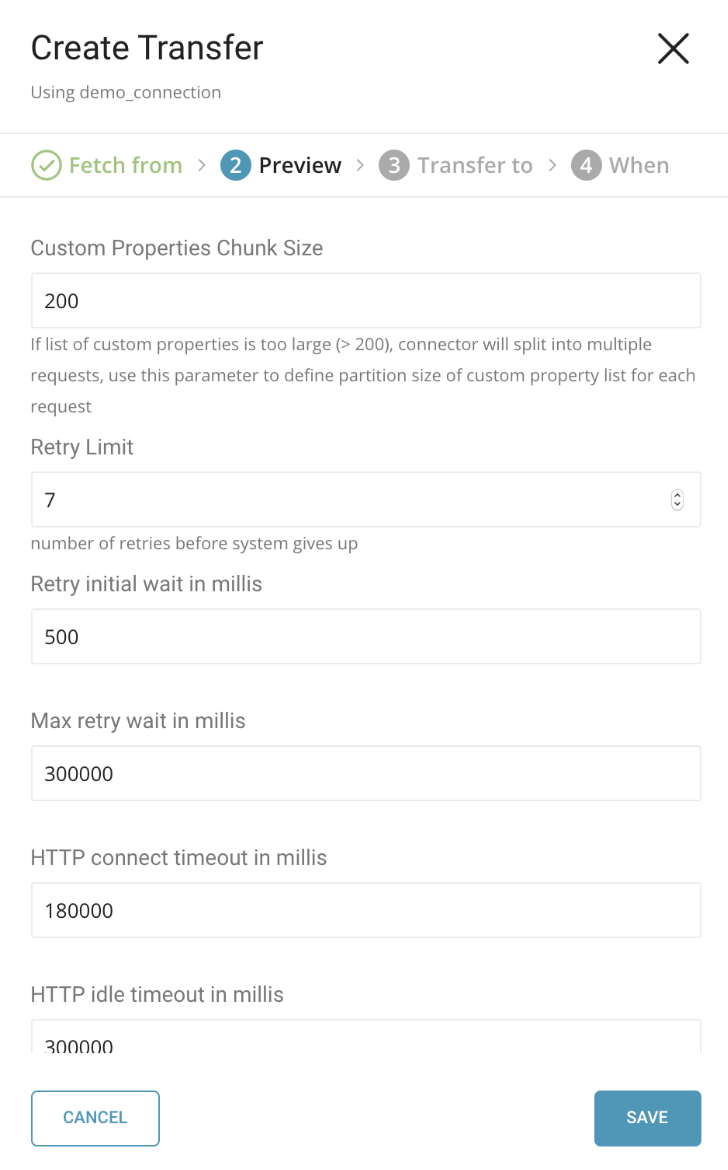

- client_id and client_secret: Your HubSpot app credentials (string, required).
- refresh_token: HubSpot OAuth2 refresh token. You need to grant access to your HubSpot app using a HubSpot user account (string, required).
- target: The HubSpot object you want to import. Supported values: contacts, engagements, companies, contact_lists, email_events, deals, properties, and search.
- additional_properties: A comma-separated list of extra custom properties. This field is not necessary in most cases. It only takes effect when target is contacts, companies, or deals. The list you provide is merged with the list of Custom Properties from the HubSpot API (string, optional).
- custom_properties_chunk_size: If the list of custom properties is too large (> 200), the connector splits it across multiple requests. Use this parameter to define the partition size of the custom property list for each request (integer, optional, default: 200).
- object_names: The comma-separated list of HubSpot objects. This is required for the properties target only.
- object_name: The HubSpot object. This is required for the search target only.
- fetch_all_properties: The flag to fetch all properties of the search target. This is required for the search target only.
- incremental_column: Supports only the date or datetime type. This is required for the search target.
- start_time: Start time for the search object.
- end_time: End time for the search object.
- retry_intial_wait_msec: Initial retry wait time in milliseconds. Default: 1000.
- retry_limit: Maximum number of retries. Default: 7.
- max_retry_wait_msec: Maximum retry wait time in milliseconds. Default: 30000.
- from_date: Import data from this date, in the format YYYY-MM-DD. This is required for contacts, companies, deals, and email_events.
- fetch_days: Number of days of data to import. Default: 1. This is required for contacts, companies, deals, and email_events.
- incremental: Determines whether the data import is continual or one time. Default: false (not incremental).
- connect_timeout_millis: The maximum time, in milliseconds, a connection can take to connect to destinations. Default: 60000.
- idle_timeout_millis: The maximum time, in milliseconds, a connection can be idle (that is, without data traffic in either direction). Default: 60000.
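The three retry parameters interact, and a small calculation makes the effect of a given configuration easier to see. The document does not state the connector's backoff policy, so the sketch below assumes the wait doubles after each failed attempt and is capped at max_retry_wait_msec. The function name retry_waits is hypothetical and is not part of the connector.

```python
def retry_waits(initial_wait_msec=1000, retry_limit=7, max_wait_msec=30000):
    """Return the wait in milliseconds before each retry.

    ASSUMPTION: exponential doubling capped at max_wait_msec. The actual
    policy used by the connector is not documented here.
    """
    waits = []
    wait = initial_wait_msec
    for _ in range(retry_limit):
        waits.append(min(wait, max_wait_msec))
        wait *= 2
    return waits


# Values from the example configuration: 500 ms initial wait, 3 retries.
print(retry_waits(500, 3, 30000))
# Documented defaults: 1000 ms initial wait, 7 retries, 30000 ms cap.
print(retry_waits())
```

Under this assumption, the defaults reach the 30000 ms cap on the sixth retry, so raising retry_limit further only adds waits of the capped length.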

For more details on available out modes, see Modes for Out Plugin.

You can preview data to be imported using the command td connector:preview.

    $ td connector:preview load.yml

Submit the load job. It may take a couple of hours depending on the data size. You need to specify the database and table where the data will be stored.

We recommend specifying the --time-column option, because Treasure Data’s storage is partitioned by time. If the option is not given, the data connector selects the first long or timestamp column as the partitioning time. The column specified by --time-column must be of type long or timestamp.
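The selection rule described above can be sketched as a small function. This is only an illustration of the stated behavior, not the connector's code: the function name pick_time_column and the sample schema (including the column names email and vid) are hypothetical.

```python
ALLOWED_TIME_TYPES = {"long", "timestamp"}


def pick_time_column(schema, time_column=None):
    """Choose the partitioning column from an ordered list of (name, type) pairs."""
    if time_column is not None:
        # An explicit --time-column must be of type long or timestamp.
        column_types = dict(schema)
        if column_types.get(time_column) not in ALLOWED_TIME_TYPES:
            raise ValueError(f"{time_column} must be a long or timestamp column")
        return time_column
    # Without the option, the first long or timestamp column is used.
    for name, column_type in schema:
        if column_type in ALLOWED_TIME_TYPES:
            return name
    return None


# Hypothetical schema, for illustration only.
schema = [("email", "string"), ("vid", "long"), ("updated_date", "timestamp")]
print(pick_time_column(schema))                  # falls back to the first long column
print(pick_time_column(schema, "updated_date"))  # explicit choice
```

The first call shows why the explicit option is worth setting: the fallback picks vid simply because it is the first long column, even though it is not a time value.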

If your data doesn’t have a time column, you can add one using the add_time filter option. For more details, see add_time Filter Function.

    $ td connector:issue load.yml --database td_sample_db --table td_sample_table --time-column updated_date

The above command assumes you have already created the database (td_sample_db) and table (td_sample_table). If the database or the table does not exist in TD, the command fails, so create the database and table manually or use the --auto-create-table option with td connector:issue to create them automatically:

    $ td connector:issue load.yml --database td_sample_db --table td_sample_table --time-column updated_date --auto-create-table

You can assign a time-format column as the partitioning key with the --time-column option.

HubSpot API supports incremental loading for Contacts, Companies, Email Events, Deals, and Search.

Companies, Contacts, and Deals will only return records modified in the last 30 days, or the 10k most recently modified records.

If incremental is set to true, the data connector loads records according to the date and number of days specified in from_date and fetch_days.

For example:

    from_date: 2016-09-01T00:00:00.000Z
    fetch_days: 2

- 1st iteration: The data connector fetches records from Sep 01 00:00:00 UTC 2016 to Sep 03 00:00:00 UTC 2016.
- 2nd iteration: The data connector starts at Sep 03 00:00:00 UTC 2016 and fetches records from Sep 03 00:00:00 UTC 2016 to Sep 05 00:00:00 UTC 2016, and so on for each subsequent incremental run for the specified target.
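The iteration windows above follow a simple rule: each run covers fetch_days days, and the next run starts where the previous one ended. A minimal sketch of that arithmetic is shown below. The function name incremental_windows is hypothetical, and the code only models the dates described here, not the connector's internals.

```python
from datetime import datetime, timedelta, timezone


def incremental_windows(from_date, fetch_days, iterations):
    """Return (start, end) pairs for consecutive incremental runs."""
    windows = []
    start = from_date
    for _ in range(iterations):
        end = start + timedelta(days=fetch_days)
        windows.append((start, end))
        start = end  # the next run begins where this one ended
    return windows


first_run = datetime(2016, 9, 1, tzinfo=timezone.utc)
for start, end in incremental_windows(first_run, fetch_days=2, iterations=2):
    print(start.isoformat(), "->", end.isoformat())
```

With from_date 2016-09-01 and fetch_days 2, the two windows are Sep 01 to Sep 03 and Sep 03 to Sep 05, matching the iterations listed above.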

If incremental is set to false, the data connector loads all records for the target specified. This is a one-time activity.

The API v3 Search target uses a different incremental configuration:

    incremental_column: createdate
    start_time: 2016-09-01T00:00:00.000Z
    end_time: 2017-09-01T00:00:00.000Z

Most HubSpot API endpoints return 250 records per page. However, deals return 100 records per page for the incremental endpoint and 250 records per page for the non-incremental endpoint.
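The page sizes above give a rough estimate of how many requests an import needs. The sketch below is only an estimate: it assumes every target other than incremental deals uses 250 records per page, although the text says this holds for most endpoints, not all. The function names are hypothetical.

```python
import math


def page_size(target, incremental):
    """Records per page, per the figures quoted above."""
    if target == "deals" and incremental:
        return 100
    # ASSUMPTION: all other cases use 250, which is true for "most" endpoints.
    return 250


def pages_needed(record_count, target, incremental):
    """Estimate the number of page requests for record_count records."""
    return math.ceil(record_count / page_size(target, incremental))


print(pages_needed(1000, "deals", incremental=True))
print(pages_needed(1000, "deals", incremental=False))
```

For 1000 deals, the incremental endpoint needs 10 pages while the non-incremental endpoint needs 4.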

For Email Events, the HubSpot API supports events that belong to each Campaign ID and App ID. Events are fetched page by page for every combination of Campaign and App.

The data connector supports the following HubSpot objects: Contacts, Contact Lists, Companies, Engagements, Email Events, Deals, API v3 Properties, and API v3 Search. Specify each object by its target name, in the following format:

| HubSpot object | Target Name |
|---|---|
| Contacts | contacts |
| Contact Lists | contact_lists |
| Companies | companies |
| Engagements | engagements |
| Email Events | email_events |
| Deals | deals |
| API v3 Properties | properties |
| API v3 Search | search |
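Because several parameters are required only for certain targets, it can help to check the in: section before submitting a job. The sketch below encodes the target names from the table and the per-target requirements stated in the parameter descriptions. It is a hypothetical pre-flight check, not part of the TD Toolbelt, and the names missing_fields and REQUIRED_BY_TARGET are illustrative.

```python
SUPPORTED_TARGETS = {
    "contacts", "contact_lists", "companies", "engagements",
    "email_events", "deals", "properties", "search",
}

ALWAYS_REQUIRED = ["client_id", "client_secret", "refresh_token", "target"]

# Per-target requirements, taken from the parameter descriptions.
REQUIRED_BY_TARGET = {
    "contacts": ["from_date"],
    "companies": ["from_date"],
    "deals": ["from_date"],
    "email_events": ["from_date"],
    "properties": ["object_names"],
    "search": ["object_name", "fetch_all_properties", "incremental_column"],
}


def missing_fields(in_config):
    """Return the required keys that are absent from the in: section (a dict)."""
    target = in_config.get("target")
    if target not in SUPPORTED_TARGETS:
        raise ValueError(f"unsupported target: {target!r}")
    needed = ALWAYS_REQUIRED + REQUIRED_BY_TARGET.get(target, [])
    return [key for key in needed if key not in in_config]


config = {
    "client_id": "x", "client_secret": "x", "refresh_token": "x",
    "target": "search", "object_name": "contacts",
}
print(missing_fields(config))
```

For the sample search configuration, the check reports fetch_all_properties and incremental_column as missing.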

The data connector now supports Custom Property. The data connector automatically pulls data of all Custom Properties for supported targets: contacts, companies and deals. The feature is enabled by default, so you don’t need to take any action whenever you create a new Custom Property.

In most cases, you don’t have to specify “Additional Custom Properties” field because all Custom Properties are pulled automatically from HubSpot API. If you suspect that some Custom Properties are missing from imported data, you can specify “Additional Custom Properties”, using the format: prop_1, prop_2, prop_3,... (comma-separated)

The list you enter is then merged with the list of Custom Properties from the HubSpot API. If the final list of Custom Properties is large (> 200), the data connector splits each request into multiple requests to avoid the URL length limit, because the HubSpot API uses the GET method.

You can decide the list size to split under “Advanced Settings” when you create “New Transfer”.
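The merge-and-split behavior can be pictured with a short sketch. This is an illustration under stated assumptions, not the connector's implementation: it assumes duplicate property names are dropped during the merge, and the function names merge_properties and chunk are hypothetical.

```python
def merge_properties(api_properties, additional_properties):
    """Merge the API's property list with a comma-separated additional list."""
    extra = [p.strip() for p in additional_properties.split(",") if p.strip()]
    # ASSUMPTION: duplicates are removed; dict.fromkeys keeps first-seen order.
    return list(dict.fromkeys(list(api_properties) + extra))


def chunk(properties, size=200):
    """Split the merged list into request-sized groups of at most `size` names."""
    return [properties[i:i + size] for i in range(0, len(properties), size)]


api_properties = [f"prop_{i}" for i in range(449)]
merged = merge_properties(api_properties, "prop_1, extra_a")
print(len(merged), [len(group) for group in chunk(merged)])
```

Here 449 properties from the API plus one new name give 450 properties, which are sent as groups of 200, 200, and 50. A smaller chunk size produces more requests with shorter URLs.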

You can schedule periodic data connector execution for periodic HubSpot import. We configure our scheduler carefully to ensure high availability. By using this feature, you no longer need a cron daemon on your local datacenter.

A new schedule can be created using the td connector:create command. The name of the schedule, a cron-style schedule, the database and table where the data will be stored, and the Data Connector configuration file are required.

    $ td connector:create daily_hubspot_import "10 0 * * *" td_sample_db td_sample_table load.yml

The cron parameter also accepts these three options: @hourly, @daily and @monthly.

By default, the schedule is set up in the UTC timezone. You can set the schedule in a different timezone using the -t or --timezone option. The --timezone option only supports extended timezone formats such as Asia/Tokyo and America/Los_Angeles. Timezone abbreviations such as PST and CST are not supported and may lead to unexpected schedules.

You can see the list of scheduled entries by typing td connector:list.

    $ td connector:list
    +-----------------------+--------------+----------+-------+--------------+-----------------+----------------------------+
    | Name                  | Cron         | Timezone | Delay | Database     | Table           | Config                     |
    +-----------------------+--------------+----------+-------+--------------+-----------------+----------------------------+
    | daily_hubspot_import  | 10 0 * * *   | UTC      | 0     | td_sample_db | td_sample_table | {"type"=>"hubspot", ... }  |
    +-----------------------+--------------+----------+-------+--------------+-----------------+----------------------------+

td connector:show shows the execution setting of a schedule entry.

    % td connector:show daily_hubspot_import
    Name     : daily_hubspot_import
    Cron     : 10 0 * * *
    Timezone : UTC
    Delay    : 0
    Database : td_sample_db
    Table    : td_sample_table

td connector:history shows the execution history of a schedule entry. To investigate the results of each individual execution, use td job jobid.

    % td connector:history daily_hubspot_import
    +--------+---------+---------+--------------+-----------------+----------+---------------------------+----------+
    | JobID  | Status  | Records | Database     | Table           | Priority | Started                   | Duration |
    +--------+---------+---------+--------------+-----------------+----------+---------------------------+----------+
    | 578066 | success | 10000   | td_sample_db | td_sample_table | 0        | 2015-04-18 00:10:05 +0000 | 160      |
    | 577968 | success | 10000   | td_sample_db | td_sample_table | 0        | 2015-04-17 00:10:07 +0000 | 161      |
    | 577914 | success | 10000   | td_sample_db | td_sample_table | 0        | 2015-04-16 00:10:03 +0000 | 152      |
    | 577872 | success | 10000   | td_sample_db | td_sample_table | 0        | 2015-04-15 00:10:04 +0000 | 163      |
    | 577810 | success | 10000   | td_sample_db | td_sample_table | 0        | 2015-04-14 00:10:04 +0000 | 164      |
    | 577766 | success | 10000   | td_sample_db | td_sample_table | 0        | 2015-04-13 00:10:04 +0000 | 155      |
    | 577710 | success | 10000   | td_sample_db | td_sample_table | 0        | 2015-04-12 00:10:05 +0000 | 156      |
    | 577610 | success | 10000   | td_sample_db | td_sample_table | 0        | 2015-04-11 00:10:04 +0000 | 157      |
    +--------+---------+---------+--------------+-----------------+----------+---------------------------+----------+
    8 rows in set

td connector:delete removes the schedule.

    $ td connector:delete daily_hubspot_import

You can specify the file import mode in the out section of the load.yml file.

The out: section controls how data is imported into a Treasure Data table. For example, you may choose to append data or replace data in an existing table in Treasure Data.

| Mode | Description | Examples |
| --- | --- | --- |
| Append | Records are appended to the target table. | in: ... out: mode: append |
| Always Replace | Replaces data in the target table. Any manual schema changes made to the target table remain intact. | in: ... out: mode: replace |
| Replace on new data | Replaces data in the target table only when there is new data to import. | in: ... out: mode: replace_on_new_data |
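The difference between the three modes is easiest to see when an import returns no records. The sketch below models a table as a plain list and applies each mode as described in the table. It is a simplified model for illustration only: the function name apply_mode is hypothetical, and it assumes that replace with an empty import leaves the table empty.

```python
def apply_mode(existing, incoming, mode):
    """Return the table contents after an import, modelling tables as lists."""
    if mode == "append":
        return existing + incoming
    if mode == "replace":
        # ASSUMPTION: an empty import still replaces, leaving an empty table.
        return list(incoming)
    if mode == "replace_on_new_data":
        # The existing data is kept when the import brings nothing new.
        return list(incoming) if incoming else list(existing)
    raise ValueError(f"unknown mode: {mode!r}")


table = ["row_1", "row_2"]
for mode in ("append", "replace", "replace_on_new_data"):
    print(mode, apply_mode(table, [], mode))
```

With an empty import, append and replace_on_new_data both leave the two existing rows in place, while replace clears the table under the assumption stated above.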