You can import data files from Google Drive into Treasure Data. This data connector supports only CSV and TSV file or gzip of CSV and TSV files.

- Basic knowledge of Treasure Data, including the TD Toolbelt

- A Google Account

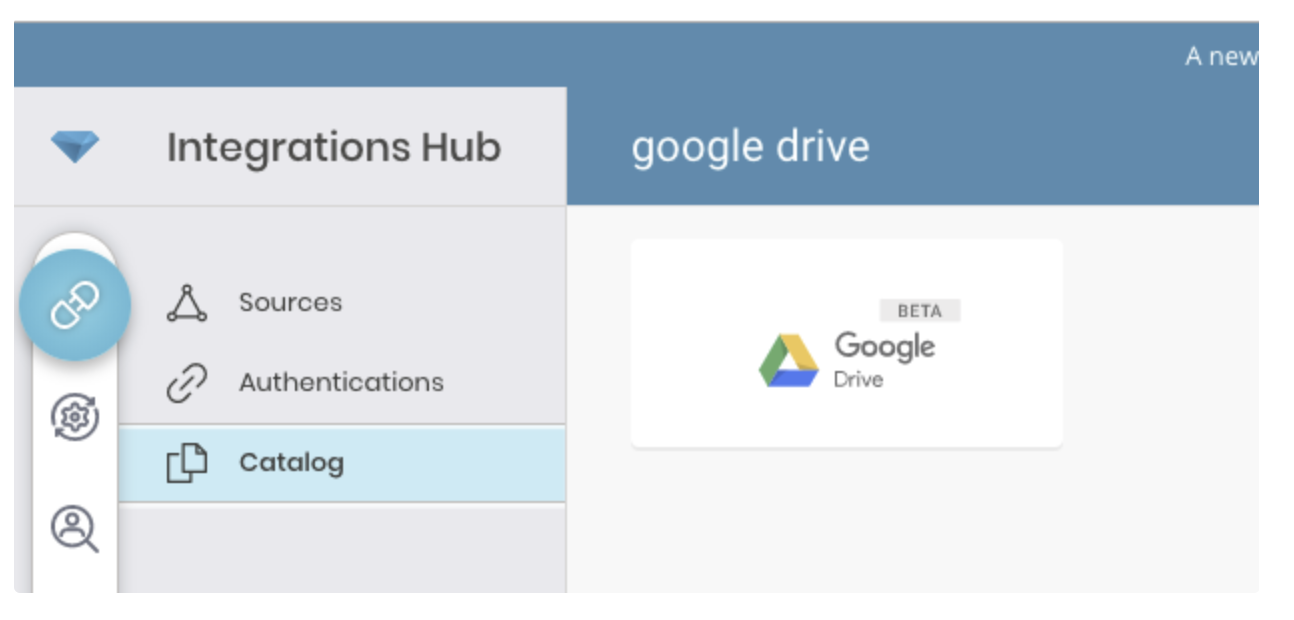

Go to Integrations Hub > Catalog and search and select Google Drive.

Select Create. You are creating an authenticated connection.

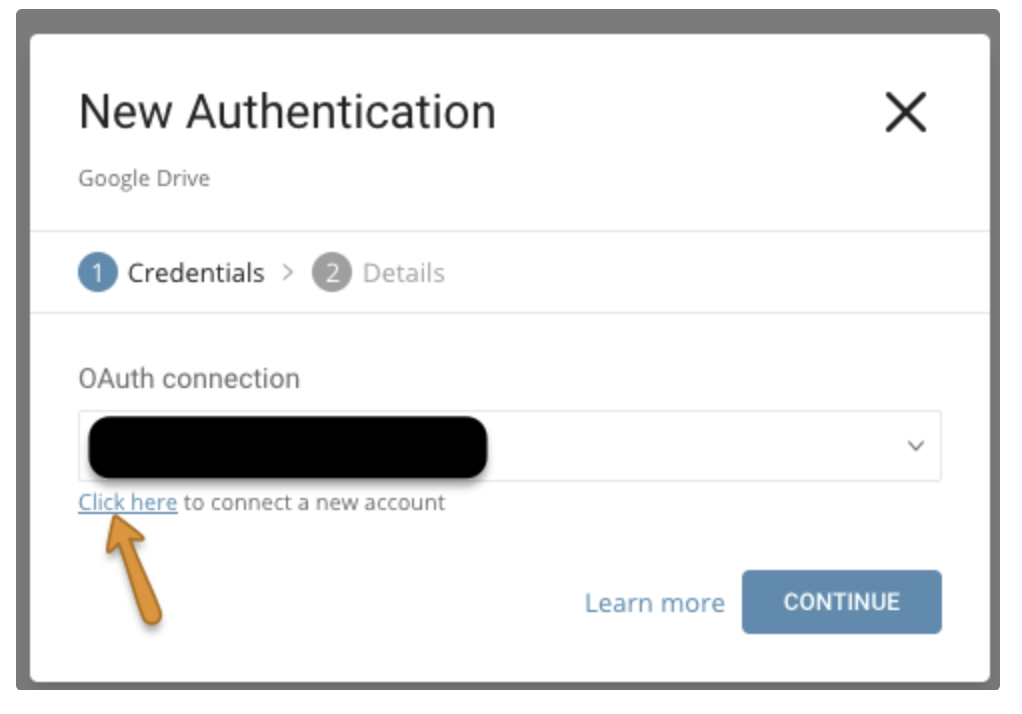

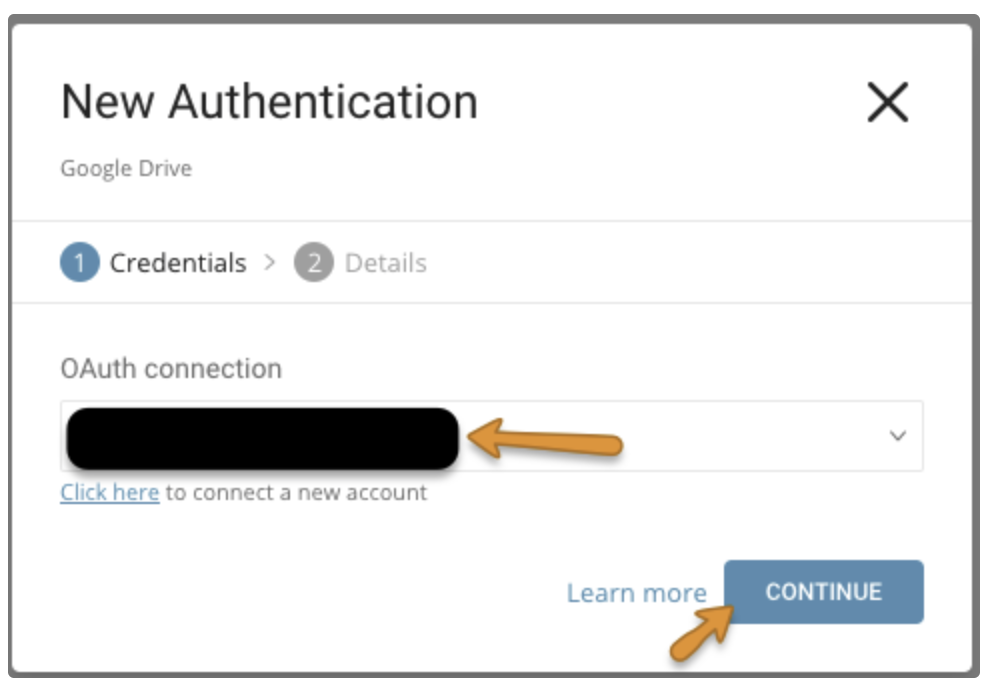

The following dialog opens.

Access to Google Drive requires OAuth2 authentication.

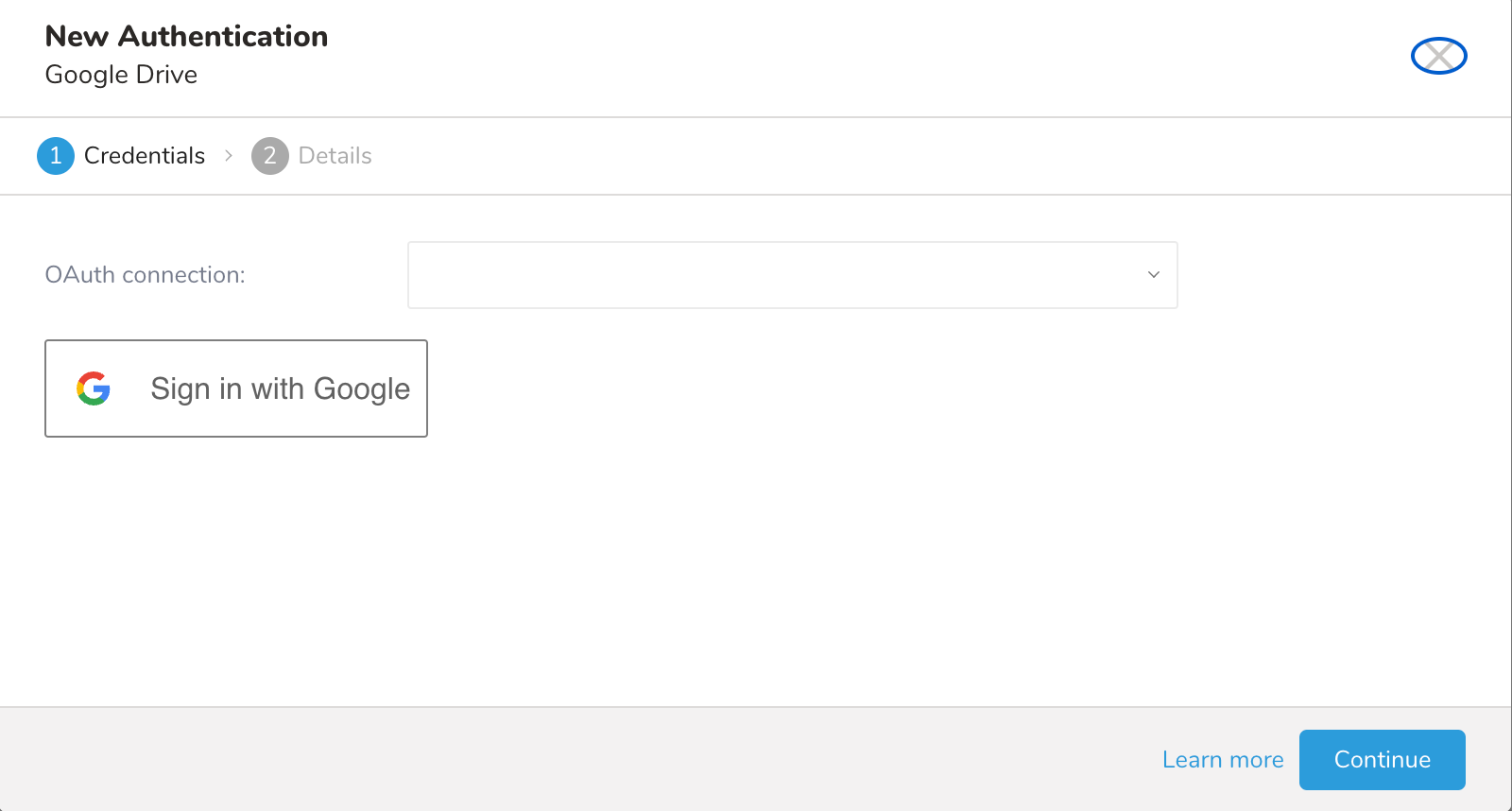

Select Click here to connect to your Google account.

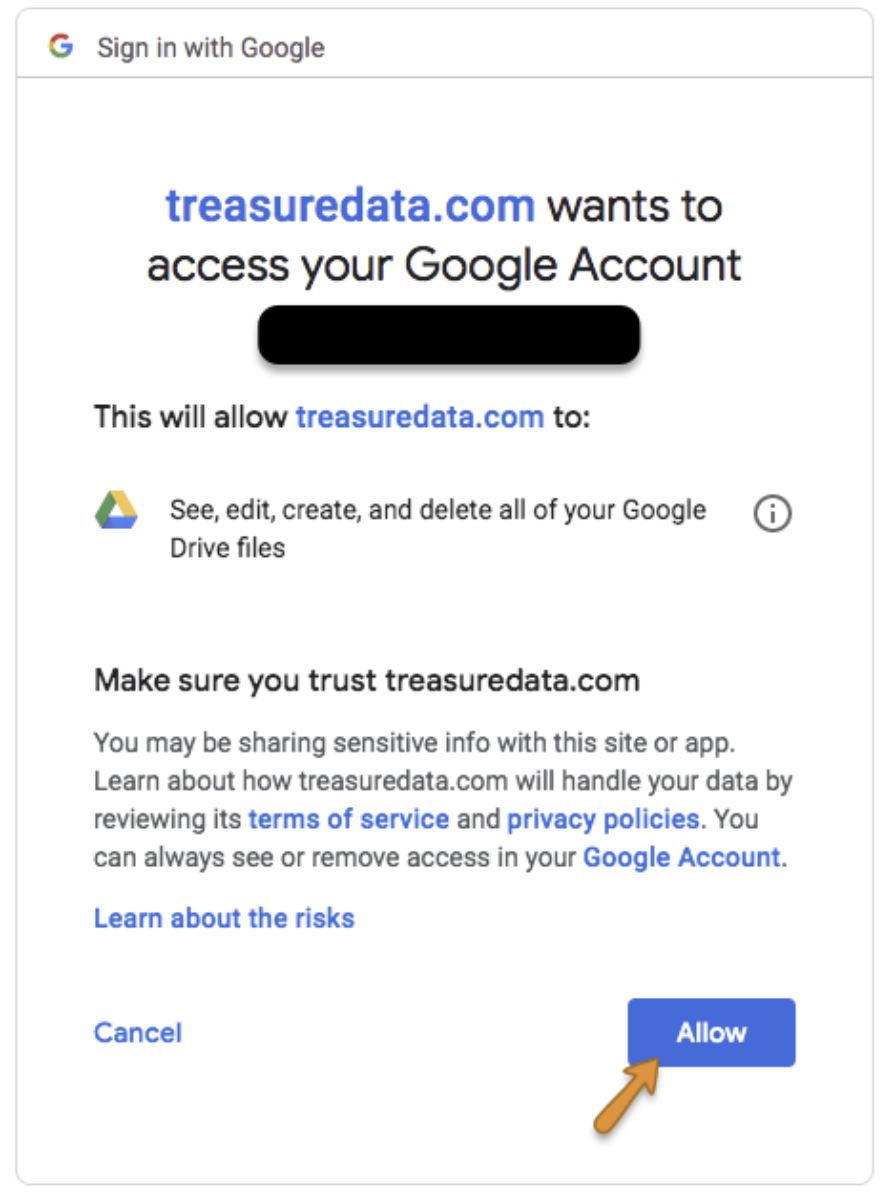

Log into your Google account in the popup window and grant access to the Treasure Data app.

You will be redirected back to TD Console. Repeat the "Create a new connection" step and choose your new OAuth connection. Select Continue.

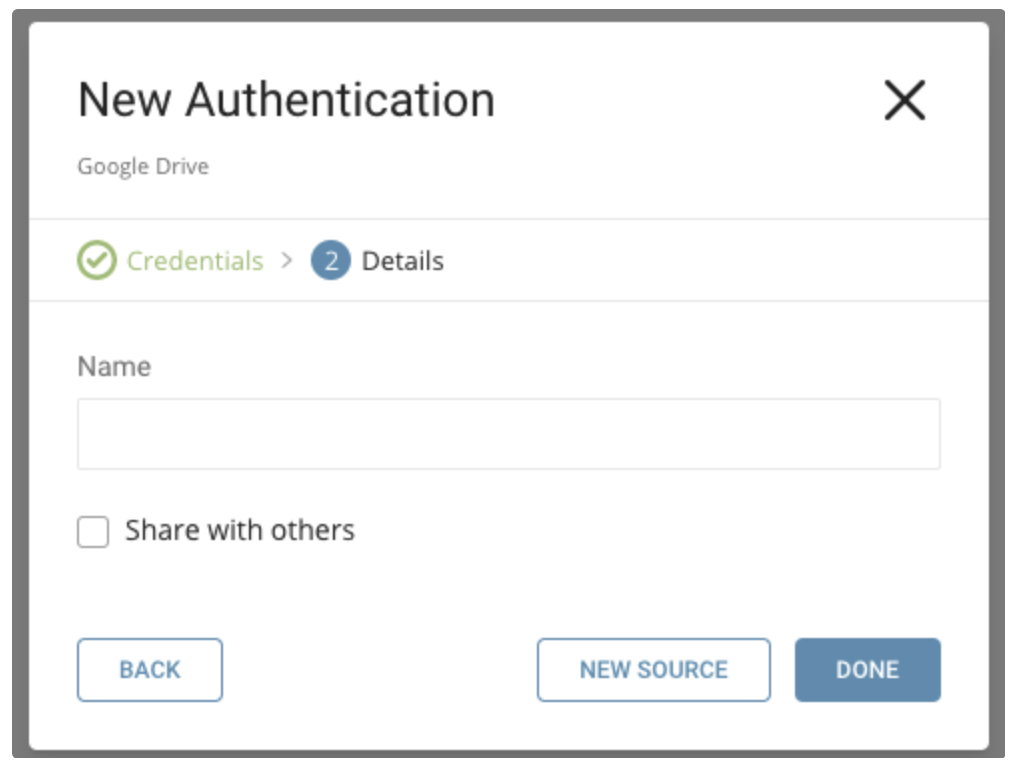

Name your new Google Drive Connection. Select Done.

After creating the authenticated connection, you are automatically taken to the Authentications tab. Look for the connection you created and select New Source.

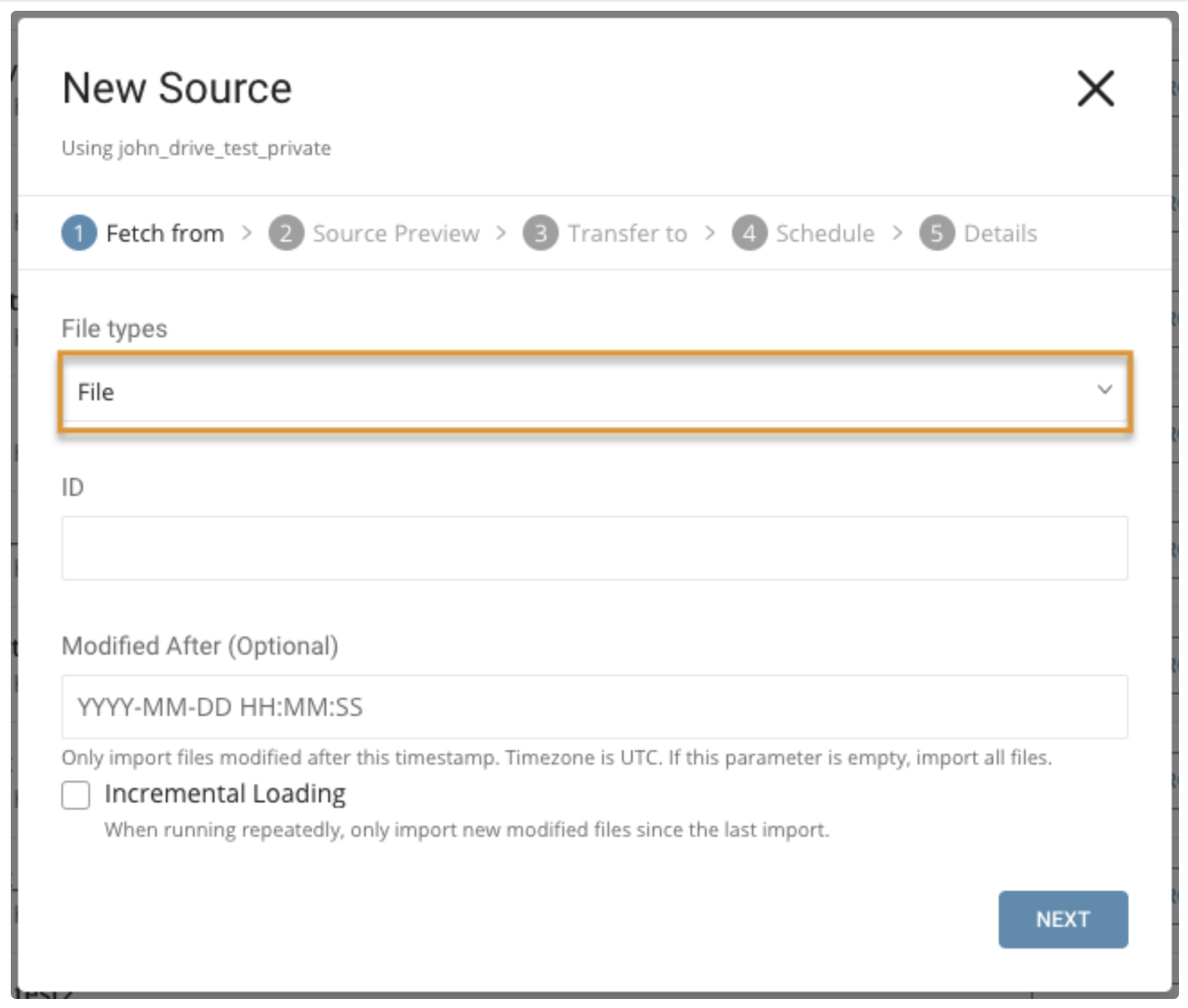

Import a CSV or TSV file or you can import a gzip of a CSV or TSV file.

For File types, select File.

Parameters:

- ID: File Id from Google Drive. Supports only CSV and TSV files or gzip of CSV and TSV files.

- Modified After: Use to import only a file that is modified after this specified timestamp.

- Incremental Loading: Use when importing data based on a schedule. Use to import only new, modified files since the last import.

Import a list of CSV or TSV files or gzip of CSV or TSV files. When importing from folder, the folder must contain files of the same type. For example: a folder that contains only CSV files.

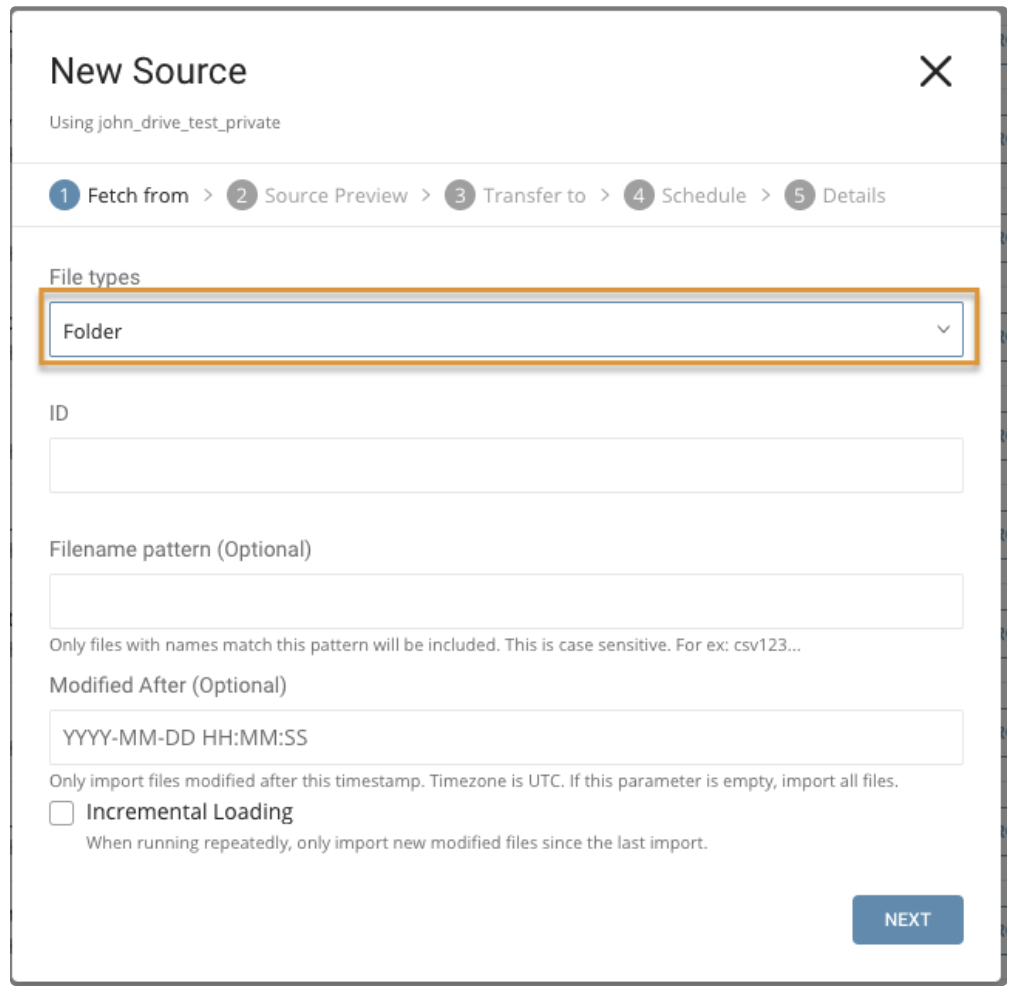

For File types, select Folder.

Parameters:

- ID: File Id from Google Drive. Supports only CSV and TSV files or gzip of CSV and TSV files.

- Modified After: Use to import only a file that is modified after this specified timestamp.

- Filename pattern: Uses regex to match file name. If a file name doesn’t match with this pattern, the file is skipped. (for example, if the file name pattern is .csv$ # then, a file is skipped if its name doesn’t match with this pattern)

- Incremental Loading: Use when importing data based on a schedule. Use to import only new, modified files inside the folder since the last import.

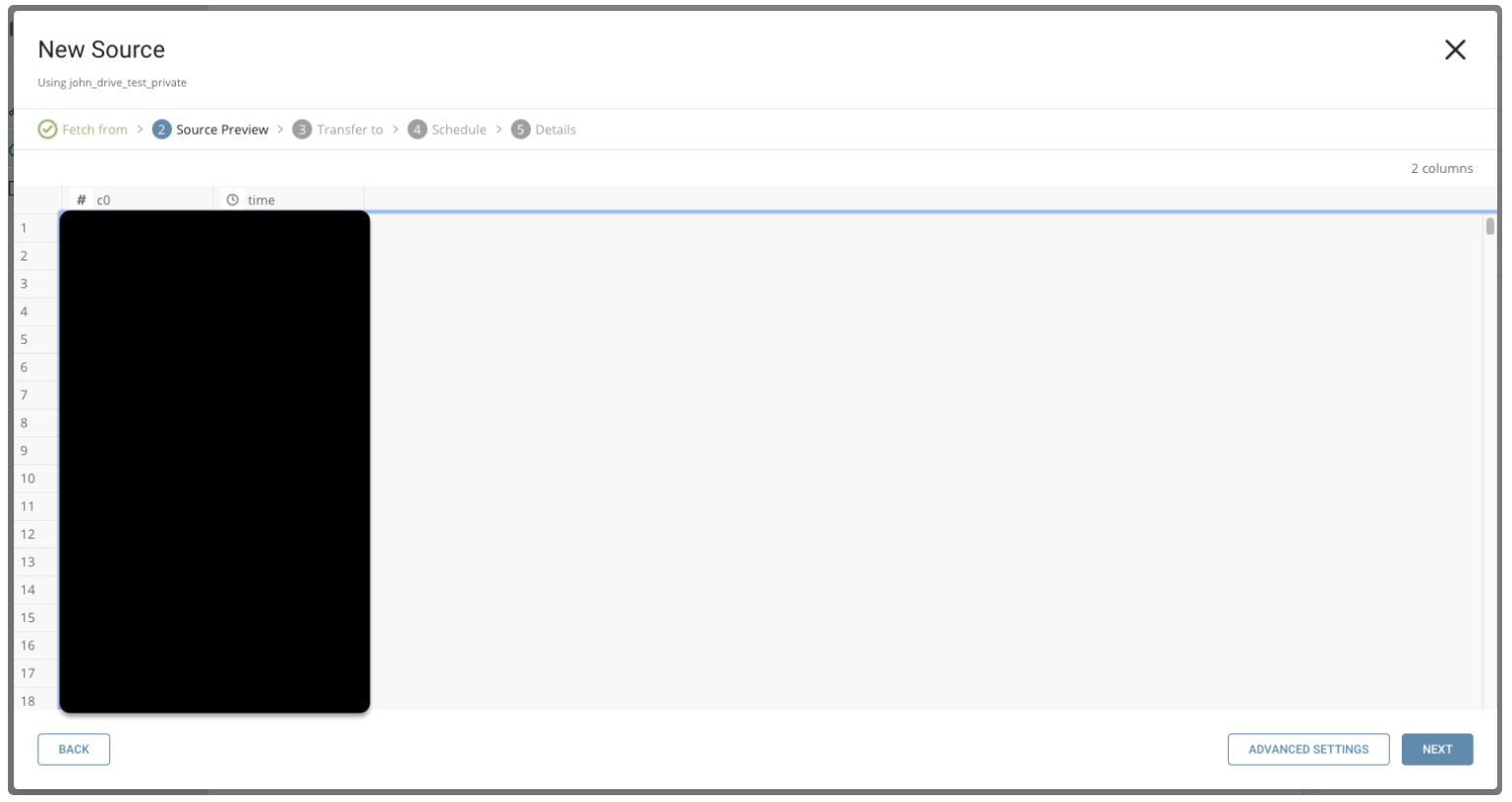

You’ll see a preview of your data. To make changes, select Advanced Settings, otherwise select Next.

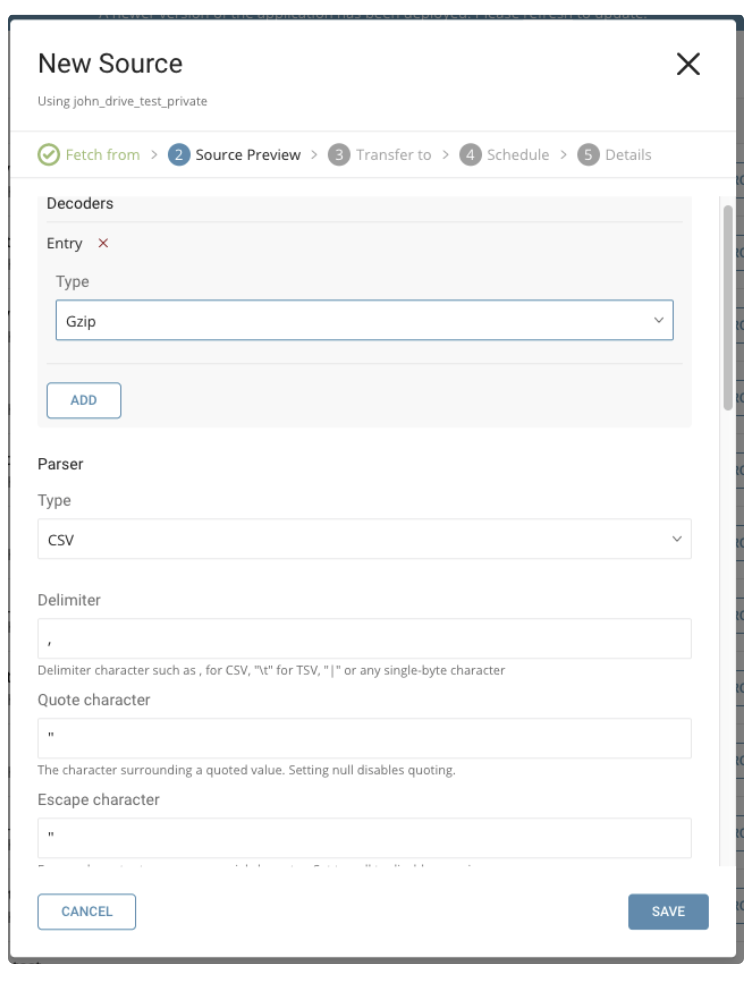

You can specify the following parameters:

Decoders: You can modify the file decoder based on the encode of the file.

Gzip Bzip2 Zip OpenPGP DecryptionParser: Update the config for the CSV/TSV Parser, if needed.

charset: UTF-8 newline: LF type: csv delimiter: ',' quote: '"' escape: '"' trim_if_not_quoted: false skip_header_lines: 1 allow_extra_columns: false allow_optional_columns: false columns:Maximum retry interval milliseconds. Specifies the maximum time between retries.

Type: number Default: 120000Maximum retry times. Specifies the maximum retry times for each API call.

Type: number Default: 7Initial retry interval millisecond. Specifies the wait time for the first retry.

Type: number Default: 1000Maximum retry interval milliseconds. Specifies the maximum time between retries.

Type: number Default: 120000

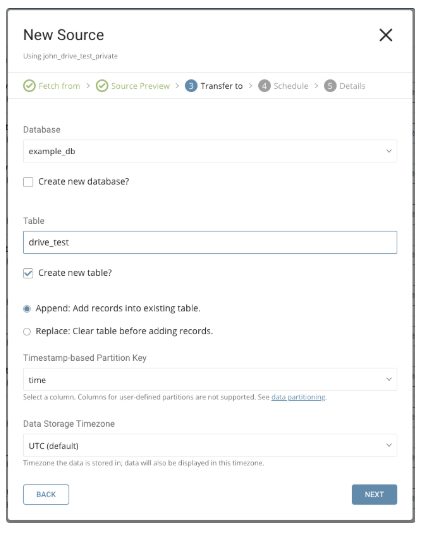

Choose an existing source or create a new database and table.

Create a new database and give your database a name. Complete similar steps to Create new table.

Select whether to append records to an existing table or replace your existing table.

If you want to set a different partition key seed rather than use the default key, you can specify one using the popup menu.

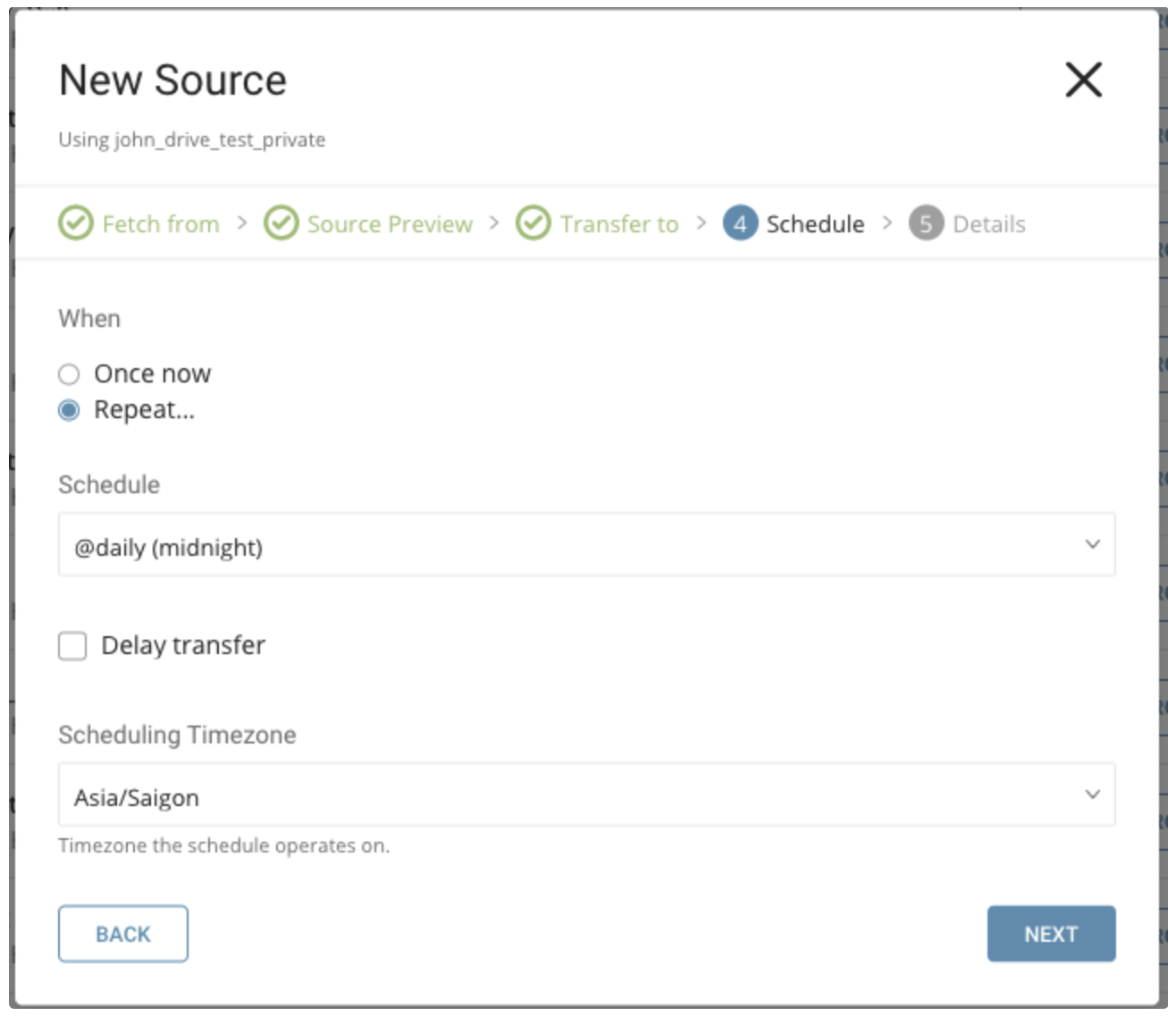

In the When tab, you can specify one-time transfer, or schedule an automated recurring transfer.

Parameters

Once now: set one time job.

Repeat…

- Schedule: accepts these three options: @hourly, @daily and @monthly and custom cron.

- Delay Transfer: add a delay of execution time.

TimeZone: supports extended timezone formats like ‘Asia/Tokyo’.

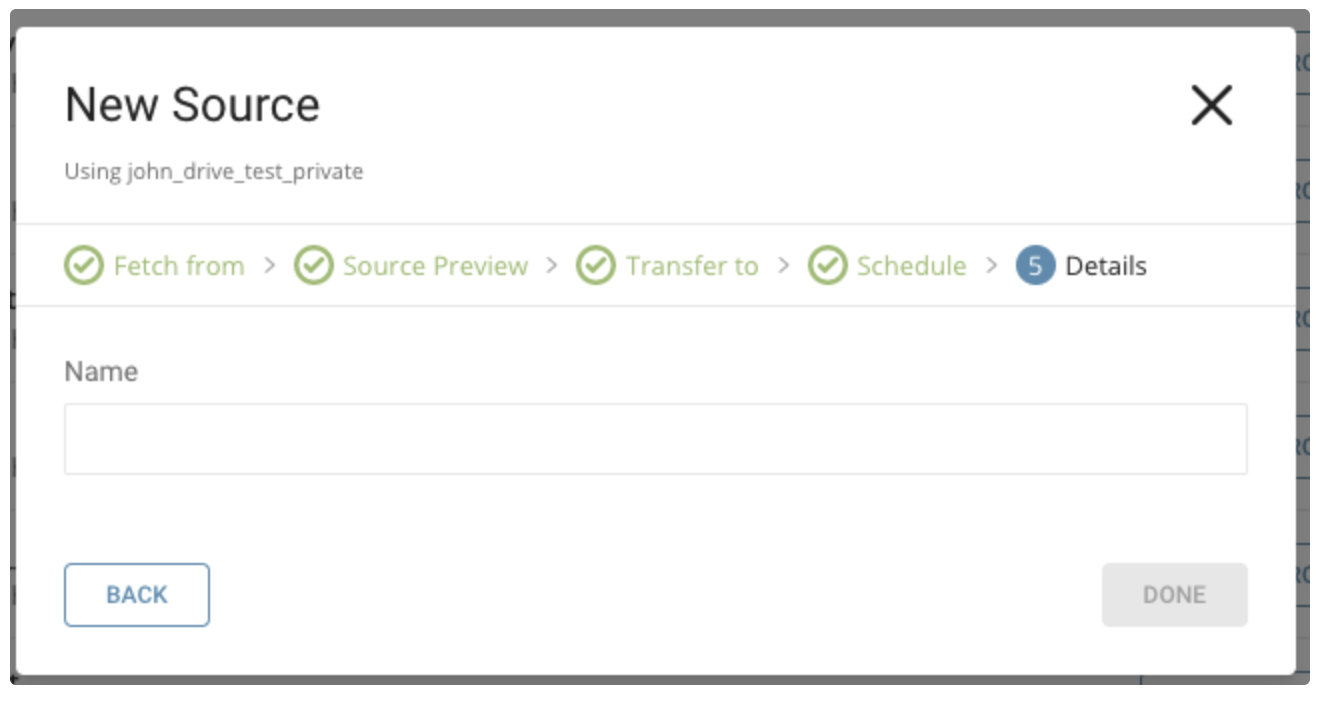

Name your Transfer and select Done to start.

After your transfer has run, you can see the results of your transfer in the Databases tab.

You can use the TD Console to configure your connection.

Install the newest TD Toolbelt.

The configuration file includes an in: section where you specify what comes into the connector from Google Drive and an out: section where you specify what the connector puts out to the database in Treasure Data. For more details on available out modes, see the Appendix.

The following example shows how to specify import a file from Google Drive.

in:

type: google_drive

client_id: xxxxxxxxxxx

client_secret: xxxxxxxxxxx

refresh_token: xxxxxxxxxxx

target: file

id: xxxxxxxxxxx(drive file id)

last_modified_time: "YYYY-MM-DD HH:MM:SS"

incremental: false

out: mode: appendThe following example shows how to specify import files in a folder from Google Drive.

in:

type: google_drive

client_id: xxxxxxxxxxx

client_secret: xxxxxxxxxxx

refresh_token: xxxxxxxxxxx

target: folder

id: xxxxxxxxxxx(drive folder id)

last_modified_time: "YYYY-MM-DD HH:MM:SS"

filename_pattern: xxxxxxxxxxx (Ex: abc.csv$)

incremental: false

out:

mode: appendtd connector:guess seed.yml -o load.yml You can preview data to be imported using the command td connector: preview.

td connector:preview load.yml You use td connector:issue to execute the job.

You must specify the database and table where you want to store the data before you execute the load job. Ex td_sample_db, td_sample_table

td connector:issue load.yml \

--database td_sample_db \

--table td_sample_table \

--time-column date_time_columnIt is recommended to specify --time-column option, because Treasure Data’s storage is partitioned by time. If the option is not given, the data connector selects the first long or timestamp column as the partitioning time. The type of the column, specified by --time-column, must be either of long or timestamp type (use Preview results to check for the available column name and type. Generally, most data types have a last_modified_date column).

If your data doesn’t have a time column, you can add the column by using the add_time filter option. See details at add_time filter plugin.

td connector:issue assumes you have already created a database (sample_db) and a table (sample_table). If the database or the table do not exist in TD, td connector:issue will fail. Therefore, you must create the database and table manually or use --auto-create-table with td connector:issue to automatically create the database and table.

$ td connector:issue load.yml \

--database td_sample_db \

--table td_sample_table \

--time-column date_time_column \

--auto-create-tableFrom the command line, submit the load job. Processing might take a couple of hours depending on the data size.

You can schedule periodic data connector execution for periodic File or Folder import. We configure our scheduler carefully to ensure high availability. By using this feature, you no longer need a cron daemon on your local data center.

Scheduled execution supports configuration parameters that control the behavior of the data connector during its periodic attempts to fetch data from Google Drive:

incrementalThis configuration is used to control the load mode, which governs how the data connector fetches data from Google Drive based on last modified time of File or Files in Folder.- last_modified_time This configuration is used to control the last modified time of files from the previous load job.

See "How Incremental Loading Works" in the below Appendix: for details and examples.

A new schedule can be created using the td connector:create command. The name of the schedule, cron-style schedule, the database and table where their data will be stored, and the data connector configuration file are required.

The cron parameter accepts these options: @hourly, @daily and @monthly.

By default, schedule is setup in UTC timezone. You can set the schedule in a timezone using -t or --timezone option. The --timezone option only supports extended timezone formats like 'Asia/Tokyo', 'America/Los_Angeles' etc. Timezone abbreviations like PST, CST are *not* supported and may lead to unexpected schedules.

$ td connector:create \

daily_import \

"10 0 * * *" \

td_sample_db \

td_sample_table \

load.ymlIt’s also recommended to specify the --time-column option, since Treasure Data’s storage is partitioned by time.

$ td connector:create \

daily_import \

"10 0 * * *" \

td_sample_db \

td_sample_table \

load.yml \

--time-column created_atYou can see the list of scheduled entries by entering the command td connector:list.

$ td connector:list+--------------+------------+----------+-------+--------------+-----------------+--------------------------------------------+| Name | Cron | Timezone | Delay | Database | Table | Config |+--------------+------------+----------+-------+--------------+-----------------+--------------------------------------------+| daily_import | 10 0 * * * | UTC | 0 | td_sample_db | td_sample_table | {"in"=>{"type"=>"google_drive", |+--------------+------------+----------+-------+--------------+-----------------+--------------------------------------------+You can specify file import mode in the out section of the load.yml file.

The out: section controls how data is imported into a Treasure Data table. For example, you may choose to append data or replace data in an existing table in Treasure Data.

Output modes are ways to modify the data as the data is placed in Treasure Data.

- Append(default): Records are appended to the target table.

- Replace (available In td 0.11.10 and later): Replaces data in the target table. Any manual schema changes made to the target table remain intact.

Examples:

in:

...

out:

mode: append

in:

...

out:

mode: replaceIncremental loading uses the last modified time to load only files that have modified time after the last modified time.

Import from File with Incremental:

At the first execution, this connector loads this file. If incremental is true, then the latest modified time is saved as file modified time.

Example:

Import file File0001.csv:

+--------------+--------------------------+ | Filename | modified time | +--------------+--------------------------+ | File0001.csv | 2019-05-04T10:00:00.123Z |

After the job finished, last_modified_time is set to 2019-05-04T10:00:00.123Z

At the next execution, only the file File0001.csv is imported if the file modified time is greater than '2019-05-08T10:00:00.123Z', meaning that the file was updated after the first execution.

Import from Folder with Incremental:

At the first execution, this connector loads all files that have file type CSV/TSV or gzip of CSV/TSV. If incremental is true, we go through the list of files in the folder and get the maximum modified time from the files.

Example:

Import folder contains files:

+--------------+--------------------------+ | Filename | Last update | +--------------+--------------------------+ | File0001.csv | 2019-05-04T10:00:00.123Z | | File0011.csv | 2019-05-05T10:00:00.123Z | | File0012.csv | 2019-05-06T10:00:00.123Z | | File0013.csv | 2019-05-07T10:00:00.123Z | | File0014.csv | 2019-05-08T10:00:00.123Z |Max Modified Time: 2019-05-08T10:00:00.123Z

After the job finished, the last_modified_time is set to Max Modified Time (2019-05-08T10:00:00.123Z).

At the next execution, only files that have modified time greater than 2019-05-08T10:00:00.123Z are imported.

Example:

Import folder has newly updated and added files:

+--------------+--------------------------+ | Filename | Last update | +--------------+--------------------------+ | File0001.csv | 2019-05-04T10:00:00.123Z | | File0011.csv | 2019-05-05T10:00:00.123Z | | File0012.csv | 2019-05-06T10:00:00.123Z | | File0013.csv | 2019-05-09T10:00:00.123Z | | File0014.csv | 2019-05-08T10:00:00.123Z | | File0015.csv | 2019-05-09T13:00:00.123Z |Max Modified Time: 2019-05-09T13:00:00.123Z

Then only files: File0013.csv and File0015.csv are imported. After the job finished, last_modified_time is set to Max Modified Time (2019-05-09T13:00:00.123Z).

To issue jobs from CLI, you need client_id, client_secret, refresh_token.

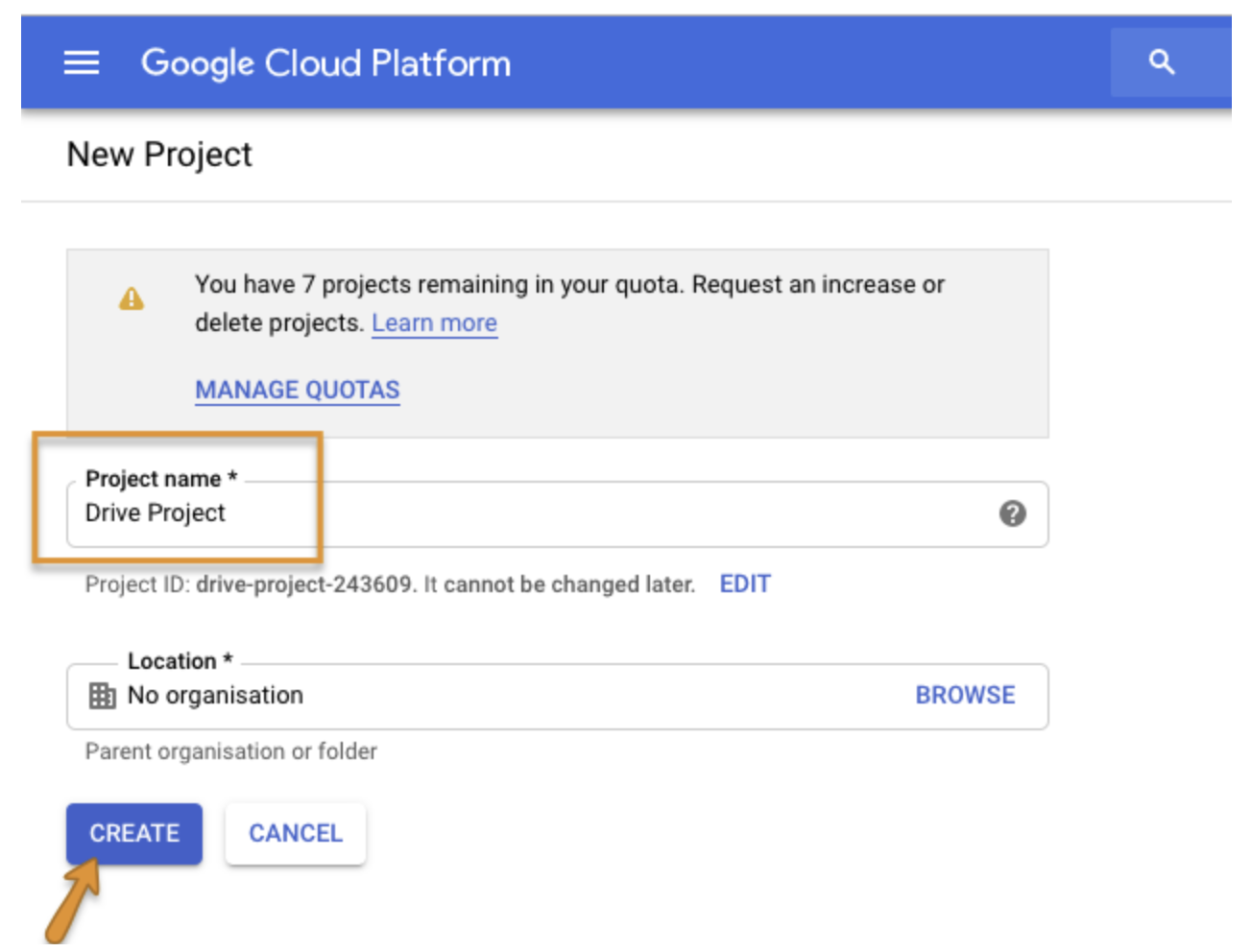

Go to https://console.cloud.google.com/projectcreate to register a project.

Name your project, then select Create.

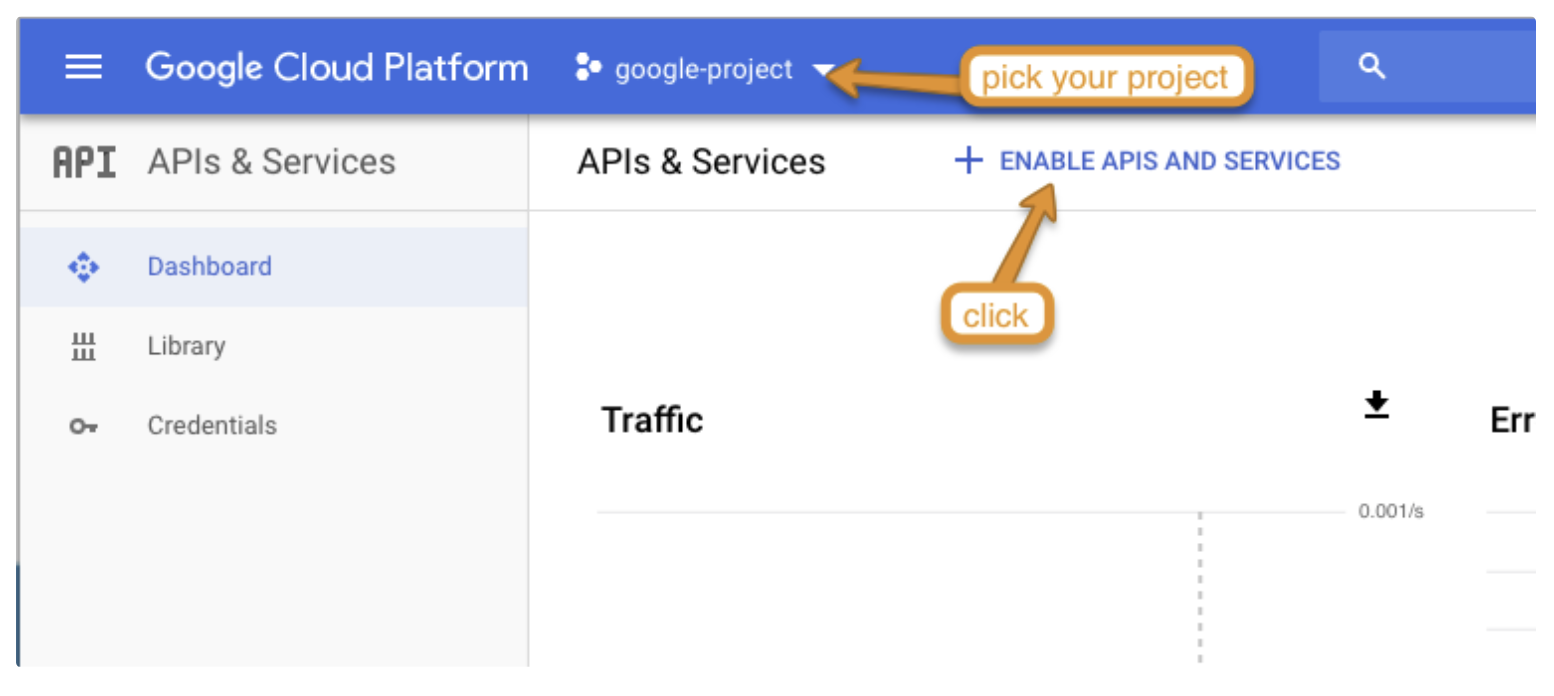

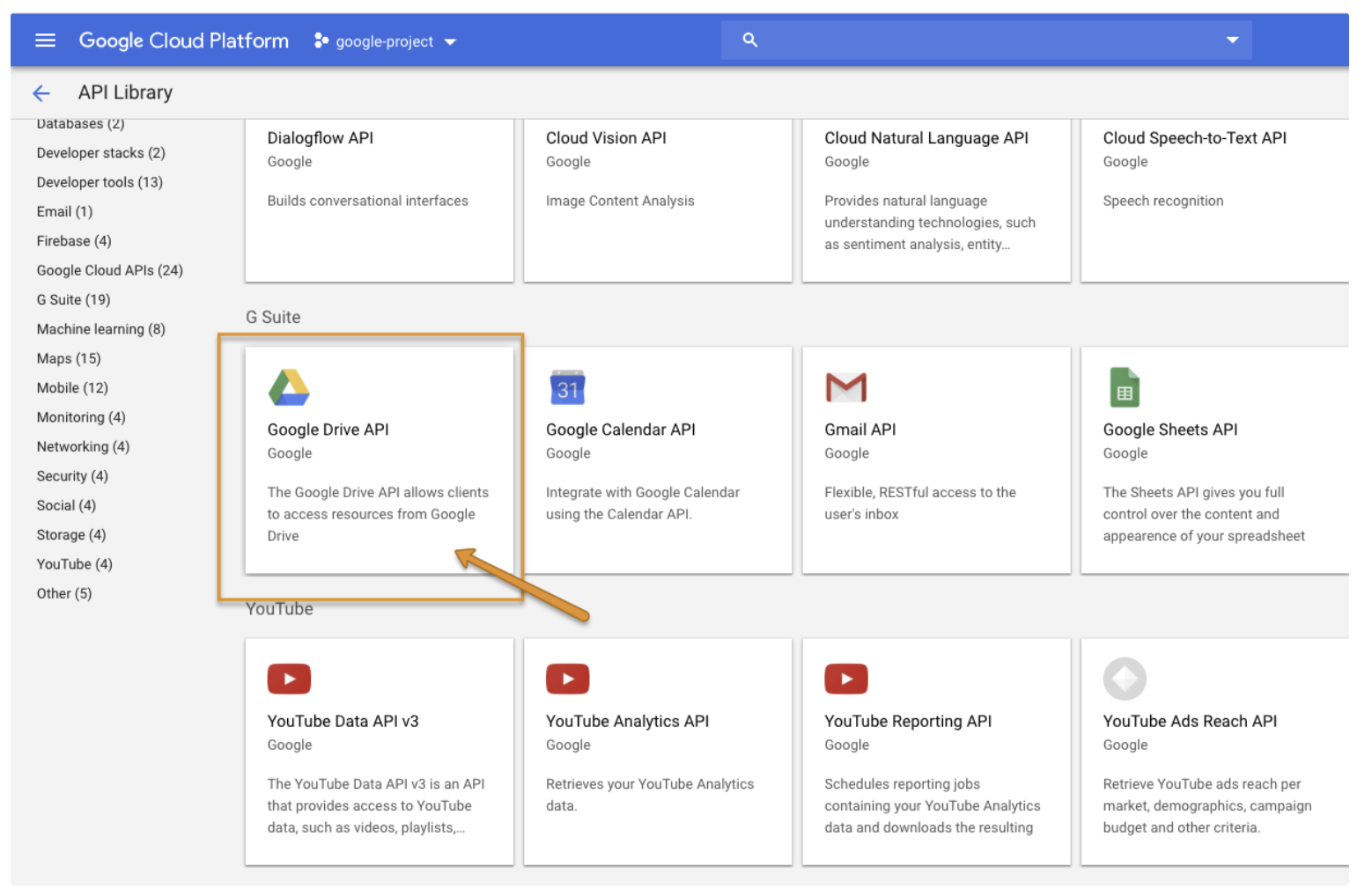

Go to https://console.cloud.google.com/apis/dashboard? to enable Drive Service for your project.

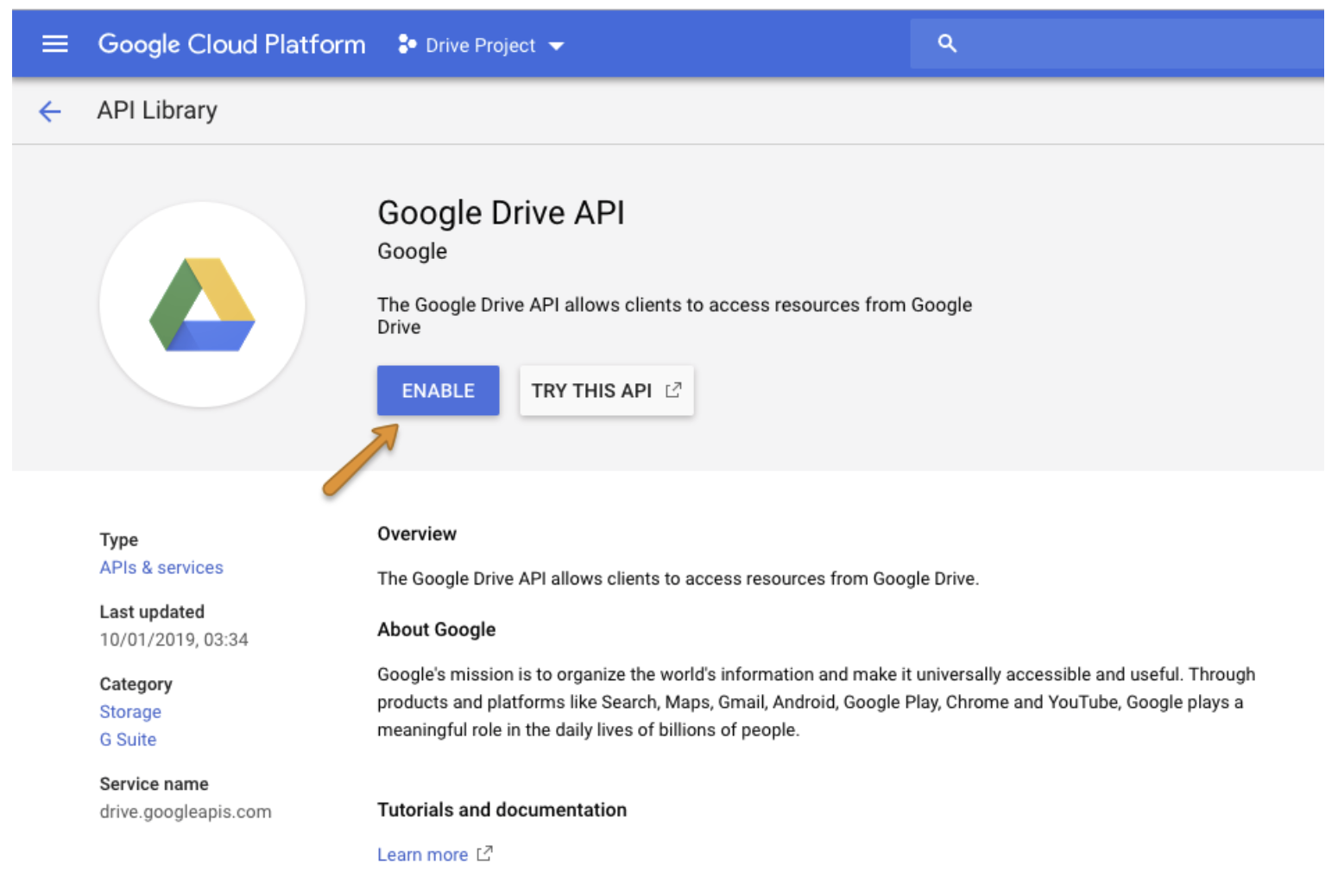

Choose Google Drive API and select Enable.

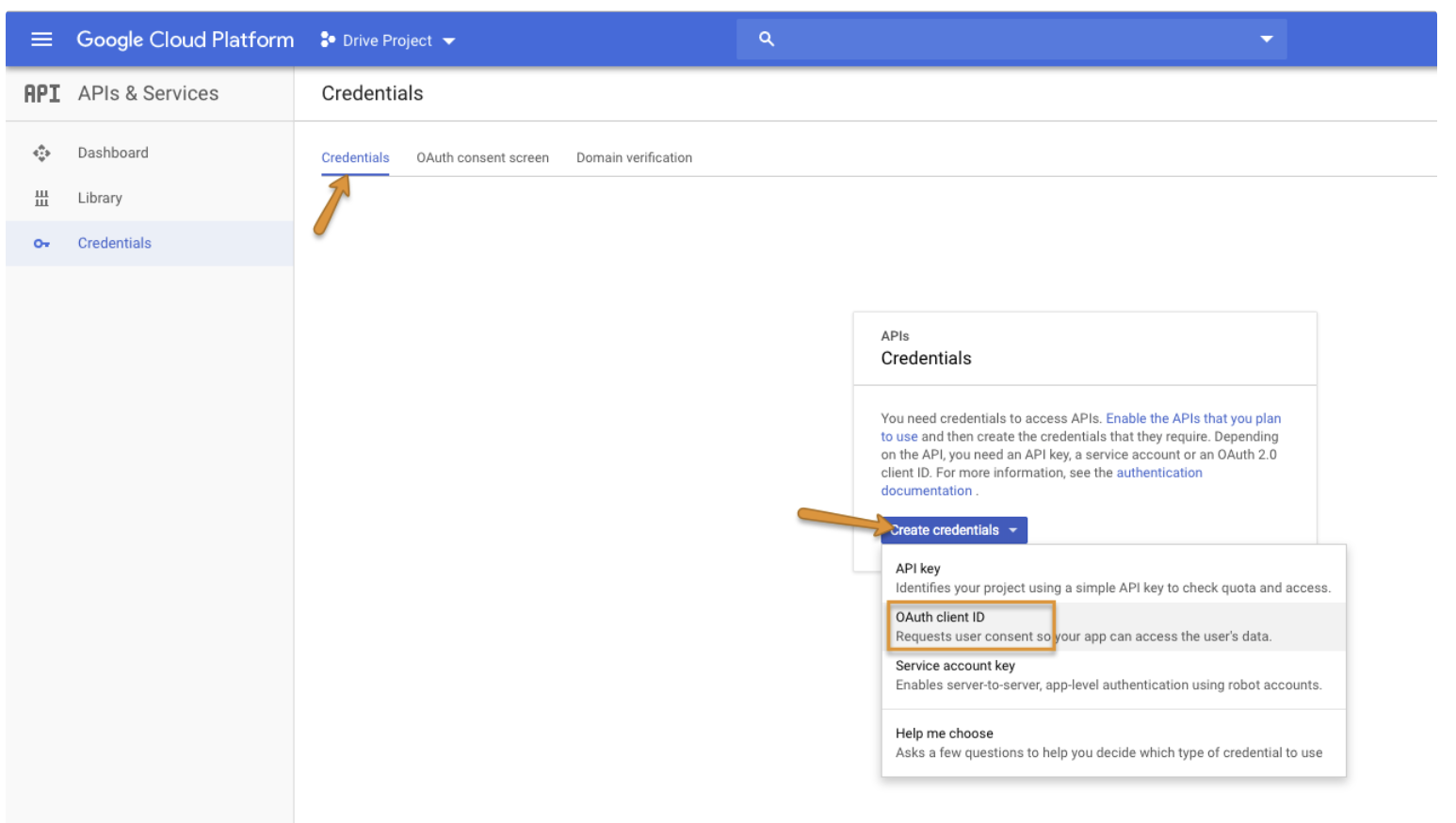

Go to https://console.cloud.google.com/apis/credentials, select your Project and create a new credential.

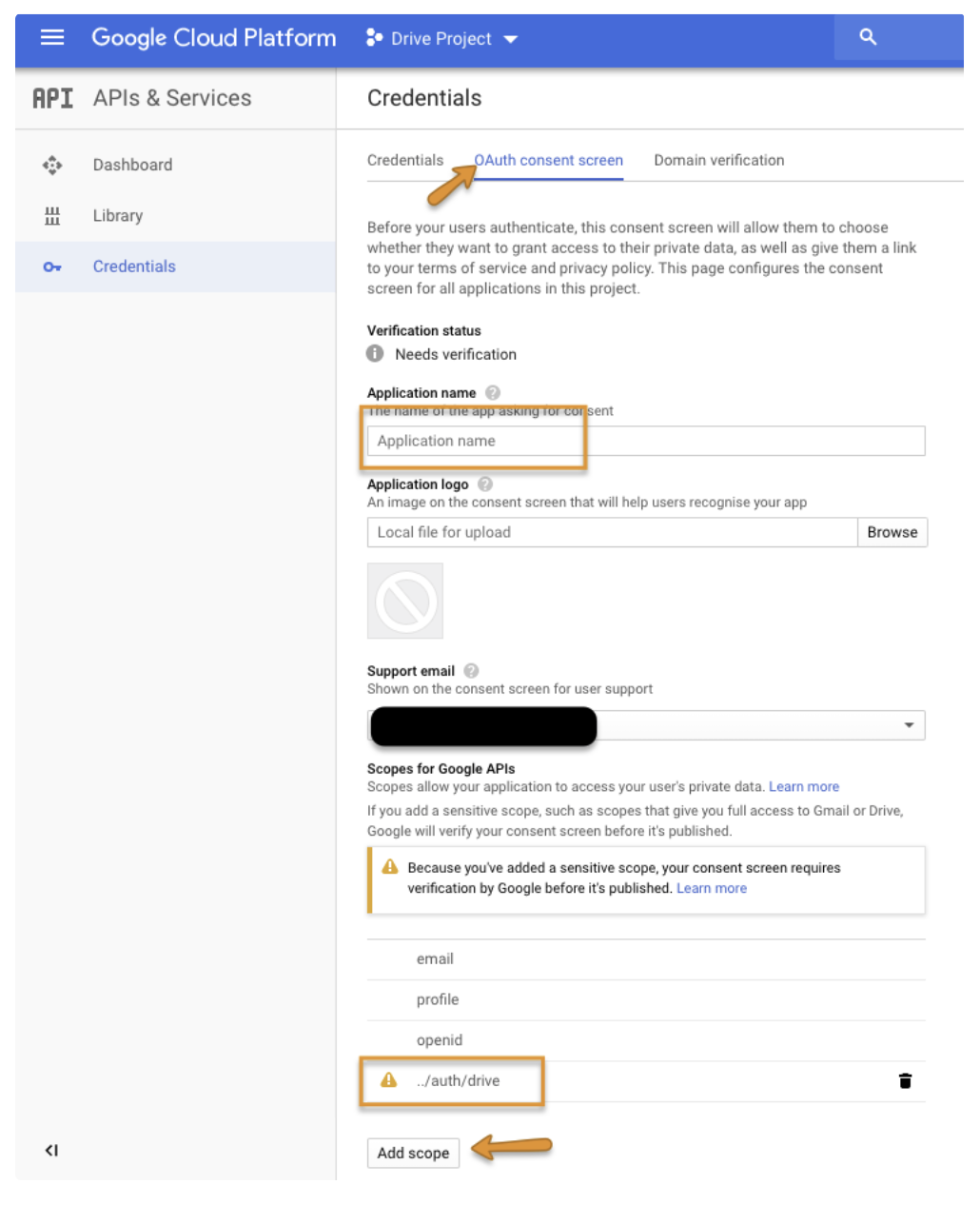

Go to the OAuth consent screentab, provide the Application name, then select Add scope to add the /auth/drive scope then Save.

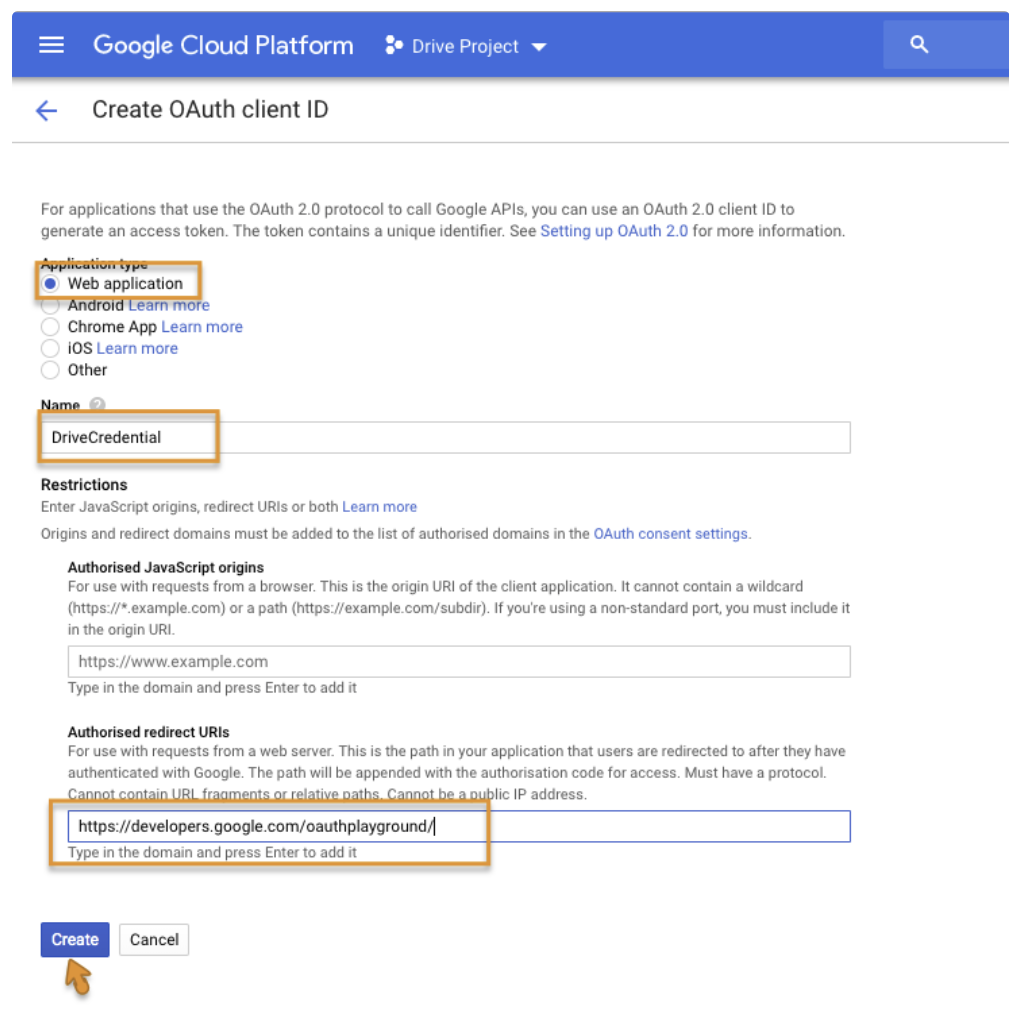

Go to the Credentials tab and click Create credentialsthen select OAuth client ID.

Name your credential and add a redirect URL to https://developers.google.com/oauthplayground/

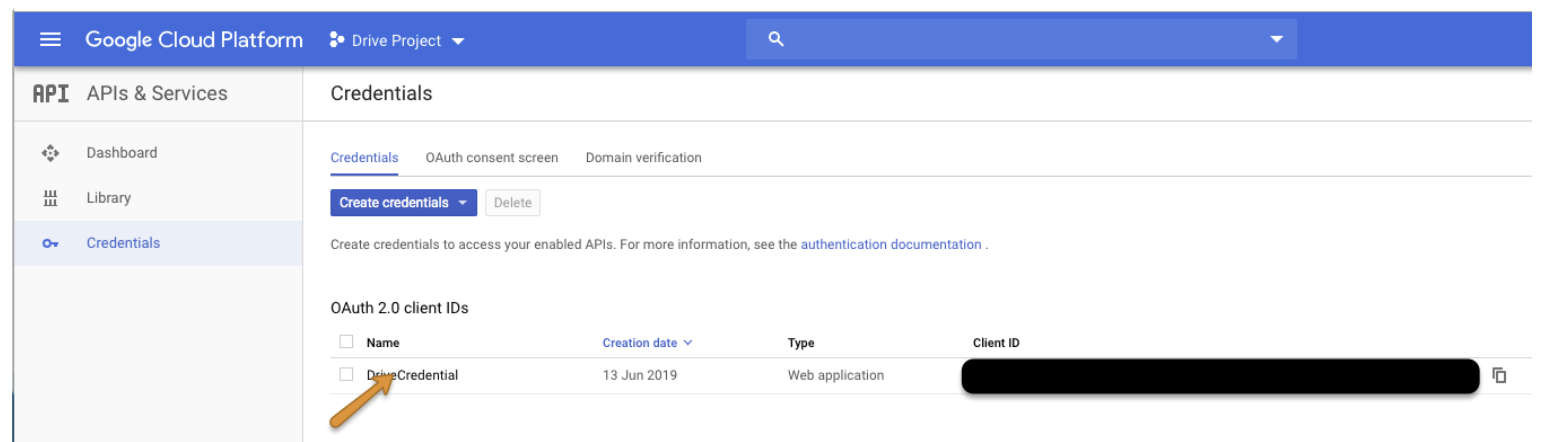

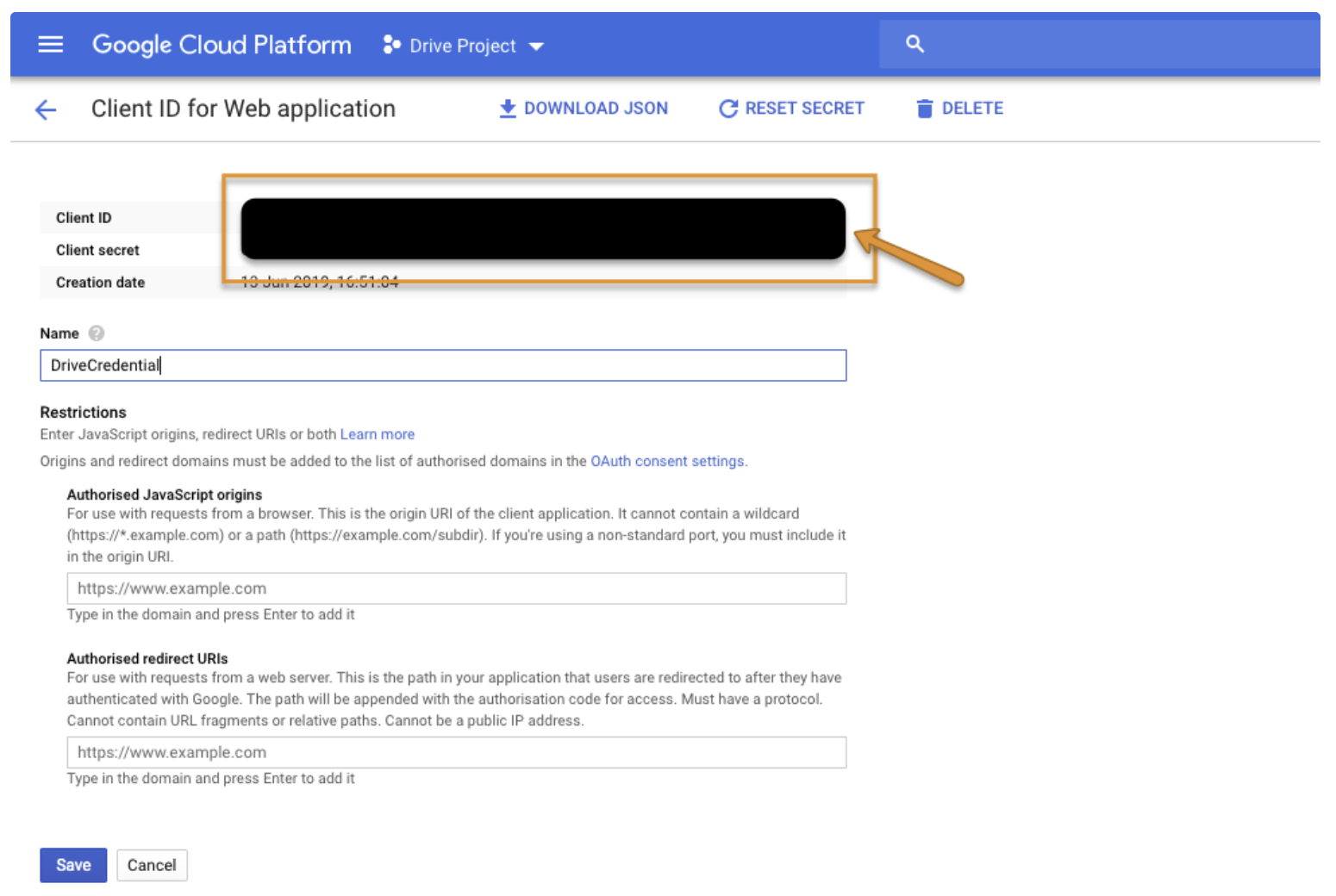

Choose your credential to get your client_id and client_secret.

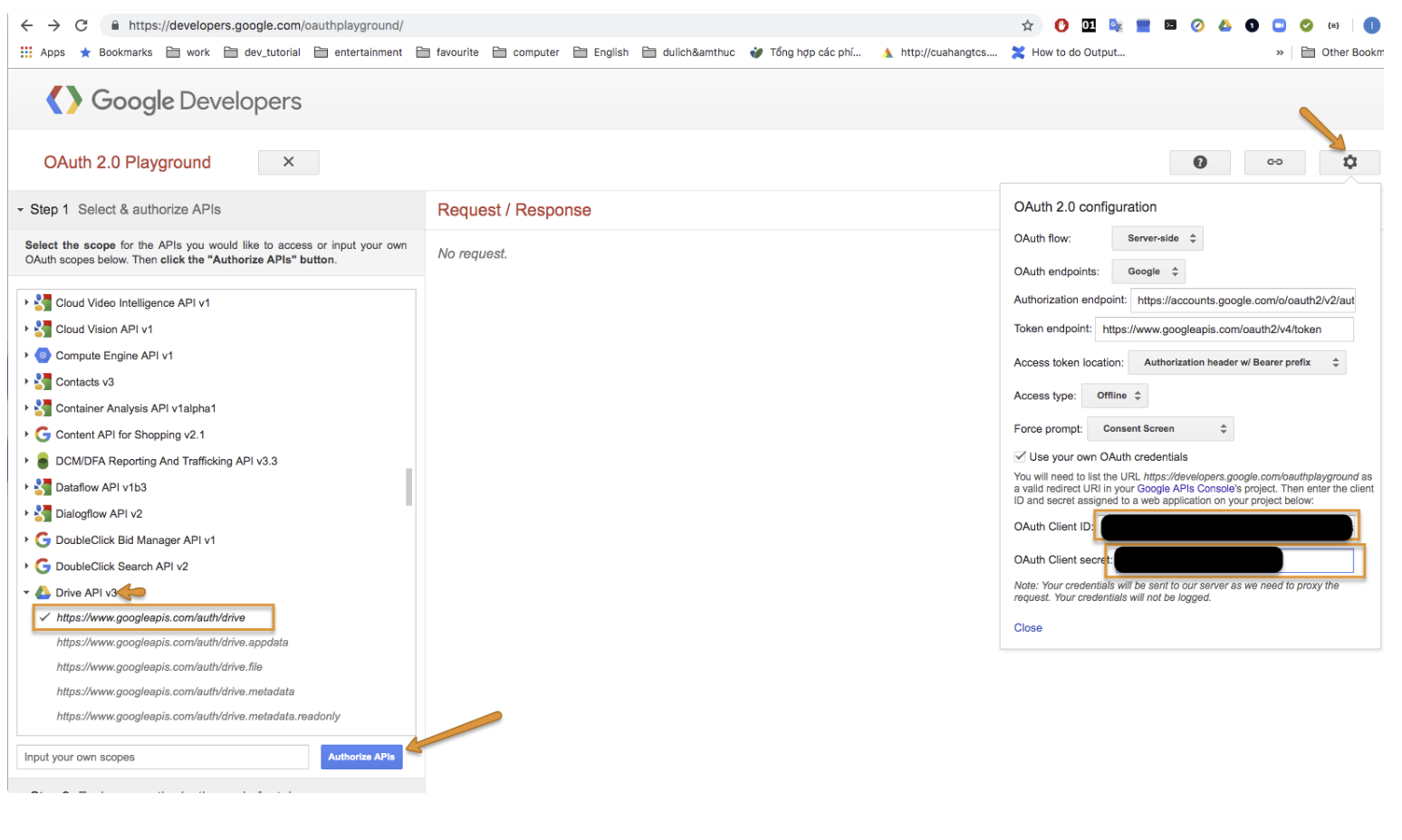

Go to https://developers.google.com/oauthplayground to get a refresh_token.

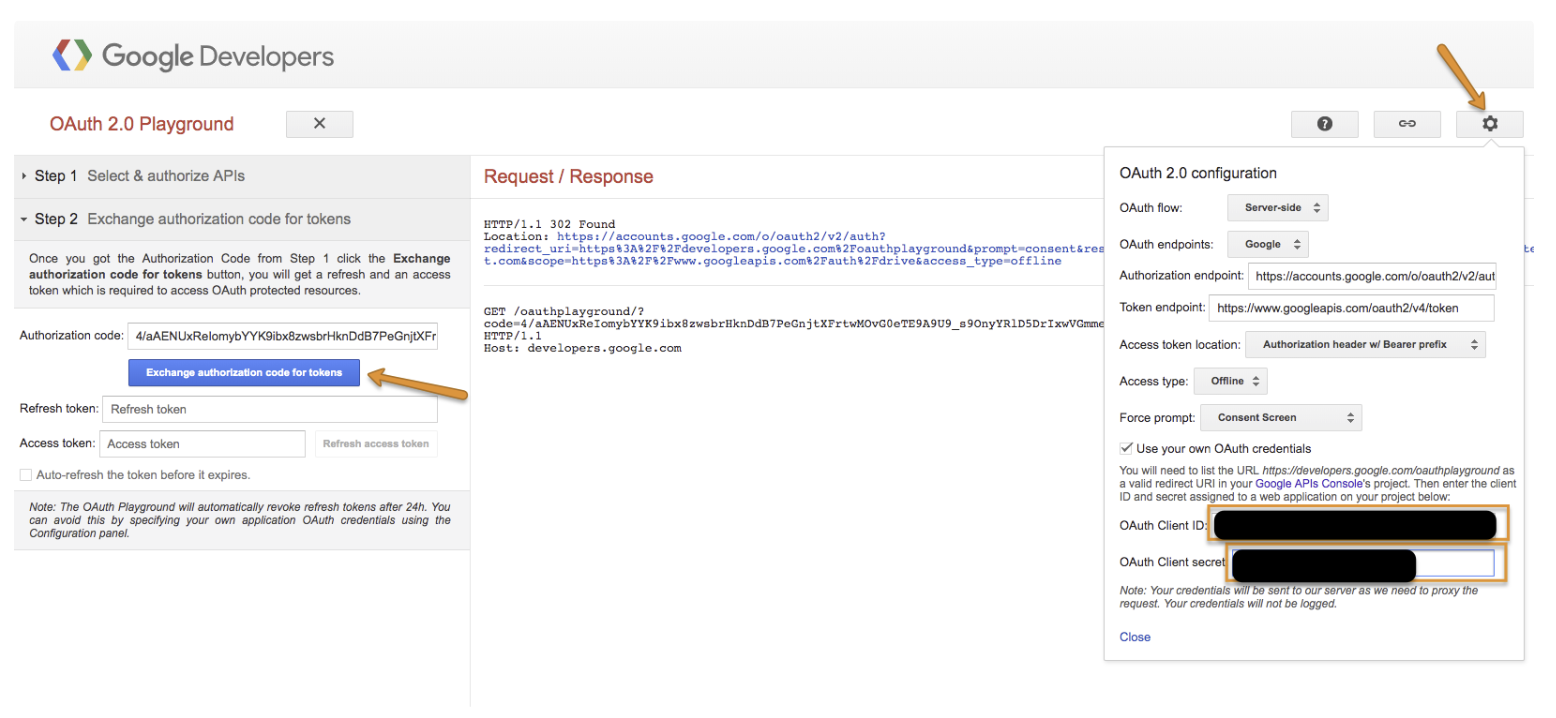

First, select Drive API v3 and scope **auth/drive,**select Settings and provide your **client id, client secret,**that you can get from your credential, then select Authorize APIs.

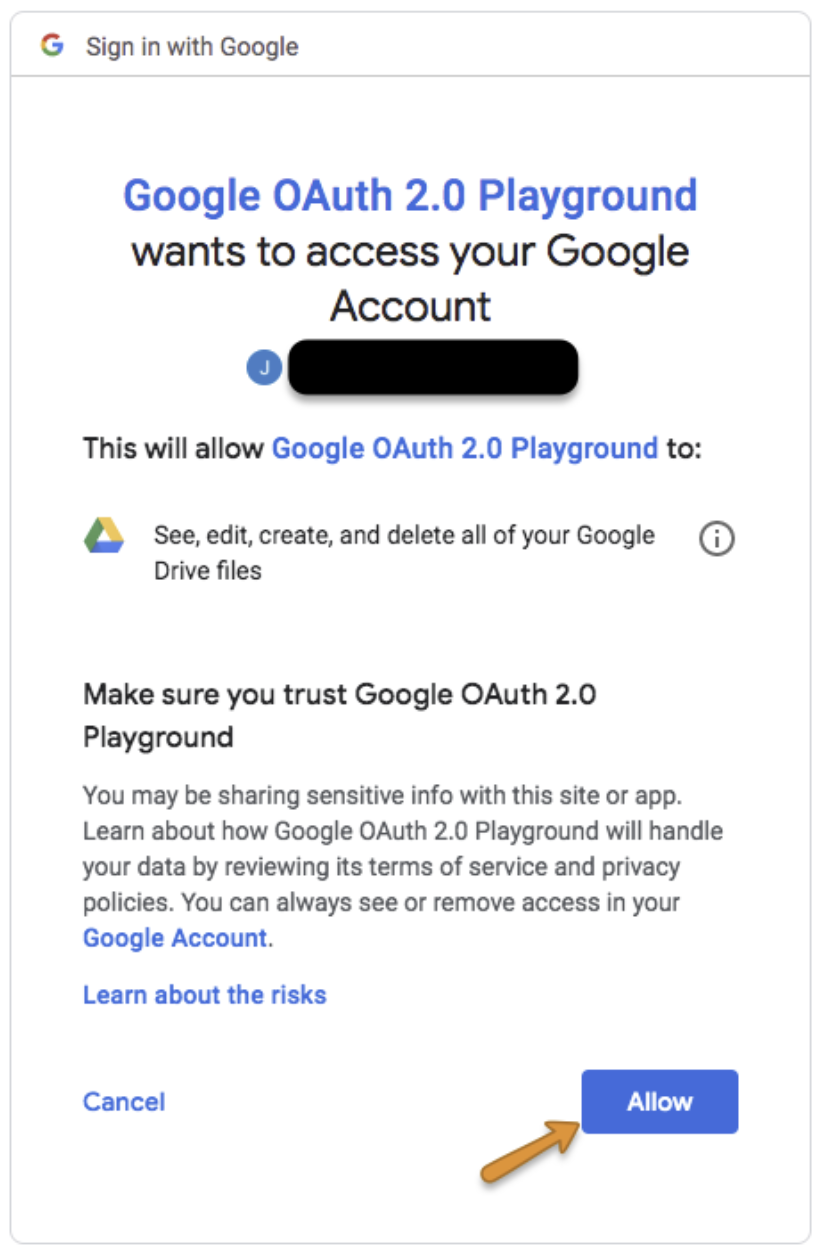

Log in with your Google account and select Allow.

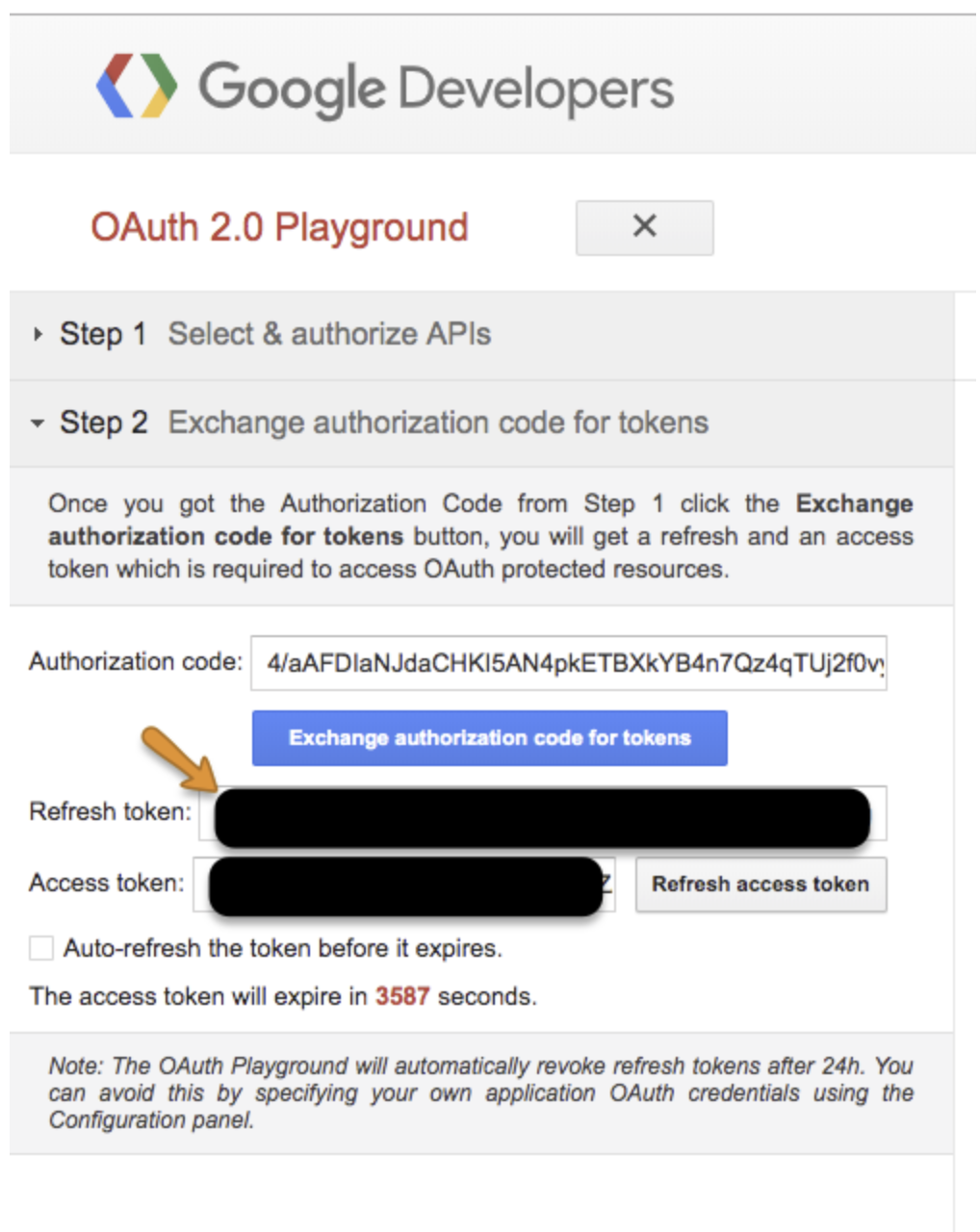

Select Exchange authorization code for tokens.

Copy the refresh token and store it to use later.

A refresh token might stop working for one of these reasons:

- The user has revoked your app's access.

- The refresh token has not been used for six months.

- The user changed passwords and the refresh token contains Gmail scopes.

- The user account has exceeded a maximum number of granted (live) refresh tokens.

There is currently a limit of 50 refresh tokens per user account per client. If the limit is reached, creating a new refresh token automatically invalidates the oldest refresh token without warning.

Reference: https://developers.google.com/identity/protocols/OAuth2

Go to https://drive.google.com and login with your Google account.

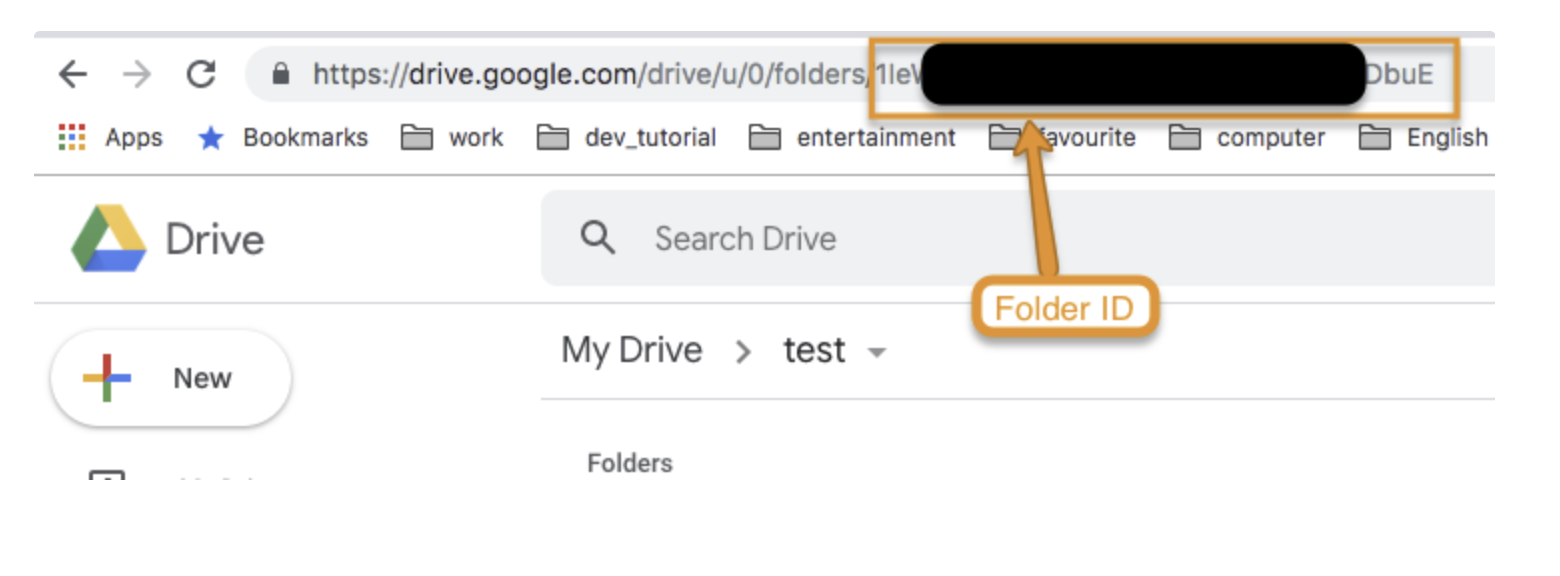

Get Folder ID:

Select the folder that you want to get a Folder ID from and copy the ID from URL.

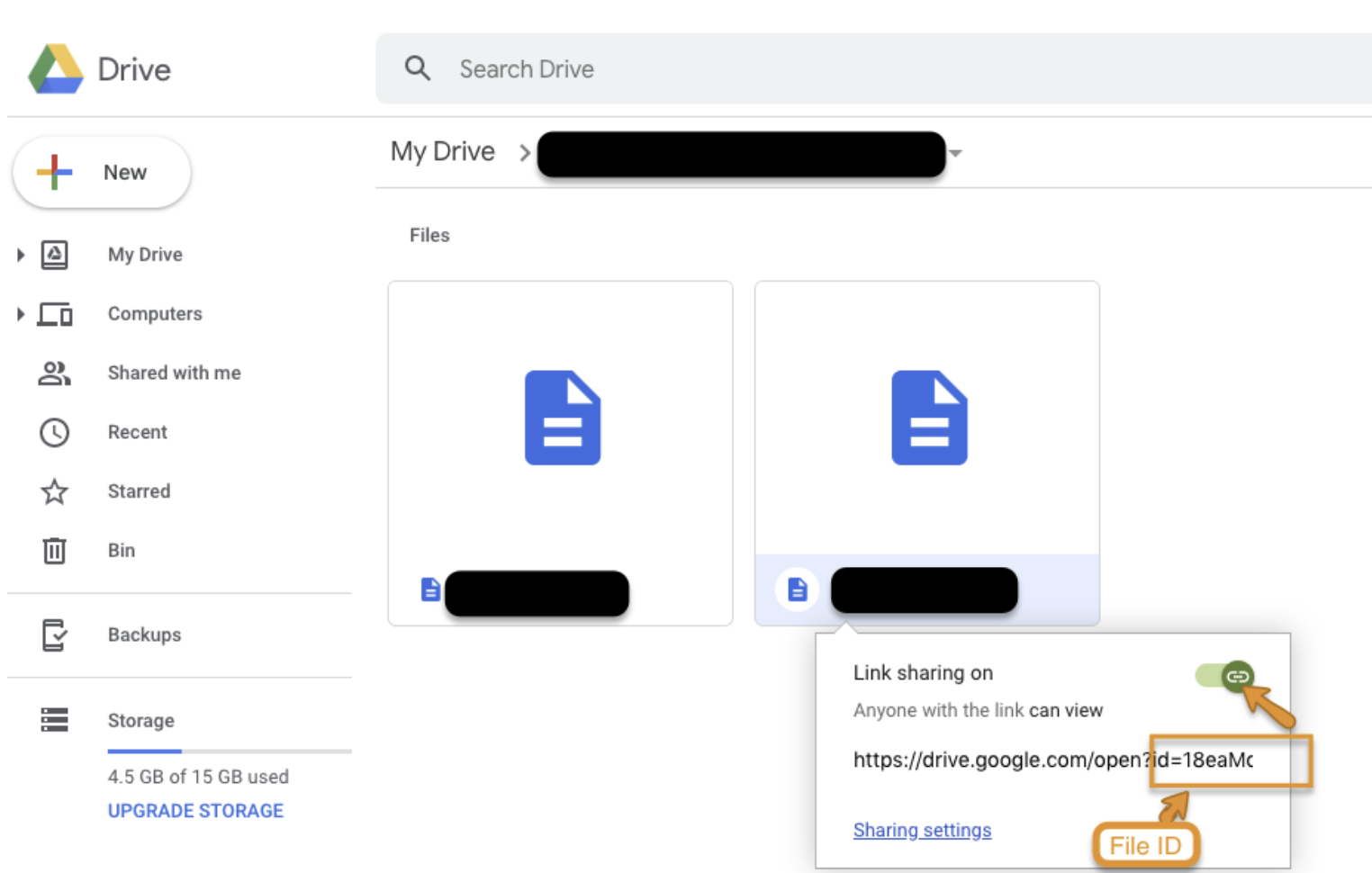

Get File ID:

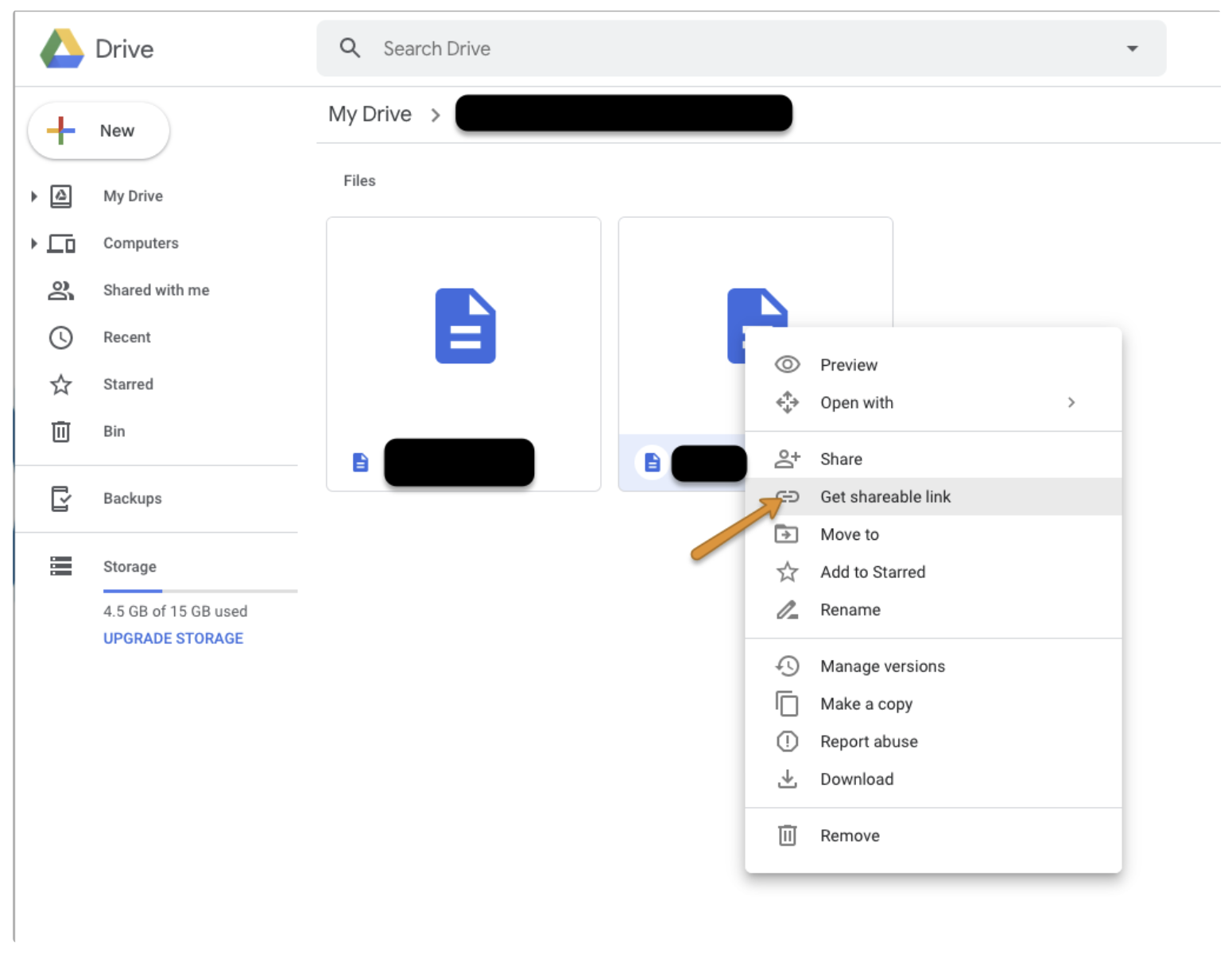

From your drive, choose a file and then right-click and select "Get shareable link".

Turn on Link sharingandcopy the File ID from the link.