You can write job results directly to Google Cloud Storage. For the Import Integration, see Google Cloud Storage Import Integration.

- Basic knowledge of Treasure Data, including TD Toolbelt.

- A Google Cloud Platform account with specific permissions

If your security policy requires IP whitelisting, you must add Treasure Data's IP addresses to your allowlist to ensure a successful connection.

Please find the complete list of static IP addresses, organized by region, at the following document

To use this feature, you need the following:

- Google Project ID

- JSON Credential

- Storage Object Creator role is required to create an Object in the GCS bucket.

- Storage Object Viewer is required to list Objects in the GCS bucket.

List the Cloud Storage buckets. They are ordered in the list lexicographically by name.

To list the buckets in a project:

- Open the Cloud Storage browser in the Google Cloud Console.

- Optionally, use filtering to narrow the results in your list.

Buckets that are part of the currently selected project appear in the browser list.

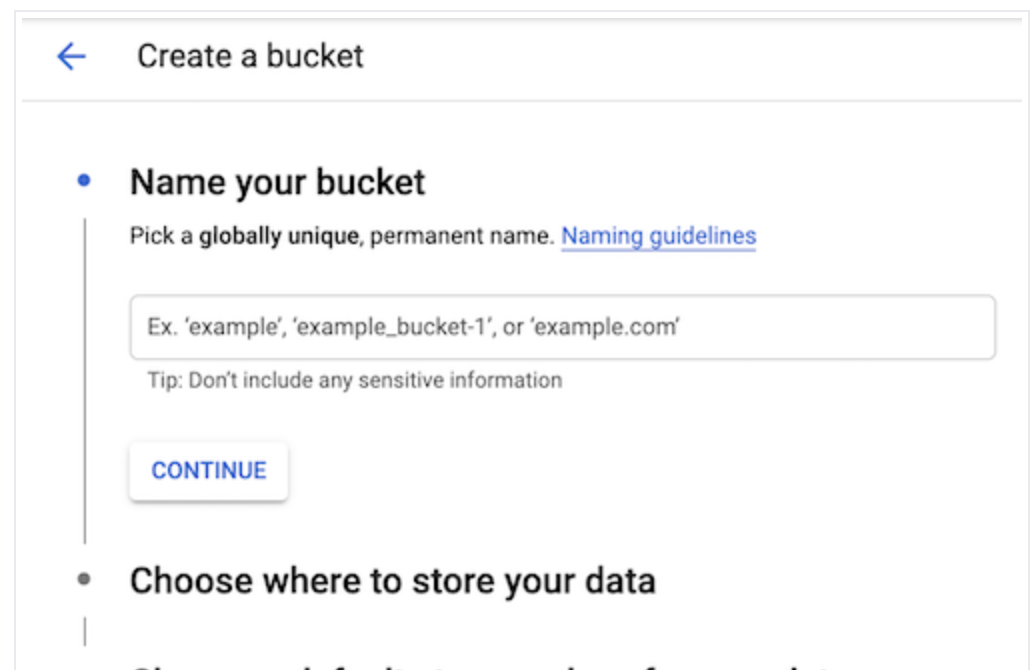

To create a new storage bucket:

- Open the Cloud Storage browser in the Google Cloud Console.

- Select Create bucket to open the bucket creation form.

- Enter your bucket information and select Continue to complete each step:

Specify a Name, subject to the bucket name requirements.

Select a Location type and Location where the bucket data will be permanently stored.

Select a Default storage class for the bucket. The default storage class is assigned by default to all objects uploaded to the bucket.

The Monthly cost estimate panel in the right pane estimates the bucket's monthly costs based on your selected storage class and location, as well as your expected data size and operations.

Select an Access control model to determine how you control access to the bucket's objects.

Optionally, you can add bucket labels, set a retention policy, and choose an encryption method.

- Select Create.

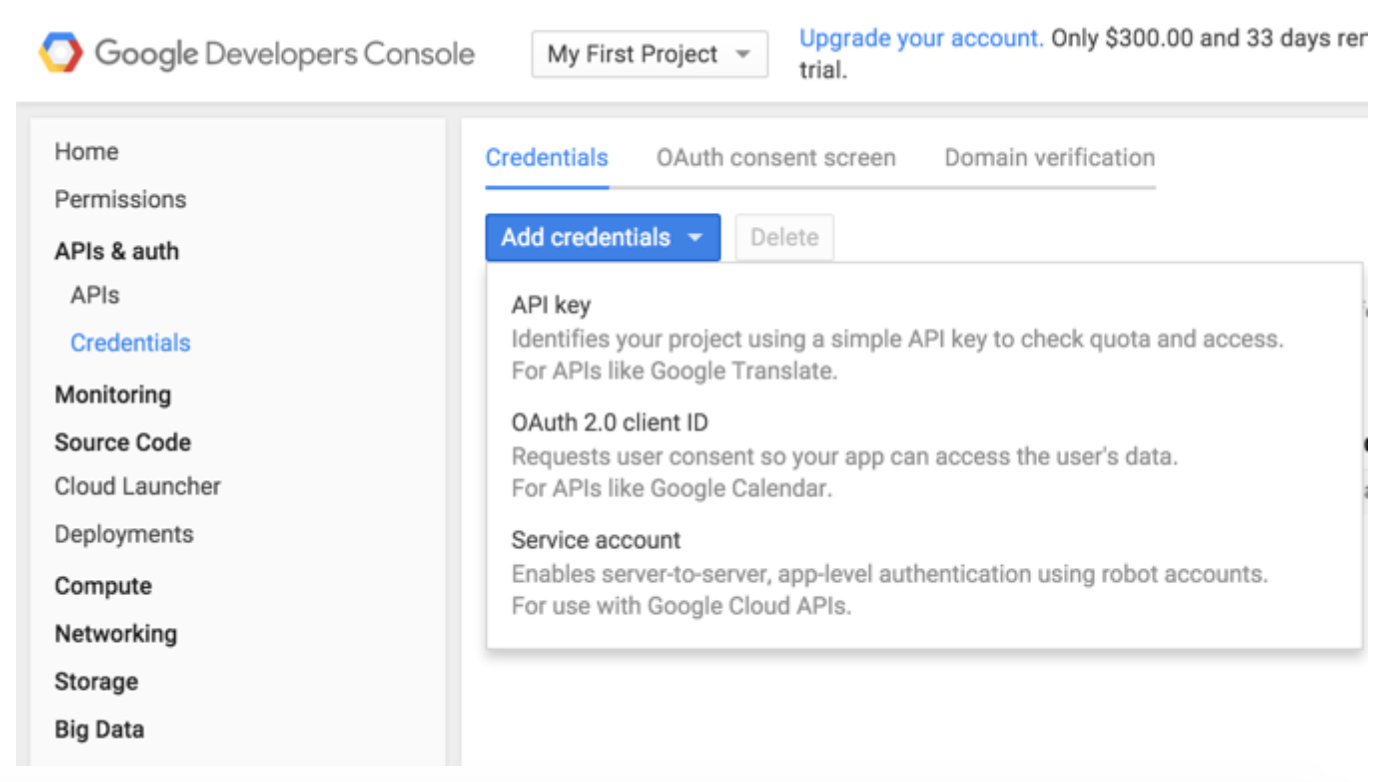
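The bucket name requirements mentioned in the steps above can be pre-checked locally before opening the creation form. The following Python sketch covers only a subset of Google's published naming rules (length, allowed characters, and the reserved "goog" and "google" strings). The function name is hypothetical, and names containing dots, which have additional rules, are not fully handled. Cloud Storage remains the authority on whether a name is accepted.

```python
import re

# Simplified rule: 3-63 characters made of lowercase letters, digits, dashes,
# underscores, and dots, starting and ending with a letter or digit.
_BUCKET_NAME = re.compile(r"[a-z0-9][a-z0-9._-]{1,61}[a-z0-9]")


def looks_like_valid_bucket_name(name: str) -> bool:
    """Return True if `name` passes a basic subset of the GCS naming rules."""
    if not _BUCKET_NAME.fullmatch(name):
        return False
    # Names may not begin with "goog" or contain "google".
    if name.startswith("goog") or "google" in name:
        return False
    return True


print(looks_like_valid_bucket_name("samplebucket"))
print(looks_like_valid_bucket_name("SampleBucket"))
```

A name that passes this check can still be rejected, for example because bucket names are globally unique and the name is already taken.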

The integration with Google Cloud Storage is based on server-to-server API authentication.

The Service Account used to generate the JSON Credentials must have both the Storage Object Creator and Storage Object Viewer roles for the destination bucket.

Visit your Google Developer Console.

Select Credentials under APIs & auth in the left menu.

Select Service account:

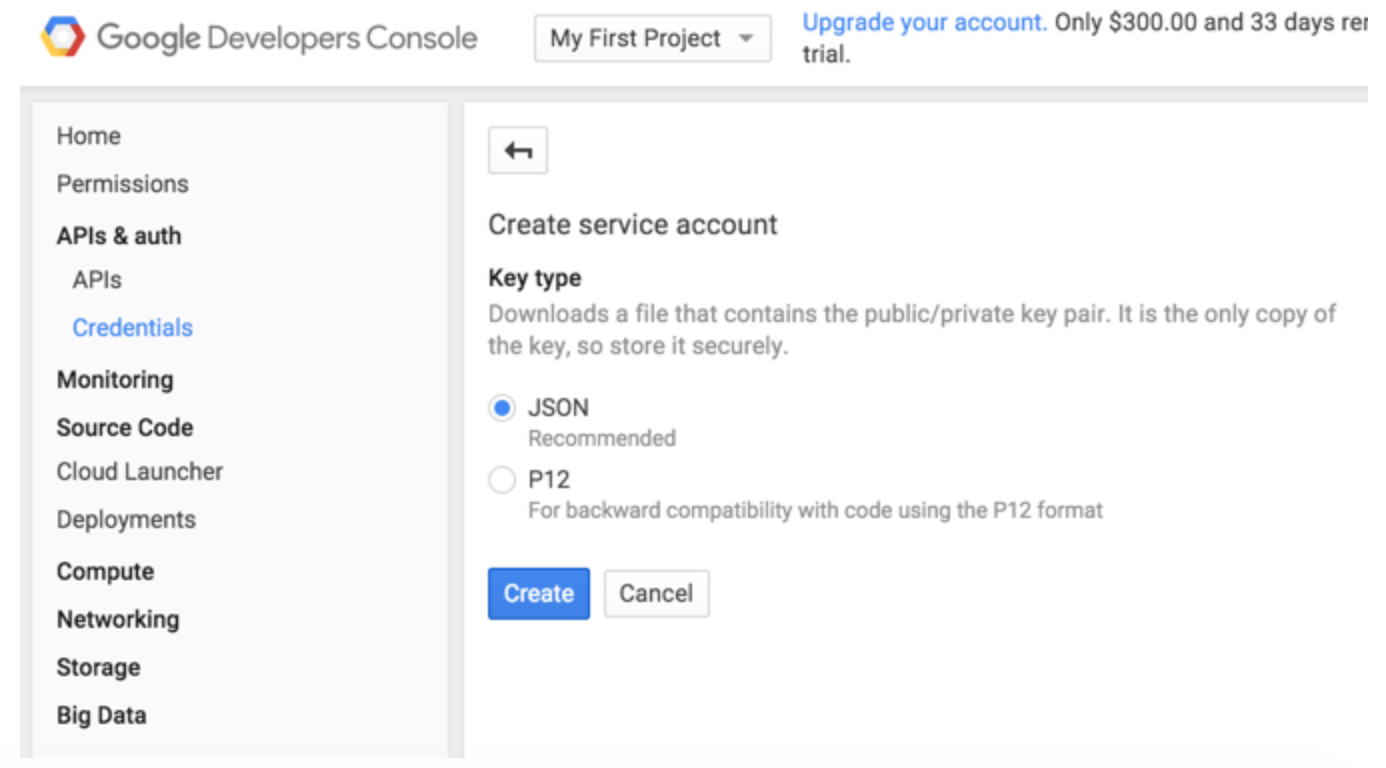

Select the JSON key type, which is Google’s recommended configuration. The key is automatically downloaded by the browser.

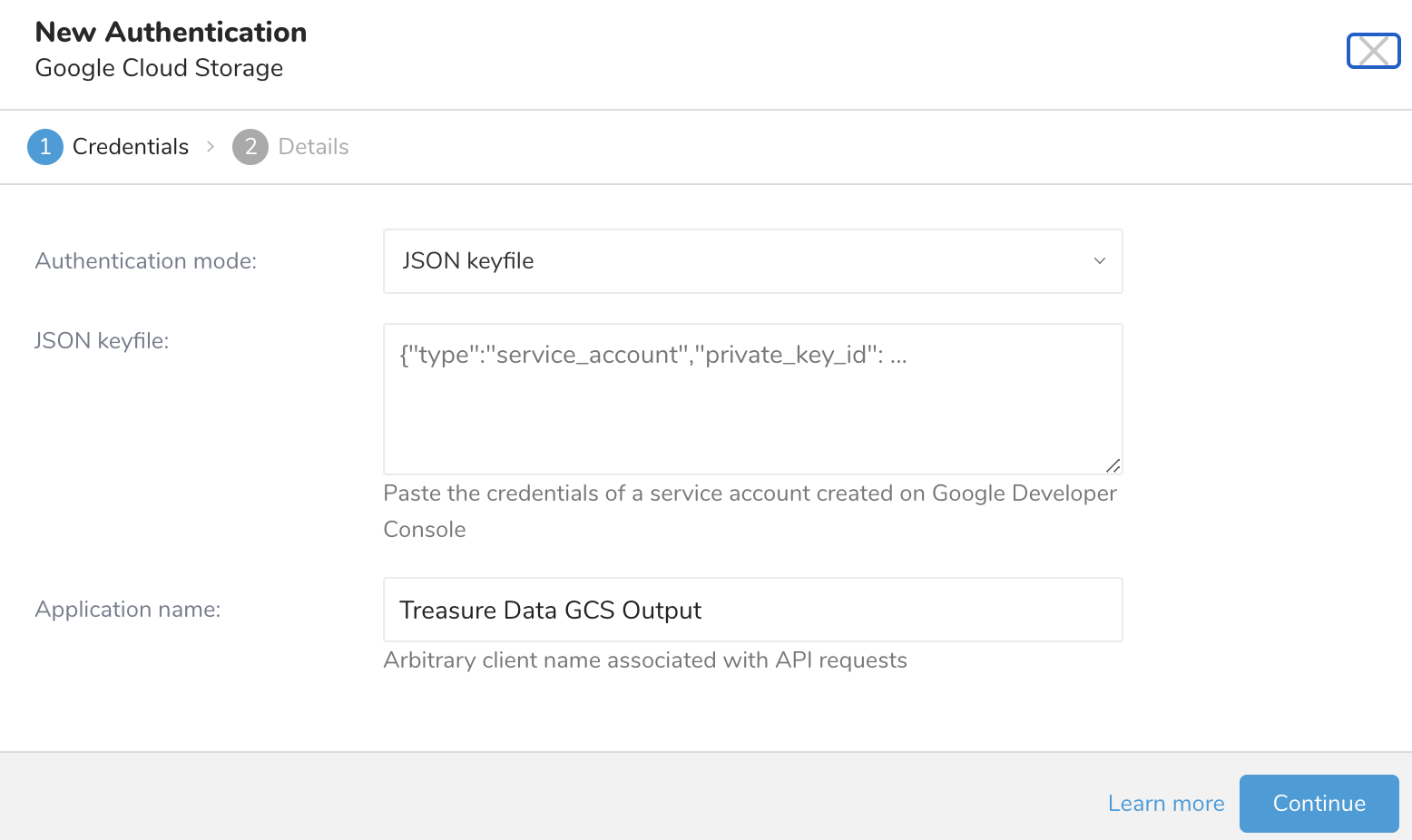
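Before pasting the downloaded key into TD Console, it can help to confirm that the file is intact. The Python sketch below checks that the text parses as JSON and contains the fields that appear in the sample credential later in this document. The field list and the function name are assumptions for illustration, not an exhaustive description of the key format.

```python
import json
from typing import List

# Fields present in this document's sample credential (an assumption,
# not a complete specification of Google's key format).
REQUIRED_FIELDS = ("type", "private_key_id", "private_key", "client_email", "client_id")


def check_service_account_key(text: str) -> List[str]:
    """Return a list of problems found in a JSON key; empty means none found."""
    try:
        key = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc}"]
    if not isinstance(key, dict):
        return ["top-level JSON value is not an object"]
    problems = [f"missing field: {name}" for name in REQUIRED_FIELDS if name not in key]
    if key.get("type") != "service_account":
        problems.append("type is not 'service_account'")
    return problems
```

To use it, read the downloaded file as text and pass the contents to `check_service_account_key`; an empty list means none of these basic problems were detected. The check does not verify that the key is active or that the service account has the required roles.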

In Treasure Data, you must create and configure the data connection before running your query. As part of the data connection, you provide authentication to access the integration.

- Open TD Console.

- Navigate to Integrations Hub > Catalog.

- Search for and select Google Cloud Storage.

- Select Create Authentication.

- Type the credentials to authenticate.

- Type a name for your connection.

- Select Continue.

- Complete the instructions in Creating a Destination Integration.

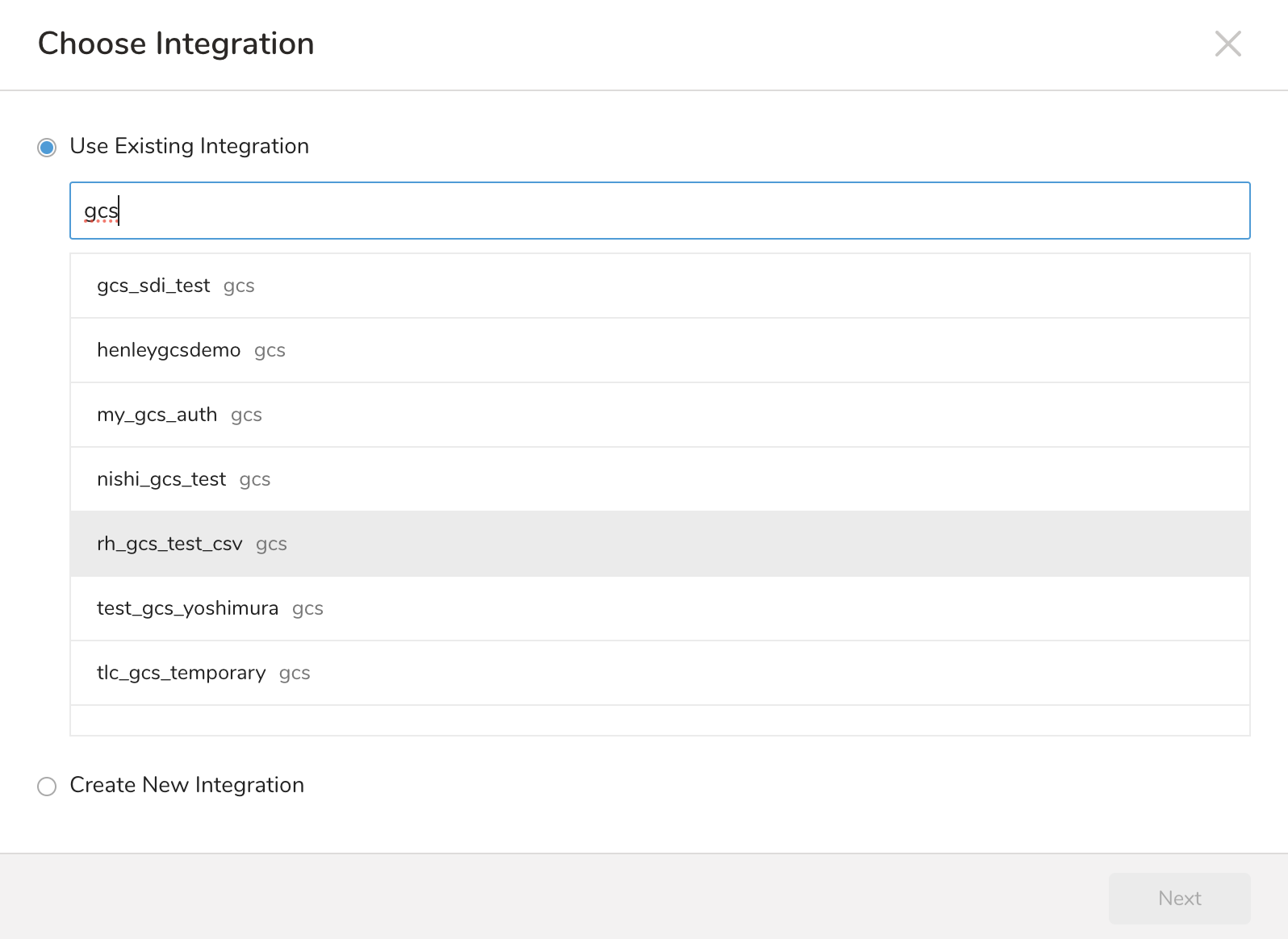

- Navigate to Data Workbench > Queries.

- Select a query for which you would like to export data.

- Run the query to validate the result set.

- Select Export Results.

- Select an existing integration authentication.

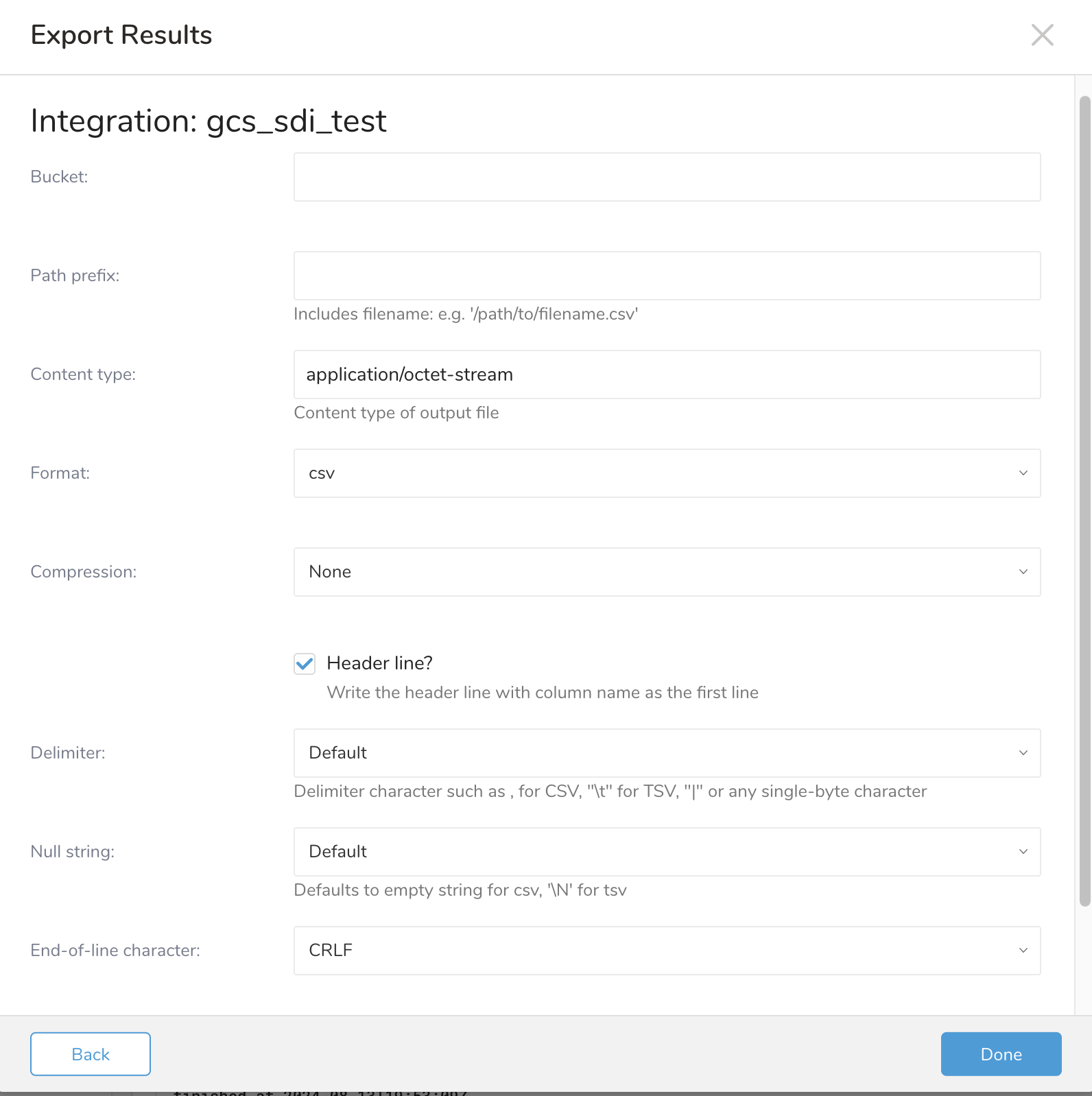

- Define any additional Export Results details. In your export integration content, review the integration parameters. For example, your Export Results screen might be different, or you might not have additional details to fill out.

- Select Done.

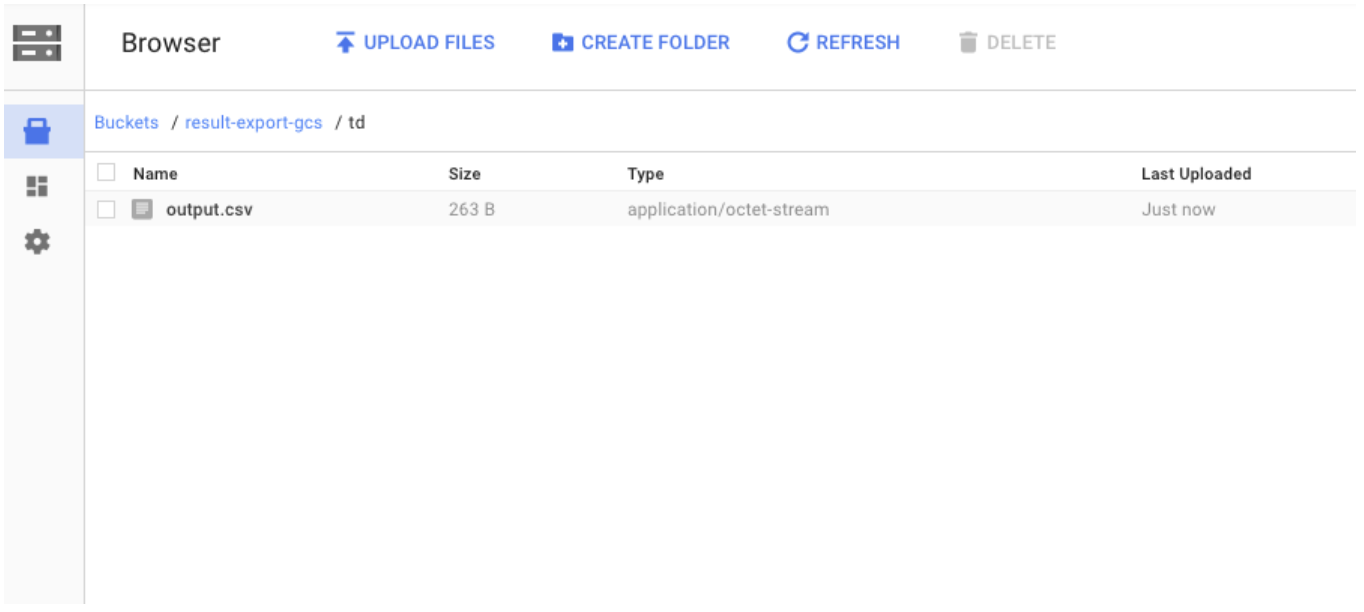

- Run your query.

- Validate that your data moved to the destination you specified.

| Parameter | Values | Description |
|---|---|---|
| bucket | | Destination Google Cloud Storage bucket name (string, required). |
| path_prefix | | Object path prefix, including the filename (string, required). Example: /path/to/filename.csv. |
| content_type | | MIME type of the output file (string, optional). Default: application/octet-stream. |
| format | csv, tsv | Output file format (string, required). |
| compression | none, gz, bzip2, zip_builtin, zlib_builtin, bzip2_builtin | Compression applied to the exported file (string, optional). Default: none. |
| header_line | true, false | Write the header line with column names as the first line (boolean, optional). Default: true. |
| delimiter | `,`, `\t`, `\|`, or another single-byte character | Column delimiter character. |
| null_string | | Substitution string for NULL values (string, optional). Default: empty string for CSV, \N for TSV. |
| end_of_line_character | CRLF, LF, CR | Line termination character (string, optional). Default: CRLF. |
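The constraints in the parameter table can be expressed as a small pre-flight check. The Python sketch below is illustrative only: the function and constant names are hypothetical, and the checks mirror just the required parameters and enumerated values listed in the table. It does not call any Treasure Data API.

```python
# Enumerated values copied from the parameter table.
ALLOWED = {
    "format": {"csv", "tsv"},
    "compression": {"none", "gz", "bzip2", "zip_builtin", "zlib_builtin", "bzip2_builtin"},
    "end_of_line_character": {"CRLF", "LF", "CR"},
}
# Parameters the table marks as required.
REQUIRED = ("bucket", "path_prefix", "format")


def validate_export_settings(settings: dict) -> list:
    """Return a list of problems with the export settings; empty means none found."""
    problems = [f"missing required parameter: {name}" for name in REQUIRED if not settings.get(name)]
    for name, allowed in ALLOWED.items():
        value = settings.get(name)
        if value is not None and value not in allowed:
            problems.append(f"{name} must be one of {sorted(allowed)}, got {value!r}")
    header = settings.get("header_line")
    if header is not None and not isinstance(header, bool):
        problems.append("header_line must be true or false")
    return problems


settings = {
    "bucket": "samplebucket",
    "path_prefix": "/path/to/filename.csv",
    "format": "csv",
    "compression": "gz",
}
print(validate_export_settings(settings))
```

Optional parameters that are left out are not flagged, because the integration applies the defaults shown in the table.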

    SELECT
      c0 AS EMAIL
    FROM
      e_1000
    WHERE c0 != 'email'

Upon successful completion of the query, the results are automatically exported to the specified Google Cloud Storage destination.

You can also send segment data to the target platform by creating an activation in the Audience Studio.

- Navigate to Audience Studio.

- Select a parent segment.

- Open the target segment, right-click, and then select Create Activation.

- In the Details panel, enter an Activation name and configure the activation according to the previous section on Configuration Parameters.

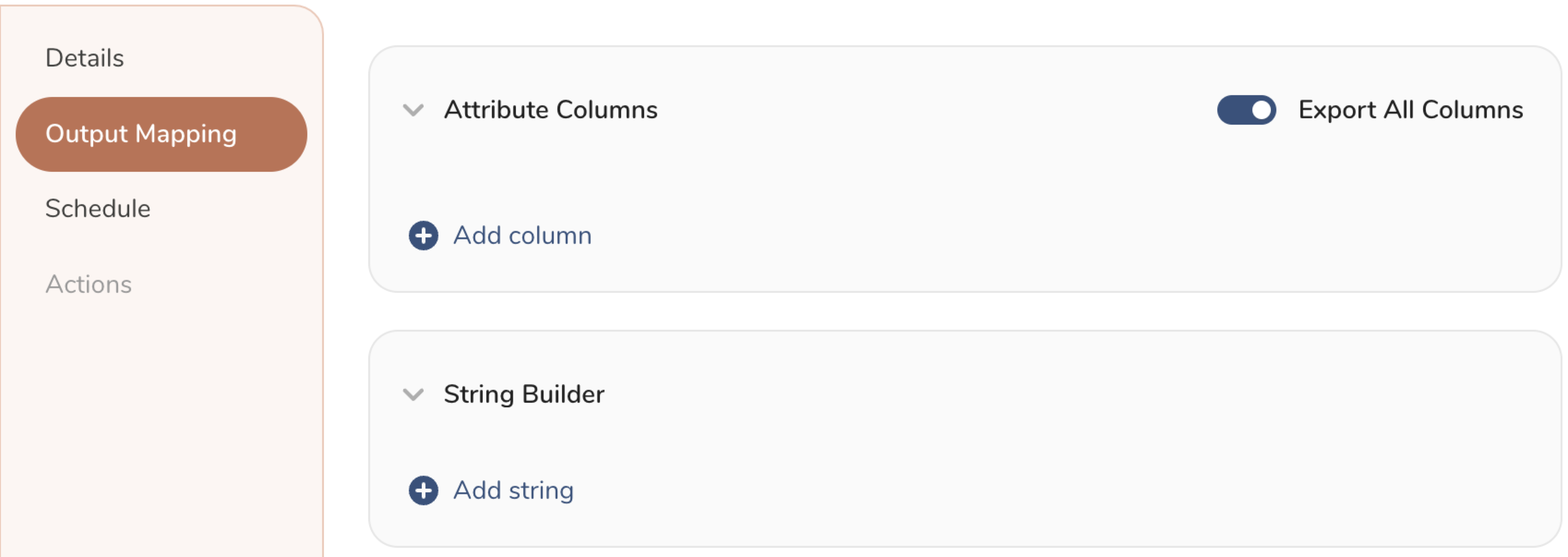

- Customize the activation output in the Output Mapping panel.

- Attribute Columns

- Select Export All Columns to export all columns without making any changes.

- Select + Add Columns to add specific columns for the export. The Output Column Name pre-populates with the same Source column name. You can update the Output Column Name. Continue to select + Add Columns to add new columns for your activation output.

- String Builder

- + Add string to create strings for export. Select from the following values:

- String: Choose any value; use text to create a custom value.

- Timestamp: The date and time of the export.

- Segment Id: The segment ID number.

- Segment Name: The segment name.

- Audience Id: The parent segment number.


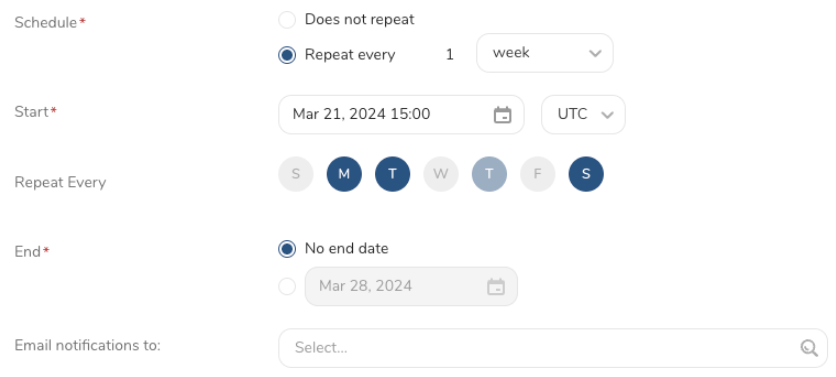

- Set a Schedule.

- Select the values to define your schedule and optionally include email notifications.

- Select Create.

If you need to create an activation for a batch journey, review Creating a Batch Journey Activation.

The following command allows you to set a scheduled query that sends query results to Google Cloud Storage.

- Specify your JSON keys in the following sample syntax.

- Use backslash to break a line without breaking the code syntax.

`'{"type":"gcs","bucket":"samplebucket","path_prefix":"/output/test.csv","format":"csv","compression":"","header_line":false,"delimiter":",","null_string":"","newline":"CRLF", "json_keyfile":"{\"private_key_id\": \"ABCDEFGHIJ\", \"private_key\": \"-----BEGIN PRIVATE KEY-----\\nABCDEFGHIJ\\nABCDEFGHIJ\\n-----END PRIVATE KEY-----\\n\", \"client_email\": \"ABCDEFGHIJ@developer.gserviceaccount.com\", \"client_id\": \"ABCDEFGHIJ.apps.googleusercontent.com\", \"type\": \"service_account\"}"}'`

For example:

    $ td sched:create scheduled_gcs "10 6 * * *" \
      -d dataconnector_db "SELECT id,account,purchase,comment,time FROM data_connectors" \
      -r '{"type":"gcs","bucket":"samplebucket","path_prefix":"/output/test.csv","format":"csv","compression":"","header_line":false,"delimiter":",","null_string":"","newline":"CRLF", "json_keyfile":"{\"private_key_id\": \"ABCDEFGHIJ\", \"private_key\": \"-----BEGIN PRIVATE KEY-----\\nABCDEFGHIJ\\nABCDEFGHIJ\\n-----END PRIVATE KEY-----\\n\", \"client_email\": \"ABCDEFGHIJ@developer.gserviceaccount.com\", \"client_id\": \"ABCDEFGHIJ.apps.googleusercontent.com\", \"type\": \"service_account\"}"}'

Options

| Option | Values |
|---|---|
| format | csv or tsv |
| compression | "" or gz |
| null_string | "" or \N |
| newline | CRLF, CR, or LF |
| json_keyfile | Escape newline \n with a backslash |
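Escaping the `json_keyfile` value by hand is error-prone, because the key is itself a JSON document nested as a string inside the outer JSON. One way to avoid manual escaping is to let a JSON library do it. The Python sketch below builds the value for `-r` using the same settings as the sample above. The function name is hypothetical and the key is a placeholder, not a real credential.

```python
import json
import shlex


def build_result_argument(service_account_key: dict, bucket: str, path_prefix: str) -> str:
    """Build the JSON string passed to the -r option of td sched:create."""
    result = {
        "type": "gcs",
        "bucket": bucket,
        "path_prefix": path_prefix,
        "format": "csv",
        "compression": "",
        "header_line": False,
        "delimiter": ",",
        "null_string": "",
        "newline": "CRLF",
        # json_keyfile is a JSON document embedded as a string; dumping it
        # first lets the outer json.dumps escape its quotes and newlines.
        "json_keyfile": json.dumps(service_account_key),
    }
    return json.dumps(result)


# Placeholder key mirroring the sample in this document.
key = {
    "private_key_id": "ABCDEFGHIJ",
    "private_key": "-----BEGIN PRIVATE KEY-----\nABCDEFGHIJ\n-----END PRIVATE KEY-----\n",
    "client_email": "ABCDEFGHIJ@developer.gserviceaccount.com",
    "client_id": "ABCDEFGHIJ.apps.googleusercontent.com",
    "type": "service_account",
}
argument = build_result_argument(key, "samplebucket", "/output/test.csv")
# shlex.quote wraps the value so it can be pasted after -r in a POSIX shell.
print("-r " + shlex.quote(argument))
```

In practice the key would be loaded from the downloaded credential file with `json.load` rather than typed inline. Each newline in the private key ends up as `\\n` in the printed argument, which matches the escaping rule in the Options table.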

- The Result Export can be scheduled to upload data to a target destination periodically.

- All import and export integrations can be added to a Treasure Workflow. The td workflow operator can be used to export a query result to a specified connector. For more information, see Workflow Operators.

The Embulk-encoder-Encryption document

Note: Please ensure that you compress your file before encrypting and uploading.

When you decrypt using non-built-in encryption, the file will return to a compressed format such as .gz or .bz2.

When you decrypt using built-in encryption, the file will return to raw data.