Learn more about Google BigQuery Import Integration.

You can write job results directly to your Google BigQuery. You can view how to import data from Google BigQuery.

- Basic knowledge of Treasure Data, including the TD Toolbelt.

- A Google Cloud Platform account

- Nested or repeated data types like ARRAY are not supported as destination columns.

Treasure Data supports the "append, replace, replace backup, delete" modes.

To use this feature, you need:

- Project ID

- JSON Credential

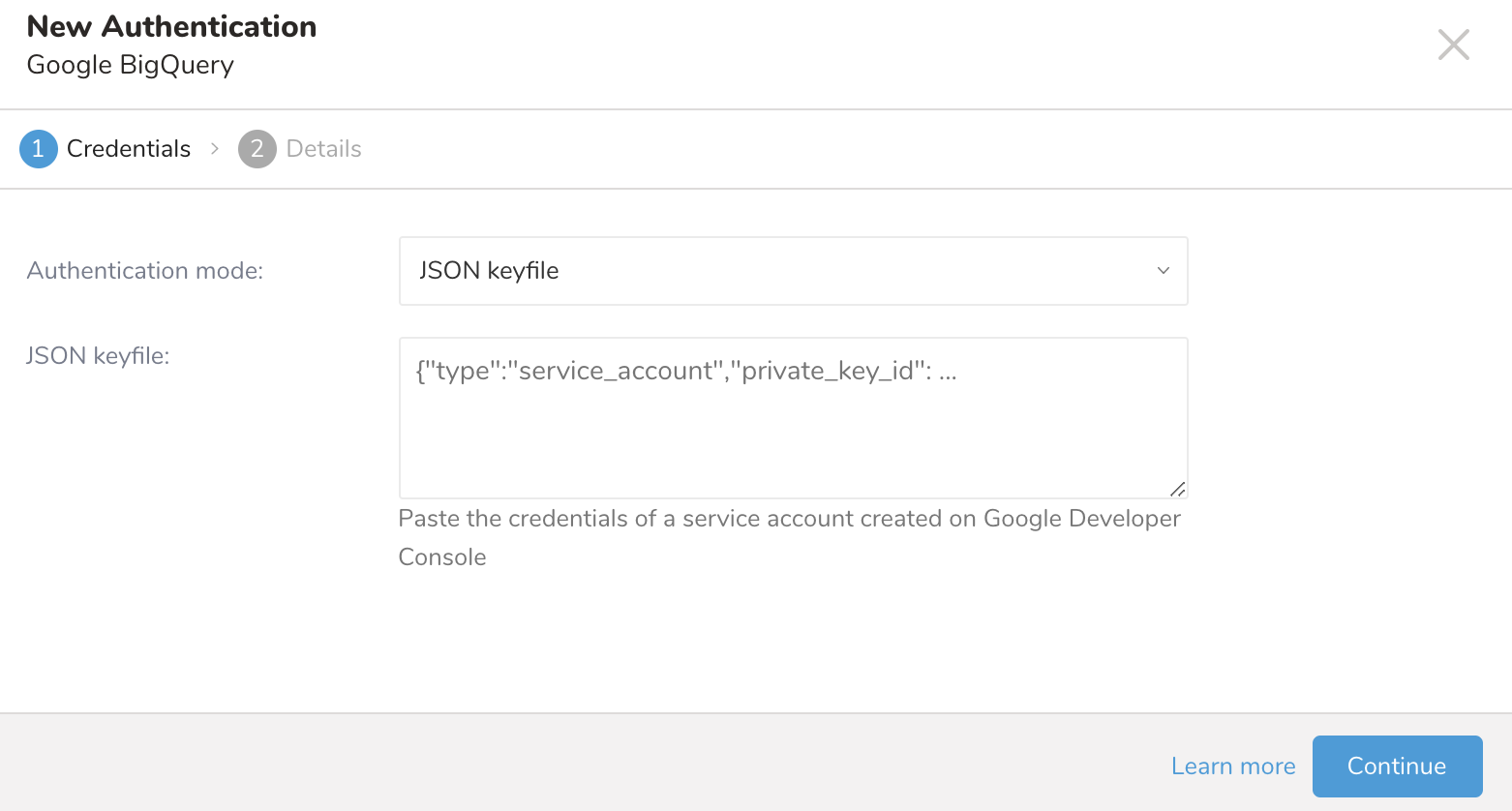

The integration with Google BigQuery is based on server-to-server API authentication.

Navigate to your Google Developer Console.

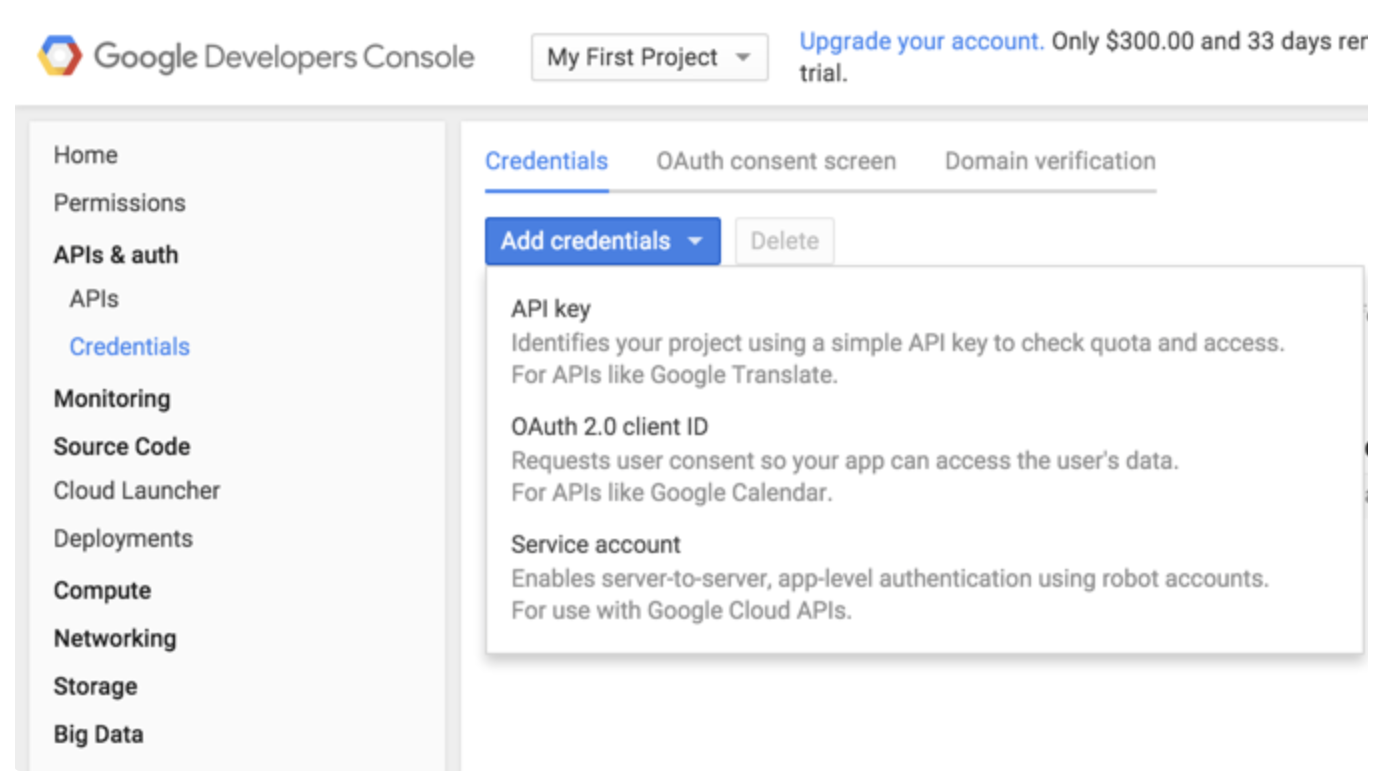

Select APIs & auth > Credentials.

Select Service account.

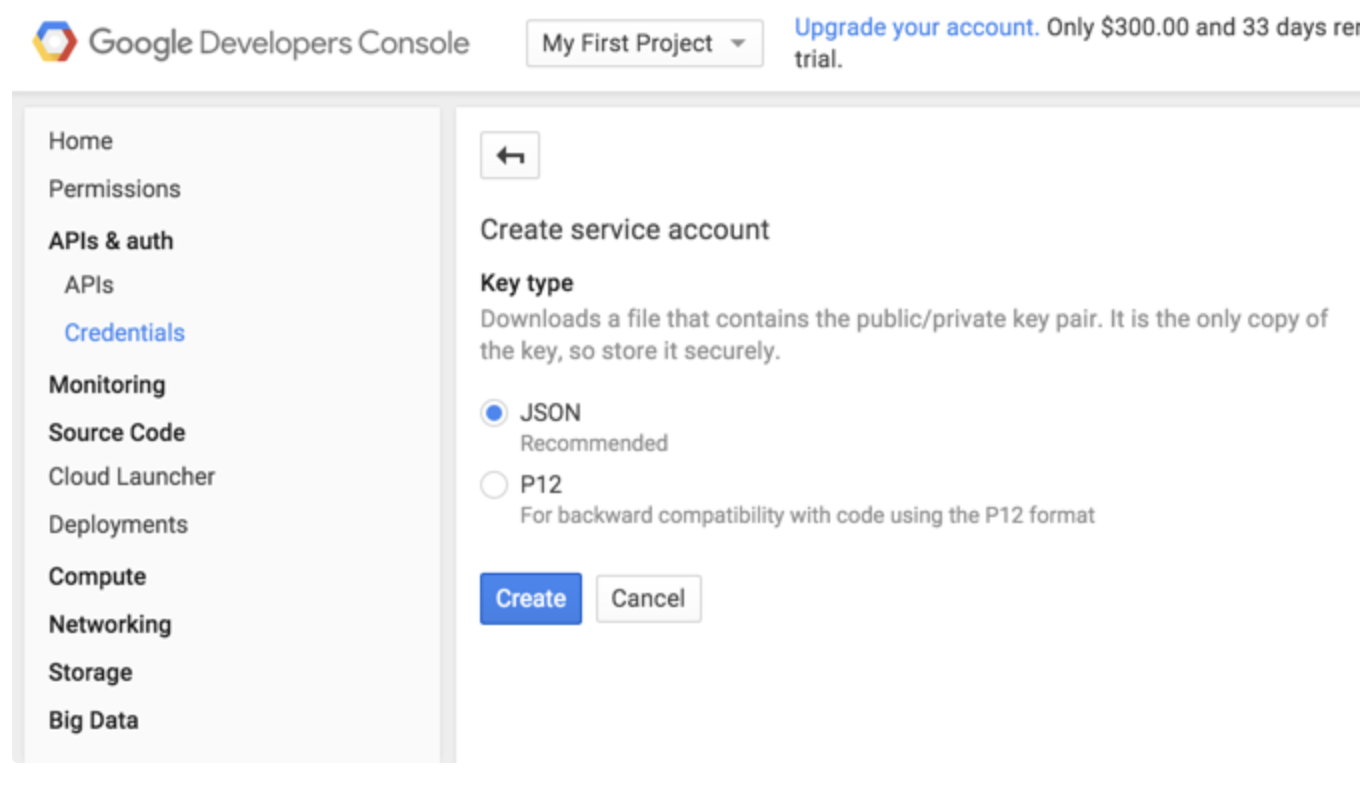

Select the JSON-based key type, which is recommended by Google. The key is downloaded automatically by the browser.

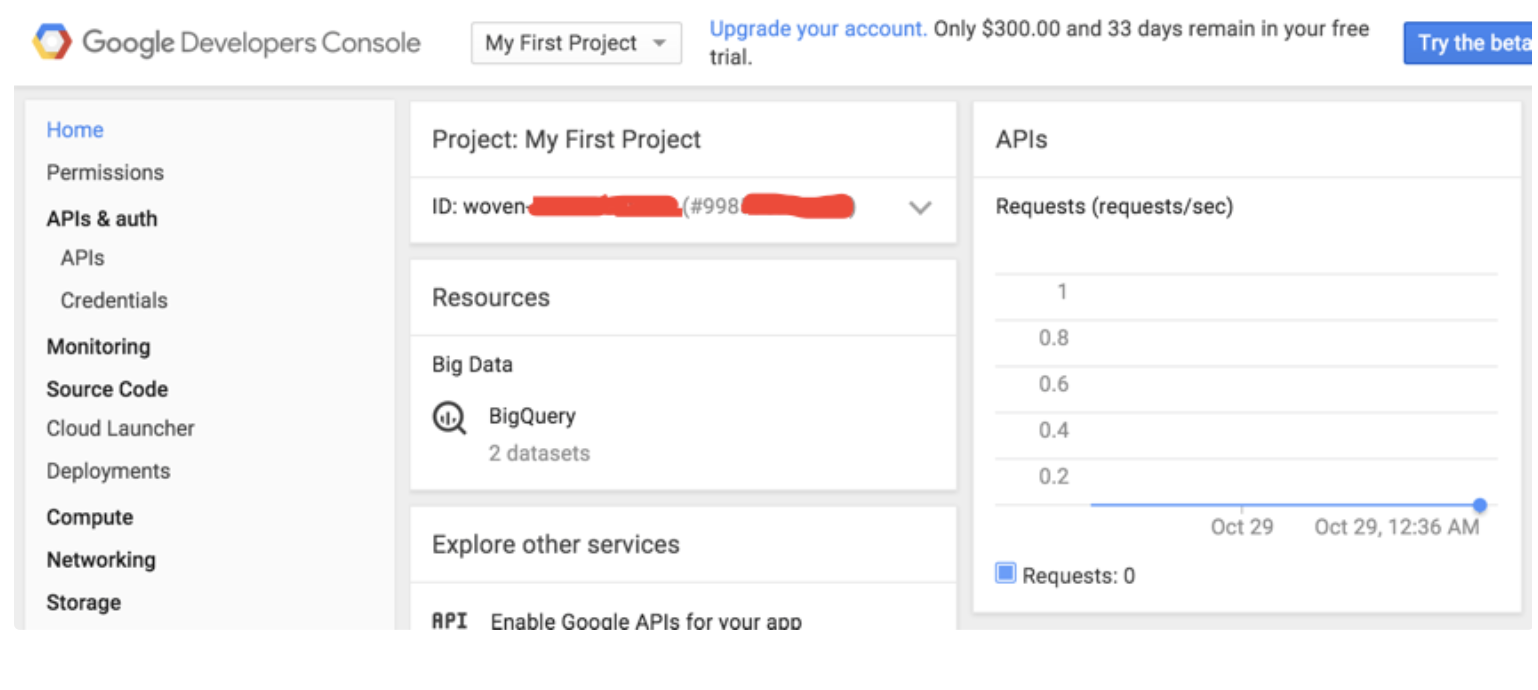

- Navigate to your Google Developer Console.

- Select Home.

- Locate your Project ID.

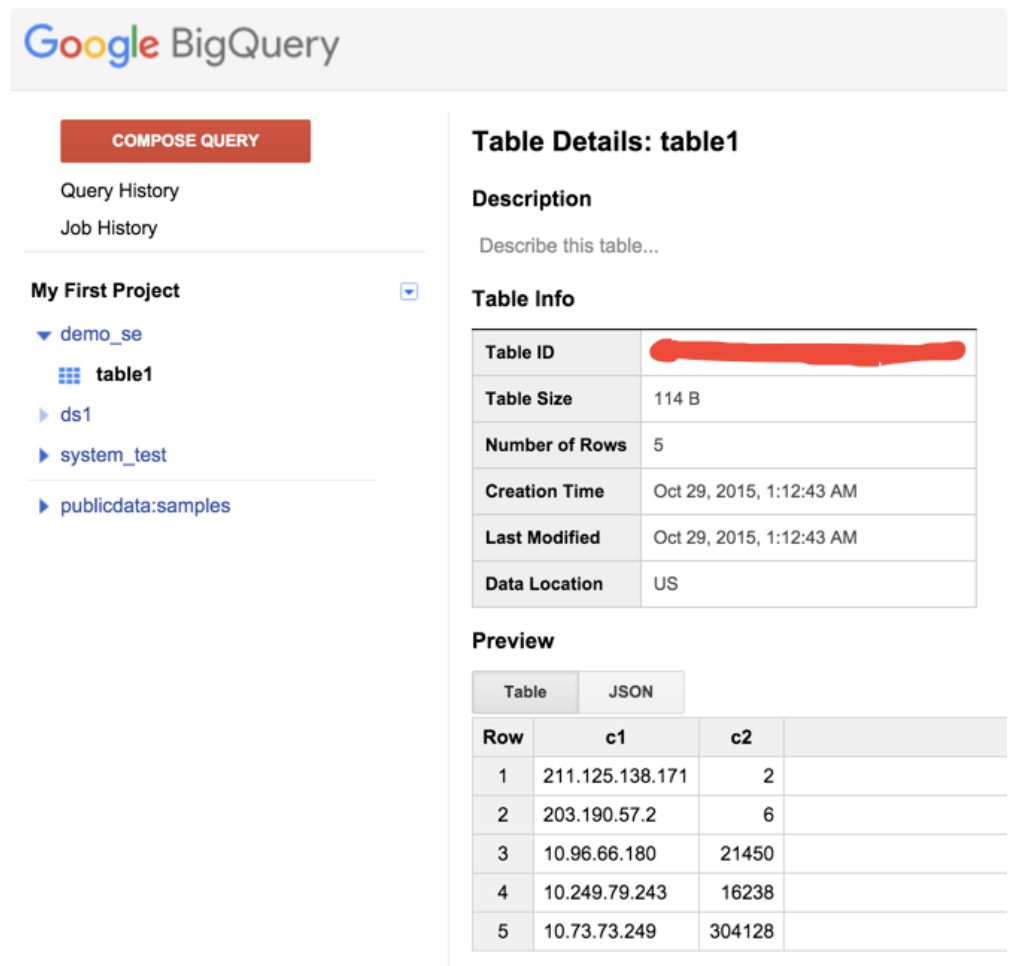

Create your Dataset and Table from your BigQuery console.

Navigate to TD Console.

Navigate to Integrations Hub > Catalog.

Select Google Big Query.

Complete all the information as follows:

Write the query. The query results must be matched with the pre-defined schema on BigQuery. After you execute the job, Treasure Data query results are automatically imported into Google BigQuery.

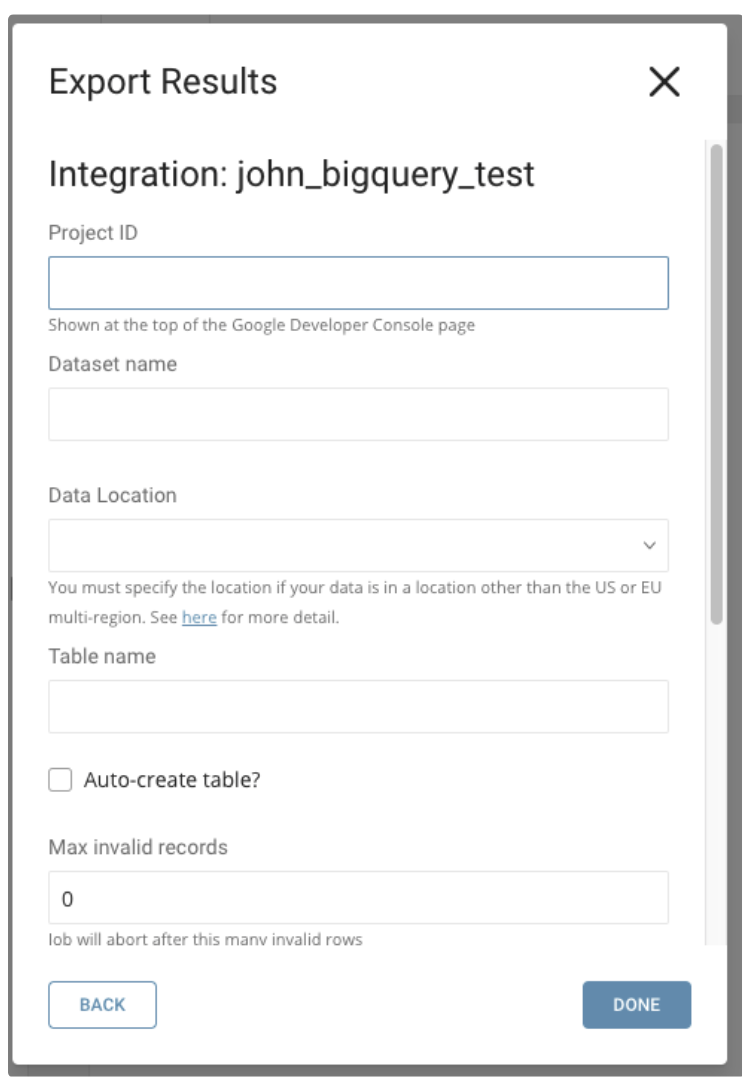

When the table already exists in BigQuery, you see a pane similar to the following:

Fill in Project ID, Dataset Name and Table Name get from BigQuery.

Write the query. The query results must match the pre-defined schema on BigQuery. After you execute the job, Treasure Data query results are automatically imported into Google BigQuery.

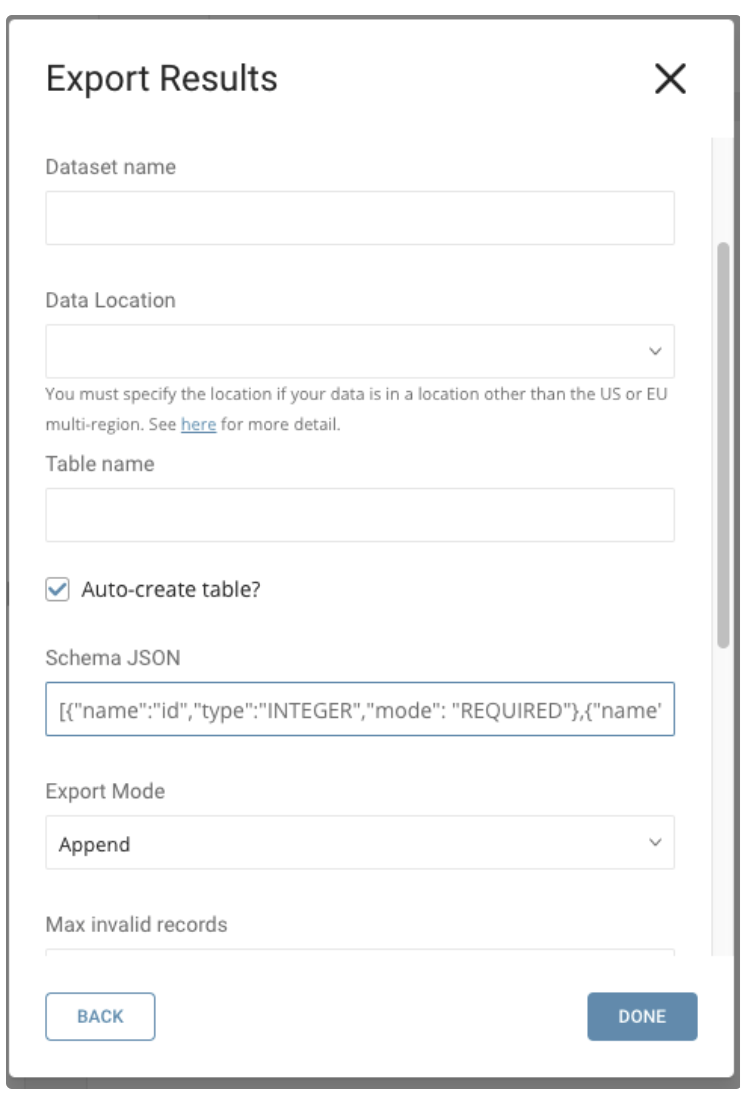

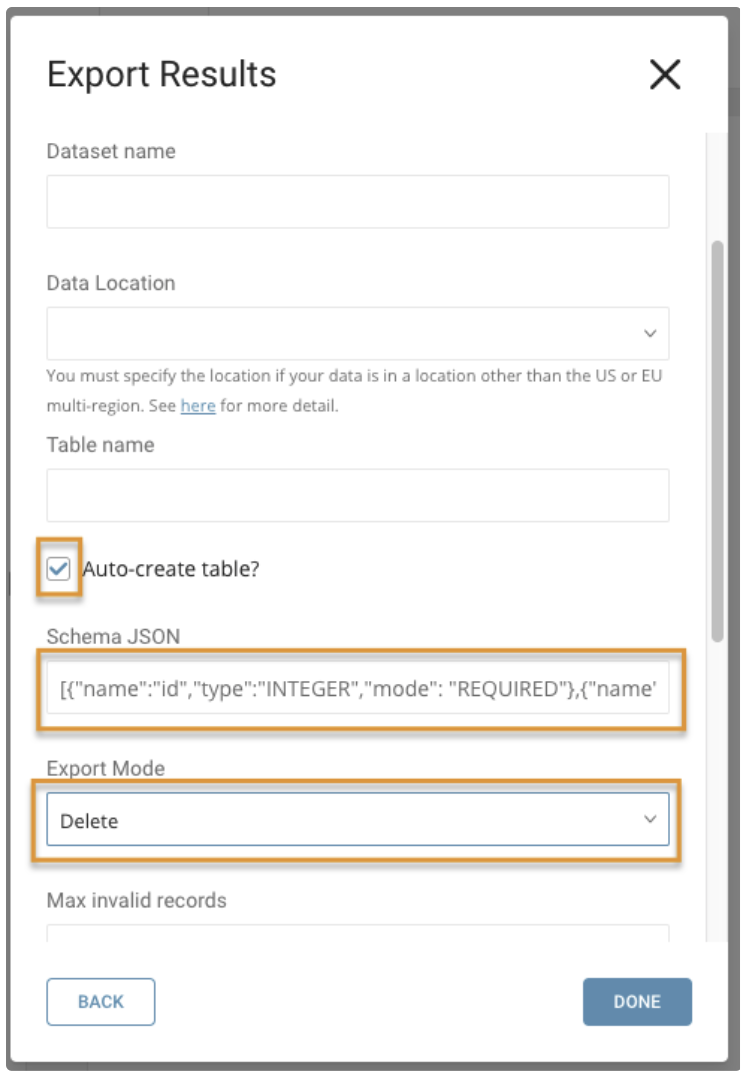

When a table does not exist in BigQuery:

Fill in Project ID, Dataset Name get from BigQuery.

Provide a name for the table that will be created in BigQuery Dataset. Check Auto-create table, provide a schema for the new table. Schema JSON must be matched with the query results(number of field and data type). After you execute the job, a new table with name and schema as your input will be created and Treasure Data query results are automatically imported into this table Google BigQuery.

Example Schema JSON:

[{"name":"id","type":"INTEGER","mode": "REQUIRED"},{"name":"name","type":"STRING"}]

You see a pane similar to the following:

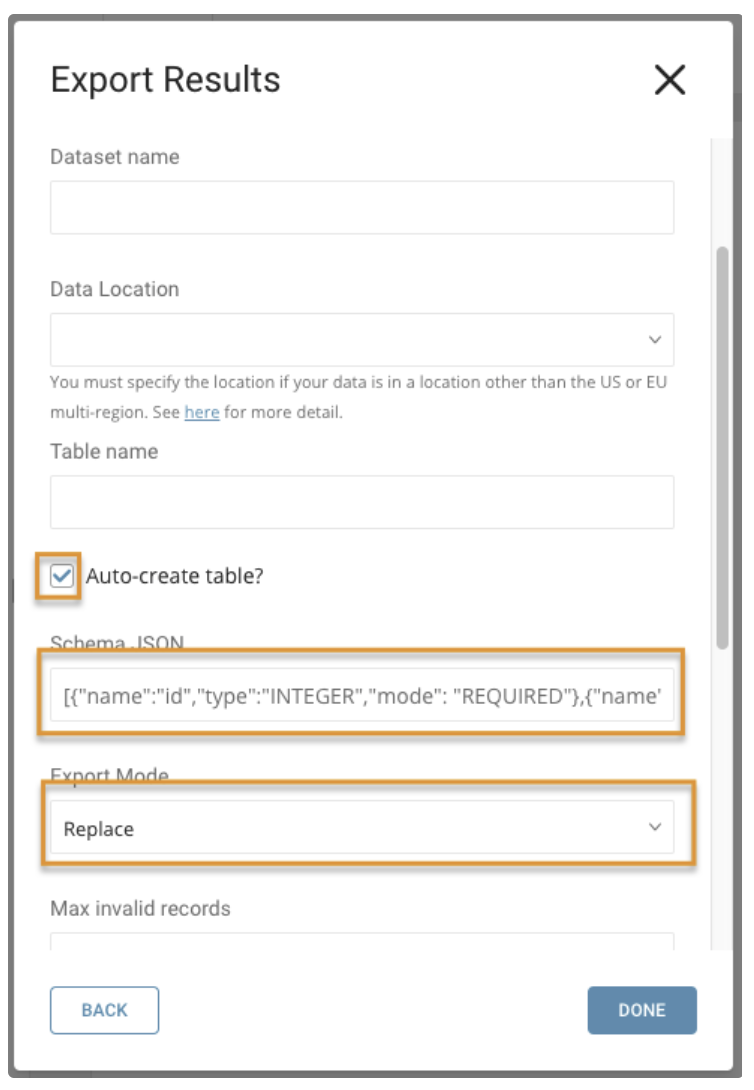

Fill in Project ID, Dataset Name and Table Name get from BigQuery.

Select Auto-create table and provide a schema for the table to be replaced. The value in the Schema JSON field must match the query results (number of fields and data type). Choose Replace from drop down. After you execute the job, if the table already exists in BigQuery, or if the value in Schema JSON is different from table schema, then table is handled as replaced data. If the table does not exist in BigQuery then a new table is created with name and schema as your input. Treasure Data query results are automatically imported into this table.

You see a pane similar to the following:

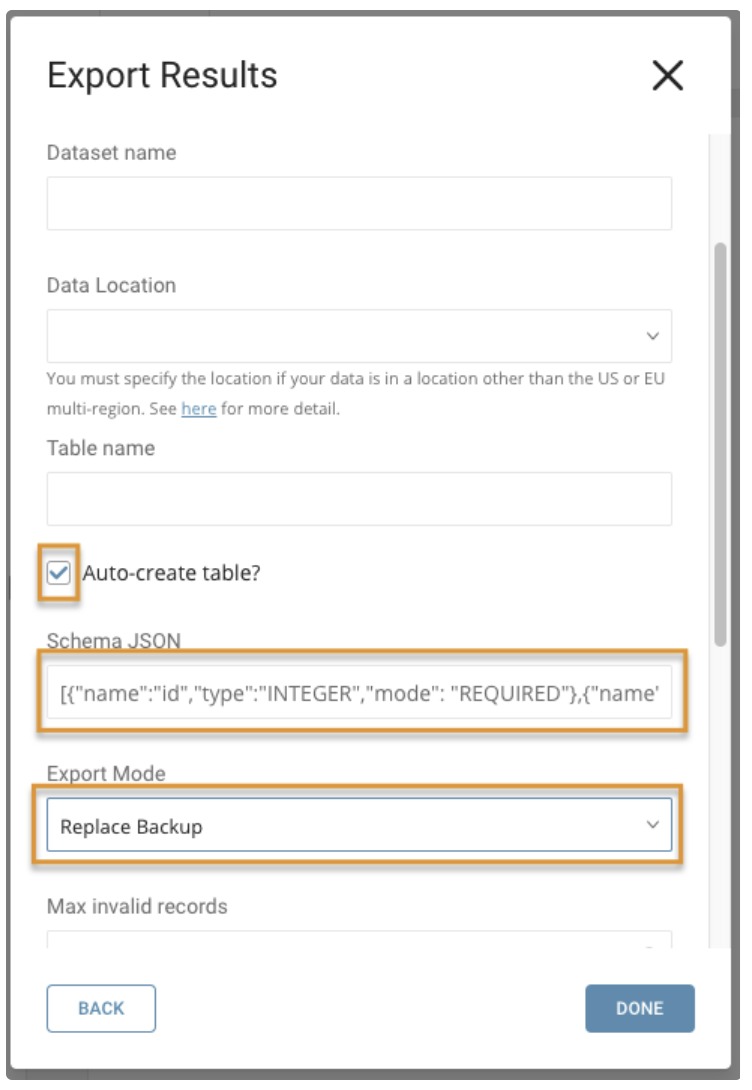

Replace backup mode is similar to replace mode, but in replace backup mode, both data and schema are backed up into a table that is assigned the old name plus "_old" as the suffix.

You see a pane similar to the following:

Fill in Project ID, Dataset Name and Table Name get from BigQuery.

Select Auto-create table and provide a schema for the table to be replaced. The value in the Schema JSON field must match the query results (number of fields and data type). Choose Delete from the drop down. If the table already exists in BigQuery, when you execute the job, the table is deleted and a new table is added with the schema as JSON. If table does not exist in BigQuery, then a new table is created with name and schema as your input. Treasure Data query results are automatically imported into this newly created table.

BigQuery's data types are automatically converted to a corresponding Treasure Data type, as indicated in the following table. If you include unsupported types in the schema JSON or if query result miss-match the data type with schema JSON, you receive errors.

| Treasure Data | BigQuery |

|---|---|

| string | STRING |

| long | INTEGER |

| double | FLOAT |

| long (true is 1, false is 0) | BOOLEAN |

| string (yyyy-MM-dd HH:mm:ss.SSS) | TIMESTAMP |

The following command allows you to set a scheduled query with Results Output to BigQuery. Designate your json_key and escape newline with a backslash.

For example,

$ td sched:create scheduled_bigquery "10 6 14 12 *" \

-d dataconnector_db "SELECT id,account,purchase,comment,time FROM data_connectors" \

-r '{"type":"bigquery","project":"YOUR_PROJECT","dataset":"YOUR_DB","table":"YOUR_TABLE","auto_create_table":true,"max_bad_records":0,"ignore_unknown_values":true,"allow_quoted_newlines":true,"schema_file":"[{\"name\": \"id\", \"type\": \"INTEGER\"}, {\"name\": \"account\", \"type\": \"STRING\"},{\"name\": \"purchase\", \"type\": \"STRING\"}, {\"name\": \"comment\", \"type\": \"STRING\", \"mode\": \"REQUIRED\"}, {\"name\": \"time\", \"type\": \"TIMESTAMP\", \"mode\": \"REQUIRED\"}]", "json_keyfile":"{\"private_key_id\": \"ABDE\", \"private_key\": \"-----BEGIN PRIVATE KEY-----\\nABCDE\\nABCDE\\nABCDE\\n-----END PRIVATE KEY-----\\n\", \"client_email\": \"ABCDE.gserviceaccount.com\", \"client_id\": \"ABCDE.apps.googleusercontent.com\", \"type\": \"service_account\"}"}'You can also send segment data to the target platform by creating an activation in the Audience Studio.

- Navigate to Audience Studio.

- Select a parent segment.

- Open the target segment, right-mouse click, and then select Create Activation.

- In the Details panel, enter an Activation name and configure the activation according to the previous section on Configuration Parameters.

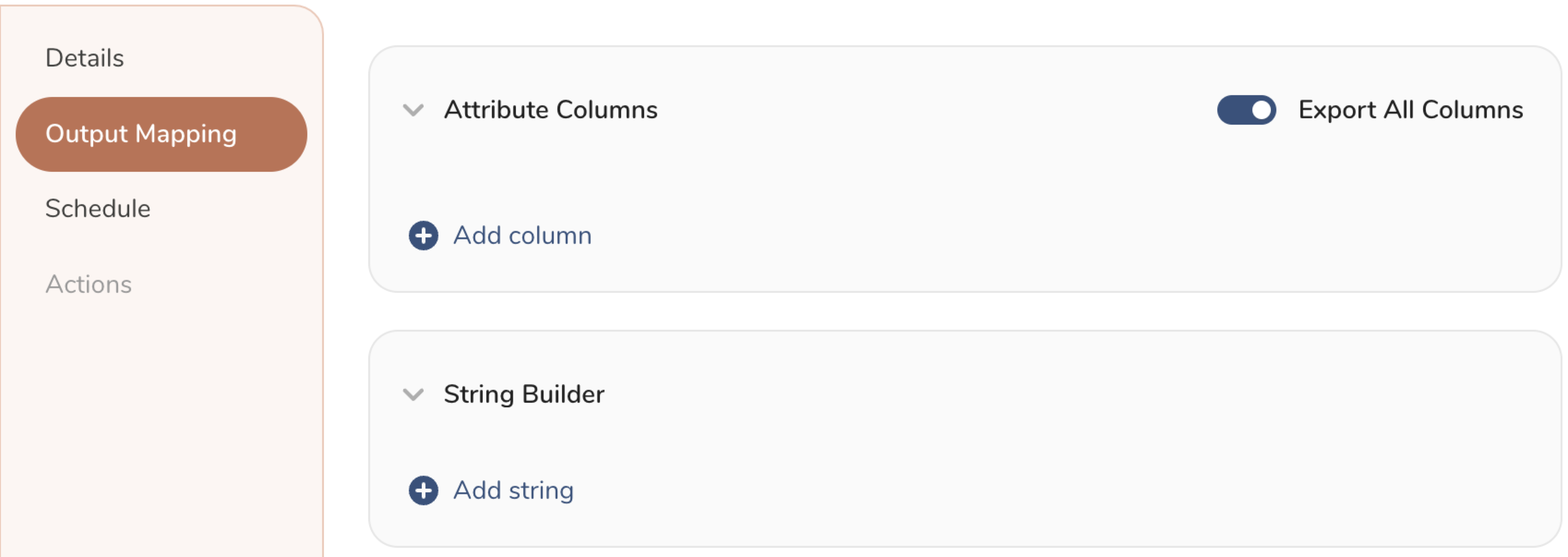

- Customize the activation output in the Output Mapping panel.

- Attribute Columns

- Select Export All Columns to export all columns without making any changes.

- Select + Add Columns to add specific columns for the export. The Output Column Name pre-populates with the same Source column name. You can update the Output Column Name. Continue to select + Add Columnsto add new columns for your activation output.

- String Builder

- + Add string to create strings for export. Select from the following values:

- String: Choose any value; use text to create a custom value.

- Timestamp: The date and time of the export.

- Segment Id: The segment ID number.

- Segment Name: The segment name.

- Audience Id: The parent segment number.

- + Add string to create strings for export. Select from the following values:

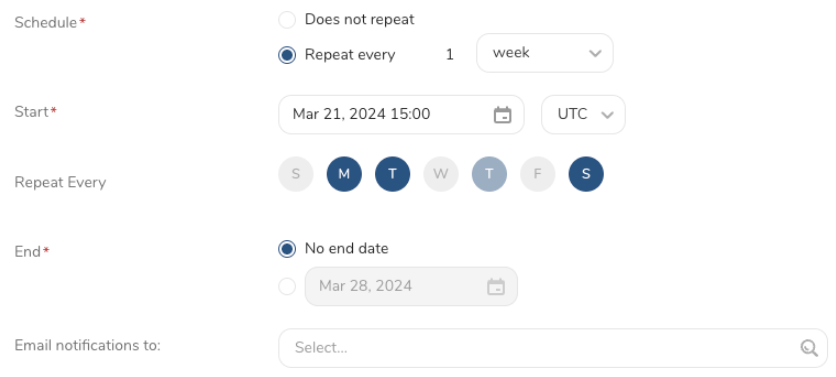

- Set a Schedule.

- Select the values to define your schedule and optionally include email notifications.

- Select Create.

If you need to create an activation for a batch journey, review Creating a Batch Journey Activation.