You can use the Facebook Page Insights integration to import Facebook Page data into Treasure Data. You need the following before you start:

- Basic knowledge of Treasure Data

- Basic knowledge of Facebook Graph API

- Required permissions for downloading Facebook Page data

- Authorized Treasure Data account access

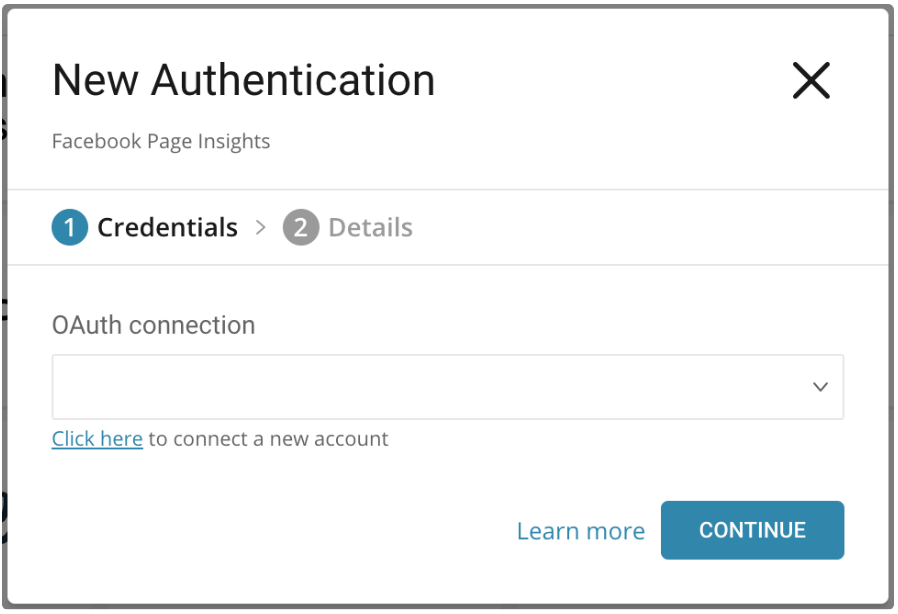

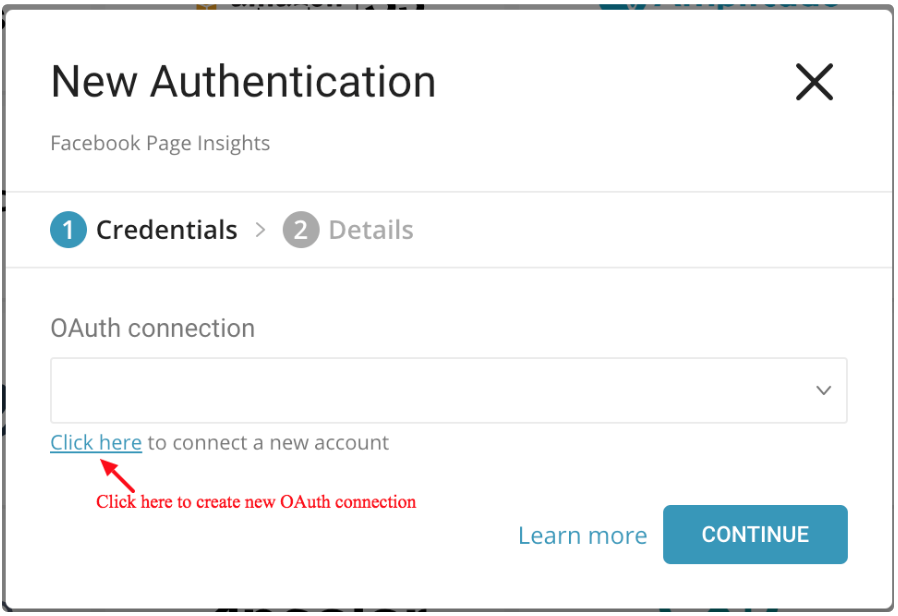

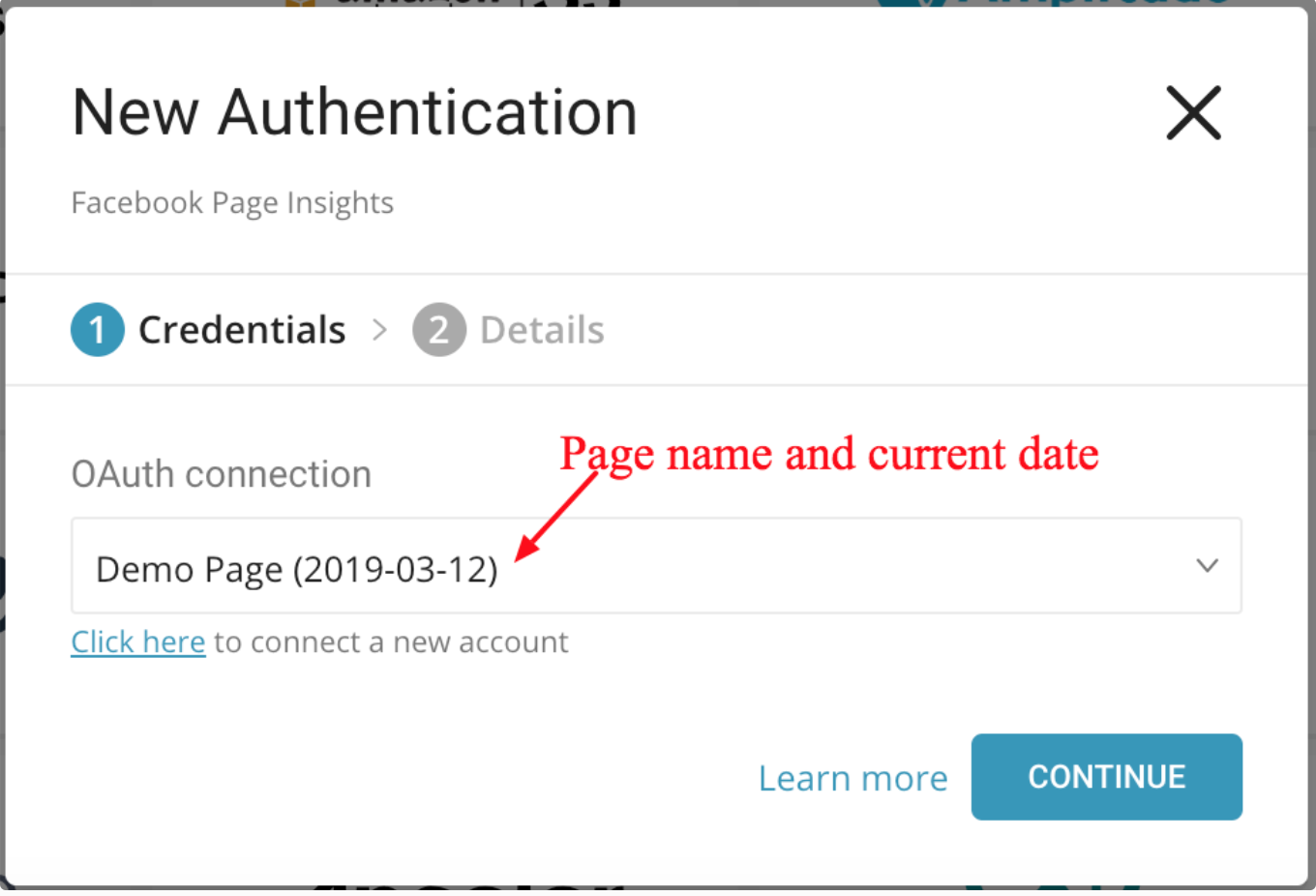

Go to Integrations Hub > Catalog. Search and select Facebook Page Insights. A dialog will open.

You can select an existing OAuth connection for Facebook or click the link under OAuth connection to create a new connection.

Log in to your Facebook account in the popup window and grant access to the Treasure Data app.

You will be redirected back to the TD Console. Repeat the first step (Create a new authentication) and choose your new OAuth connection.

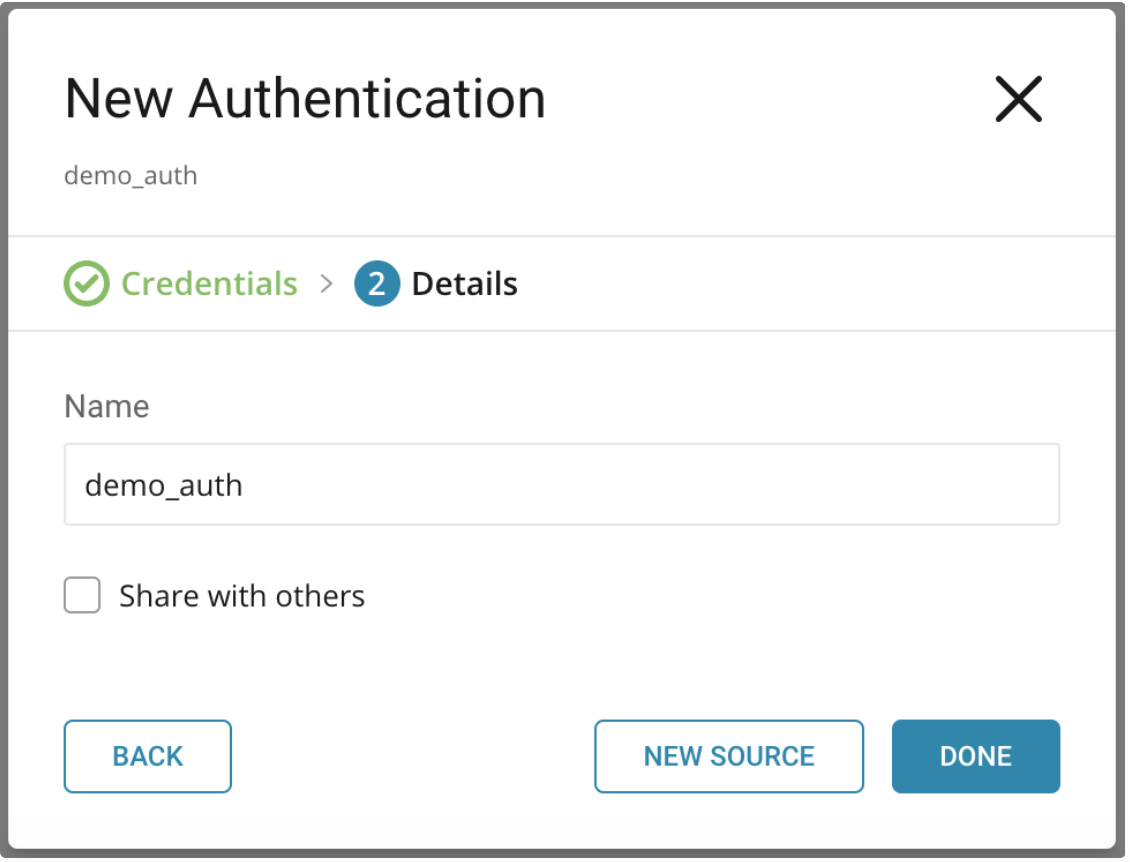

Name your new authentication. Select Done.

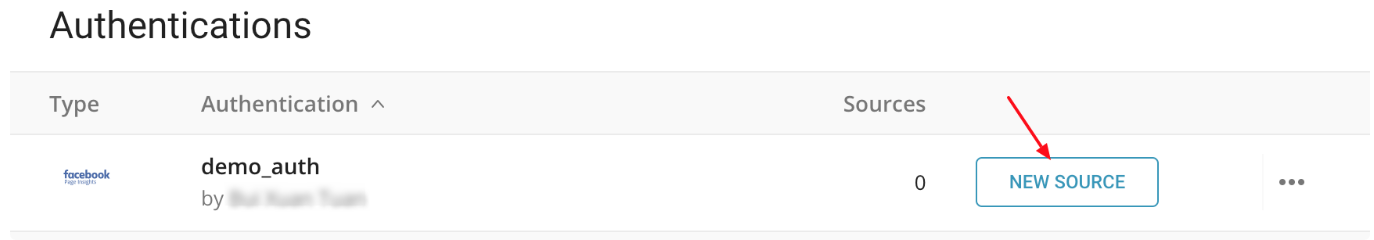

In Authentications, configure the New Source.

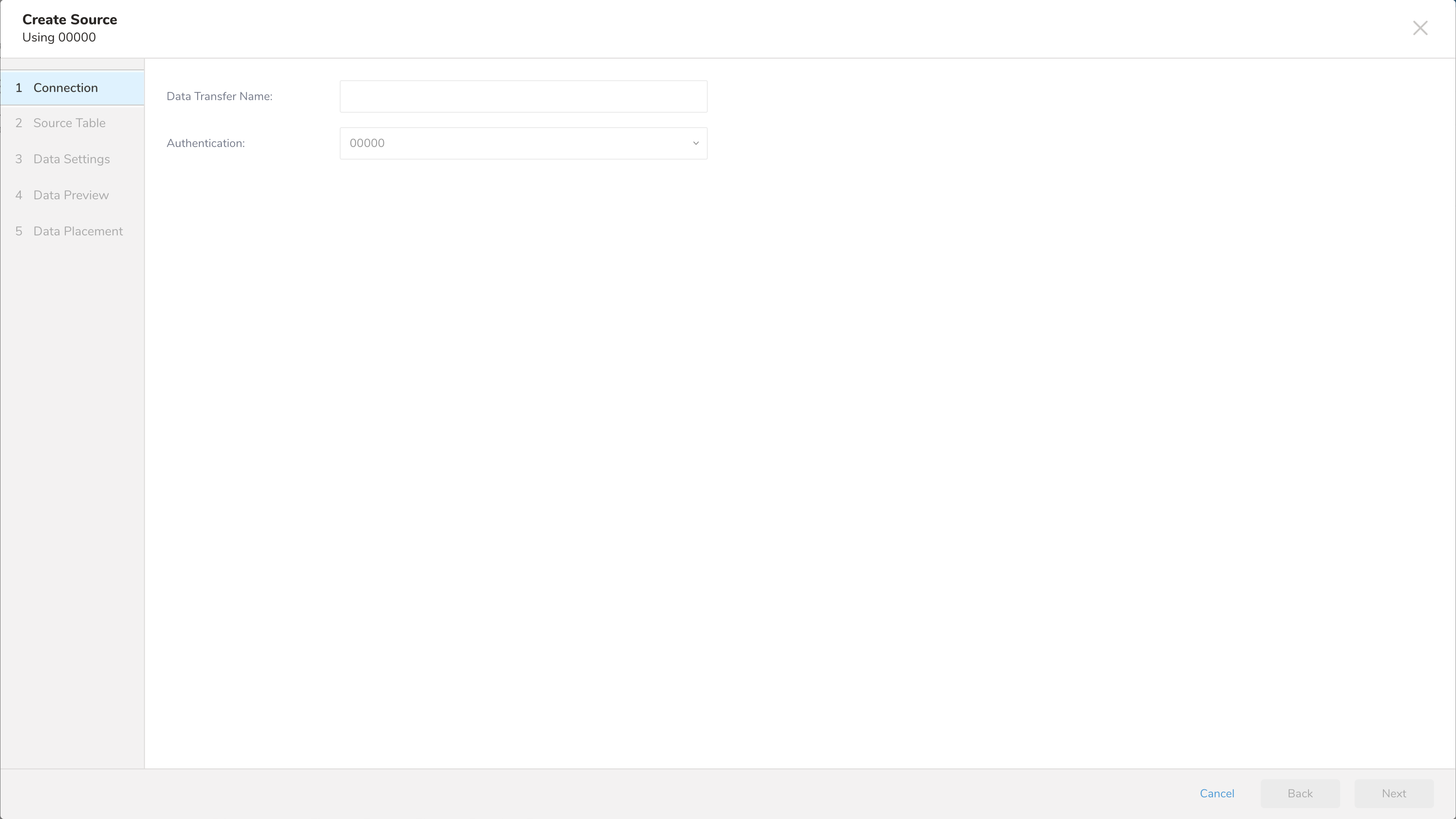

You can name the Source in this dialog by editing the Data Transfer Name.

- Name the Source in the Data Transfer field.

- Select Next. The Source Table dialog opens.

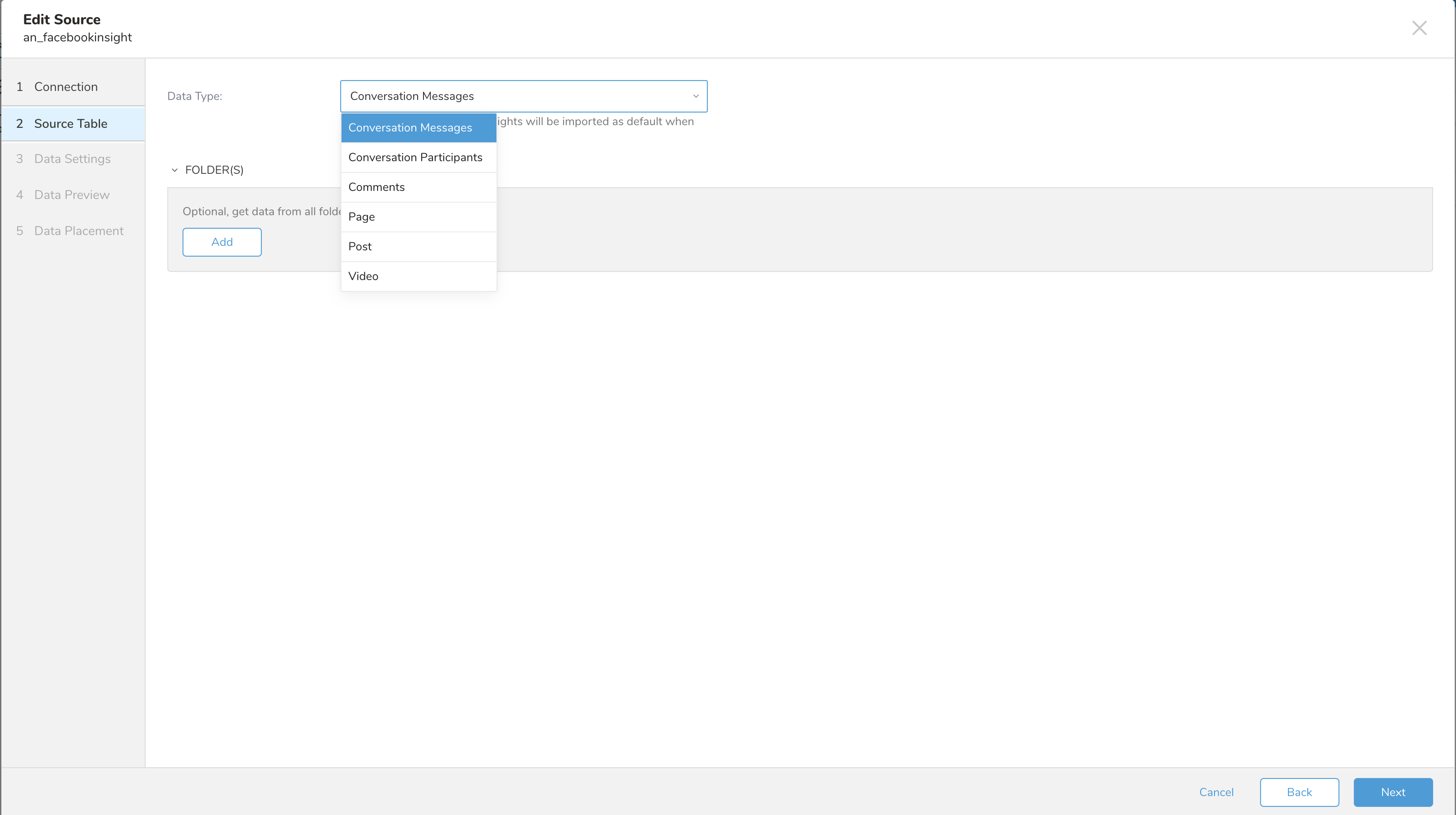

In the Source Table, edit the parameters and select Next.

| Parameter | Description |
|---|---|
| Data Type | Supported data types: Page, Post, Video, Conversation Messages, Conversation Participants, Comments |
| Folder(s) | Conversation folders |

For the data type Video, the only period supported is Lifetime.

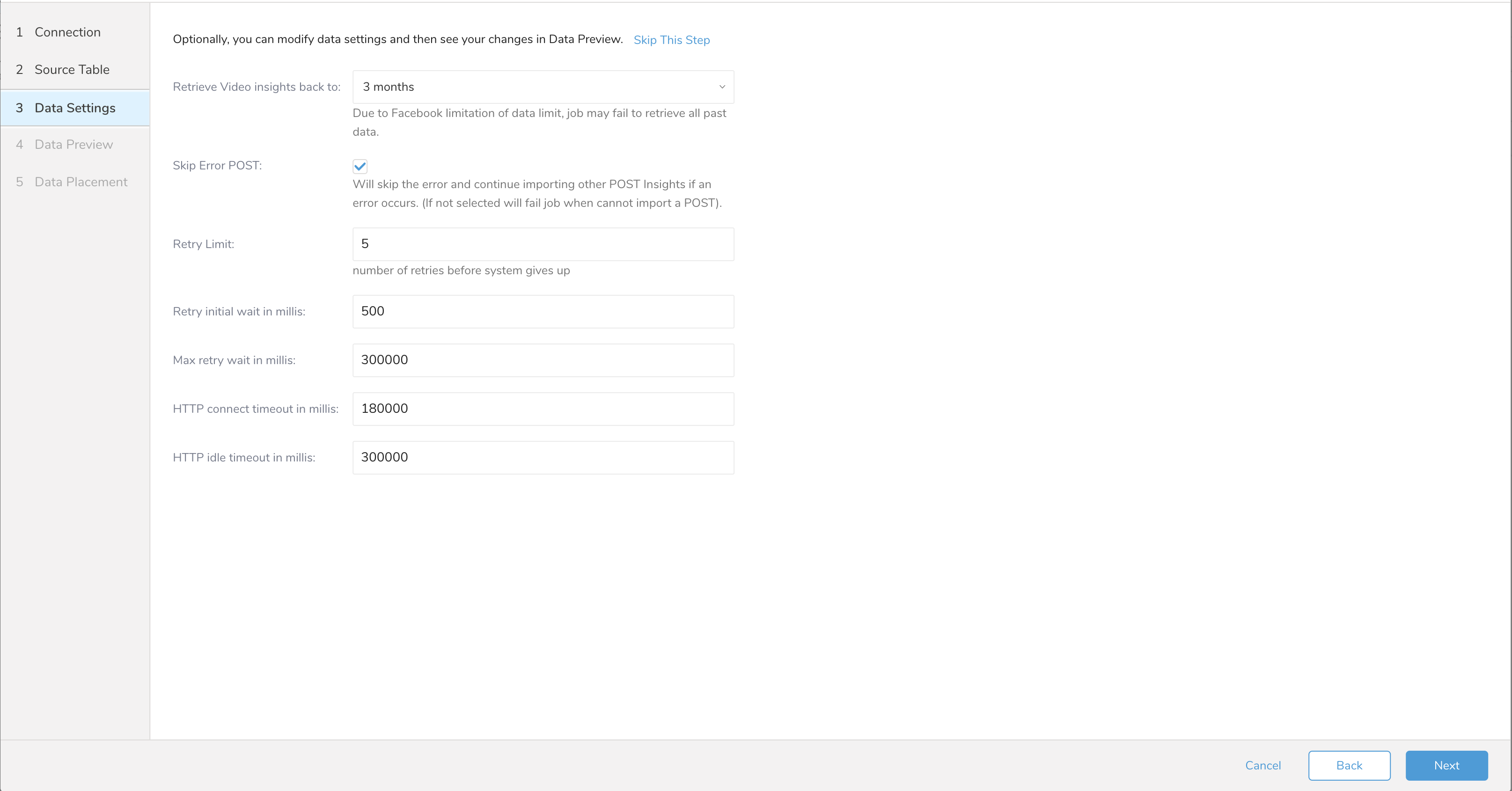

In this dialog, you can edit data settings or skip this step.

- Edit the Data Settings parameters.

- Select Next.

| Parameters | Description |
|---|---|
| Retrieve Video insights back to | Due to Facebook-specified data limits, a job may fail to retrieve all past data. The default setting is to import the last three months of data for Video Insights. Update this value to import more data. |
| Skip Error POST | Skip errors that occur when importing Post insights. Default: true. |
| Retry Limit | The number of retries before the connector stops trying to connect and retrieve data. |
| Retry initial wait in millis | Initial interval, in milliseconds, to wait before retrying after a recoverable error. |
| Max retry wait in millis | Maximum time in milliseconds between retry attempts. |
| HTTP connect timeout in millis | HTTP connection timeout in milliseconds. |
| HTTP idle timeout in millis | HTTP idle timeout in milliseconds. |
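
To get a feel for how the retry settings interact, the following is a minimal sketch that lists the wait before each retry. It assumes the wait doubles after every attempt and is capped at the maximum wait; the doubling factor is an assumption, since this document only states that the backoff is exponential. The function name `backoff_schedule` is hypothetical, and the default values are the ones listed for `retry_limit`, `retry_initial_wait_millis`, and `max_retry_wait_millis` in the CLI option table.

```python
def backoff_schedule(retry_limit=7, initial_wait_ms=500, max_wait_ms=300_000):
    """Return the wait (in milliseconds) before each retry attempt.

    Assumption: the wait doubles on every attempt and never exceeds
    max_wait_ms. The connector's real multiplier is not documented here.
    """
    return [
        min(initial_wait_ms * 2 ** attempt, max_wait_ms)
        for attempt in range(retry_limit)
    ]


waits = backoff_schedule()
print(waits)       # [500, 1000, 2000, 4000, 8000, 16000, 32000]
print(sum(waits))  # 63500 ms of waiting in total before giving up
```

Under these assumptions the default settings never reach the maximum wait; the cap only matters if you raise the retry limit or the initial wait.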

You can see a preview of your data before running the import by selecting Generate Preview. Data preview is optional and you can safely skip to the next page of the dialog if you choose to.

- Select Next. The Data Preview page opens.

- If you want to preview your data, select Generate Preview.

- Verify the data.

For data placement, select the target database and table where you want your data placed and indicate how often the import should run.

Select Next. Under Storage, create a new database or select an existing one, and create a new table or select an existing one, to hold the imported data.

Select a Database > Select an existing or Create New Database.

Optionally, type a database name.

Select a Table > Select an existing or Create New Table.

Optionally, type a table name.

Choose the method for importing the data.

- Append (default): Data import results are appended to the table. If the table does not exist, it is created.

- Always Replace: Replaces the entire content of an existing table with the result output of the query. If the table does not exist, a new table is created.

- Replace on New Data: Replaces the entire content of an existing table with the result output only when there is new data.

Select the Timestamp-based Partition Key column. If you want to set a different partition key seed than the default key, you can specify the long or timestamp column as the partitioning time. As a default time column, it uses upload_time with the add_time filter.

Select the Timezone for your data storage.

Under Schedule, you can choose when and how often you want to run this query.

- Select Off.

- Select Scheduling Timezone.

- Select Create & Run Now.

- Select On.

- Select the Schedule. The UI provides these four options: @hourly, @daily, @monthly, or custom cron.

- You can also select Delay Transfer and add a delay of execution time.

- Select Scheduling Timezone.

- Select Create & Run Now.

After your transfer has run, you can see the results of your transfer in Data Workbench > Databases.

You can use the Treasure Data Console to configure your connection.

Open a terminal and run the following command to install the newest TD Toolbelt.

Facebook provides three types of tokens. You will need the Page Access Token. We recommend that you select the never-expiring Page Access Token.

To obtain the never-expiring Page Access Token, follow the instructions here: https://www.rocketmarketinginc.com/blog/get-never-expiring-facebook-page-access-token/

Using a text editor, create a file called config.yml. Copy and paste the following information, replacing the placeholder text with your Facebook connector info.

The in section specifies what comes into the connector from Facebook, and the out section specifies what the connector sends to the database in Treasure Data. For more details on available out modes, see Appendix.

    in:
      type: "facebook_page_insights"
      access_token: "[your Facebook Page token]"
      page_id: [your Facebook Page ID]
      data_type: page
      incremental: true
      select_all_metrics: true
      since: 2017-01-01
      until: 2017-01-31
    out:
      mode: append

Configuration keys and descriptions are as follows:

| Option name | Description | Type | Required? | Default Value |
|---|---|---|---|---|
| access_token | Facebook Page Access Token. | string | yes | — |
| page_id | Facebook Page ID. See Addendum. | string | yes | — |
| data_type | One of: page, post, video, conversation_message, conversation_participant, comment. | string | optional | page |
| select_all_metrics | Import all supported insight metrics for the current data type. When this is set, you don't need to set metric_presets or metrics. Applicable for Page and Post. See Available Metrics. | bool | optional | |
| metric_presets | Predefined category of metrics or group of related metrics. See Supported Preset Metrics. | array | optional | — |
| metrics | Facebook Graph insight metrics. You can specify as many metrics as you need. This option overrides metric_presets if both are specified. See Supported Metrics. | array | optional | — |
| since | Lower bound of the time range to consider. Supported formats: yyyy-MM-dd or Unix time, e.g. 1584697547. | string | optional | — |
| until | Upper bound of the time range to consider. Supported formats: yyyy-MM-dd or Unix time, e.g. 1584697547. | string | optional | — |
| period | The aggregation period. See Supported Periods. | enum | optional | — |
| date_preset | Preset date range, such as lastweek or yesterday. The data transfer request fails if date_preset is set together with since or until. See Supported Date Presets. | enum | optional | — |
| incremental | Set to true to generate config_diff with embulk run -c config.diff. | bool | optional | false |
| last_in_months | Number of months of Video insights to retrieve, counting back from the current date. Specifying a range of more than three months may cause the job to fail due to Facebook API limitations. | integer | optional | 3 (months) |
| skip_error_post | Skip errors when importing Post insights. | bool | optional | true |
| retry_limit | Number of error retries before the connector gives up. | integer | optional | 7 |
| retry_initial_wait_millis | Initial wait, in milliseconds, for exponential backoff. | integer | optional | 500 (0.5 second) |
| max_retry_wait_millis | Maximum wait, in milliseconds, for each retry. | integer | optional | 300000 (5 minutes) |
| connect_timeout_millis | HTTP connect timeout in milliseconds. | integer | optional | 180000 (3 minutes) |
| idle_timeout_millis | HTTP idle timeout in milliseconds. | integer | optional | 300000 (5 minutes) |
| conversation_folders | Conversation folders to import: inbox, page_done, other, pending, and spam. Example: [{"value":"inbox"}] | array | optional | all folders |
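
The time-range rules in this table can be checked before a job is submitted. The sketch below is a hypothetical pre-flight check, not part of the connector or TD Toolbelt: `parse_bound` and `check_time_options` are illustrative names, the config is passed as a plain dictionary, and treating a yyyy-MM-dd value as midnight UTC is an assumption. The check that since must not be later than until is an extra sanity check added for illustration.

```python
from datetime import datetime, timezone


def parse_bound(value):
    """Parse a since/until value given as yyyy-MM-dd or Unix time in seconds."""
    text = str(value).strip()
    if text.isdigit():
        return datetime.fromtimestamp(int(text), tz=timezone.utc)
    # Assumption: a plain date is interpreted as midnight UTC.
    return datetime.strptime(text, "%Y-%m-%d").replace(tzinfo=timezone.utc)


def check_time_options(config):
    """Return a list of problems found in the time-related options."""
    problems = []
    has_range = "since" in config or "until" in config
    if "date_preset" in config and has_range:
        problems.append("date_preset cannot be combined with since/until")
    if "since" in config and "until" in config:
        if parse_bound(config["since"]) > parse_bound(config["until"]):
            problems.append("since is later than until")
    return problems


print(check_time_options({"since": "2017-01-01", "until": "2017-01-31"}))
# []
print(check_time_options({"date_preset": "yesterday", "since": "2017-01-01"}))
# ['date_preset cannot be combined with since/until']
```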

Example of config.yml with incremental and Page data type

    in:
      type: "facebook_page_insights"
      access_token: "[your Facebook Page token]"
      page_id: [your Facebook Page ID]
      data_type: page
      page_metric_presets:
        - value: page_impressions
        - value: page_cta_clicks
        - value: page_user_demographics
        - value: page_views
        - value: page_engagement
      incremental: true
      since: 2017-01-01
      until: 2017-01-31
    out:
      mode: append

Post data type

    in:
      type: "facebook_page_insights"
      access_token: "[your Facebook Page token]"
      page_id: [your Facebook Page ID]
      data_type: post
      post_metric_presets:
        - value: page_post_impressions
        - value: page_post_engagement
        - value: page_post_reactions
        - value: page_video_posts
      since: 2017-01-01
      until: 2017-01-31
    out:
      mode: append

Video data type

    in:
      type: "facebook_page_insights"
      access_token: "[your Facebook Page token]"
      page_id: [your Facebook Page ID]
      data_type: video
      last_in_months: 3
    out:
      mode: append

You can preview data to be imported using the command td connector:preview.

    td connector:preview config.yml

Before you execute the load job, you must specify the database and table where you want to store the data.

Use td connector:issue to execute the job. The following are required: the database and table where your data will be stored, and the Data Connector configuration file.

    td connector:issue config.yml --database td_sample_db --table td_sample_table

It is recommended to specify the --time-column option because Treasure Data's storage is partitioned by time. You can also use the --time-column option to override auto-generated time values by specifying end_time as the time column (this applies only to data_type page). Data is accumulated daily, and end_time is the end of the day in the timezone of your Facebook Page, converted to UTC.

If your data doesn’t have a time column, you can add one using the add_time filter option. See details at add_time Filter Plugin for Integrations.

Finally, submit the load job. Depending on the data size, it may take a couple of hours. You must specify the database and table where your data will be stored.

    td connector:issue config.yml --database td_sample_db --table td_sample_table --time-column end_time

You can schedule periodic data connector execution for incremental Facebook Insights data. We configure our scheduler carefully to ensure high availability. This feature eliminates the need for a cron daemon in your local data center.

For the scheduled import, the Data Connector for Facebook Page Insights imports all of your data at the first run.

On the second and subsequent runs, the connector imports only newer data than the last load.

A new schedule can be created using the td connector:create command. The following are required: the schedule's name, the cron-style schedule, the database and table where your data will be stored, and the data connector configuration file.

    $ td connector:create \
        daily_import \
        "10 0 * * *" \
        td_sample_db \
        td_sample_table \
        config.yml

The cron parameter also accepts three special options: @hourly, @daily and @monthly.

By default, the schedule is set in the UTC timezone. You can set the schedule in a different timezone using the -t or --timezone option. Note that the --timezone option supports only extended timezone formats like 'Asia/Tokyo', 'America/Los_Angeles', etc. Timezone abbreviations like PST and CST are not supported and may lead to unexpected schedules.
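
Because an unsupported abbreviation can silently produce an unexpected schedule, it can help to check the timezone string before creating the schedule. The following is a rough sketch only: `looks_like_extended_timezone` is a hypothetical helper that tests for the Area/Location shape described above. It does not confirm that the zone actually exists or that Treasure Data accepts it.

```python
import re

# Heuristic: one or more "/"-separated parts, as in Asia/Tokyo or
# America/Argentina/Buenos_Aires. Bare abbreviations have no "/".
_AREA_LOCATION = re.compile(r"^[A-Za-z_]+(/[A-Za-z0-9_+\-]+)+$")


def looks_like_extended_timezone(name):
    """Return True if name has the Area/Location shape (shape check only)."""
    return bool(_AREA_LOCATION.match(name))


for candidate in ["Asia/Tokyo", "America/Los_Angeles", "PST", "CST"]:
    print(candidate, looks_like_extended_timezone(candidate))
# Asia/Tokyo True
# America/Los_Angeles True
# PST False
# CST False
```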

    2018-05-06 13:00:18.627 +0000 [WARN] (0047:task-0043): Time range does not reach, abort and will retry later. Start Date: '1525330800', End Date: '1528095600', Current Date: '2018-05-06'

This warning means that either Start Date or End Date is later than the current date, that is, a date in the future was specified. A possible cause is a cron interval that is shorter than the fetched time range, for example, a daily job that pulls monthly data.
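
The Start Date and End Date in this warning are Unix timestamps, so it is easier to see the problem after converting them to calendar dates. The sketch below does that with the values from the log line above; `to_utc_date` is a hypothetical helper, and comparing UTC dates is a simplification of whatever comparison the connector performs.

```python
from datetime import date, datetime, timezone


def to_utc_date(epoch_seconds):
    """Convert a Unix timestamp (seconds, as int or str) to a UTC date."""
    return datetime.fromtimestamp(int(epoch_seconds), tz=timezone.utc).date()


start = to_utc_date("1525330800")
end = to_utc_date("1528095600")
current = date(2018, 5, 6)

print(start)          # 2018-05-03
print(end)            # 2018-06-04
print(end > current)  # True: the End Date is still in the future
```

Here the range runs from 2018-05-03 to 2018-06-04, while the job ran on 2018-05-06, so the requested range had not yet elapsed.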

You need an initial load (or multiple loads, due to the limitation of a 3-month time range for each load). Let’s say today is 2018-05-09, and you need to load data since 2018-01-01:

| Jobs | Start Date | End Date |
|---|---|---|
| First job (one-time) | 2018-01-01 | 2018-04-01 |
| Second job (one-time) | 2018-04-01 | 2018-05-08 |
| [Incremental] Daily job | 2018-05-08 | 2018-05-09 |
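
The one-time jobs in this table can be derived mechanically by cutting the backfill range into windows of at most three months. The following is a minimal sketch of that split; `add_months` and `split_range` are hypothetical helpers, and stepping by calendar months is an assumption about how the 3-month limit is measured.

```python
import calendar
from datetime import date


def add_months(d, months):
    """Return d shifted by a number of calendar months, clamping the day."""
    index = d.year * 12 + (d.month - 1) + months
    year, month0 = divmod(index, 12)
    last_day = calendar.monthrange(year, month0 + 1)[1]
    return date(year, month0 + 1, min(d.day, last_day))


def split_range(start, end, max_months=3):
    """Split [start, end) into consecutive windows of at most max_months."""
    windows = []
    current = start
    while current < end:
        nxt = min(add_months(current, max_months), end)
        windows.append((current, nxt))
        current = nxt
    return windows


for since, until in split_range(date(2018, 1, 1), date(2018, 5, 8)):
    print(since, until)
# 2018-01-01 2018-04-01
# 2018-04-01 2018-05-08
```

Each printed pair corresponds to the since and until values of one one-time job; the incremental daily job then continues from the last End Date.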

Starting from version v0.2.0, you get insights for all Posts, from the very first post of the page until the End Date value. With this change, the date setting returns Insights data from the Start Date to the End Date for all available Posts. In version v0.1.16, by contrast, you could only get insights data from the Start Date to the End Date for Posts created within that date range.

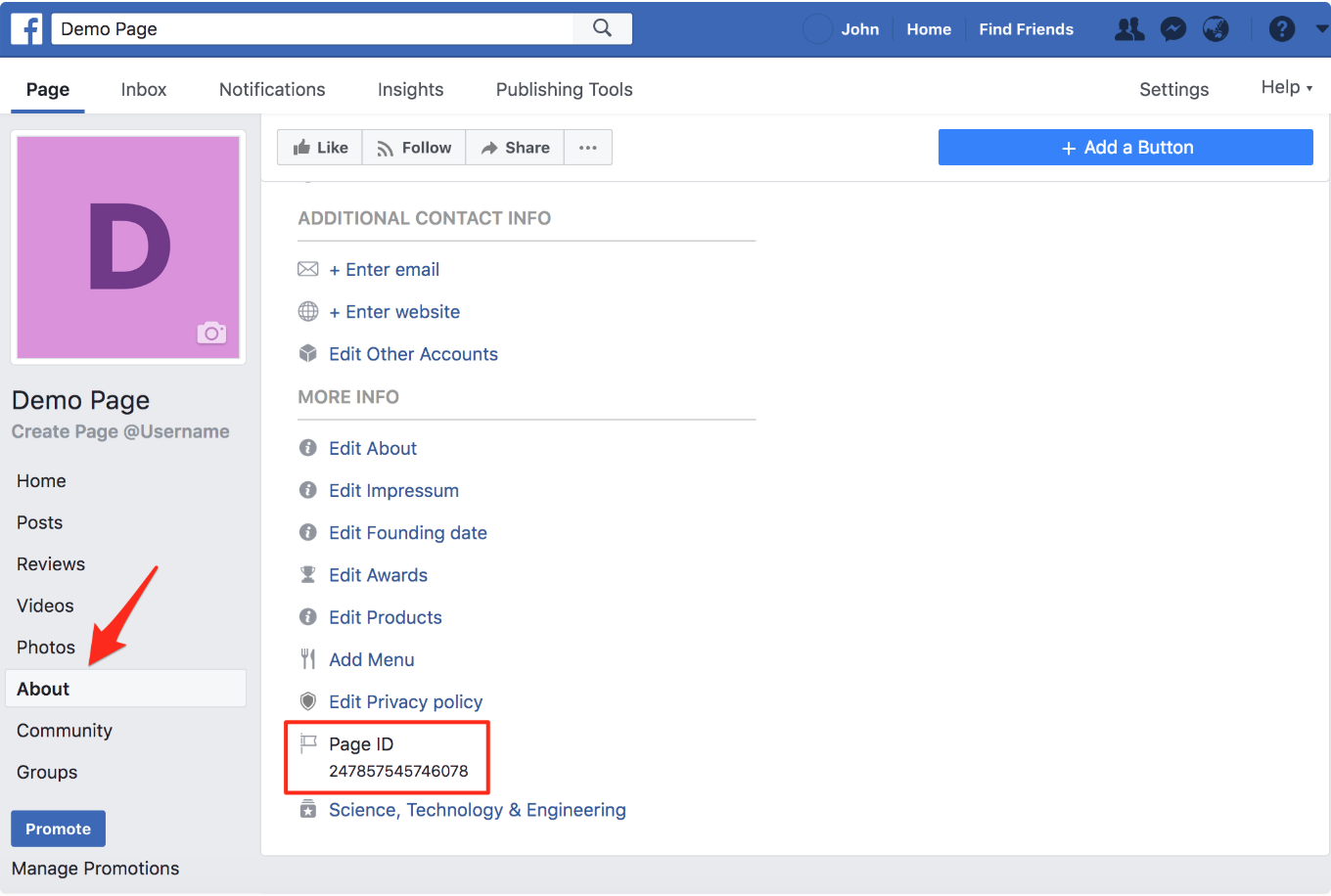

Instead of using Page's username when creating the connector, you can use Page ID. To find the page ID, on your Facebook page, select the About menu and scroll down to the Page ID, as shown: