Update (2025-03-10): Meta has announced that it is deprecating the Offline Conversions API (OCAPI), which the current Facebook Offline Conversions app uses. In addition, Meta will disable the ability to create new offline event sets (OES). Meta anticipates that the OCAPI will be discontinued on May 1, 2025. Ref. https://www.facebook.com/business/help/1835755323554976

In preparation for this change, Treasure Data has added support for offline conversions in the Meta Conversions API connector:

- Facebook Conversions API Export Integration

Please review the differences in field names between the two APIs:

- /int/migration-guide-to-facebook-conversion-connector

Users can switch to the connector today to prepare for the upcoming changes to the Facebook Offline Conversions app.

You can use the Facebook Offline Conversions integration to send job results (in the form of offline event data) from Treasure Data directly to Facebook. This lets you measure how much your Facebook ads lead to real-world outcomes, such as purchases in your stores, phone orders, and bookings.

- Basic knowledge of Treasure Data.

- Basic knowledge of Facebook Offline Conversions and Facebook Offline Events.

- To upload event data, you need access to one of the following on Facebook:

  - Business Manager admin

  - Admin system user who created the offline event set

  - Admin on the ad_account connected to the offline event set

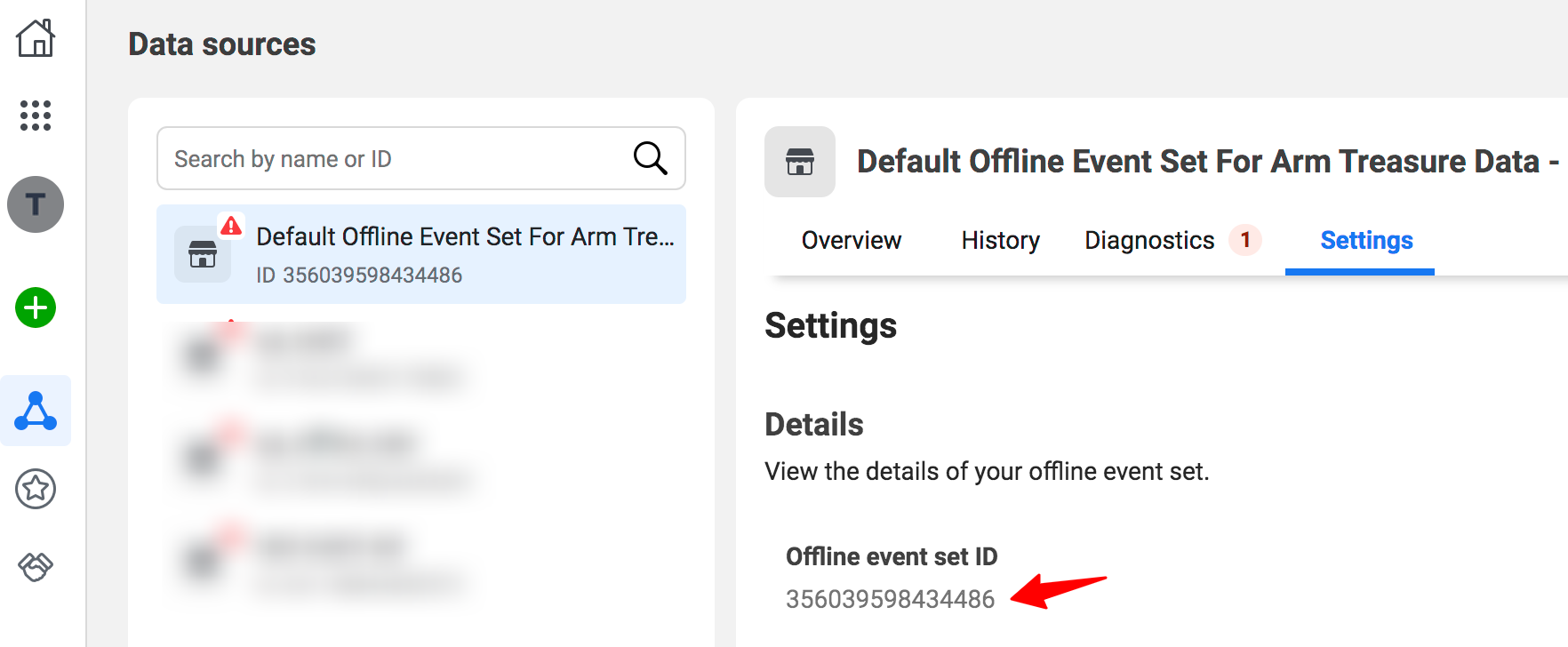

- Open the Business Manager dashboard and select Event Manager.

- Select an Event Set.

- Select Settings. The Offline Event Set ID is displayed.

In Treasure Data, you must create and configure the data connection to be used during export prior to running your query. As part of the data connection, you provide authentication to access the integration.

- Open TD Console.

- Navigate to Integrations Hub > Catalog.

- Search for and select Facebook Offline Conversions.

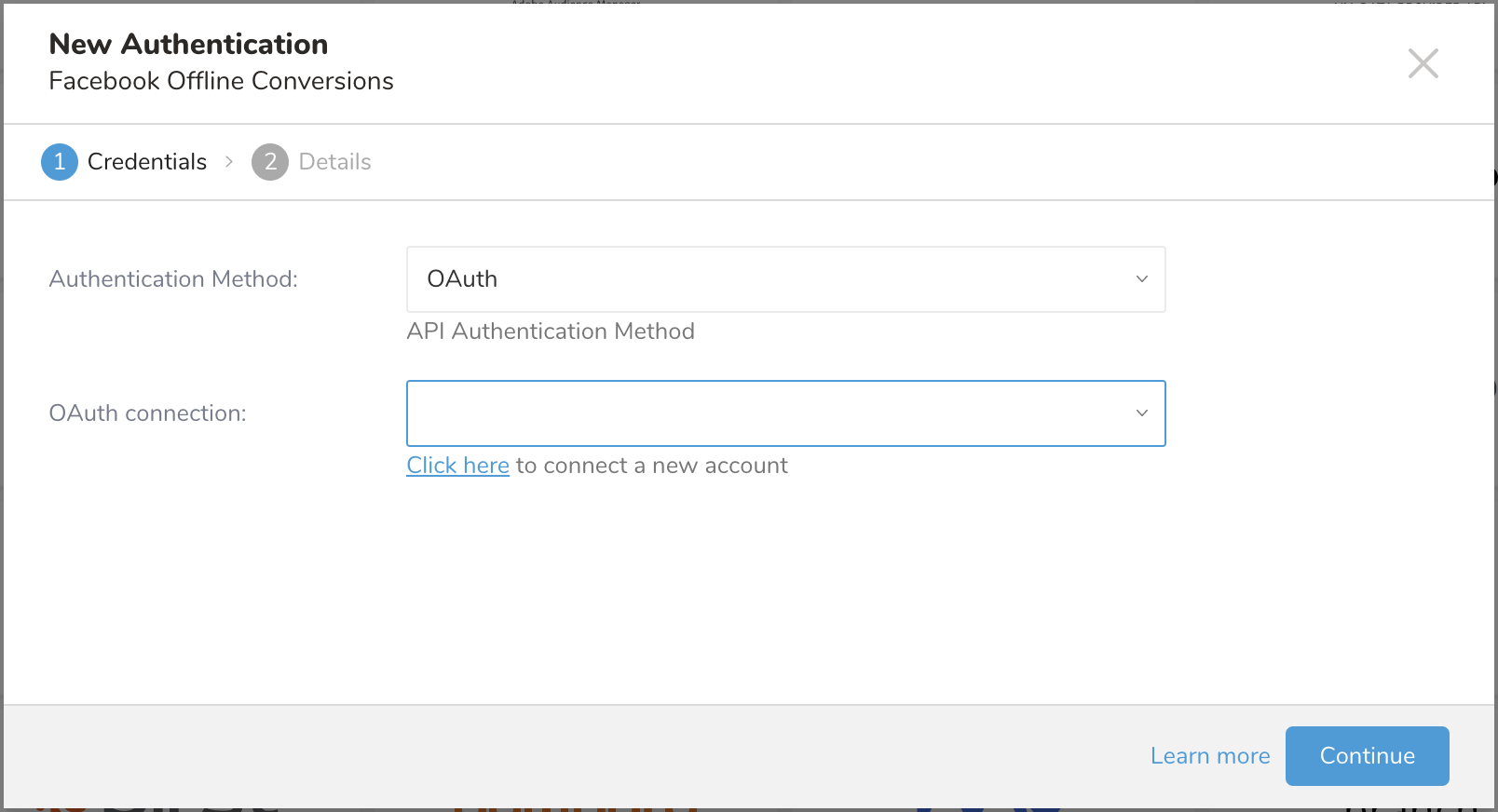

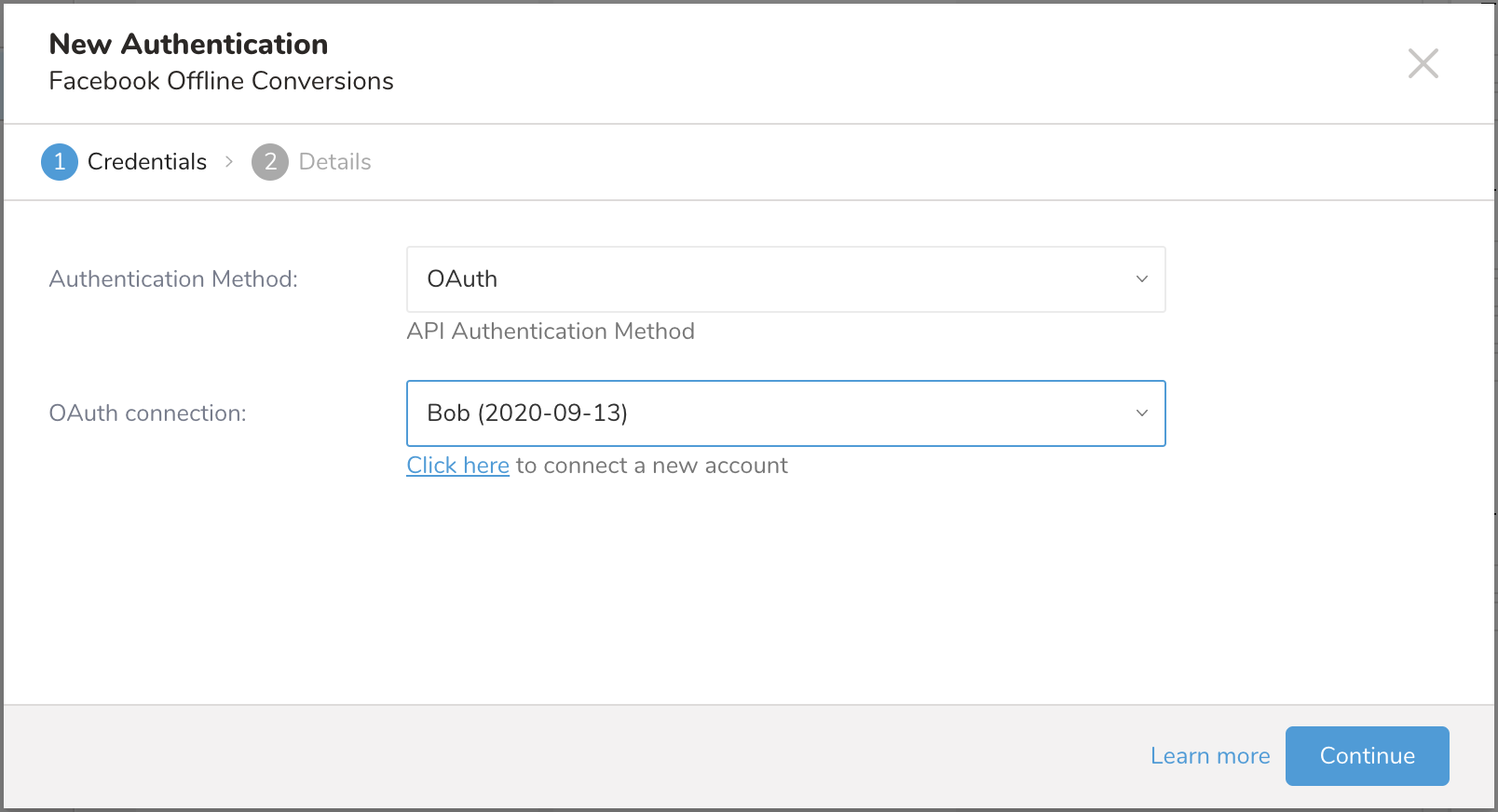

- When the dialog opens, choose your authentication method. The available methods are described in the following section.

- Enter a name for your connection.

- Select Done.

The method you use to authenticate Treasure Data with Facebook affects the steps you take to enable the data connector to access Facebook. You can choose to authenticate in the following ways:

- Access Token

- OAuth

To authenticate using Access Token, you need an access token and a client secret. A long-lived user access token or a system user access token is recommended, so you may need to create one if you do not already have it.

You also need to assign the ads_management permission to your access token.

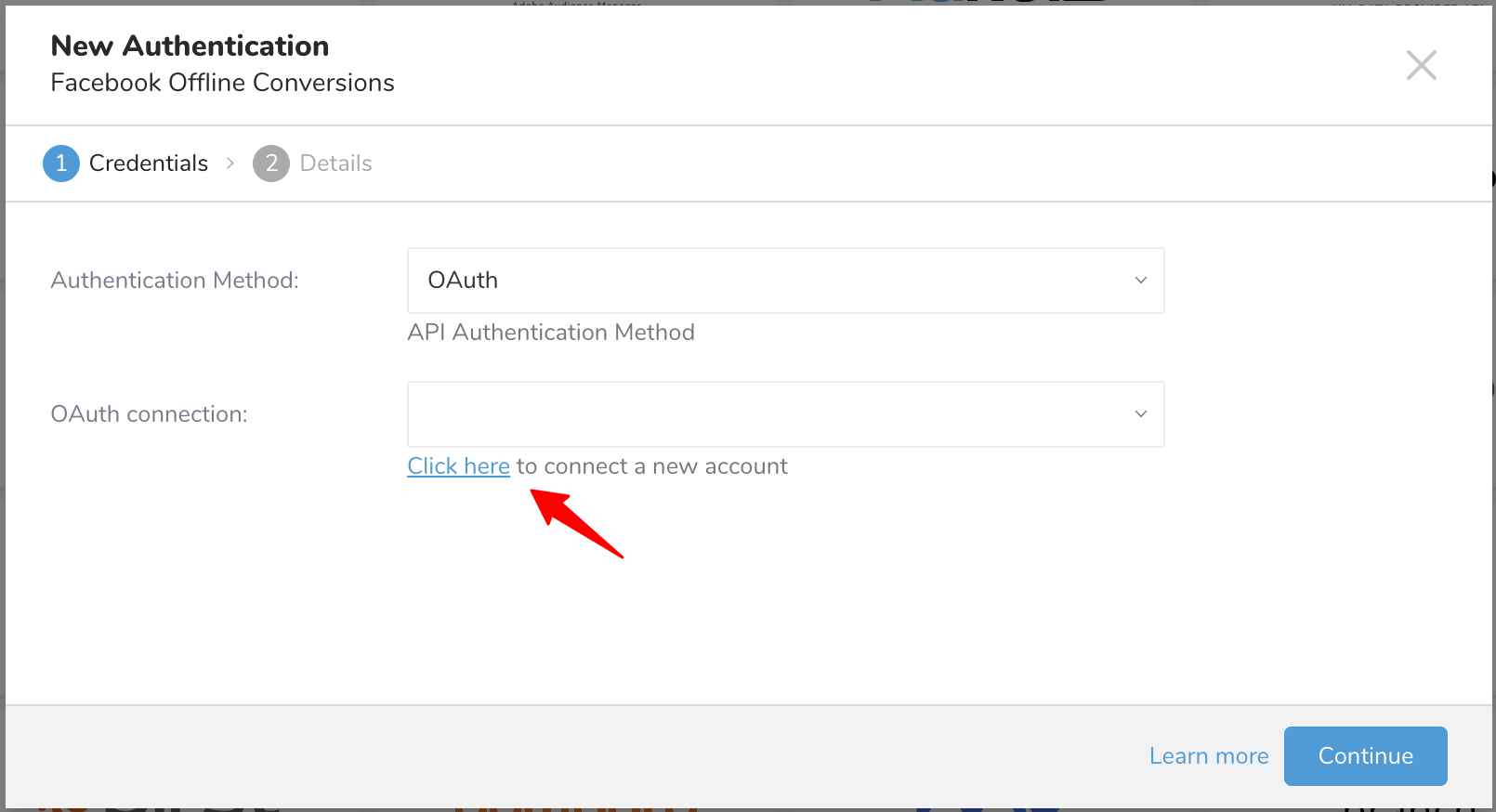

Using OAuth is the most common authentication method. Authentication requires that you manually connect your Treasure Data account to your Facebook Ads account. To authenticate, complete the following procedure:

- Select Click here to connect a new account. You are redirected to Facebook to log in if you haven't logged in yet, or to the consent page to grant access to Treasure Data.

- Log into your Facebook account in the popup window and grant access to the Treasure Data app. You will be redirected back to TD Console.

- Repeat the first step (Create a new connection) and choose your new OAuth connection.

- Name your new Facebook Offline Conversions connection.

- Select Done.

In this step, you create or reuse a query. In the query, you configure the data connection.

You need to define the column mapping in the query. The columns in the query represent Offline Event data to be uploaded to Facebook.

Additionally, the columns listed in match_keys and their data are normalized and hashed before being sent to Facebook. Learn more about hashing and normalization requirements. Your query needs at least one match_keys column to configure export results.
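The connector performs normalization and hashing for you, so query results should contain the raw values. For intuition only, the following Python sketch shows the kind of transformation involved, assuming the commonly documented Meta rules (trim and lowercase emails, keep only digits in phone numbers, then apply SHA-256). The function names are hypothetical, and the connector's exact behavior may differ.

```python
import hashlib
import re


def normalize_email(raw: str) -> str:
    """Trim surrounding whitespace and lowercase the address (assumed rule)."""
    return raw.strip().lower()


def normalize_phone(raw: str) -> str:
    """Keep digits only, dropping separators such as '-', '(' and ')' (assumed rule)."""
    return re.sub(r"\D", "", raw)


def sha256_hex(value: str) -> str:
    """Return the hex-encoded SHA-256 digest of an already normalized value."""
    return hashlib.sha256(value.encode("utf-8")).hexdigest()


if __name__ == "__main__":
    # Example values taken from the column table in this document.
    print(sha256_hex(normalize_email("  Foo@FB.com ")))
    print(sha256_hex(normalize_phone("1-202-555-0192")))
```

Each digest is a 64-character hexadecimal string. Two inputs that differ only in case or surrounding whitespace produce the same digest after normalization, which is why normalization has to happen before hashing.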

| Column name | Data type | Match Key | Required | Multiple | Example |
|---|---|---|---|---|---|
| email | string | Yes | No | Yes | foo@fb.com |
| phone | string | Yes | No | Yes | 1-202-555-0192 |
| gen | string | Yes | No | No | M |
| doby | string | Yes | No | No | 1990 |
| dobm | string | Yes | No | No | 10 |
| dobd | string | Yes | No | No | 20 |
| ln | string | Yes | No | No | Bar |
| fn | string | Yes | No | No | Foo |
| fi | string | Yes | No | No | L |
| ct | string | Yes | No | No | Long Beach |
| st | string | Yes | No | No | California |
| zip | string | Yes | No | No | 90899 |
| country | string | Yes | No | No | US |
| madid | string | Yes | No | No | aece52e7-03ee-455a-b3c4-e57283 |
| extern_id | string | Yes | No | No | |
| lead_id | string | Yes | No | No | 12399829922 |
| event_time | long | No | Yes | No | 1598531676 |
| event_name | string | No | Yes | No | Purchase |
| currency | string | No | Yes | No | USD |
| value | double | No | Yes | No | 100.00 |
| content_type | string | No | No | No | |
| contents | json string | No | No | Yes | {"id": "b20", "quantity": 100} |
| custom_data | json string | No | No | No | {"a":12, "b":"c"} |
| order_id | string | No | No | No | OD123122 |
| item_number | string | No | No | No | |
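As an illustration of the value formats in the table, the following Python sketch converts a timezone-aware datetime into the Unix epoch seconds used by event_time and serializes contents and custom_data as JSON strings. The helper name build_event_row and the sample values are hypothetical; how you populate the source table depends on your own pipeline.

```python
import json
from datetime import datetime, timezone


def build_event_row(email, event_name, occurred_at, value, currency,
                    contents=None, custom_data=None):
    """Return a dict keyed by column names from the table (hypothetical helper)."""
    row = {
        "email": email,
        "event_name": event_name,
        # event_time is a long: whole seconds since the Unix epoch.
        "event_time": int(occurred_at.timestamp()),
        "value": float(value),
        "currency": currency,
    }
    if contents is not None:
        # contents is carried as a JSON string, not as a nested structure.
        row["contents"] = json.dumps(contents)
    if custom_data is not None:
        row["custom_data"] = json.dumps(custom_data)
    return row


if __name__ == "__main__":
    row = build_event_row(
        "foo@fb.com", "Purchase",
        datetime(2020, 8, 27, 12, 34, 36, tzinfo=timezone.utc),
        100.00, "USD",
        contents={"id": "b20", "quantity": 100},
        custom_data={"a": 12, "b": "c"},
    )
    print(row)
```

Passing a timezone-aware datetime avoids the ambiguity of naive local times when computing the epoch value.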

To include Data Processing Options, specify the following column mappings in your query.

| Column name | Data Type | Required | Multiple | Example |
|---|---|---|---|---|
| data_processing_options | string | No | No | "LDU" |
| data_processing_options_country | long | No | No | 1 |
| data_processing_options_state | long | No | No | 1000 |

To query multiple values with the same name, you specify the name multiple times in the query. For example:

    SELECT home_email as email, work_email as email, first_name as fn, last_name as ln
    FROM my_table

- Open the TD Console.

- Navigate to Data Workbench > Queries.

- Select the query that you plan to use to export data.

- Select Export Results, located at the top of your query editor.

- The Choose Integration dialog opens.

- You have two options when selecting a connection to export the results: use an existing connection or create a new one first.

- Type the connection name in the search box to filter.

- Select your connection.

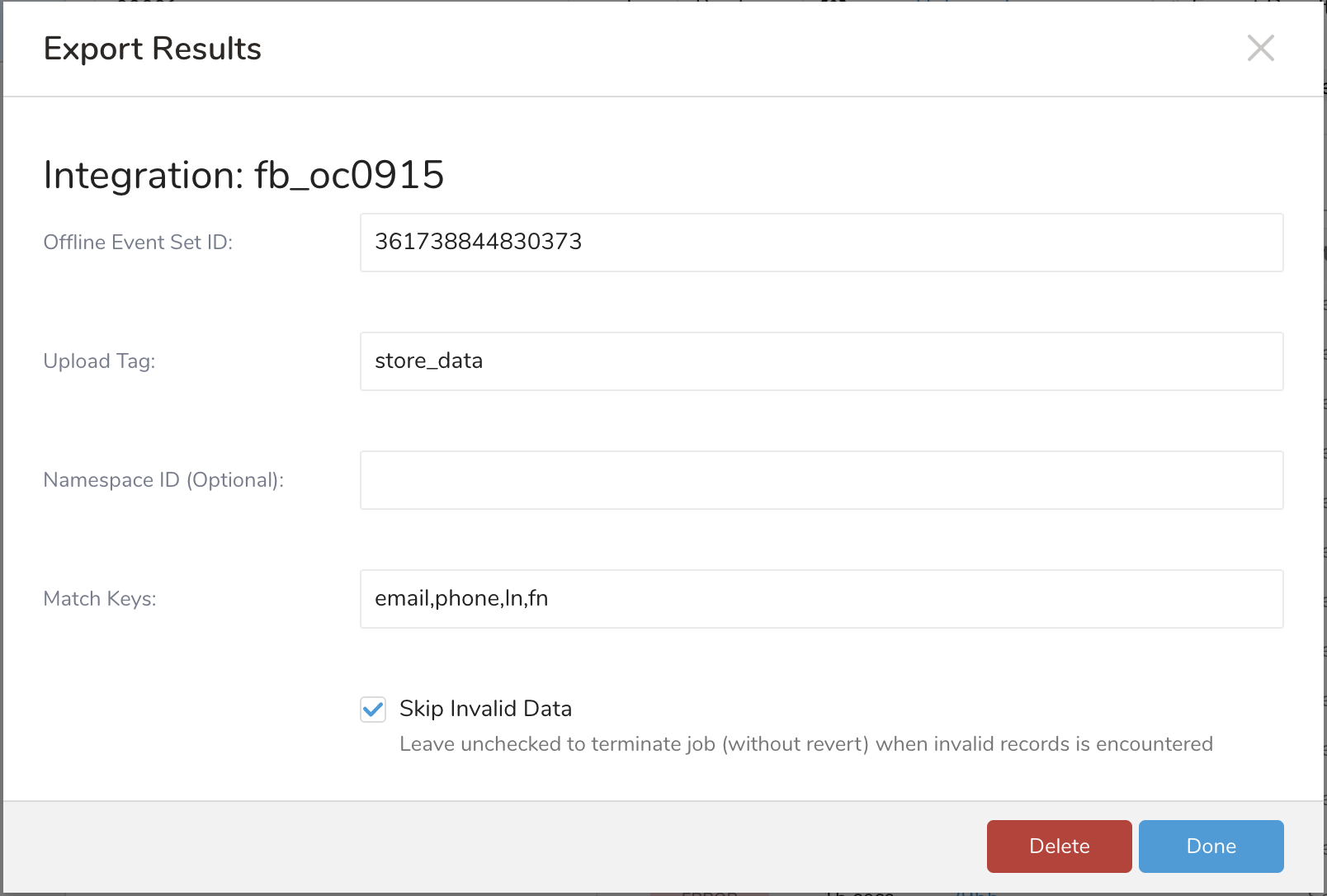

- Set the following parameters.

| Parameter | Description |
|---|---|
| Offline Event Set ID (required) | Facebook offline event set ID. See the Appendix for the Offline Event Set ID. |
| Upload Tag (required) | Used to track your event uploads. |
| Namespace ID (optional) | Scope used to resolve extern_id or tpid. It can be another data set or data partner ID. Example: 12345 |
| Match Keys (required) | The identifying information used to match people on Facebook. The value is a comma-separated string. Example: email,phone,fn,ln,st,country… |
| Skip Invalid Data (optional) | Used to terminate a job (without reverting) when invalid records are encountered, for example, when a record is missing required columns such as event_name or event_time. |
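Before exporting, it can help to check a sample of rows for the kind of problems that Skip Invalid Data is concerned with. The following Python sketch is not part of the connector. It parses a Match Keys string and reports rows that are missing required columns or that carry no match key value. The column sets are copied from the column table in this document, and the function names are hypothetical.

```python
MATCH_KEY_COLUMNS = {
    "email", "phone", "gen", "doby", "dobm", "dobd", "ln", "fn", "fi",
    "ct", "st", "zip", "country", "madid", "extern_id", "lead_id",
}
REQUIRED_COLUMNS = ("event_time", "event_name", "currency", "value")


def parse_match_keys(setting: str) -> list:
    """Split the comma-separated Match Keys setting and reject unknown names."""
    keys = [k.strip() for k in setting.split(",") if k.strip()]
    unknown = [k for k in keys if k not in MATCH_KEY_COLUMNS]
    if not keys or unknown:
        raise ValueError(f"invalid match keys: {unknown or 'none given'}")
    return keys


def row_problems(row: dict, match_keys: list) -> list:
    """Return human-readable problems for one row; an empty list means none found."""
    problems = [
        f"missing {col}" for col in REQUIRED_COLUMNS
        if row.get(col) in (None, "")
    ]
    # At least one of the configured match keys must carry a value.
    if not any(row.get(key) not in (None, "") for key in match_keys):
        problems.append("no match key value")
    return problems


if __name__ == "__main__":
    keys = parse_match_keys("email,phone,fn,ln")
    sample = {"email": "", "event_name": "Purchase"}
    print(row_problems(sample, keys))
```

A check like this only approximates the validation applied during export; treat the job log as the authority on which records were rejected.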

Here is a sample configuration:

From Treasure Data, run the following query with export results into a connection for Facebook Offline Conversions:

- Regular SELECT query from a table

      SELECT
        an_email_column AS EMAIL,
        a_phone_column AS PHONE,
        an_event_time_column AS EVENT_TIME,
        an_event_name_column AS EVENT_NAME,
        a_double_column AS VALUE,
        a_currency_column AS CURRENCY
      FROM your_table;

- Query multiple email and phone columns for multiple values

      SELECT
        'elizabetho@fb.com' as email,
        'olsene@fb.com' as email,
        '1-(650)-561-5622' as phone,
        '1-(650)-782-5622' as phone,
        'Elizabeth' as fn,
        'Olsen' as ln,
        '94046' as zip,
        'Menlo Park' as ct,
        'US' as country,
        '1896' as doby,
        'Purchase' as event_name,
        1598531676 as event_time,
        150.01 as value,
        'USD' as currency

- Query with multiple contents

      SELECT
        'elizabetho@fb.com' as email,
        'Purchase' as event_name,
        1598531676 as event_time,
        150.01 as value,
        'USD' as currency,
        '{"id": "b20", "quantity": 100}' as contents,
        '{"id": "b21", "quantity": 200}' as contents

- Query with a custom_data column

      SELECT
        'elizabetho@fb.com' as email,
        'Purchase' as event_name,
        1598531676 as event_time,
        150.01 as value,
        'USD' as currency,
        '{"a":12, "b":"c"}' as custom_data

You can use Scheduled Jobs with Result Export to periodically write the output result to a target destination that you specify.

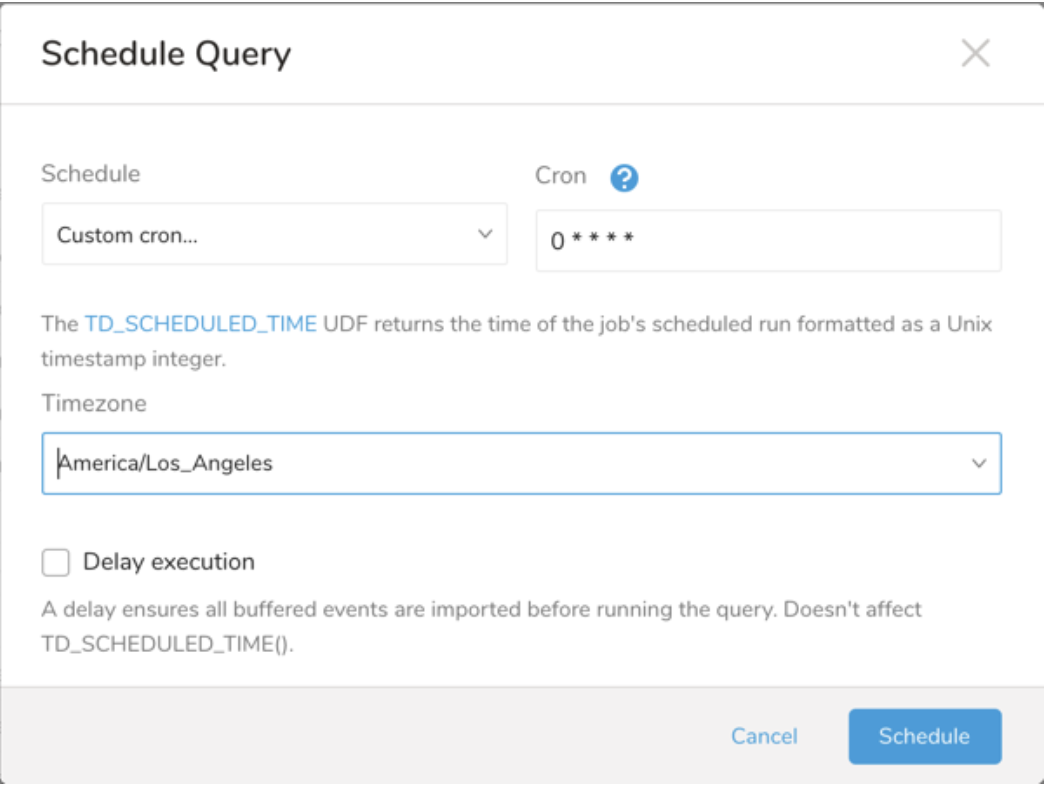

Treasure Data's scheduler feature supports periodic query execution to achieve high availability.

When two fields of a cron schedule conflict, the field that requests more frequent execution is followed and the other field is ignored.

For example, if the cron schedule is '0 0 1 * 1', then the 'day of month' specification and 'day of week' are discordant because the former specification requires it to run every first day of each month at midnight (00:00), while the latter specification requires it to run every Monday at midnight (00:00). The latter specification is followed.

- Navigate to Data Workbench > Queries.

- Create a new query or select an existing query.

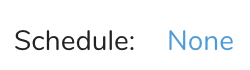

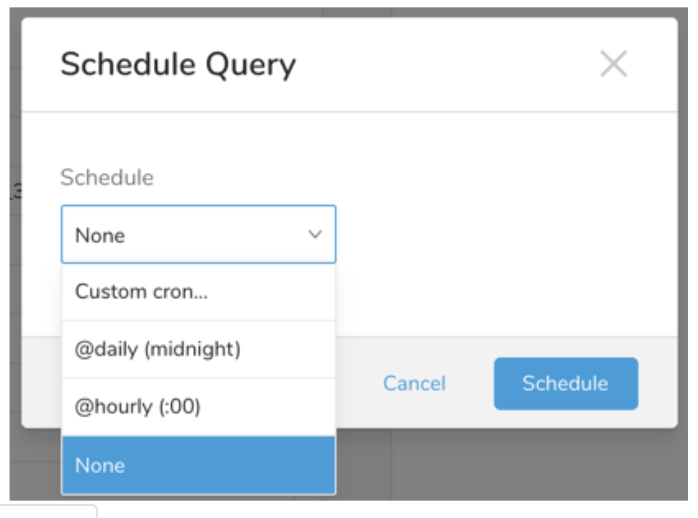

- Next to Schedule, select None.

- In the drop-down, select one of the following schedule options:

| Drop-down Value | Description |
|---|---|
| Custom cron... | Review Custom cron... details. |
| @daily (midnight) | Run once a day at midnight (00:00 am) in the specified time zone. |
| @hourly (:00) | Run every hour at 00 minutes. |
| None | No schedule. |

| Cron Value | Description |
|---|---|
| 0 * * * * | Run once an hour. |
| 0 0 * * * | Run once a day at midnight. |
| 0 0 1 * * | Run once a month at midnight on the morning of the first day of the month. |
| "" | Create a job that has no scheduled run time. |

    *    *    *    *    *
    -    -    -    -    -
    |    |    |    |    |
    |    |    |    |    +----- day of week (0 - 6) (Sunday=0)
    |    |    |    +---------- month (1 - 12)
    |    |    +--------------- day of month (1 - 31)
    |    +-------------------- hour (0 - 23)
    +------------------------- min (0 - 59)

The following named entries can be used:

- Day of Week: sun, mon, tue, wed, thu, fri, sat.

- Month: jan, feb, mar, apr, may, jun, jul, aug, sep, oct, nov, dec.

A single space is required between each field. The values for each field can be composed of:

| Field Value | Example | Example Description |
|---|---|---|
| A single value, within the limits displayed above for each field. | | |
| A wildcard '*' to indicate no restriction based on the field. | '0 0 1 * *' | Configures the schedule to run at midnight (00:00) on the first day of each month. |
| A range '2-5', indicating the range of accepted values for the field. | '0 0 1-10 * *' | Configures the schedule to run at midnight (00:00) on the first 10 days of each month. |
| A list of comma-separated values '2,3,4,5', indicating the list of accepted values for the field. | '0 0 1,11,21 * *' | Configures the schedule to run at midnight (00:00) every 1st, 11th, and 21st day of each month. |
| A periodicity indicator '*/5' to express how often based on the field's valid range of values a schedule is allowed to run. | '30 */2 1 * *' | Configures the schedule to run on the 1st of every month, every 2 hours starting at 00:30. '0 0 */5 * *' configures the schedule to run at midnight (00:00) every 5 days starting on the 5th of each month. |
| A comma-separated list of any of the above except the '*' wildcard is also supported '2,*/5,8-10'. | '0 0 5,*/10,25 * *' | Configures the schedule to run at midnight (00:00) every 5th, 10th, 20th, and 25th day of each month. |
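To make the field forms in the table concrete, the following Python sketch expands a single cron field into the values it accepts. It is one possible reading of the table, not the scheduler's implementation. In particular, the step rule (a step such as '*/5' matches values divisible by 5) is an assumption inferred from the example descriptions, and named entries such as mon or jan are not handled. The function name expand_field is hypothetical.

```python
def expand_field(expr: str, low: int, high: int) -> list:
    """Expand one cron field into the sorted list of values it accepts."""
    values = set()
    for part in expr.split(","):
        if part == "*":
            # Wildcard: no restriction on this field.
            values.update(range(low, high + 1))
        elif part.startswith("*/"):
            step = int(part[2:])
            if step <= 0:
                raise ValueError(f"step must be positive: {part}")
            # Assumption: a step matches the values divisible by it.
            values.update(v for v in range(low, high + 1) if v % step == 0)
        elif "-" in part:
            start, end = (int(p) for p in part.split("-"))
            values.update(range(start, end + 1))
        else:
            values.add(int(part))
    out_of_range = sorted(v for v in values if not low <= v <= high)
    if out_of_range:
        raise ValueError(f"values outside {low}-{high}: {out_of_range}")
    return sorted(values)


if __name__ == "__main__":
    print(expand_field("1-10", 1, 31))     # day-of-month range
    print(expand_field("1,11,21", 1, 31))  # day-of-month list
    print(expand_field("*/2", 0, 23))      # every second hour
```

Expanding each of the five fields this way gives the set of minutes, hours, days, and months on which a schedule is eligible to run.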

- (Optional) You can delay the start time of a query by enabling Delay execution.

Within Treasure Workflow, you can specify the use of this data connector to export data.

    timezone: UTC

    _export:
      td:
        database: sample_datasets

    +td-result-into-target:
      td>: queries/sample.sql
      result_connection: facebook_offline_conversions
      result_settings:
        event_set_id: 361738844830373
        upload_tag: purchase_event_upload
        match_keys: email,phone,ln,fn

Learn about Exporting Data with Parameters for more information on using data connectors in a workflow to export data.