
The Dynalyst data connector enables you to import data from your JSON, TSV, and CSV files stored in your S3 buckets into Treasure Data's customer data platform.

Dynalyst uses AWS S3 for storage, so the import process is similar to importing data directly from AWS S3.

You can also use this connector to export data to Dynalyst. See Dynalyst Export Integration.

Before you run imports or query exports, you must create and authenticate a connection to Dynalyst.

- Review and complete the following sections in Dynalyst Import and Export Integration:
  - Prerequisites
  - Create a New Connection

- Search for your Dynalyst Authentication.

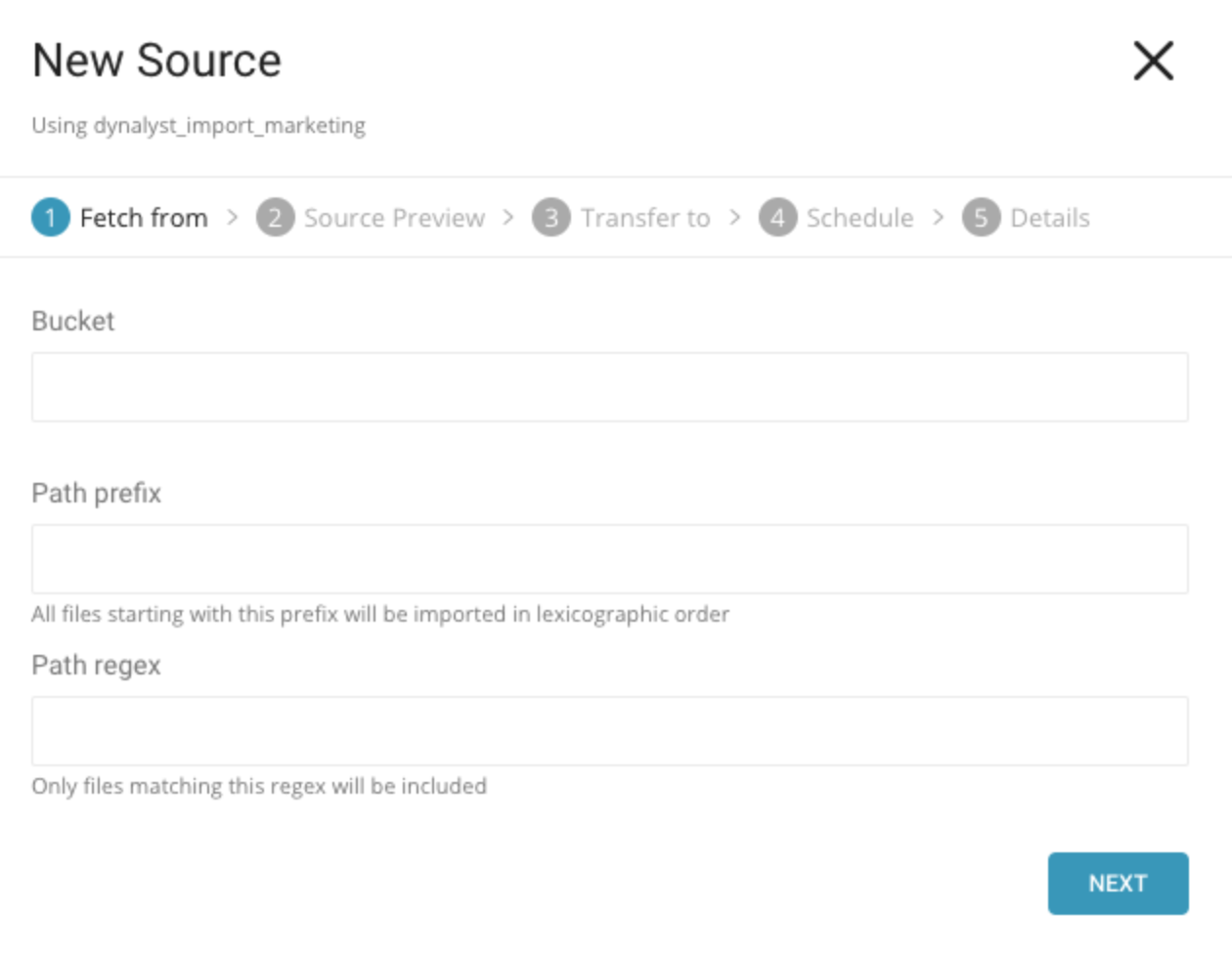

- Select New Source.

- In the New Source window, provide the name of the bucket that contains the files you want to import, and specify which files to import:
  - Path prefix: Imports all files whose paths start with the specified prefix (for example, /path/to/customers.csv).
  - Path regex: Imports all files whose paths match the specified regular expression.

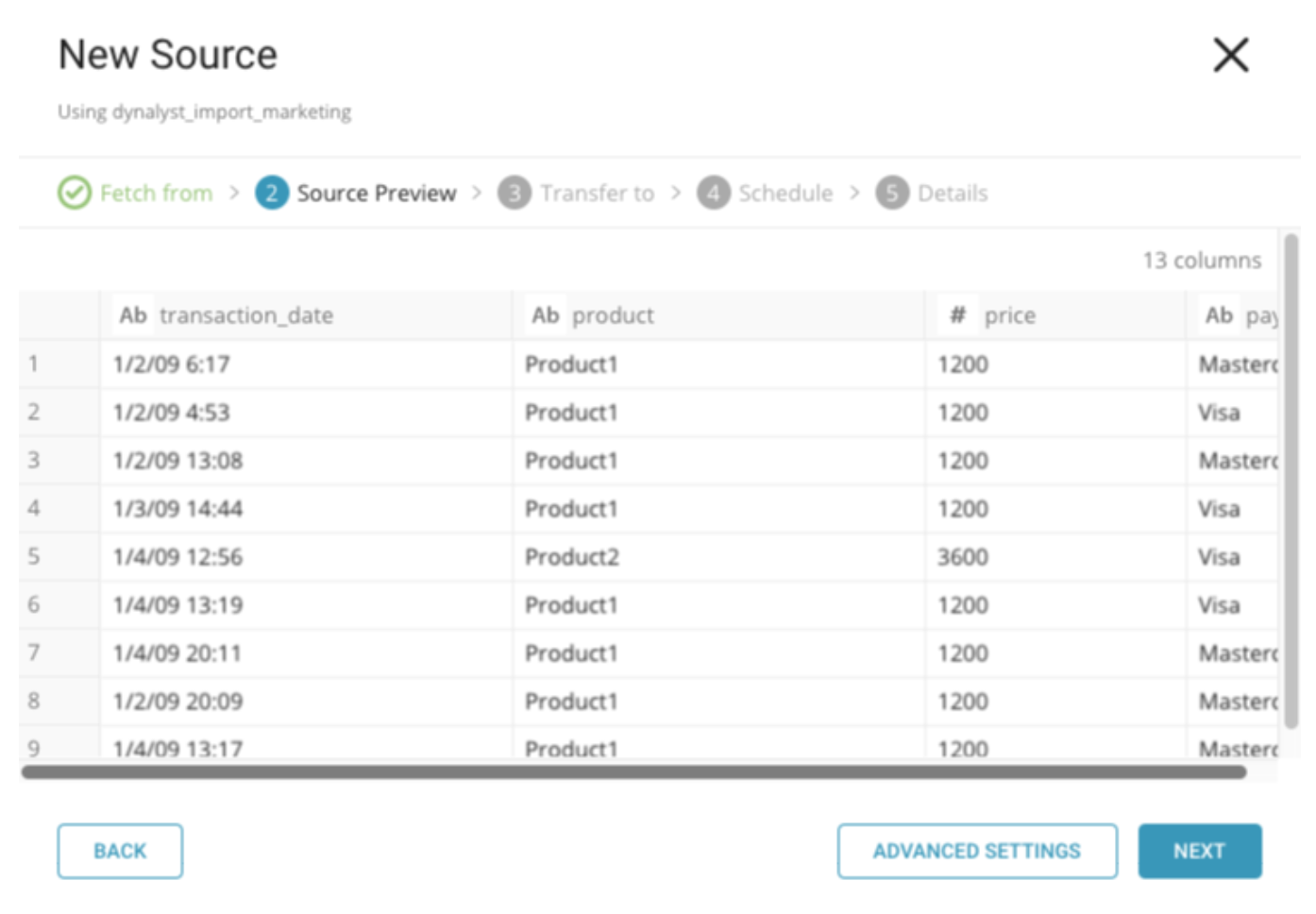

- In the source preview window, select advanced settings to make any adjustments needed for the import, such as changing the parser from CSV to JSON or setting line delimiters.

- Select Next.
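The path prefix and path regex options above decide which objects in the bucket get imported. The following is a minimal sketch of that selection, assuming a prefix is a plain "starts with" test and a regex is searched against the full object key; `select_keys` and the sample key list are hypothetical, and the connector's actual matching rules may differ.

```python
import re
from typing import Iterable, List, Optional


def select_keys(keys: Iterable[str], prefix: Optional[str] = None,
                regex: Optional[str] = None) -> List[str]:
    """Return the keys that would be imported (hypothetical helper).

    Assumption: path prefix is modeled with str.startswith() and path regex
    with re.search() on the whole key. With neither set, every key matches.
    """
    pattern = re.compile(regex) if regex else None
    selected = []
    for key in keys:
        if prefix is not None and not key.startswith(prefix):
            continue  # key is outside the configured prefix
        if pattern is not None and not pattern.search(key):
            continue  # key does not match the configured pattern
        selected.append(key)
    return selected


# Hypothetical bucket listing, for illustration only.
keys = [
    "path/to/customers.csv",
    "path/to/customers_2024.csv",
    "path/to/orders.json",
    "other/customers.csv",
]

print(select_keys(keys, prefix="path/to/customers"))
# ['path/to/customers.csv', 'path/to/customers_2024.csv']
print(select_keys(keys, regex=r"^path/to/.*\.json$"))
# ['path/to/orders.json']
```

A prefix is the simpler choice when files share a common folder or name stem; a regex is useful when the files you want are mixed with others under the same prefix.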

For data placement, select the target database and table for your data and specify how often the import should run. Under Storage, create a new database or select an existing one, and create a new table or select an existing one for the imported data.

Select a Database > Select an existing database or Create New Database, and optionally type a database name.

Select a Table > Select an existing table or Create New Table, and optionally type a table name.

Choose the method for importing the data.

- Append (default): Appends the imported data to the table. If the table does not exist, it is created.

- Always Replace: Replaces the entire content of an existing table with the imported data. If the table does not exist, a new table is created.

- Replace on New Data: Replaces the entire content of an existing table with the imported data only when there is new data.
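To make the difference between the three import methods concrete, here is a small in-memory model. It is a sketch, not Treasure Data's implementation: `apply_import` and the mode strings are hypothetical, and "new data" is assumed to mean that the run produced at least one row.

```python
from typing import Dict, List

Row = Dict[str, object]


def apply_import(tables: Dict[str, List[Row]], name: str,
                 new_rows: List[Row], mode: str) -> None:
    """Hypothetical model of the Append / Always Replace / Replace on New Data methods."""
    if mode == "append":
        # Create the table if it is missing, then add the new rows.
        tables.setdefault(name, []).extend(new_rows)
    elif mode == "always_replace":
        # Replace (or create) the whole table on every run, even with no rows.
        tables[name] = list(new_rows)
    elif mode == "replace_on_new_data":
        # Replace the table only when this run produced rows (assumption).
        if new_rows:
            tables[name] = list(new_rows)
    else:
        raise ValueError(f"unknown mode: {mode}")


tables = {"customers": [{"id": 1}]}
apply_import(tables, "customers", [{"id": 2}], "append")
print(tables["customers"])  # [{'id': 1}, {'id': 2}]
apply_import(tables, "customers", [], "replace_on_new_data")
print(tables["customers"])  # [{'id': 1}, {'id': 2}] (no new data, unchanged)
apply_import(tables, "customers", [{"id": 3}], "always_replace")
print(tables["customers"])  # [{'id': 3}]
```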

Select the Timestamp-based Partition Key column. By default, the partitioning time is the upload time, added by the add_time filter. To partition on a different value, specify a long or timestamp column from your data as the partitioning time.
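Treasure Data stores the partitioning time in the `time` column as Unix epoch seconds. The sketch below shows how each choice of partition key could map to that value, under stated assumptions: a long column already holds epoch seconds, a timestamp column is an ISO 8601 string, and a timestamp without an offset is treated as UTC. `partition_time` and the column names are hypothetical.

```python
from datetime import datetime, timezone
from typing import Dict, Optional


def partition_time(row: Dict[str, object], upload_time: datetime,
                   column: Optional[str] = None) -> int:
    """Return a Unix-seconds value for the `time` column (hypothetical helper).

    - No column: use the upload time, as the default add_time behavior does.
    - int value: assumed to already be Unix seconds (a "long" column).
    - otherwise: parsed as an ISO 8601 timestamp; naive values assumed UTC.
    """
    if column is None:
        return int(upload_time.timestamp())
    value = row[column]
    if isinstance(value, int):
        return value
    parsed = datetime.fromisoformat(str(value))
    if parsed.tzinfo is None:
        parsed = parsed.replace(tzinfo=timezone.utc)  # assumption: UTC
    return int(parsed.timestamp())


upload = datetime(2024, 1, 1, tzinfo=timezone.utc)
row = {"created_at": "2024-01-02T00:00:00+00:00", "signup_epoch": 1700000000}
print(partition_time(row, upload))                  # 1704067200
print(partition_time(row, upload, "created_at"))    # 1704153600
print(partition_time(row, upload, "signup_epoch"))  # 1700000000
```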

Select the Timezone for your data storage.

Under Schedule, you can choose when and how often you want to run this import.

- To run the import once:
  - Select Off.
  - Select the Scheduling Timezone.
  - Select Create & Run Now.

- To run the import on a recurring schedule:
  - Select On.
  - Select the Schedule. The UI provides four options: @hourly, @daily, @monthly, or custom cron.
  - Optionally, select Delay Transfer and add a delay to the execution time.
  - Select the Scheduling Timezone.
  - Select Create & Run Now.
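The preset schedules correspond to the standard cron shortcuts, and the scheduling timezone and Delay Transfer both shift when a run actually starts. The sketch below computes the next start time for the three presets, assuming the standard cron meanings (`0 * * * *`, `0 0 * * *`, `0 0 1 * *`) and a delay expressed in seconds; `next_run` is hypothetical, and custom cron expressions are not modeled.

```python
from datetime import datetime, timedelta, timezone

# Standard cron equivalents of the preset shortcuts (assumed to match the UI presets).
CRON_EQUIVALENTS = {"@hourly": "0 * * * *", "@daily": "0 0 * * *", "@monthly": "0 0 1 * *"}


def next_run(preset: str, now: datetime, delay_seconds: int = 0) -> datetime:
    """Next start after `now` for a preset, plus an optional delay (hypothetical).

    `now` must be an aware datetime already expressed in the scheduling timezone.
    """
    if preset == "@hourly":
        base = now.replace(minute=0, second=0, microsecond=0) + timedelta(hours=1)
    elif preset == "@daily":
        base = now.replace(hour=0, minute=0, second=0, microsecond=0) + timedelta(days=1)
    elif preset == "@monthly":
        first = now.replace(day=1, hour=0, minute=0, second=0, microsecond=0)
        # Roll over to the first day of the following month.
        if first.month == 12:
            base = first.replace(year=first.year + 1, month=1)
        else:
            base = first.replace(month=first.month + 1)
    else:
        raise ValueError("custom cron expressions are not modeled in this sketch")
    return base + timedelta(seconds=delay_seconds)


jst = timezone(timedelta(hours=9))  # example scheduling timezone (UTC+9)
now = datetime(2024, 12, 31, 22, 30, tzinfo=jst)
print(next_run("@hourly", now).isoformat())                    # 2024-12-31T23:00:00+09:00
print(next_run("@daily", now, delay_seconds=600).isoformat())  # 2025-01-01T00:10:00+09:00
```

Because the presets are anchored to wall-clock boundaries, the same schedule starts at different absolute times depending on the scheduling timezone you choose.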

After the transfer runs, you can view the imported data in Data Workbench > Databases.

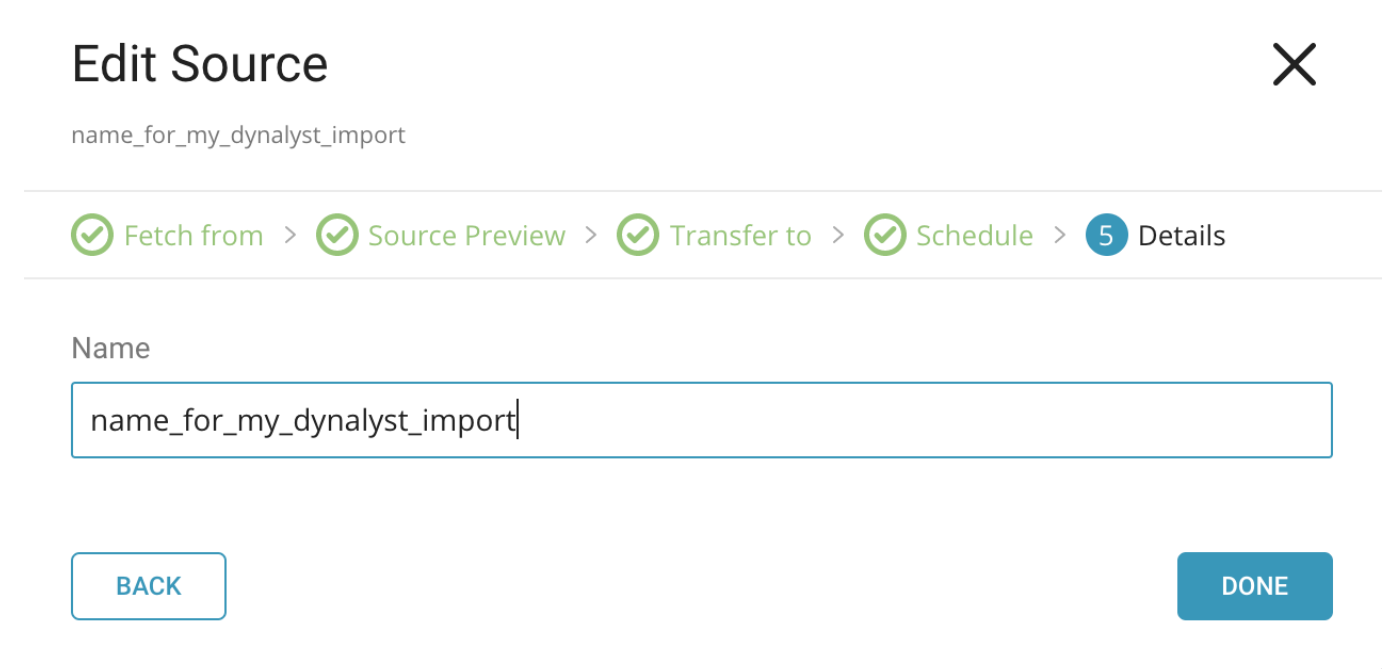

- Type a name for your source.

- Select Done. Your source job runs according to the schedule you specified.