
You can integrate Amplitude with Treasure Data to enhance your data, add more data points, and integrate the data Amplitude collects with all the other parts of your marketing stack.

To view sample workflows for importing data from Amplitude, see Treasure Boxes.

You can also use this same connector to create and export Amplitude events. See Amplitude Export Integration.

Integrating Amplitude with Treasure Data makes it easy to:

- Add new features to Amplitude. As an example, you can use Treasure Data to unify Amplitude behavior data with customer data from Salesforce, creating personally identifiable records with cross-device behavior tracking.

- Use data collected from Amplitude to make the rest of your marketing stack smarter. For example, you can use Treasure Data and Amplitude to reduce churn by setting up automated triggers to create segments based on usage and feeding them into custom nurture funnels in Marketo.

If you don’t have a Treasure Data account, contact us so we can get you set up.

Connecting to Amplitude using the Treasure Data Console is quick and easy. Alternatively, you can use a CLI to create connections.

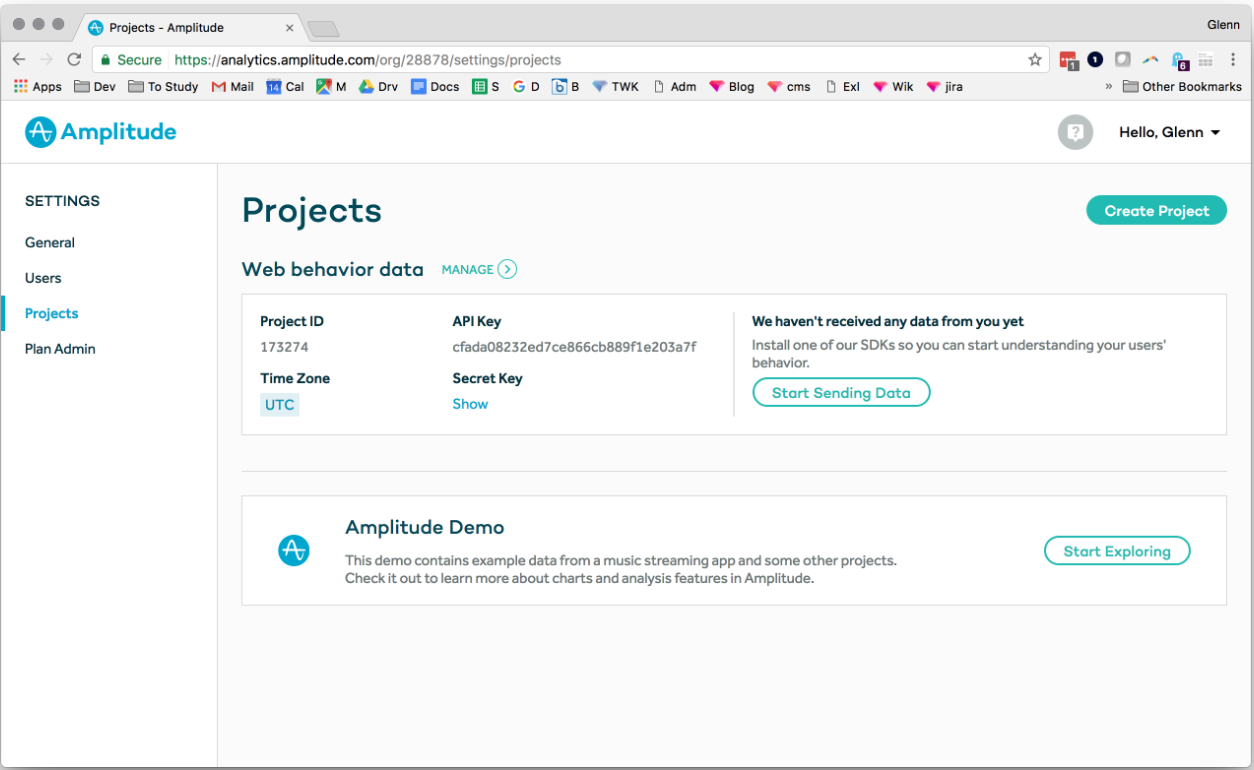

In Amplitude, go to Settings > Projects and copy your API Key and Secret Key. You need them in the next step.

- Open the TD Console.

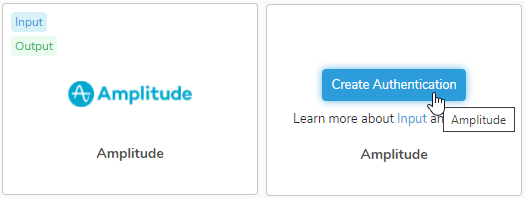

- Navigate to the Integrations Hub > Catalog.

- Click the search icon on the far-right of the Catalog screen, and enter Amplitude.

- Hover over the Amplitude connector and select Create Authentication.

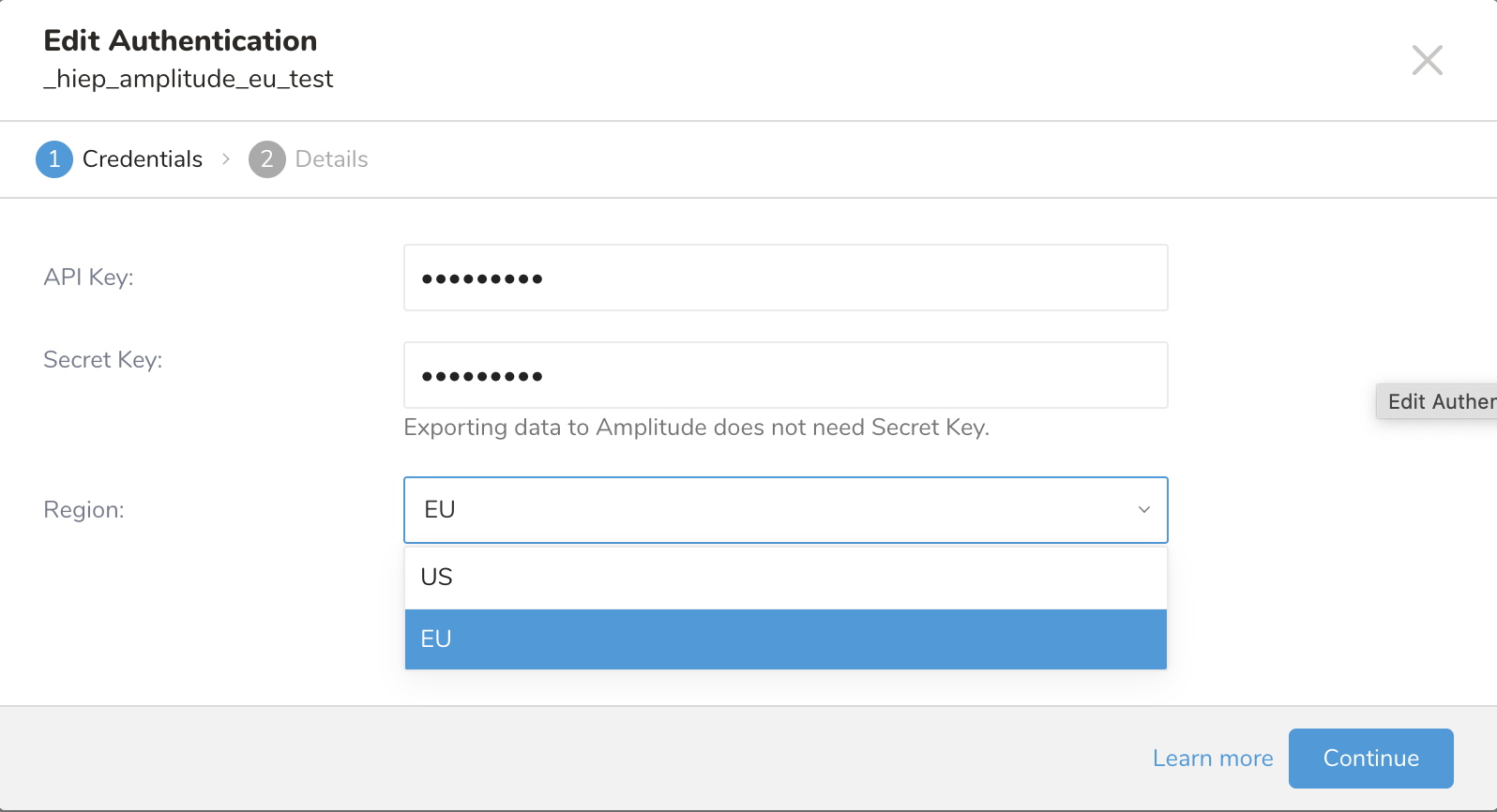

- Enter the required credentials and select Continue.

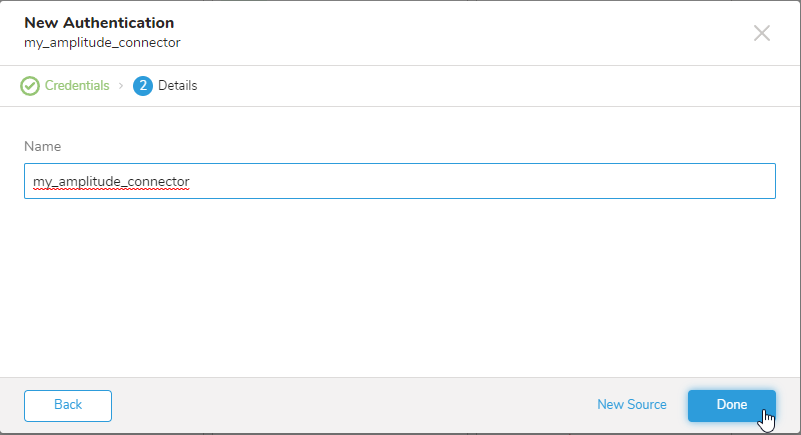

- Give the connection a name and click Done.

Region: Select the data center region of your Amplitude project, either US (default) or EU.

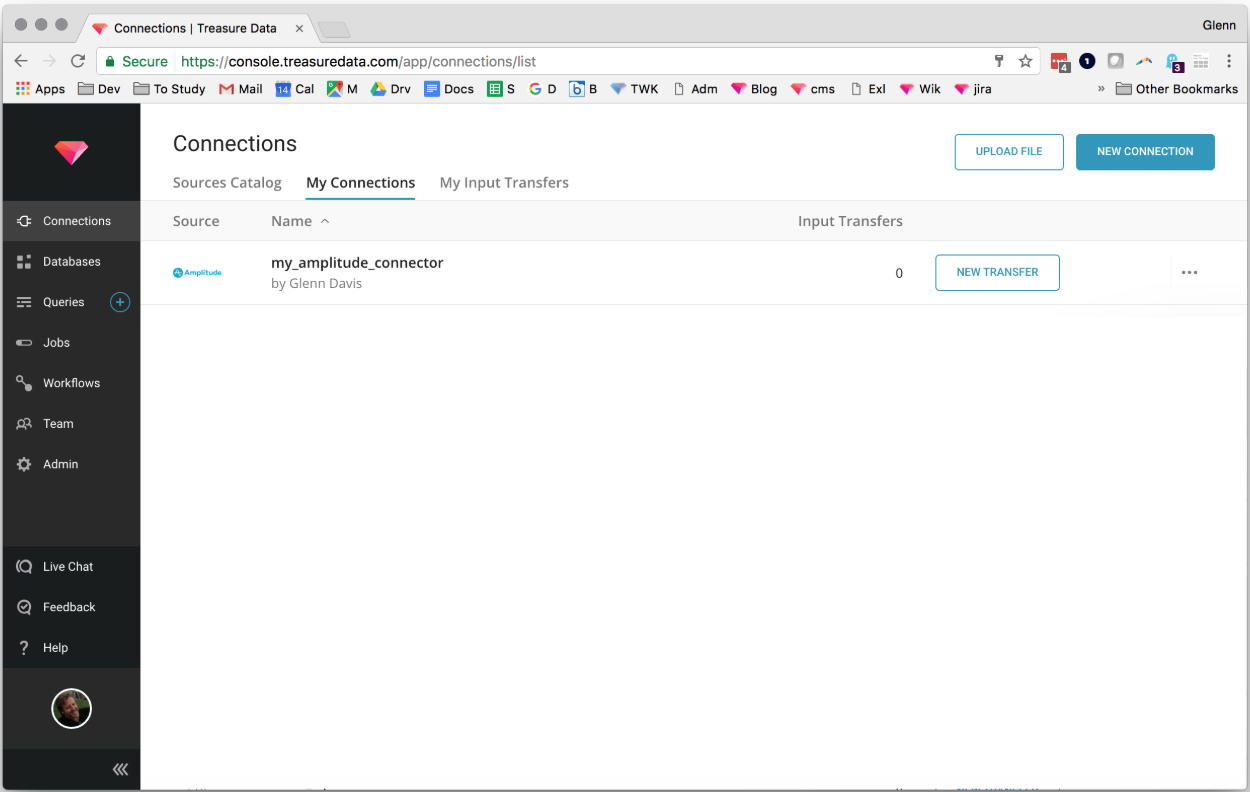

Select the Amplitude connection you created in Connections -> My Connections.

Select New Transfer.

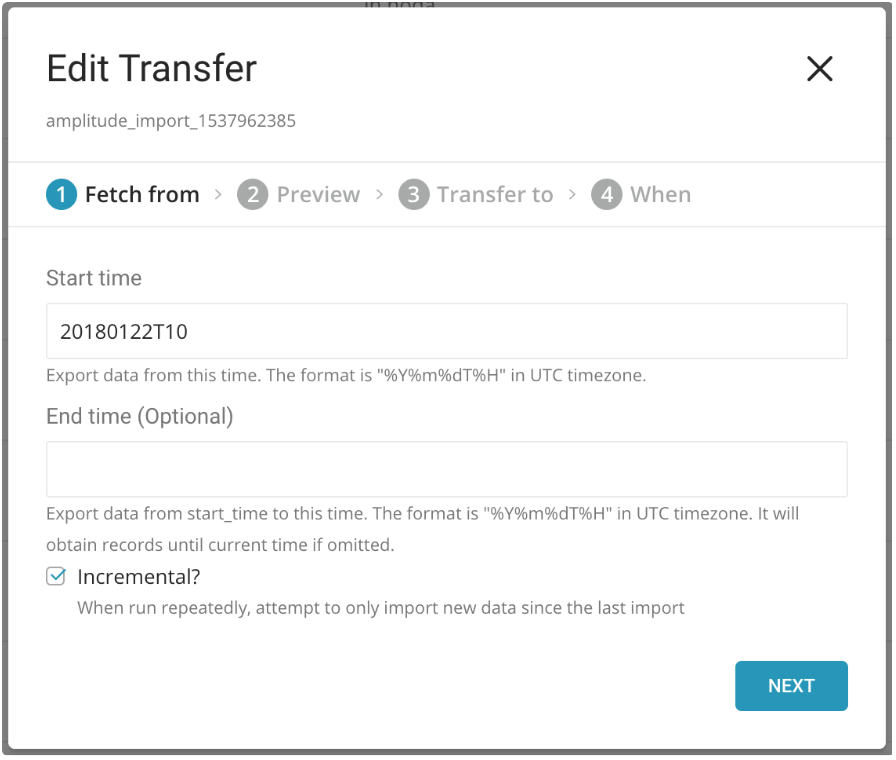

Enter the start time in the format YYYYMMDDTHH (for example, 20160901T03).

Optionally, you can specify an End Time using the same format. If you don't specify an End Time, it defaults to the current time in your browser's time zone.
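If you generate transfer settings from a script, a small helper can build values in the YYYYMMDDTHH format. The following Python sketch is hypothetical (to_amplitude_hour and parse_amplitude_hour are not part of Treasure Data or Amplitude). It assumes you want UTC values, which is how the CLI's start_time and end_time options are interpreted, so it converts timezone-aware datetimes to UTC before formatting. In the console, check which time zone your values are meant to be in.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical helpers, not part of any Treasure Data or Amplitude API.
# "T" is a literal separator between the date and the hour.
HOUR_FORMAT = "%Y%m%dT%H"


def to_amplitude_hour(dt: datetime) -> str:
    """Format a timezone-aware datetime as YYYYMMDDTHH in UTC (minutes are dropped)."""
    if dt.tzinfo is None:
        raise ValueError("use a timezone-aware datetime to avoid ambiguity")
    return dt.astimezone(timezone.utc).strftime(HOUR_FORMAT)


def parse_amplitude_hour(value: str) -> datetime:
    """Parse a YYYYMMDDTHH string into a UTC-aware datetime."""
    if len(value) != 11:
        raise ValueError(f"expected YYYYMMDDTHH, got {value!r}")
    return datetime.strptime(value, HOUR_FORMAT).replace(tzinfo=timezone.utc)


if __name__ == "__main__":
    start = datetime(2016, 9, 1, 3, 30, tzinfo=timezone.utc)
    print(to_amplitude_hour(start))  # prints 20160901T03
    # A window covering the last 24 hours, ending at the current UTC hour.
    now = datetime.now(timezone.utc)
    print(to_amplitude_hour(now - timedelta(hours=24)), to_amplitude_hour(now))
```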

Select Next to preview your data.

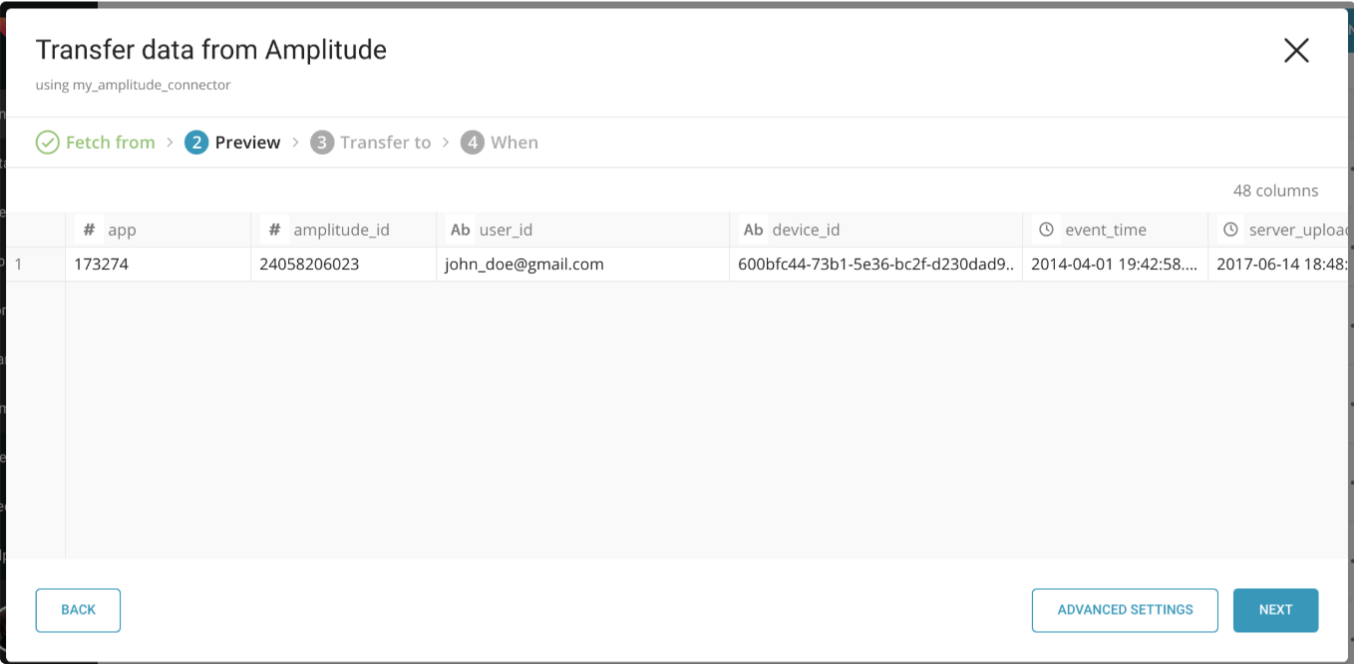

You’ll see a preview of your data. Select Next.

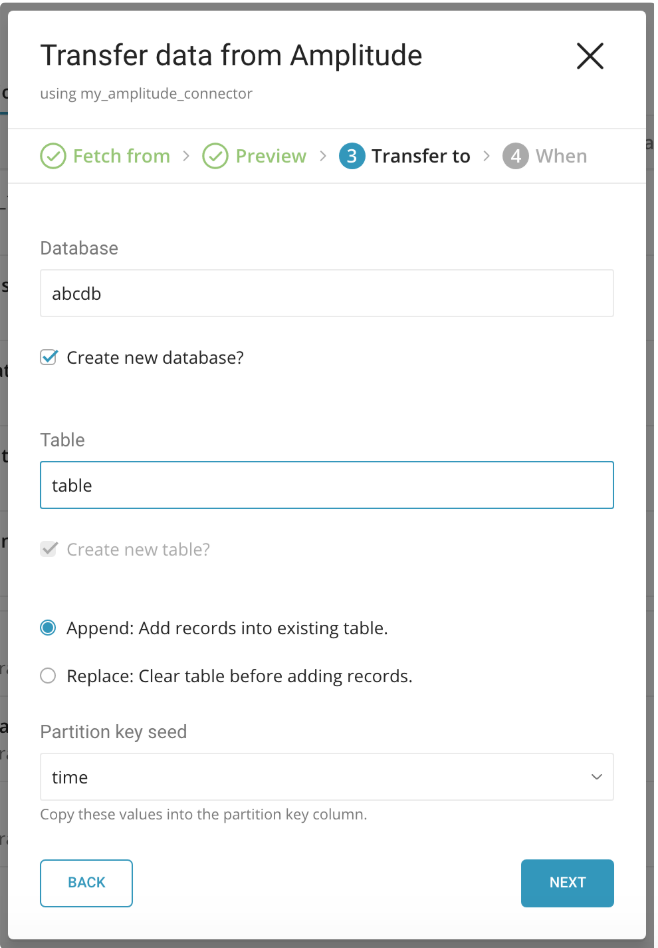

If you are creating a new database, check Create new database and give your database a name. To create a new table, check Create new table and give your table a name.

Select whether to append records to an existing table or replace your existing table.

To use a partition key seed other than the default, specify a key using the popup menu.

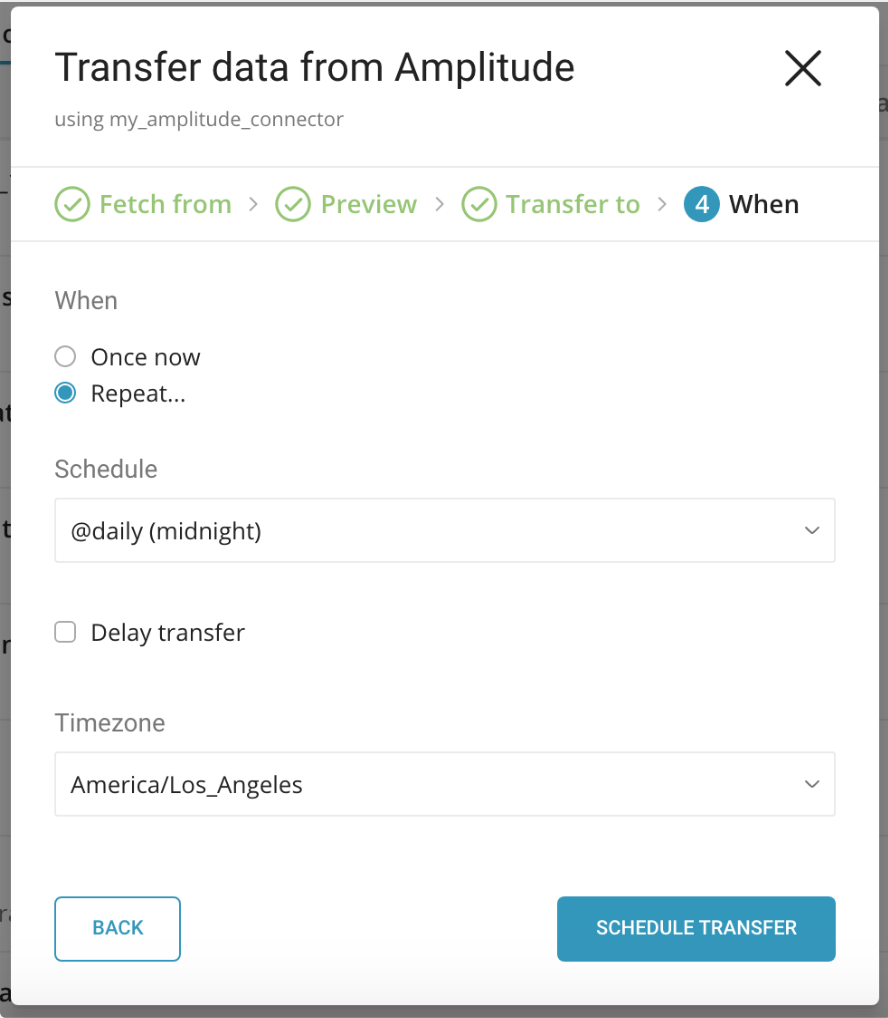

In the When tab, you can specify a one-time transfer or schedule an automated recurring transfer. If you select Once now, select Start Transfer. If you select Repeat…, specify your schedule options, and then select Schedule Transfer.

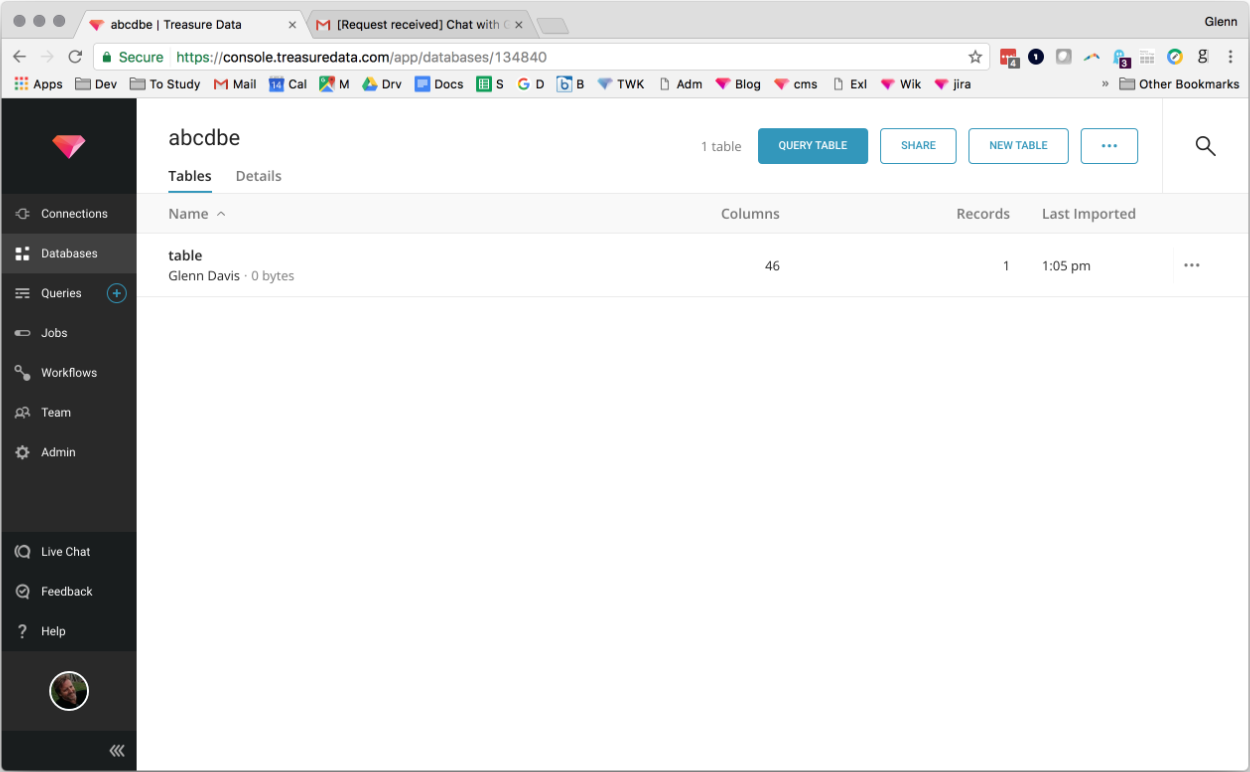

After your transfer runs, you can see the results of your transfer in the Databases tab.

Before you can use the command line to configure a connector, you must install the Treasure Data Toolbelt.

Install the newest Treasure Data Toolbelt, and then confirm the installed version:

```
$ td --version
0.15.3
```

First, create a file called seed.yml with your credentials, as shown in the following example.

```yaml
in:
  type: amplitude
  api_key: "YOUR_API_KEY"
  secret_key: "YOUR_SECRET_KEY"
  start_time: "20160901T03" # UTC time zone. The format is yyyymmddThh, where "T" is a literal character.
```

Run the following command in your terminal:

```
td connector:guess seed.yml -o load.yml
```

connector:guess automatically reads the target data and guesses its format.

Open load.yml to review the guessed definitions. Depending on the source, these can include file formats, encodings, column names, and types.

```yaml
in: {type: amplitude, api_key: API_KEY, secret_key: SECRET_KEY,
  start_time: 20160901T03}
out: {}
exec: {}
filters:
- type: rename
  rules:
  - rule: upper_to_lower
  - rule: character_types
    pass_types: ["a-z", "0-9"]
    pass_characters: "_"
    replace: "_"
  - rule: first_character_types
    pass_types: ["a-z"]
    pass_characters: "_"
    prefix: "_"
  - rule: unique_number_suffix
    max_length: 128
- type: add_time
  to_column: {name: time}
  from_value: {mode: upload_time}
```

For more details on the rename filter, see the rename filter plugin for Data Connector.
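To get a feel for what the guessed rename rules do to Amplitude column names, the following Python sketch applies an approximation of them in order: upper_to_lower, character_types, first_character_types, and unique_number_suffix. It is a hypothetical emulation for illustration, not the plugin's code. In particular, the _2, _3 numbering for duplicate names and the truncation to max_length are assumptions, and the real filter may behave differently in edge cases.

```python
import re

# Hypothetical emulation of the rename rules in the guessed load.yml.
# The real rename filter plugin may differ in edge cases, such as how it
# numbers duplicate names or truncates long ones.
MAX_LENGTH = 128


def rename_column(name: str) -> str:
    # rule: upper_to_lower
    name = name.lower()
    # rule: character_types (keep a-z, 0-9 and "_"; replace anything else with "_")
    name = re.sub(r"[^a-z0-9_]", "_", name)
    # rule: first_character_types (first char must be a-z or "_"; otherwise prefix "_")
    if name and not re.match(r"[a-z_]", name[0]):
        name = "_" + name
    return name[:MAX_LENGTH]


def rename_columns(names: list[str]) -> list[str]:
    # rule: unique_number_suffix (this sketch appends _2, _3, ... to repeated names)
    seen: set[str] = set()
    result = []
    for original in names:
        base = rename_column(original)
        candidate, n = base, 1
        while candidate in seen:
            n += 1
            suffix = f"_{n}"
            candidate = base[: MAX_LENGTH - len(suffix)] + suffix
        seen.add(candidate)
        result.append(candidate)
    return result


if __name__ == "__main__":
    print(rename_columns(["$insert_id", "Event Type", "event_type", "2nd_Value"]))
    # prints ['_insert_id', 'event_type', 'event_type_2', '_2nd_value']
```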

You can preview how the system will parse the file by using the preview command.

```
td connector:preview load.yml
```

Finally, submit the load job. It may take a couple of hours, depending on the size of your data.

The Amplitude connector provides a time column automatically, but you can also use any timestamp column as the time column, for example, --time-column server_upload_time.

```
td connector:issue load.yml --database td_sample_db --table td_sample_table
```

You can also schedule incremental, periodic Data Connector execution from the command line, which removes the need for a cron daemon in your local data center.

For the first scheduled import, the Data Connector for Amplitude imports all of your data. On the second and subsequent runs, only newly added data is imported.

A new schedule can be created by using the td connector:create command.

The name of the schedule, cron-style schedule, database and table where data will be stored, and the Data Connector configuration file are required.

```
td connector:create \
    daily_import \
    "10 0 * * *" \
    td_sample_db \
    td_sample_table \
    load.yml
```

The cron parameter also accepts three special options: @hourly, @daily, and @monthly.

By default, the schedule is set in the UTC time zone. You can set the schedule in a different time zone using the -t or --timezone option. The --timezone option supports only extended time zone formats such as 'Asia/Tokyo' and 'America/Los_Angeles'. Time zone abbreviations such as PST and CST are *not* supported and may lead to unexpected results.
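To see why full time zone names matter, the following Python sketch uses the standard library zoneinfo module (Python 3.9 or later, with IANA time zone data available on the system) to convert the 00:10 local run time of the "10 0 * * *" schedule into UTC. The function run_time_in_utc is hypothetical and only illustrates time zone conversion; it does not reproduce how the Treasure Data scheduler evaluates cron expressions.

```python
from datetime import datetime, timezone
from zoneinfo import ZoneInfo

# Illustration only: the same local wall-clock time maps to different UTC
# times depending on the zone and, for some zones, the time of year.


def run_time_in_utc(year: int, month: int, day: int,
                    hour: int, minute: int, tz_name: str) -> datetime:
    """Return the UTC instant of a local wall-clock time in the IANA zone tz_name."""
    local = datetime(year, month, day, hour, minute, tzinfo=ZoneInfo(tz_name))
    return local.astimezone(timezone.utc)


if __name__ == "__main__":
    # 00:10 in Asia/Tokyo (UTC+9) is 15:10 UTC on the previous day.
    print(run_time_in_utc(2024, 1, 15, 0, 10, "Asia/Tokyo"))
    # 00:10 in America/Los_Angeles is 08:10 UTC in January (UTC-8)
    # but 07:10 UTC in July (UTC-7, daylight saving time).
    print(run_time_in_utc(2024, 1, 15, 0, 10, "America/Los_Angeles"))
    print(run_time_in_utc(2024, 7, 15, 0, 10, "America/Los_Angeles"))
```

Because America/Los_Angeles switches between UTC-8 and UTC-7 during the year, an abbreviation such as PST describes only part of that behavior, which illustrates how an abbreviation can lead to a schedule running at an unexpected hour.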

You can see the list of scheduled entries by running the command td connector:list.

```
$ td connector:list
```

td connector:show shows the execution settings of a schedule entry.

```
td connector:show daily_import
```

td connector:history shows the execution history of a schedule entry. To investigate the results of an individual run, use td job jobid, where jobid is the ID of the job.

```
td connector:history daily_import
```

td connector:delete removes the schedule.

```
td connector:delete daily_import
```

See the following table for details on the options available in the in section.

| Option name | Description | Type | Required? | Default value |
|---|---|---|---|---|
| api_key | API key | string | yes | N/A |
| secret_key | API secret key | string | yes | N/A |
| start_time | First hour included in the data series, formatted YYYYMMDDTHH (e.g. '20150201T05'), in UTC. | string | yes | N/A |
| end_time | Last hour included in the data series, formatted YYYYMMDDTHH (e.g. '20150203T20'), in UTC. | string | no | processing time |
| incremental | true for "mode: append", false for "mode: replace" (see below). | bool | no | true |
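Before running td connector:guess, you might want to check an in section against the table. The following Python sketch is a hypothetical pre-flight check, not part of the Treasure Data Toolbelt: given the in section as a dict (for example, after loading seed.yml with a YAML library of your choice), it verifies the required options, validates the YYYYMMDDTHH format of start_time and end_time, and applies the documented default of incremental: true. Rejecting an end_time earlier than start_time is an added assumption.

```python
import re
from datetime import datetime

# Hypothetical pre-flight check that mirrors the option table; it is not part
# of the Treasure Data Toolbelt. Pass it the "in" section as a dict.
HOUR_PATTERN = re.compile(r"\d{8}T\d{2}")
REQUIRED = ("api_key", "secret_key", "start_time")


def parse_hour(key: str, value: object) -> datetime:
    """Validate a YYYYMMDDTHH value (e.g. '20150201T05') and return it as a datetime."""
    text = str(value)
    if not HOUR_PATTERN.fullmatch(text):
        raise ValueError(f"{key} must be formatted YYYYMMDDTHH, got {text!r}")
    try:
        # strptime also rejects impossible dates and hours such as T25.
        return datetime.strptime(text, "%Y%m%dT%H")
    except ValueError as err:
        raise ValueError(f"{key} is not a valid date and hour: {text!r}") from err


def check_in_options(options: dict) -> dict:
    """Return a copy of options with defaults applied, or raise ValueError."""
    missing = [key for key in REQUIRED if not options.get(key)]
    if missing:
        raise ValueError(f"missing required option(s): {', '.join(missing)}")
    start = parse_hour("start_time", options["start_time"])
    if options.get("end_time") is not None:
        end = parse_hour("end_time", options["end_time"])
        # Assumption: an end hour before the start hour is treated as an error.
        if end < start:
            raise ValueError("end_time must not be earlier than start_time")
    checked = dict(options)
    # The table documents incremental: true (mode: append) as the default.
    checked.setdefault("incremental", True)
    return checked


if __name__ == "__main__":
    print(check_in_options({
        "type": "amplitude",
        "api_key": "YOUR_API_KEY",
        "secret_key": "YOUR_SECRET_KEY",
        "start_time": "20150201T05",
        "end_time": "20150203T20",
    }))
```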

You can specify the import mode in the out section of seed.yml.

The append mode is the default. It appends records to the target table.

```yaml
in:
  ...
out:
  mode: append
```

The replace mode replaces data in the target table. Any manual schema changes made to the target table remain intact with this mode.

```yaml
in:
  ...
out:
  mode: replace
```