Amazon Simple Storage Service (Amazon S3) is an object storage service that offers scalability, data availability, security, and performance. Amazon S3 provides features for data organization and configuration of access controls for your business, organization, and compliance requirements.

This TD export integration allows you to write job results from Treasure Data directly to Amazon S3.

- Store data: Store an infinite amount of data in a bucket.

Review the information in the following table to understand the features that are available in v2 that are not available in v1.

| Feature | Amazon S3 v2 | Amazon S3 v1 |

|---|---|---|

| Server-side Encryption with Customer Master Key (CMK) stored in AWS Key Management Service | X | |

| Support for Quote Policy for output data format | X | |

| Support Assume Role authentication method | X |

- The following AWS permissions for the IAM User :

- with s3:PutObject, s3:AbortMultipartUpload permissions.

- with kms:Decrypt, kms:GenerateDataKey permissions when selecting the sse-kms setting.

- (Optional) Basic knowledge of Treasure Data, including the TD Toolbelt.

- The default query result limit for export to S3 is 100GB. You can configure the part size setting up to 5000 (MB). The file limit will be about 5TB.

- The default export format is CSV RFC 4180.

- Output in TSV and JSONL format are also supported.

- TLS v.1.2 supported

If your security policy requires IP whitelisting, you must add Treasure Data's IP addresses to your allowlist to ensure a successful connection.

Please find the complete list of static IP addresses, organized by region, at the following document

You can encrypt upload data with AWS S3 Server-Side Encryption. You don't need to prepare an encryption key. Data will be encrypted at the server side with 256-bit Advanced Encryption Standard (AES-256).

Use the Server-Side Encryption bucket policy if you require server-side encryption for all objects that are stored in your bucket. When you have the server-side encryption enabled, you don't have to turn on the SSE option. However, job results may fail if you have bucket policies that reject HTTP requests without encryption information.

You can encrypt upload data with Amazon S3-managed encryption keys (SSE-S3).

When you enable AWS KMS for server-side encryption in Amazon S3:

- If you don't input the KMS Key ID, it will use (or create) the default KMS key.

- If you input the KMS Key ID, you must choose symmetric CMK (not asymmetric CMKs).

- The AWS KMS CMK must be in the same region as the bucket.

In Treasure Data, you must create and configure the data connection before running your query. As part of the data connection, you provide authentication to access the integration.

- Open TD Console.

- Navigate to Integrations Hub > Catalog.

- Search for S3 v2and select Amazon S3 (v2).

- Select Create Authentication.

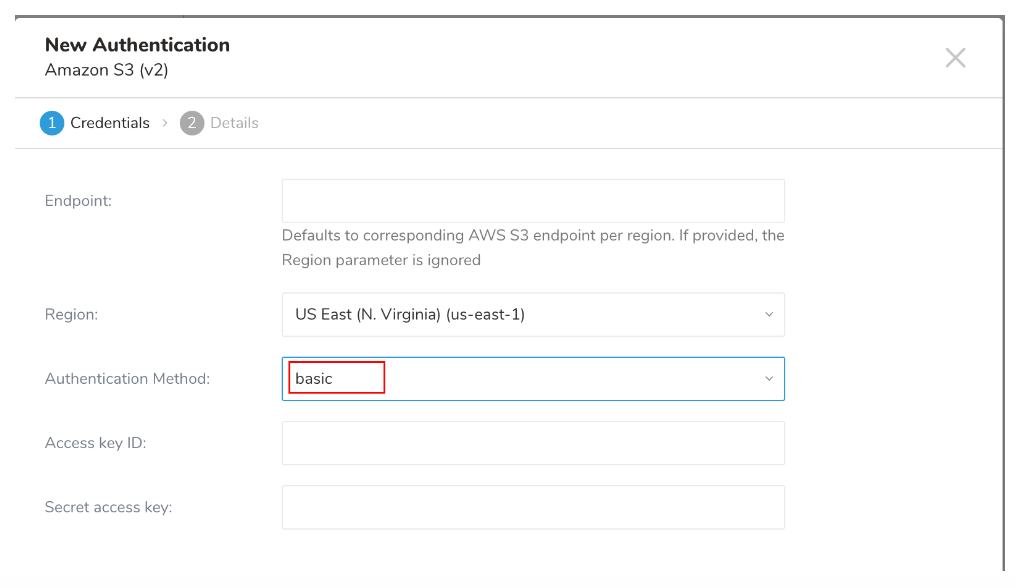

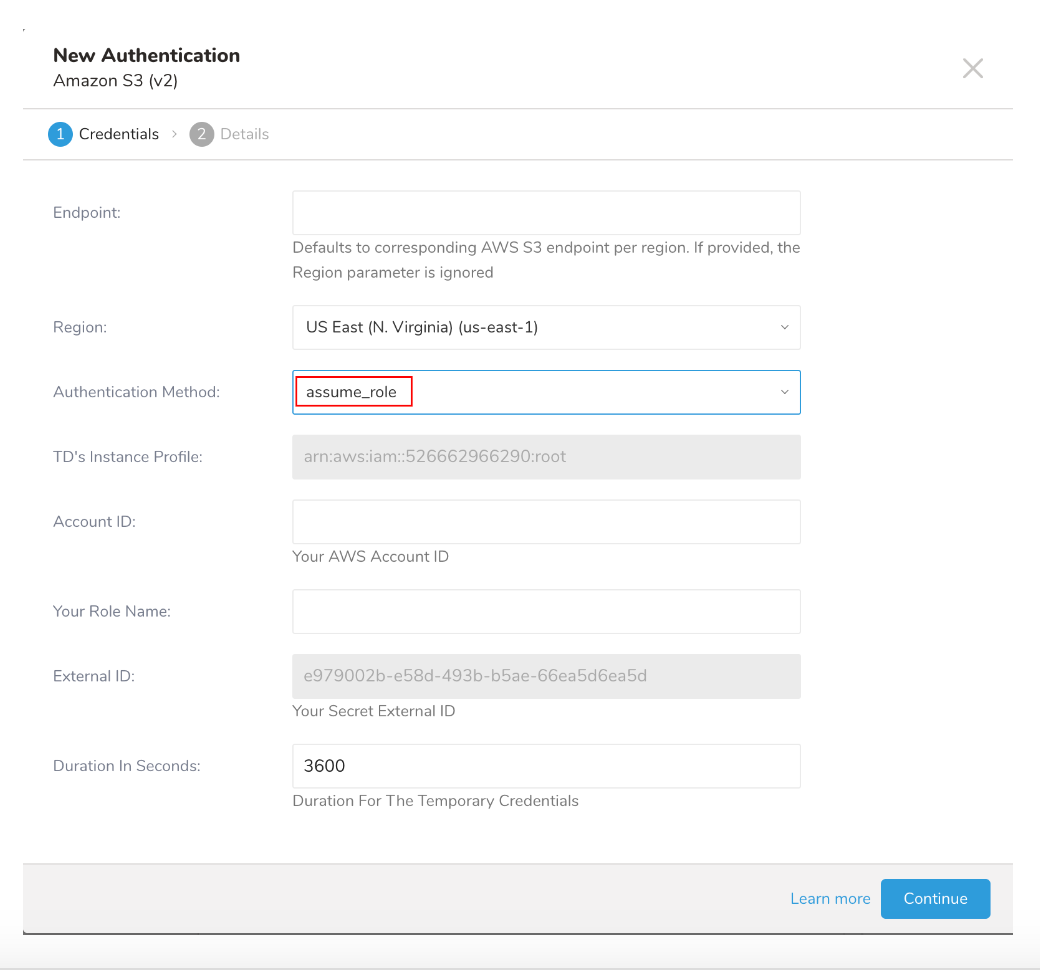

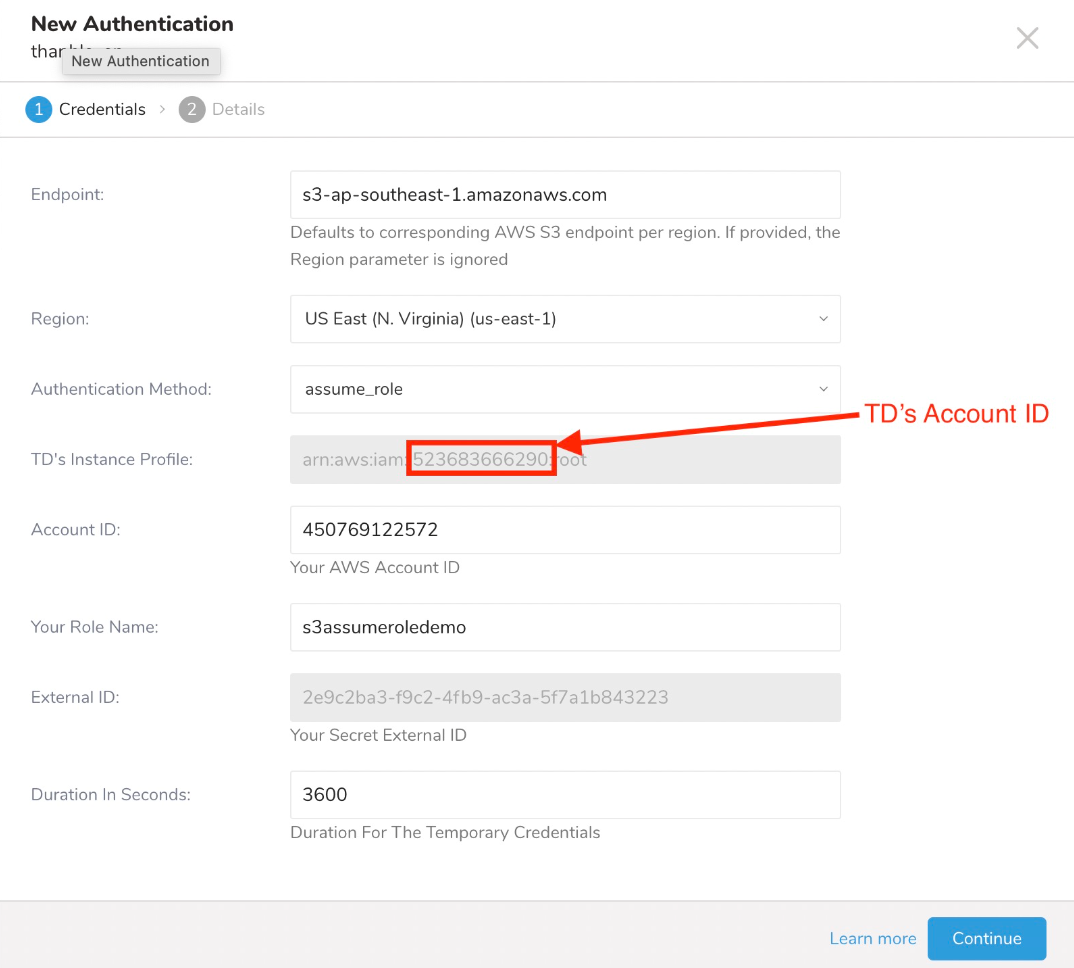

A new Authentication dialog opens. Depending on the Authentication method you choose, the dialog may look like one of these screens:

- Configure the authentication fields, and then select Continue.

| Parameter | Description |

|---|---|

| Endpoint | S3 service endpoint override. You can find region and endpoint information from the AWS service endpoints document. (Ex. s3.ap-northeast-1.amazonaws.com) When specified, it will override the region setting. |

| Region | AWS Region |

| Authentication Method |

|

| Access Key ID | AWS S3 issued |

| Secret Access Key | AWS S3 issued |

| Secret token | AWS S3 issued |

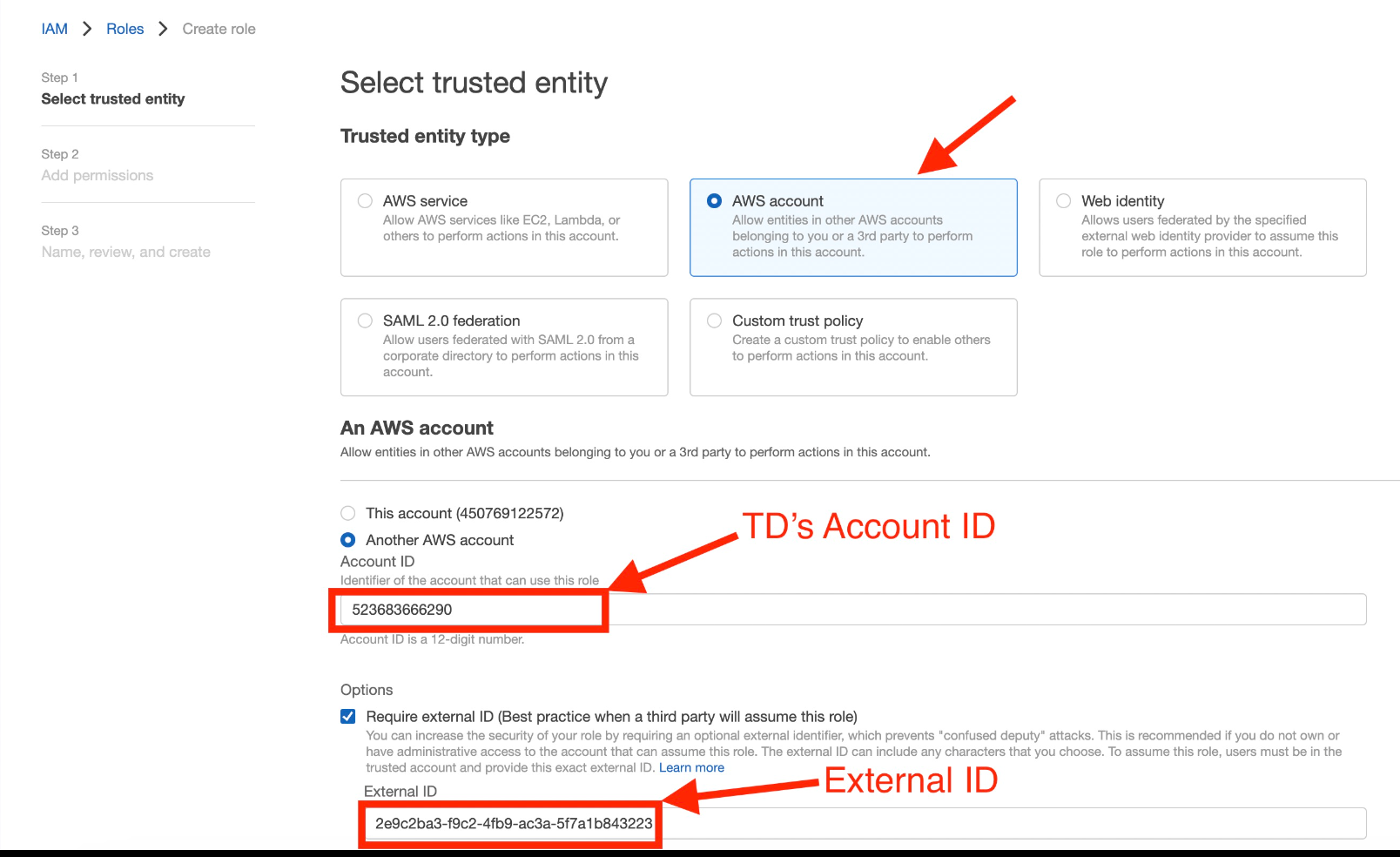

| TD's Instance Profile | This value is provided by the TD Console. The numeric portion of the value constitutes the Account ID that you will use when you create your IAM role. |

| Account ID | Your AWS Account ID |

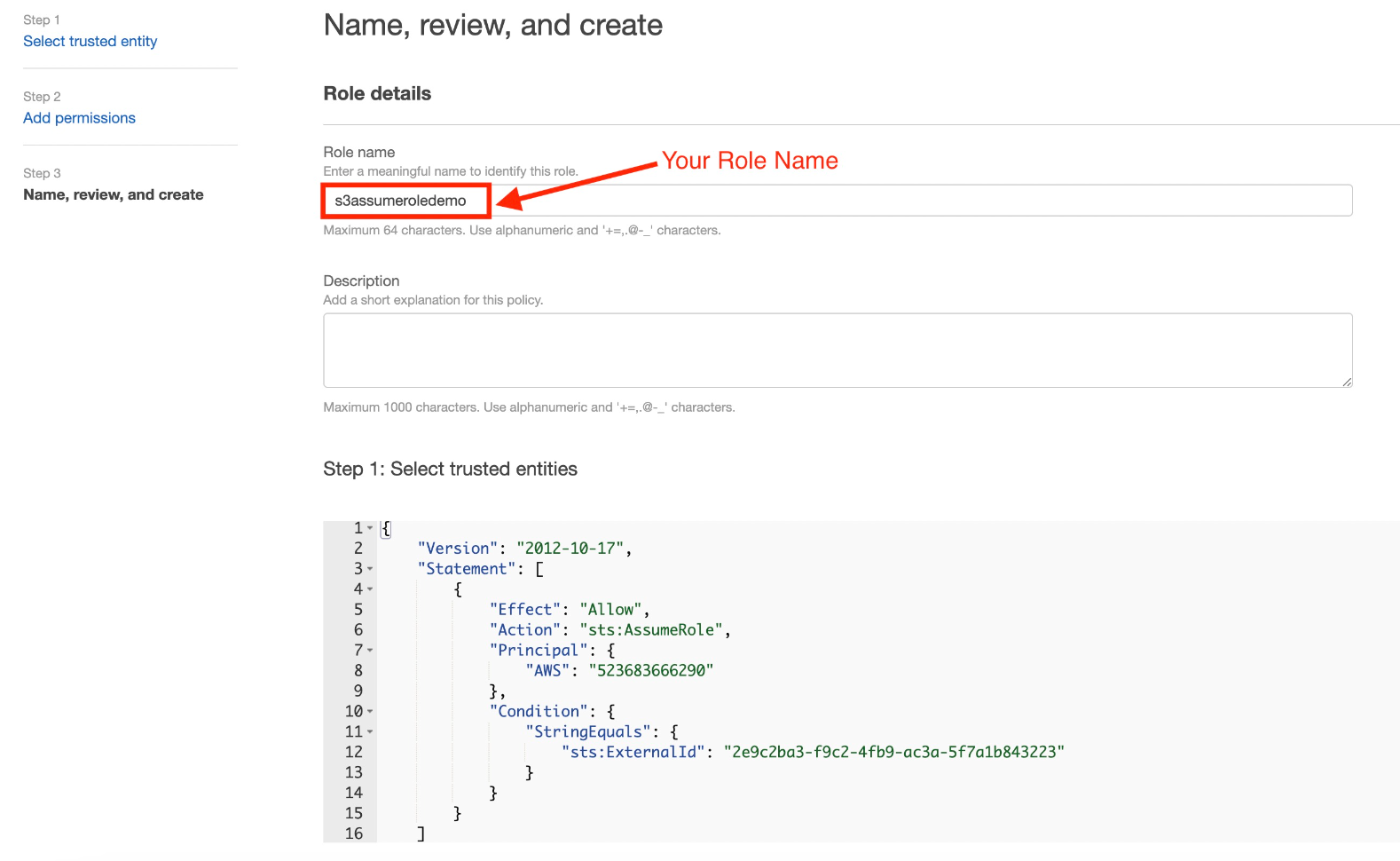

| Your Role Name | Your AWS Role Name |

| External ID | Your Secret External ID |

| Duration In Seconds | Duration For The Temporary Credentials |

- Name your new AWS S3 connection, and select Done.

- Create a new authentication with the assume_role authentication method.

- Make a note of the numeric portion of the value in the TD's Instance Profile field.

- Create your AWS IAM role.

- Select Export Results.

- You can select an existing authentication or create a new authentication for the external service to be used for output. Choose one of the following:

Use Existing Integration

Create a New Integration

(Optional) Specify information for Export to Amazon S3.

| Field | Description |

|---|---|

| Is user directory Root? | If selected, the user directory is treated as the root directory. (ex. '/home/treasure-data' as '/') |

| Path prefix | The file path where the file will be stored. |

| Rename file after upload finish | If selected, SFTP result output renames the file on the remote SFTP server from ".xxx.tmp" to ".xxx" after all the data is transferred. Some MA tools try to import data when a file with a specific name exists on the SFTP server. The temp name option is useful for such cases. |

| Format | The format of the exported files:

|

| Compression | The compression format of the exported files:

|

| Header line? | The header line with column name as the first line. |

| Delimiter | The delimited character:

|

| Quote policy | The policy for a quote:

|

| Null string | How null value of the result of the query displays:

|

| End-of-line character | The EOL (end-of-line) character:

|

| Temp filesize threshold | The maximum file size (in bytes) of a local temp file. When the temp file reaches the threshold, the file flushes to a remote file. If you encounter the error channel is broken, reduce the value of this option to resolve the error. |

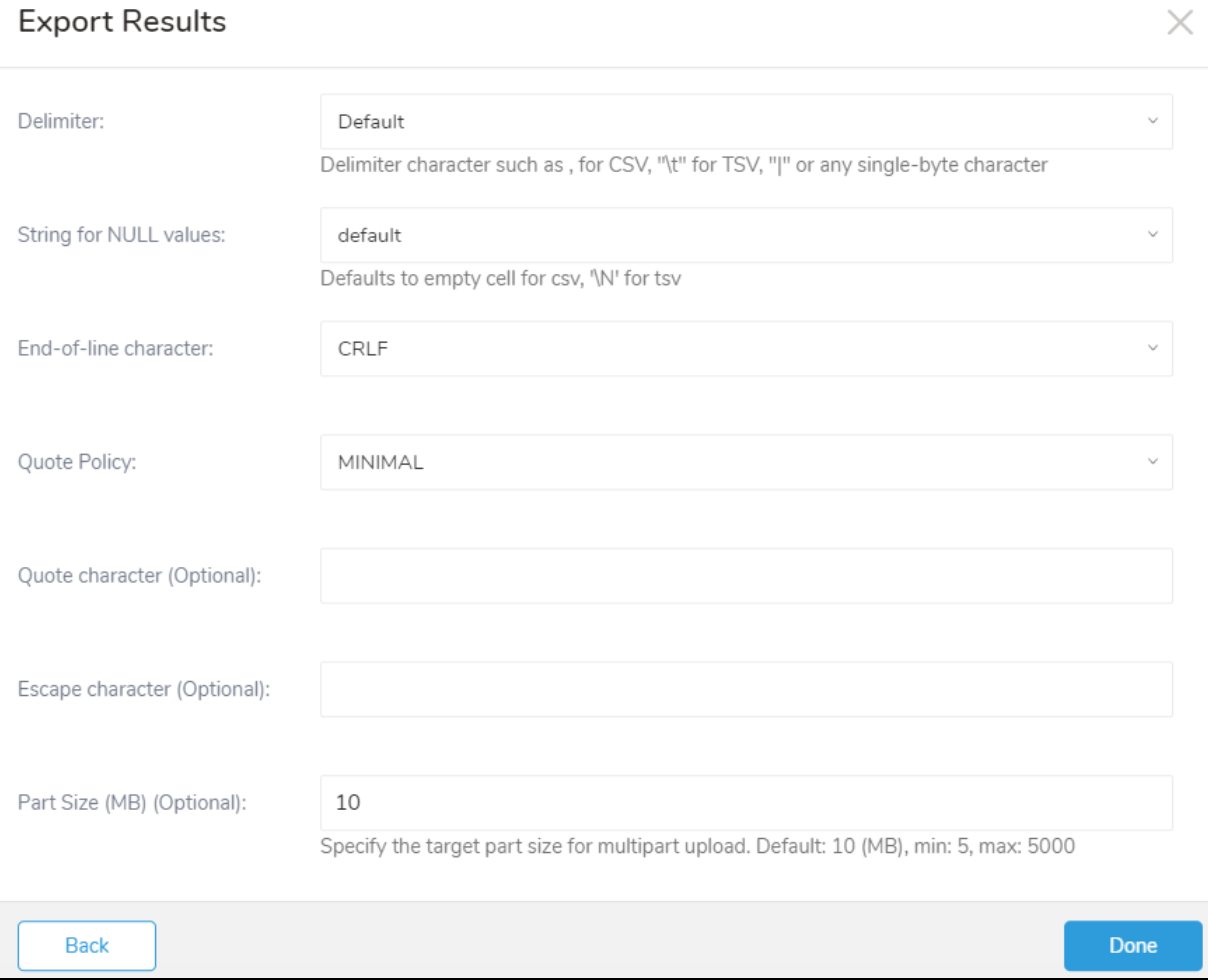

- Define any additional Export Results details and content review the integration parameters. For example, your Export Results screen might be different, or you might not have additional details to fill out.

- Select Done.

- Run your query

- Validate that your data moved to the destination you specified.

| Parameter | Data Type | Required? | Supported in V1? | Description |

|---|---|---|---|---|

| Server-side Encryption | String | yes, only sse-s3 | Support values: - sse-s3: Server-side Encryption Mode - sse-kms: new SSE Mode | |

| Server-side Encryption Algorithm | String | yes | Support value: - AES256 | |

| KMS Key ID | String | no | Symmetric AWS KMS Key ID. If there is no input for the KMS Key ID, it will create/use the default KMS Key. | |

| Bucket | String | yes | yes | Provide the S3 bucket name (Ex., your_bucket_name). |

| Path | String | yes | yes | Specify the s3 filename (object key), and include an extension (Ex. test.csv). |

| Format | String | yes | Format of the exported file: csv, tsv, jsonl | |

| Compression | String | yes | The compression format of the exported files (Ex., None or gz) | |

| Header | Boolean | yes | Include a header in the exported file. | |

| Delimiter | String | yes | Use to specify the delimiter character (Ex., (comma)) | |

| String for NULL values | String | yes | Placed holder to insert for null values (Ex. Empty String) | |

| End-of-line character | String | yes | Specify the EOL(End-Of-Line) representation (Ex. CRLF, LF) | |

| Quote Policy | String | no | Use to determine field type to quote. Support values: - ALL Quote all fields - MINIMAL Only quote those fields which contain delimiter, quote or any of the characters in the lineterminator. - NONE Never quote fields. When the delimiter occurs in the field, escape with escape char. Default value: MINIMAL | |

| Quote character (Optional) | Char | yes | The character used for quotes in the exported file (Ex. "). Only quote those fields which contain the delimiter, quote, or any of the characters in the lineterminator. If the input is more than 1 character, the default value will be used. | |

| Escape character(Optional) | Char | yes | The escape character is used in the exported file. If the input is more than 1 character, the default value will be used. | |

| Part Size (MB) (Optional) | Integer | no | The part size in multipart upload. Default 10, min 5, max 5000 | |

| JSON Columns | String | no | Comma-separated list of string columns to send as JSON |

SELECT * FROM www_accessYou can also send segment data to the target platform by creating an activation in the Audience Studio.

- Navigate to Audience Studio.

- Select a parent segment.

- Open the target segment, right-mouse click, and then select Create Activation.

- In the Details panel, enter an Activation name and configure the activation according to the previous section on Configuration Parameters.

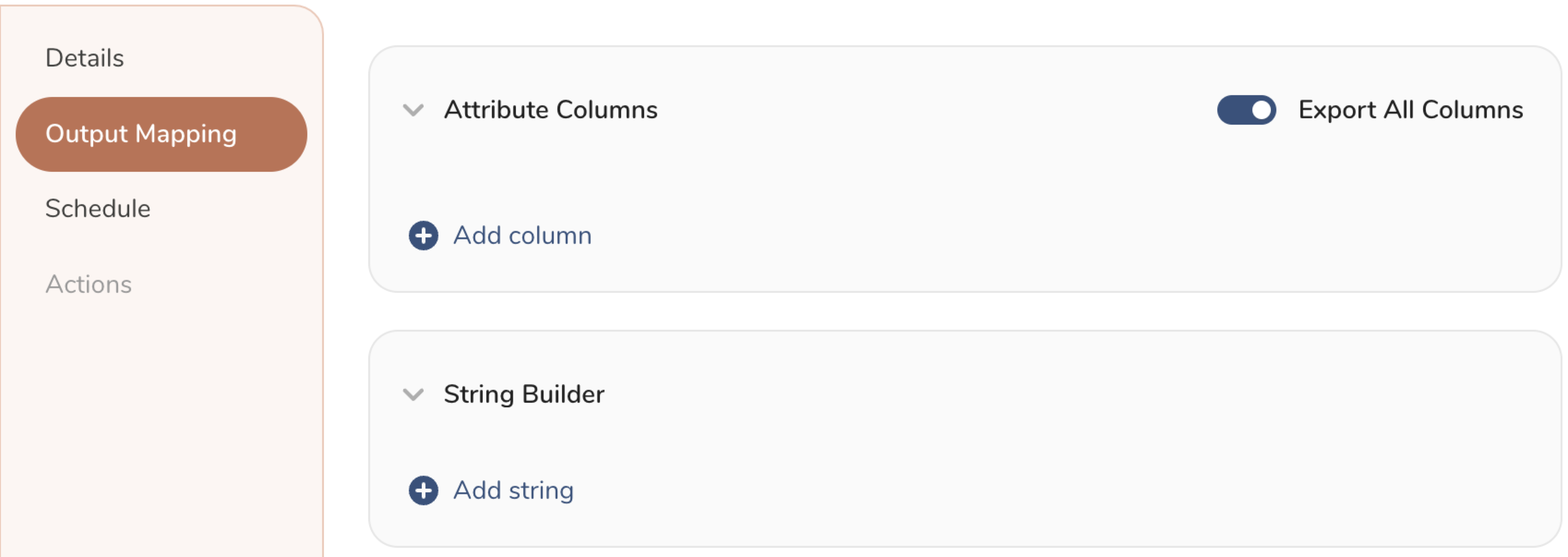

- Customize the activation output in the Output Mapping panel.

- Attribute Columns

- Select Export All Columns to export all columns without making any changes.

- Select + Add Columns to add specific columns for the export. The Output Column Name pre-populates with the same Source column name. You can update the Output Column Name. Continue to select + Add Columnsto add new columns for your activation output.

- String Builder

- + Add string to create strings for export. Select from the following values:

- String: Choose any value; use text to create a custom value.

- Timestamp: The date and time of the export.

- Segment Id: The segment ID number.

- Segment Name: The segment name.

- Audience Id: The parent segment number.

- + Add string to create strings for export. Select from the following values:

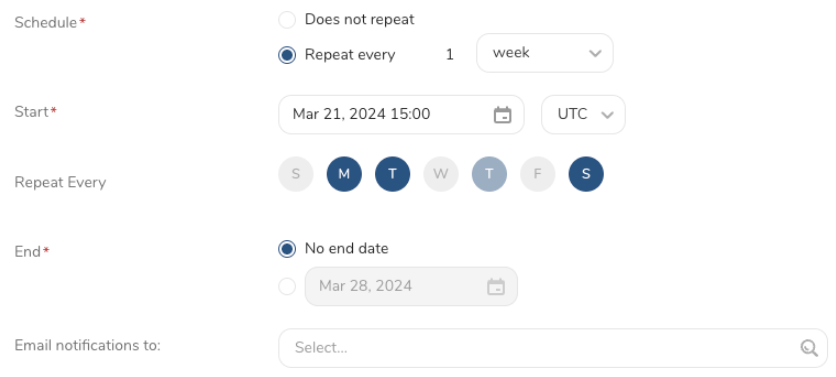

- Set a Schedule.

- Select the values to define your schedule and optionally include email notifications.

- Select Create.

If you need to create an activation for a batch journey, review Creating a Batch Journey Activation.

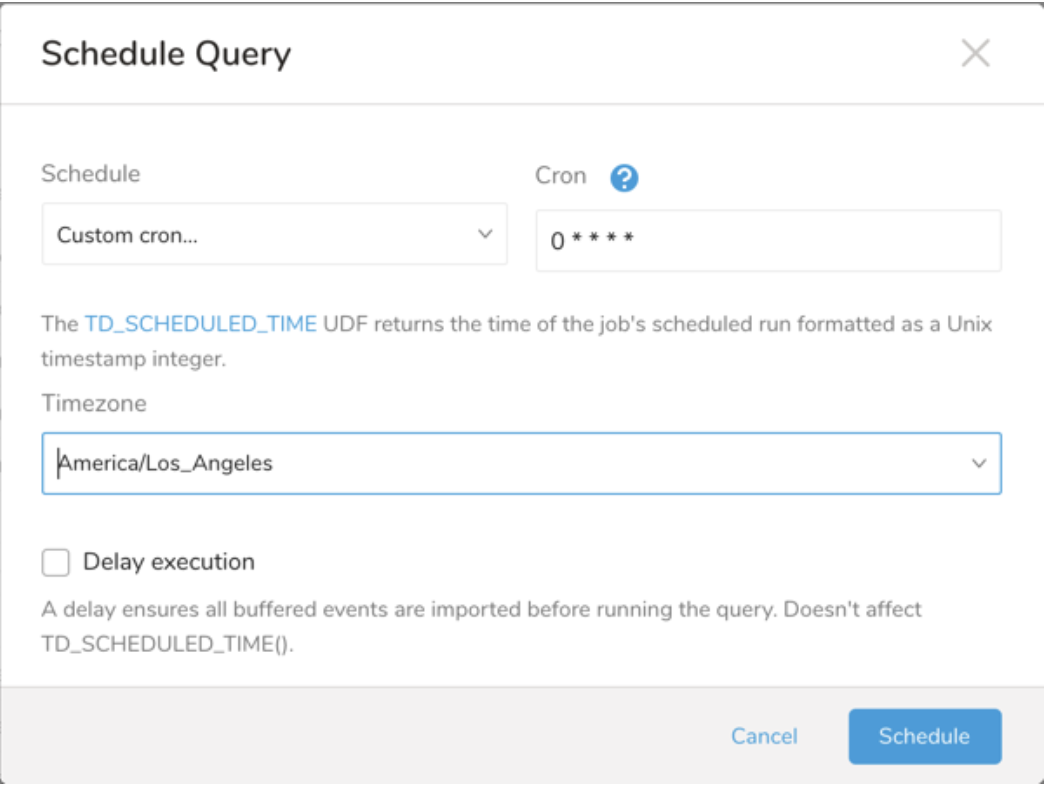

You can use Scheduled Jobs with Result Export to periodically write the output result to a target destination that you specify.

Treasure Data's scheduler feature supports periodic query execution to achieve high availability.

When two specifications provide conflicting schedule specifications, the specification requesting to execute more often is followed while the other schedule specification is ignored.

For example, if the cron schedule is '0 0 1 * 1', then the 'day of month' specification and 'day of week' are discordant because the former specification requires it to run every first day of each month at midnight (00:00), while the latter specification requires it to run every Monday at midnight (00:00). The latter specification is followed.

Navigate to Data Workbench > Queries

Create a new query or select an existing query.

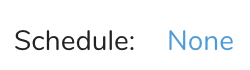

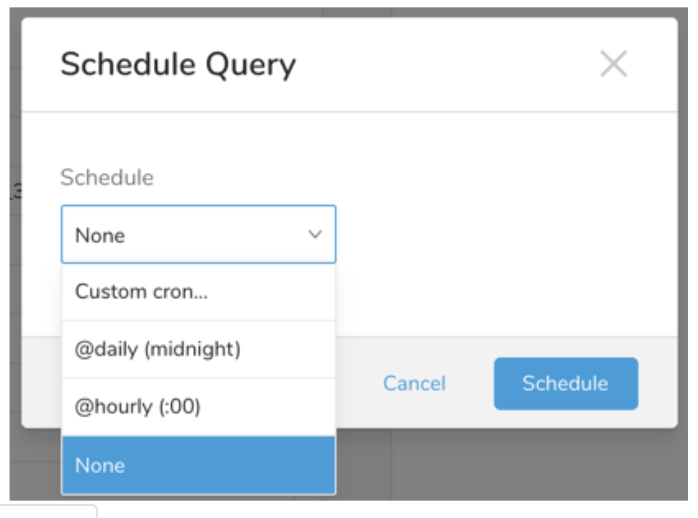

Next to Schedule, select None.

In the drop-down, select one of the following schedule options:

Drop-down Value Description Custom cron... Review Custom cron... details. @daily (midnight) Run once a day at midnight (00:00 am) in the specified time zone. @hourly (:00) Run every hour at 00 minutes. None No schedule.

| Cron Value | Description |

|---|---|

0 * * * * | Run once an hour. |

0 0 * * * | Run once a day at midnight. |

0 0 1 * * | Run once a month at midnight on the morning of the first day of the month. |

| "" | Create a job that has no scheduled run time. |

* * * * *

- - - - -

| | | | |

| | | | +----- day of week (0 - 6) (Sunday=0)

| | | +---------- month (1 - 12)

| | +--------------- day of month (1 - 31)

| +-------------------- hour (0 - 23)

+------------------------- min (0 - 59)The following named entries can be used:

- Day of Week: sun, mon, tue, wed, thu, fri, sat.

- Month: jan, feb, mar, apr, may, jun, jul, aug, sep, oct, nov, dec.

A single space is required between each field. The values for each field can be composed of:

| Field Value | Example | Example Description |

|---|---|---|

| A single value, within the limits displayed above for each field. | ||

A wildcard '*' to indicate no restriction based on the field. | '0 0 1 * *' | Configures the schedule to run at midnight (00:00) on the first day of each month. |

A range '2-5', indicating the range of accepted values for the field. | '0 0 1-10 * *' | Configures the schedule to run at midnight (00:00) on the first 10 days of each month. |

A list of comma-separated values '2,3,4,5', indicating the list of accepted values for the field. | 0 0 1,11,21 * *' | Configures the schedule to run at midnight (00:00) every 1st, 11th, and 21st day of each month. |

A periodicity indicator '*/5' to express how often based on the field's valid range of values a schedule is allowed to run. | '30 */2 1 * *' | Configures the schedule to run on the 1st of every month, every 2 hours starting at 00:30. '0 0 */5 * *' configures the schedule to run at midnight (00:00) every 5 days starting on the 5th of each month. |

A comma-separated list of any of the above except the '*' wildcard is also supported '2,*/5,8-10'. | '0 0 5,*/10,25 * *' | Configures the schedule to run at midnight (00:00) every 5th, 10th, 20th, and 25th day of each month. |

- (Optional) You can delay the start time of a query by enabling the Delay execution.

Save the query with a name and run, or just run the query. Upon successful completion of the query, the query result is automatically exported to the specified destination.

Scheduled jobs that continuously fail due to configuration errors may be disabled on the system side after several notifications.

(Optional) You can delay the start time of a query by enabling the Delay execution.

Within Treasure Workflow, you can specify the use of this data connector to export data.

Learn more at Exporting Data with Parameters.

| Name | Type | Required | Description | |

|---|---|---|---|---|

| bucket | String | Yes | ||

| path | String | Yes | ||

| sse_type | String | sse-s3, sse-kms | ||

sse_algorithm | String | AES256 | ||

kms_key_id | String | |||

| format | String | csv, tsv, jsonl | ||

| compression | String | none, gz | ||

| header | Boolean | Default true | ||

| delimiter | String | default , \t | ||

| null_value | String | default, empty, \N, NULL, null | ||

| newline | String | CR, LF, CRLF | ||

| quote_policy | String | ALL, MINIMAL, NONE | ||

| escape | Char | |||

| quote | Char | |||

| part_size | Integer | |||

| json_columns | String |

_export:

td:

database: td.database

+s3v2_test_export_task:

td>: export_s3v2_test.sql

database: ${td.database}

result_connection: s3v2_conn

result_settings:

bucket: my-bucket

path: /path/to/target.csv

sse_type: sse-s3

format: csv

compression: gz

header: false

delimiter: default

null_value: empty

newline: LF

quote_policy: MINIMAL

escape: '"'

quote: '"'

part_size: 20

json_columns: col_map,col_array,col_json_stringTo output the result of a single query to an S3 bucket add the "--result option" to the td query command. After the job is finished, the results are written into your S3 bucket.

You can specify detailed settings to export your S3 via the "--result parameter".

Create an Authentication with Assume Role is only supported through the console UI. Follows the instruction Reuse the existing Authentication to get the Authentication ID, and reuse it for CLI

td query \

--result '{"type":"s3_v2","auth_method":"basic","region":"us-east-2","access_key_id": "************","secret_access_key":"***************","bucket":"bucket_name","path":"path/to/file.csv","format":"csv","compression":"none","header":true,"delimiter":"default","null_value":"default","newline":"CRLF","quote_policy":"NONE","part_size":10}' \

-w -d testdb \

"SELECT 1 as col" -T prestotd query \

--result '{"type":"s3_v2","td_authentication_id": 77348,"bucket":"bucket_name","path":"path/to/file.csv","format":"csv","compression":"none","header":true,"delimiter":"default","null_value":"default","newline":"CRLF","quote_policy":"NONE","part_size":10}' \

-w -d testdb \

"SELECT 1 as col" -T presto