Integrating Amazon Marketing Cloud (AMC) and Treasure Data CDP allows advertisers to use Amazon Ads to pass strategic CDP segments from Treasure Data in a pseudonymized form to AMC. Advertisers can then combine the CDP inputs with Amazon Ads signals and obtain unique insights on topics such as media impact, audience segmentation, segment overlap, and customer journeys in a privacy-safe manner. Learn more about how integrating Treasure Data CDP with AMC can drive better Ad campaigns.

This Amazon Marketing Cloud Export Integration lets you write job results from Treasure Data and upload pseudonymized audience datasets directly to Amazon Marketing Cloud.

Personally identifiable information (PII) fields are programmatically normalized and hashed using SHA-256. If PII fields are already hashed, they are transferred as is.Hashing Identifier Type: EMAIL, FIRST_NAME, LAST_NAME, PHONE, ADDRESS, and CITY.

- Upload pseudonymized audiences dataset to Amazon Marketing Cloud.

- Delete identities in all existing datasets.

- Create a rule-based audience and activate it directly in Amazon DSP.

Amazon Marketing Cloud only accepts hashed or pseudonymized information. All information in an advertiser's AMC instance is handled strictly with Amazon's privacy policies, and an advertiser's signals cannot be exported or accessed by Amazon. Advertisers can only access aggregated and anonymous outputs from AMC.

- Basic knowledge of Treasure Data, including TD Toolbelt.

- Amazon Marketing Cloud account.

- S3 bucket that grant access to an Amazon Marketing Cloud account.

- Amazon DSP account that was invited into the AMC instance.

- (Optional) Create the dataset definition before using the Amazon Marketing Cloud Export Integration. Visit Treasure Boxes to see an example of dataset creation workflow. This integration supports creating a dataset the first time the activation runs.

- Query columns must be specified with the exact column names (case insensitive) and data type.

- It is recommended not to use the existing S3 bucket tied to the AMC instance. Create a new S3 bucket for data upload.

- An Amazon DSP minimum audience size is 2,000 identities.

If your security policy requires IP whitelisting, you must add Treasure Data's IP addresses to your allowlist to ensure a successful connection.

Please find the complete list of static IP addresses, organized by region, at the following document

Login into https://advertising.amazon.com/marketing-cloud.

After logging into an Amazon Marketing Cloud instance, perform the following steps to obtain the Amazon Marketing Cloud Instance ID, Account ID,and Data upload AWS account ID information.

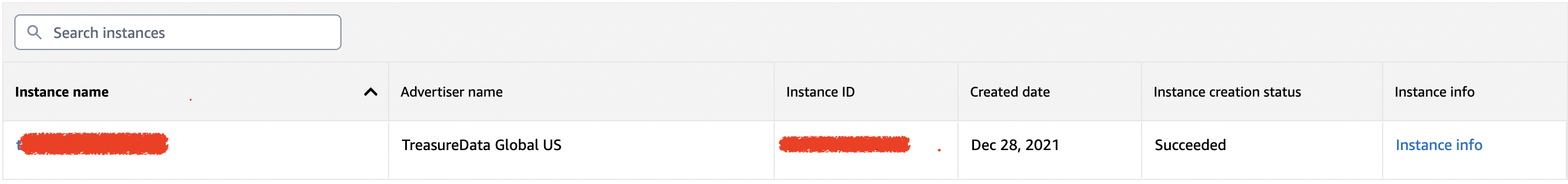

- Obtain the Amazon Marketing Cloud Instance ID from the Instance list.

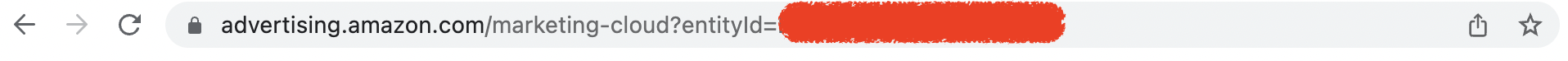

- View the Amazon Marketing Cloud Account ID assigned to parameter entityId.

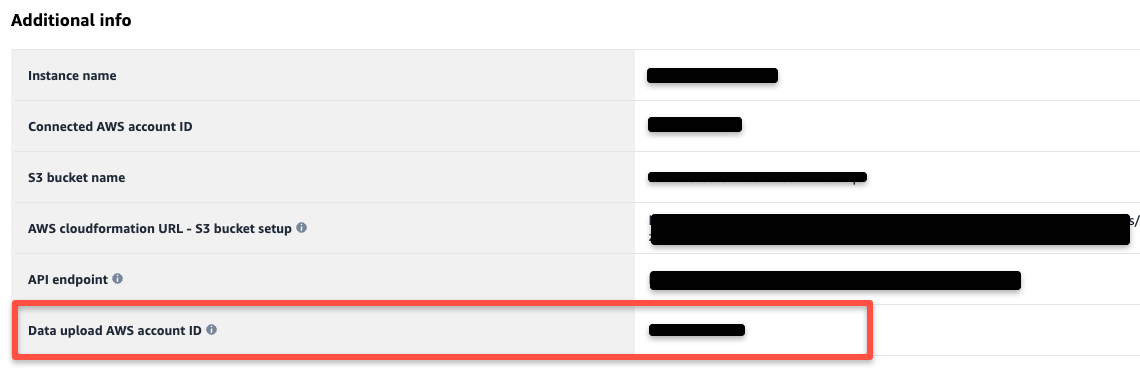

- You can get the Data upload AWS account ID from the Instance Info page.

During the first activation, you can create data sets on the fly. Subsequent runs will reuse the dataset without manual intervention. This requires the dataset definition to be configured.

The following are examples of dataset definitions. If your target dataset exists, you can skip this section.

Example of a simple Dimension dataset without any hashed PII columns

In this dataset, there are two columns: product_asin and product_sku. Note that if the schema from your query doesn't match this, the upload will result in an error.

{ "dataSet": {"columns":[{"name": "product_asin","columnType": "DIMENSION","dataType": "STRING"},{"name": "product_sku","columnType": "METRIC","dataType": "STRING"}],"dataSetId": "mydemosimledimensionds","description": "my demo dimension dataset"}}Example of a fact dataset with email as hashed PII and TCF as consent type

In this example, there are three columns: email (as hashedPII), record_date (isMainEventTime = True indicates this is a fact dataset), and tcf_string (is tcf string of consent).

{"dataSet": {"dataSetId": "mydemofactdswithidentity","columns": [{"columnType": "DIMENSION","dataType": "STRING","externalUserIdType": {"hashedPii": "EMAIL"},"name": "email"},{"columnType": "DIMENSION","dataType": "DATE","isMainEventTime": true,"name": "record_date"},{"columnType": "DIMENSION","dataType": "STRING","name": "tcf_string","consentType": "TCF"}],"countryCode": "US"}}Check out the External Reference section for more detailed Amazon guidelines. Contact our technical support team if you need any assistance.

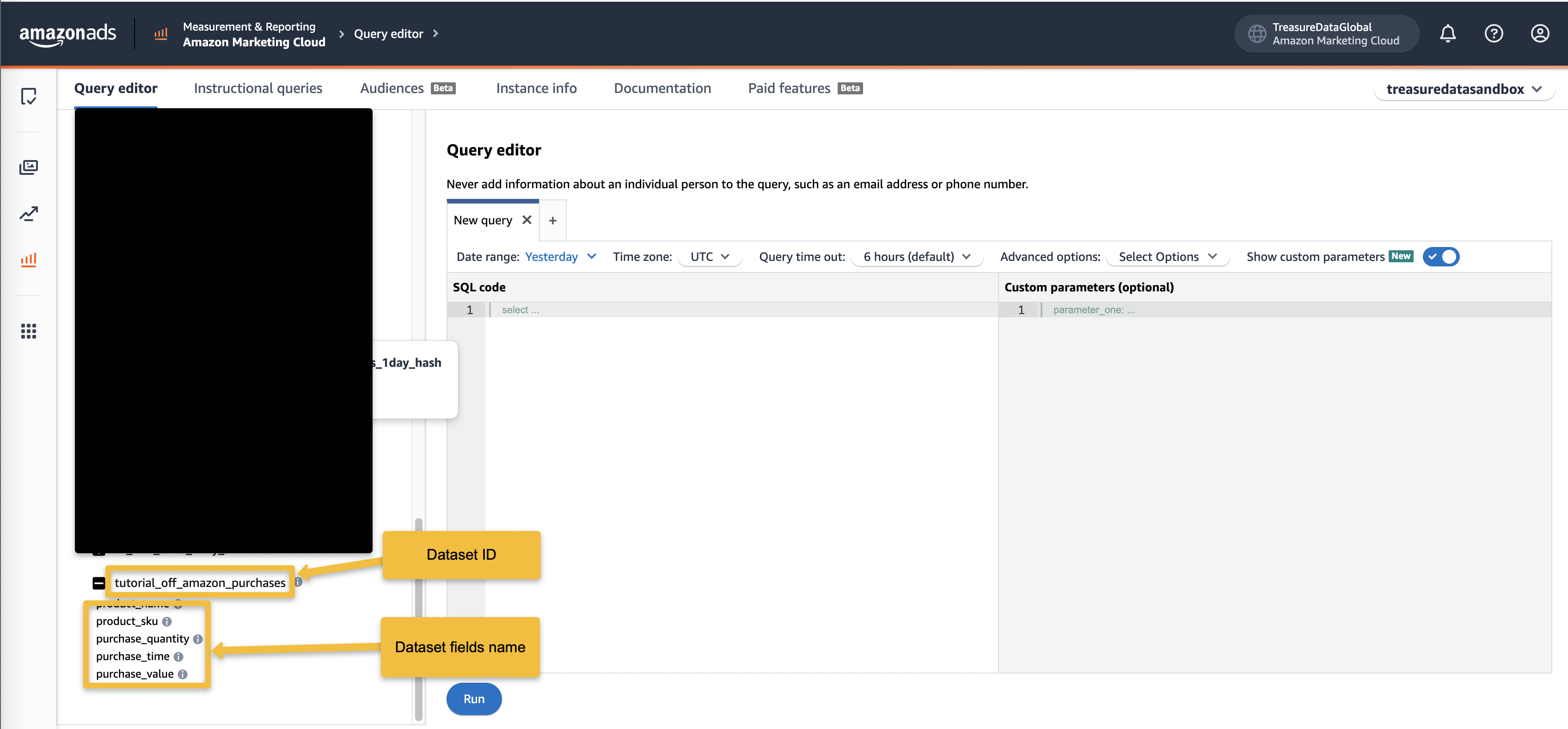

If you create the dataset using this integration, the Dataset ID specified will be re-used in the next activation runs; there is no requirement to obtain it from the Amazon Query editor page.duration.

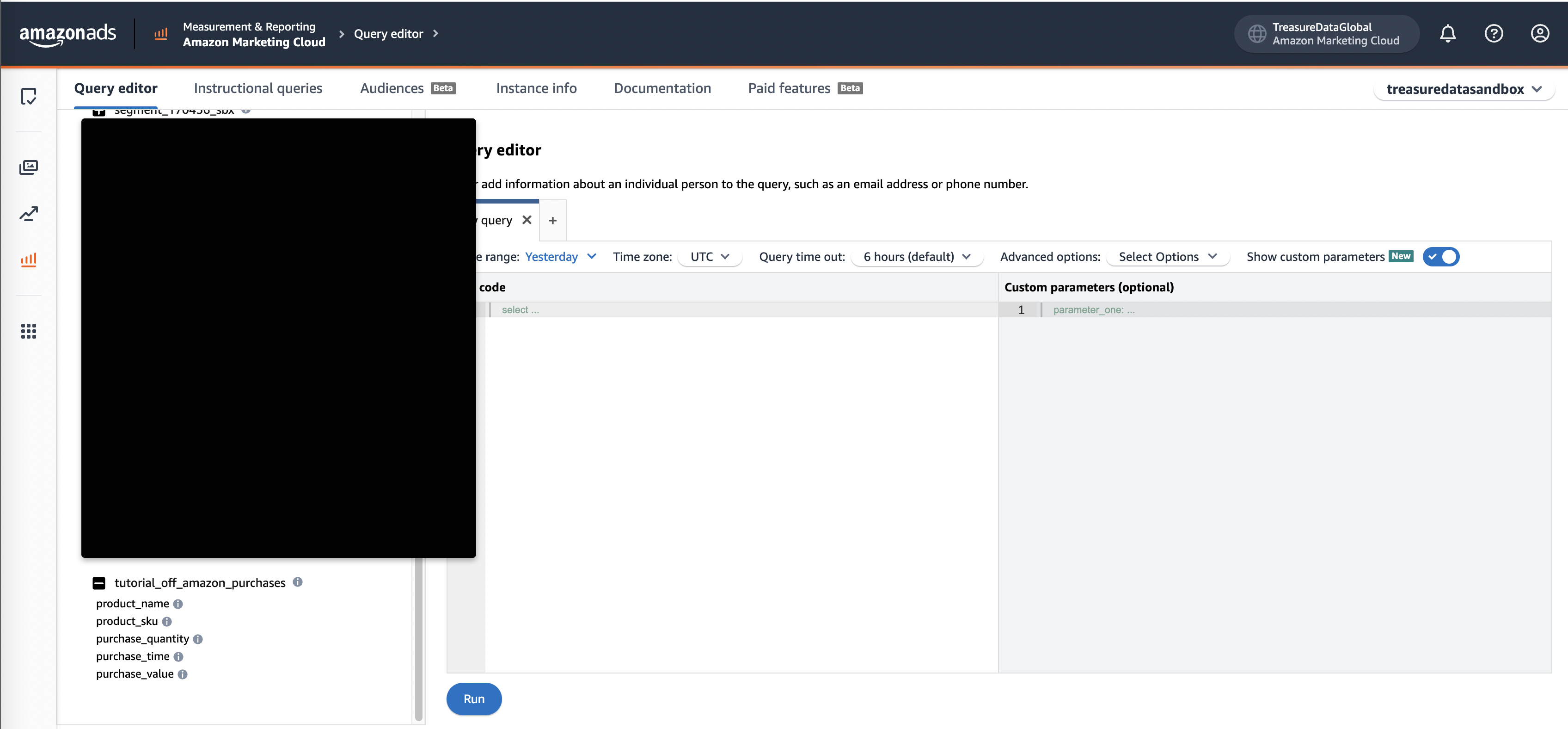

- From the Amazon Query editor page, you can obtain the Dataset ID and Dataset Fields Name.

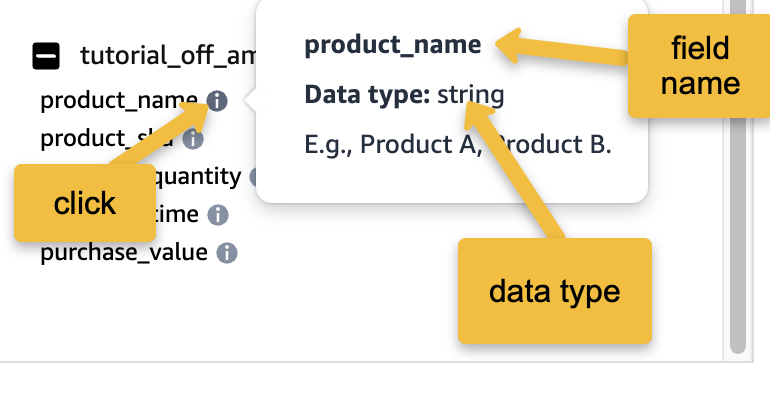

2. Select the info icon to obtain each filename's data type.

2. Select the info icon to obtain each filename's data type.

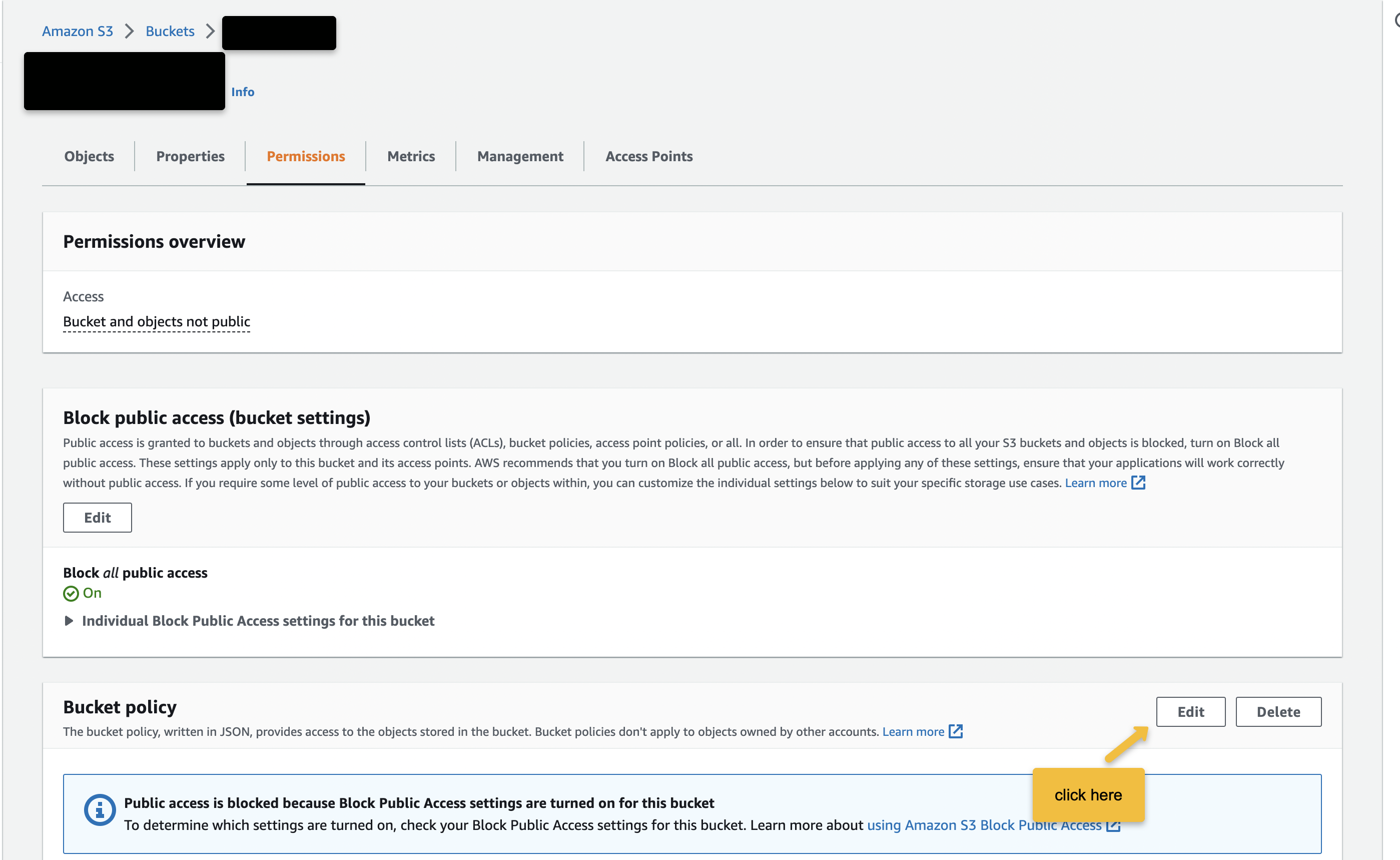

- Log in to S3, navigate to your bucket > permission tab, and select Edit beneath the Bucket policy.

2. Copy and paste this configuration after replacing your Data upload AWS account ID and Bucket name and select Save.

2. Copy and paste this configuration after replacing your Data upload AWS account ID and Bucket name and select Save.

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Principal": {

"AWS": "arn:aws:iam::{{Data upload AWS account ID}}:root"

},

"Action": [

"s3:GetObject",

"s3:GetObjectVersion",

"s3:ListBucket",

"s3:PutObject",

"s3:PutObjectAcl",

"s3:GetObjectTagging",

"s3:GetBucketTagging"

],

"Resource": [

"arn:aws:s3:::{{bucket name}}/*",

"arn:aws:s3:::{{bucket name}}"

]

}

]

}- Define tags for your bucket

To define a tag for your instance, perform the following steps:

- Navigate to your Amazon S3 console and click on the bucket name you want to associate tags.

- Click Properties and scroll to the Tags section. Click Edit.

- Click Add tag to define a key for the tag and provide a value for the key. For our purpose, define the key a" "instance"d" and type the identifier of the instance you use to upload data as the value for the key.

- Click Save.

Visit https://advertising.amazon.com/API/docs/en-us/guides/amazon-marketing-cloud/advertiser-data-upload/advertiser-data-s3-bucket for more details.

Before running your query, you must create and configure the data connection in Treasure Data. As part of the data connection, you provide authentication to access the integration.

- Open TD Console.

- Navigate to Integrations Hub > Catalog.

- Search for Amazon Marketing Cloud and select Amazon Marketing Cloud.

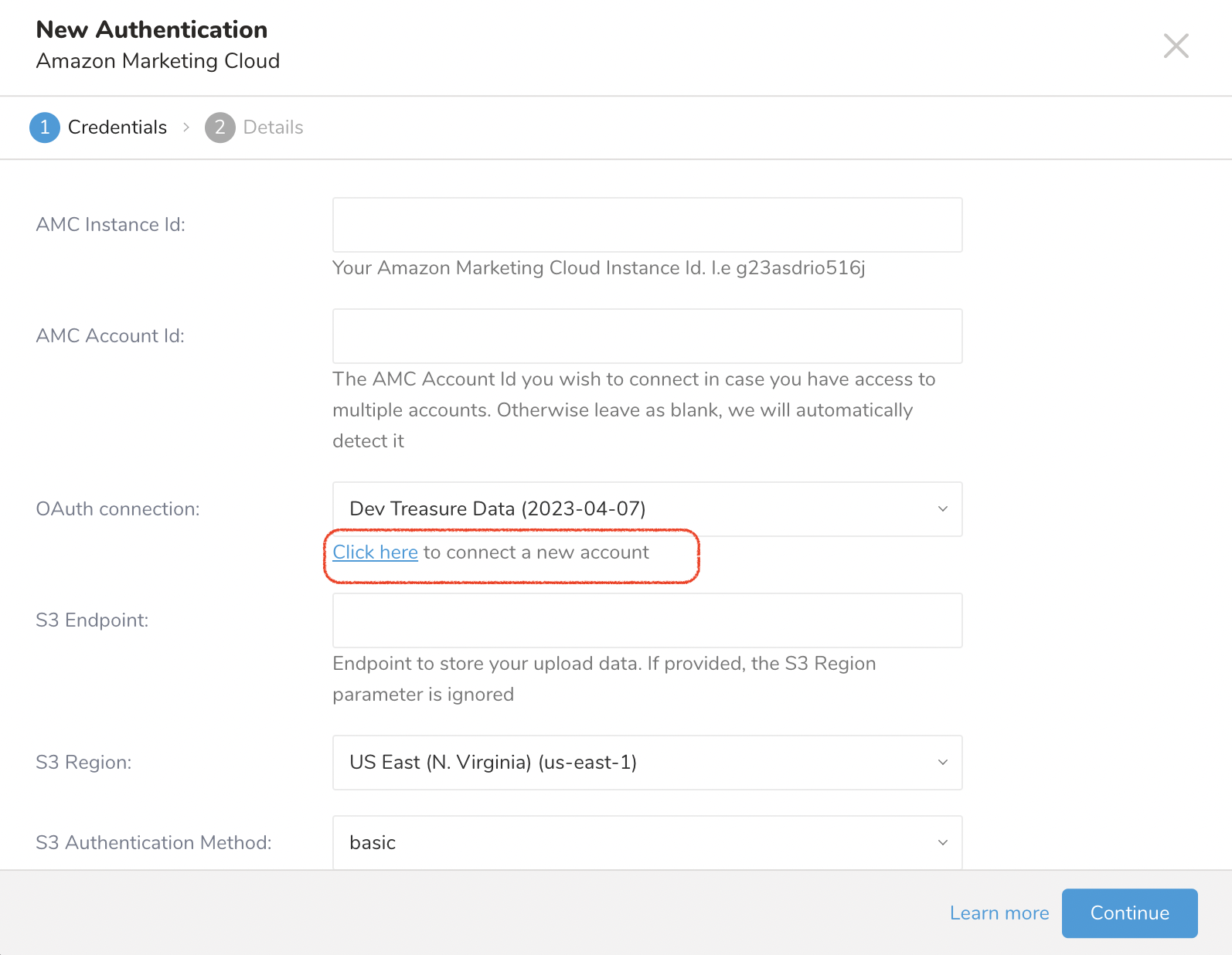

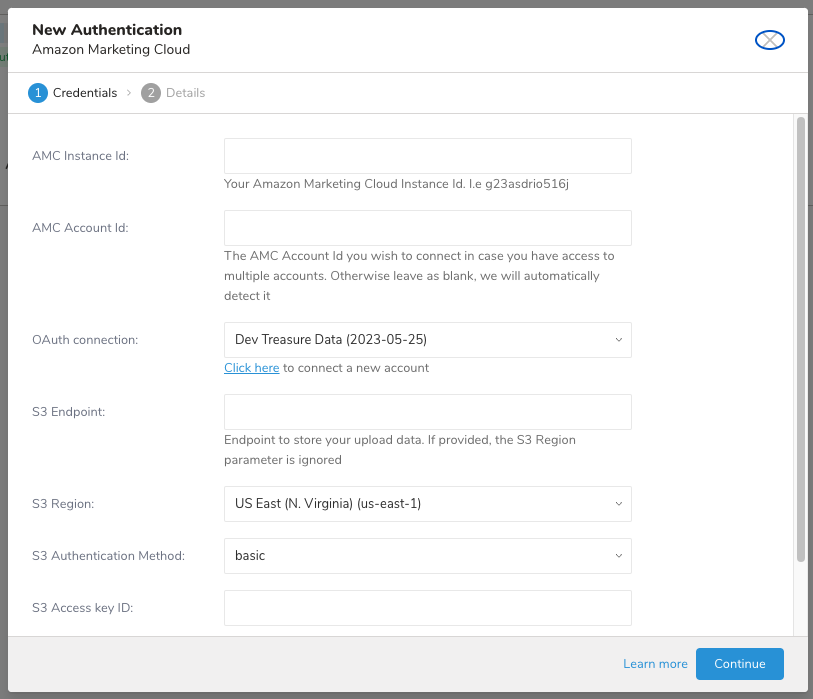

- Select the Click here link in New Authentication to connect to a new Amazon Account.

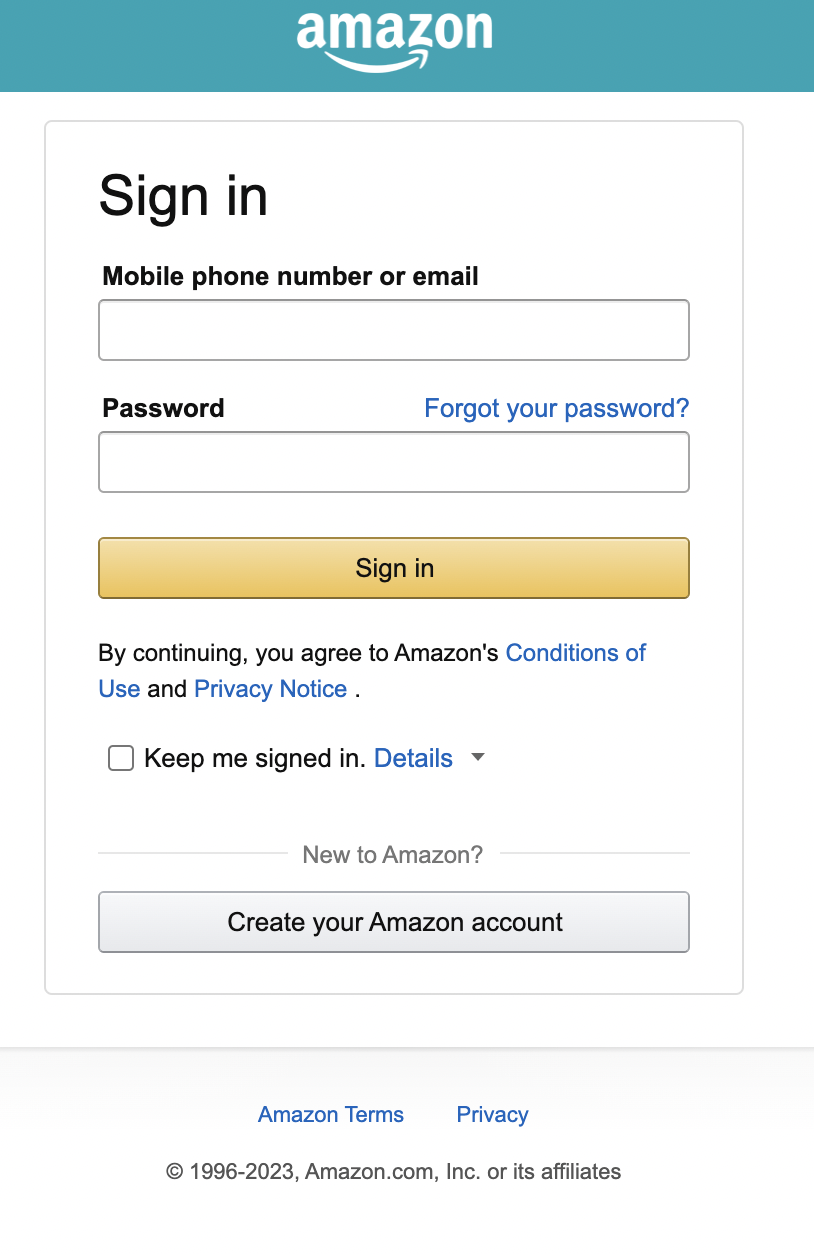

- You will be redirected to the Amazon Marketing Cloud instance, where you can log in using OAuth. Provide the username and password.

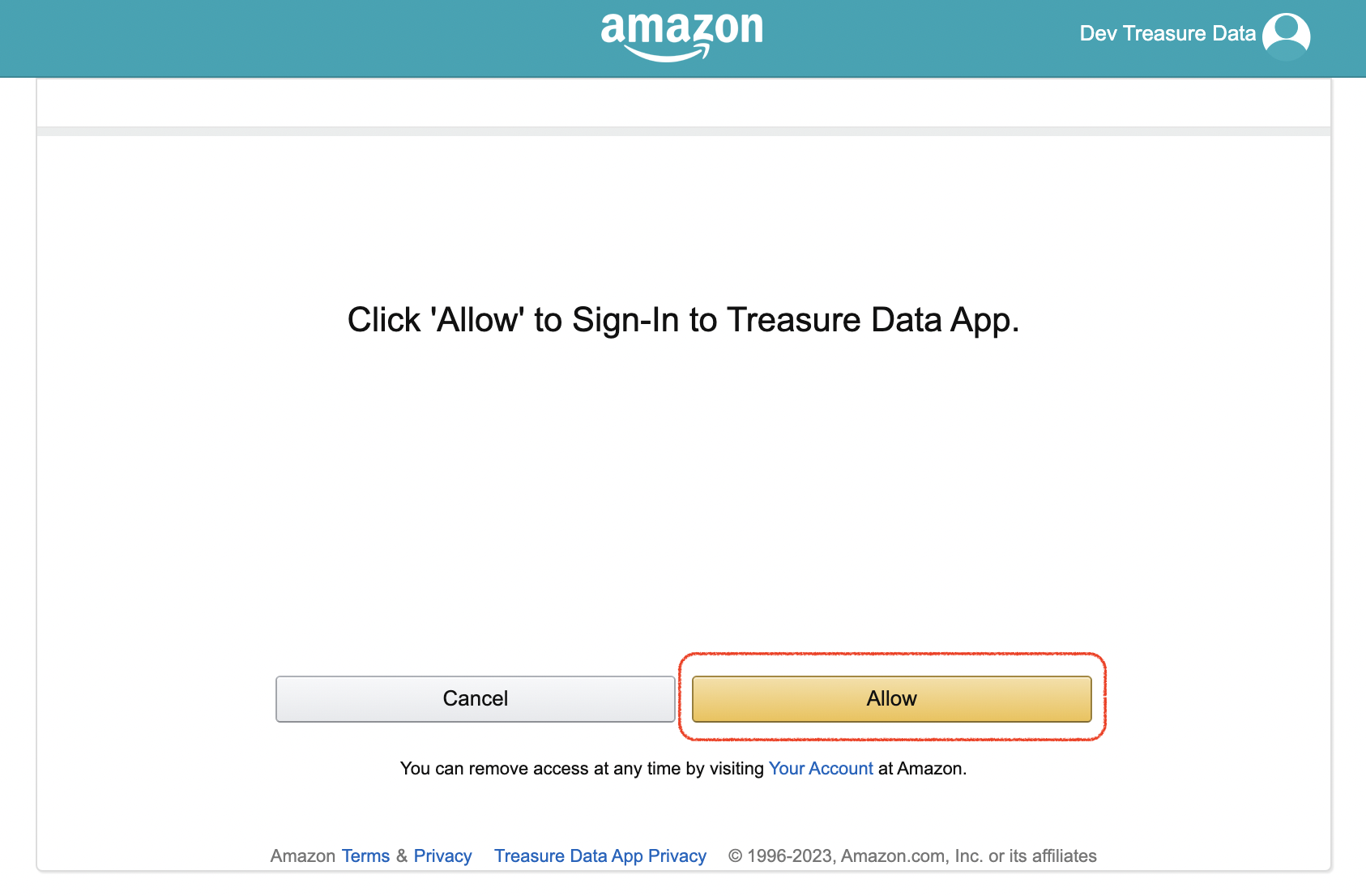

- Select Allow to accept consent screen, which redirects to the TD console.

- Fill in the required credentials fields.

- Select Continue.

- Type a name for your authentication.

- Select Done.

The following table describes the parameters for configuring the Amazon Marketing Cloud Export Integration.

| Parameter | Description |

|---|---|

| AMC Instance Id | Amazon Marketing Cloud Instance ID |

| AMC Account Id | Amazon Marketing Cloud Account ID. If you leave it blank, then the first account of the instance ID will be used. |

| S3 Endpoint | S3 service endpoint override. You can find region and endpoint information from the AWS service endpoints document. (For example, s3.ap-northeast-1.amazonaws.com). When specified, it overrides the region setting. |

| S3 Region | AWS Region |

| S3 Authentication Method | Choose from the following authentication methods:

|

| Access Key ID | AWS S3 issued Access Key ID |

| Secret Access Key | AWS S3 issued Secret Access Key |

| S3 Session token | Your temporary AWS Session Token |

| TD's Instance Profile | The TD Console provides this value. The numeric portion of the value constitutes the Account ID you use to create your IAM role. |

| Account ID | Your AWS Account ID |

| Your Role Name | Your AWS Role Name |

| External ID | Your Secret External ID |

| Duration In Seconds | Duration For The Temporary Credentials |

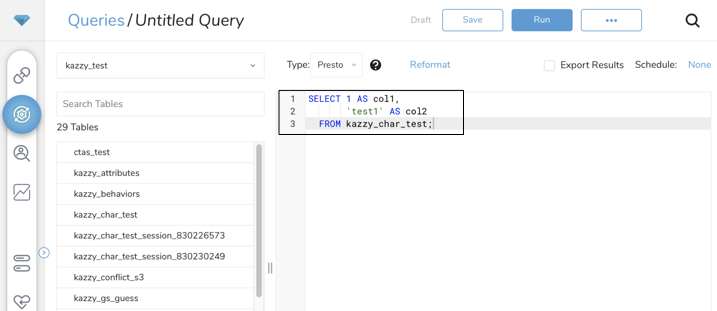

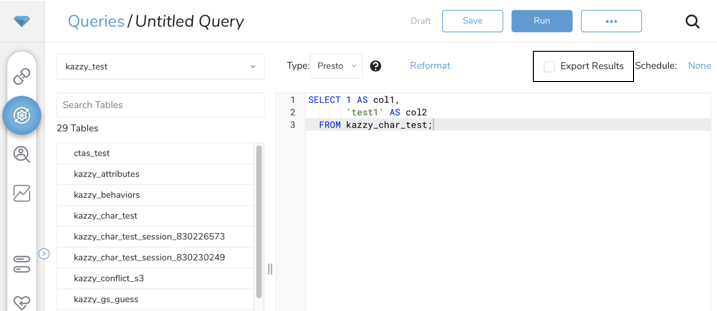

- Navigate to Data Workbench > Queries.

- Select New Query.

- Run the query to validate the result set.

- Select Export Results

Select an existing integration authentication.

Define any additional Export Results details. In your export integration content, review the integration parameters.

- Select Upload or Delete for Dataset Operation.

Check o" "Create new dataset if it does not exist "t" to create a new dataset on the fly. Provide the dataset definition described in the section. "Prepare Dataset as upload target."

The following table describes the configuration parameters for the Amazon Marketing Cloud export integration.

| Parameter | Required | Description |

|---|---|---|

| Target | yes | Support 2 target types:

|

| For "AMC Data" Type | ||

| API version | yes, the default is Latest | AMC version. Values include Latest only |

Dataset Operation | yes, if the target is the AMC dataset | Dataset operation: Upload: Upload data into dataset Delete: Delete identities from all existing data set |

| Dataset Identifier | yes, if the target is the AMC dataset and the dataset operation is upload | The dataset ID that data will be uploaded to |

| Dataset Definition | required when the option "Create dataset if not exist" is true | Define the target dataset in json format. |

Update Strategy | yes, if the API version is the latest | Values include ADDITIVE, FULL REPLACE, OVERLAP REPLACE, OVERLAP KEEP Visit https://advertising.amazon.com/API/docs/en-us/guides/amazon-marketing-cloud/advertiser-data-upload/advertiser-data-upload for more details. |

Country Code | The source country of your uploaded data is ISO_3166-1_alpha-2 format. Visit https://en.wikipedia.org/wiki/ISO_3166-1_alpha-2#Officially_assigned_code_elements for more details. | |

| S3 Bucket | yes, if the target is the AMC dataset and the dataset operation is upload | S3 bucket name |

| S3 Path | yes, if the target is the AMC dataset and the dataset operation is upload | The S3 path where you store data upload |

| File Name Prefix | File upload name prefix | |

| Wait Until The Operation Finish? | yes, if the target is the AMC dataset and the dataset operation is upload | Wait until the operation is finished on the AMC side |

| Failed On Upload Status Error | If checked, the operation will fail if there is an error in the upload status response from AMC. This option only applies when the "Wait Until The Operation Finish?" checkbox is selected. | |

| Clean Uploaded Files After Done? | Remove all uploaded files on S3 after they are done | |

| Add The IAB European Transparency & Consent Framework (TCF) | If checked, TCF consent column (tcf_string) will be added to the dataset if it does not already exist. Visit for more information. | |

| Add The Global Privacy Protocol (GPP) | If checked, GPP consent column (gpp_string) will be added to the dataset if it does not already exist. Visit for more information. | |

| Add The Amazon Consent Signal (ACS) | If checked, ACS consent columns (amazon_ad_storage, amazon_user_data) shall be added to the dataset if they do not already exist. Visit for more information. | |

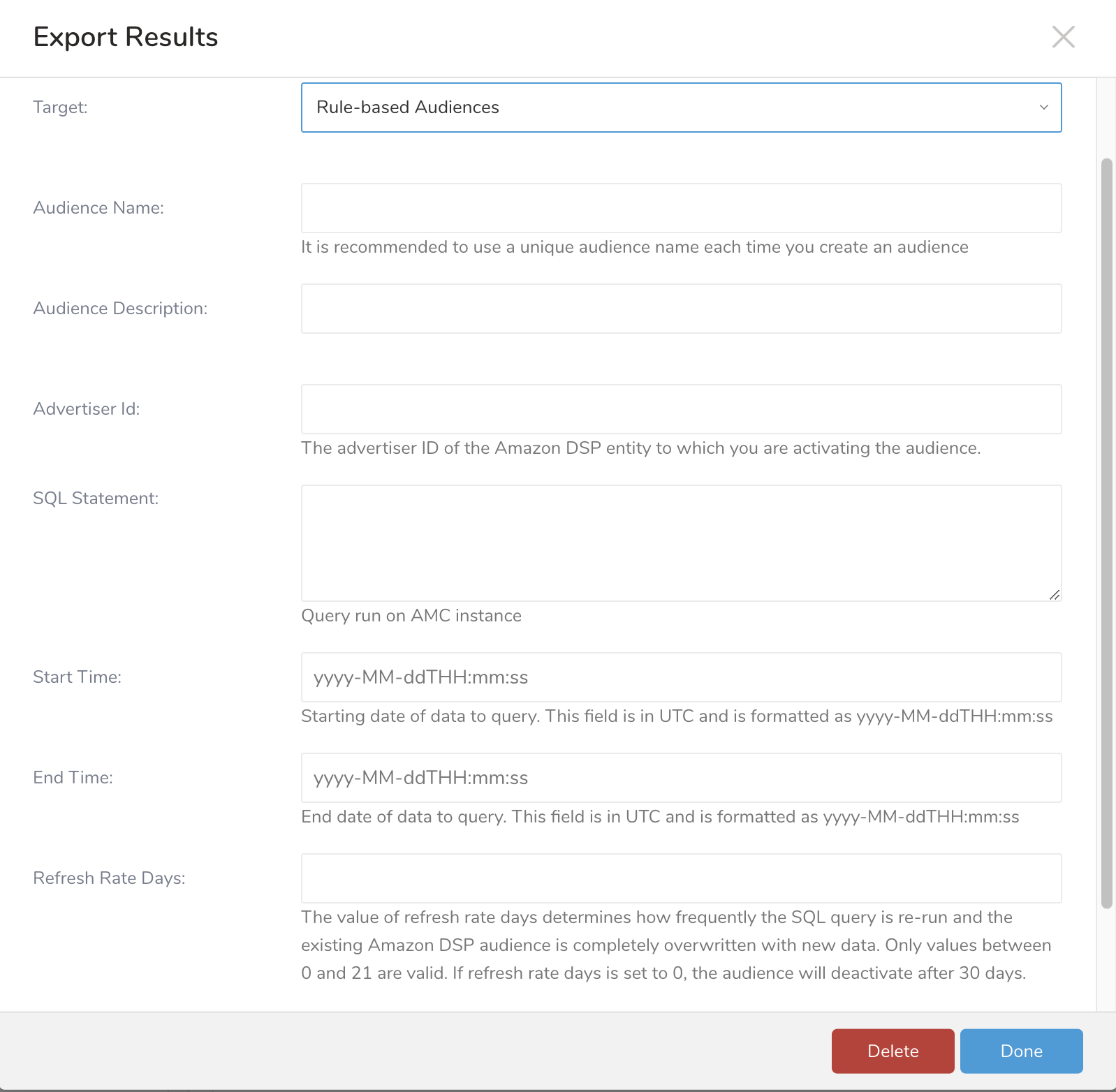

| For "Rule-based Audiences" Type | ||

| Audience Name | yes, if the target is Rule-based Audiences | |

| Audience Description | ||

| Advertiser Id | yes, if the target is Rule-based Audiences | Advertiser ID on Amazon DSP |

SQL Statement | yes, if the target is Rule-based Audiences | Query run on AMC instance. user_id (case sensitive) must always be part of the SELECT statement – the audience is constructed from user_ids. For example: select user_id from tbl; |

| Start Time | yes, if the target is Rule-based Audiences | Starting date of data to query |

| End Time | yes, if the target is Rule-based Audiences | Ending date of data to query |

Refresh Rate Days | The value of refresh rate days determines how frequently the SQL query is re-run and how completely the existing Amazon DSP audience is overwritten with new data. Only values between 0 and 21 are valid. If refresh rate days are set to 0, the audience will deactivate after 30 days. Default value: 21 | |

Time Window Relative | The time window relative parameter allows you to increment the date range of the SQL query by the refresh rate days value. Default value: false |

- To upload data to the dataset, your query result must have a column name matching the field name (case-sensitive). All mismatches between query columns and dataset fields are ignored.

- The main event time column is required when we upload data to the FACT dataset.

- All non-nullable fields of the dataset are required in the query.

- If a dataset field is non-nullable, and a row of result queries for this field has a null value, the row is skipped. For example:

- We have the FACT dataset tutorial_off_amazon_purchaseswith purchase_time as the main event time field. All fields are non-nullable.

Sample query:

SELECT

product_name,

product_sku,

product_quantity,

purchase_time,

purchase_value

FROM

table_name;Alternatively, you can use an alias to match your query column name with dataset fields. Sample query:

SELECT

column_a AS product_name,

column_b AS product_sku,

column_c AS product_quantity,

column_d AS purchase_time,

column_e AS purchase_value

FROM

table_nameNote:

- When Add The IAB European Transparency & Consent Framework (TCF) is checked, the tcf_string field is required in the query and must be of string data type.

- When Add The Global Privacy Protocol (GPP) is checked, the gpp_string field is required in the query and must be of string data type.

- When Add The Amazon Consent Signal (ACS) is checked, the amazon_ad_storage and amazon_user_data fields are required in the query and must be of string data type. The amazon_ad_storage and amazon_user_data fields accept the following string values: "GRANTED", "DENIED", or "NULL".

The data type of each column from the query result must be compatible with the dataset field.

| Column Data Type | Dataset Field Data type |

|---|---|

| STRING | STRING |

| DOUBLE | DECIMAL |

| LONG | INTEGER |

| LONG | LONG |

| TIMESTAMP | TIMESTAMP (yyyy-MM-ddThh:mm:ssZ) |

| TIMESTAMP | DATE (yyyy-MM-dd) |

| LONG (epoch second) | TIMESTAMP (yyyy-MM-ddThh:mm:ssZ) |

| LONG (epoch second) | DATE (yyyy-MM-dd) |

To delete identities from all existing data sets, your query result must have at least one identity column name. The identity column name should include first_name, last_name, email, phone, address, city, state, zip, andcountry_code**.** Columns with other names are ignored. Sample query:

SELECT

first_name,

last_name,

email

FROM

table_nameCreating an Audience doesn't require data from TD. It runs a query from the dataset and creates an audience based on the query result. So, we only use "Select 1" to trigger a job from the TD side. We don't allow any query to return more than one row from the TD side.

You can also send segment data to the target platform by creating an activation in the Audience Studio.

- Navigate to Audience Studio.

- Select a parent segment.

- Open the target segment, right-mouse click, and then select Create Activation.

- In the Details panel, enter an Activation name and configure the activation according to the previous section on Configuration Parameters.

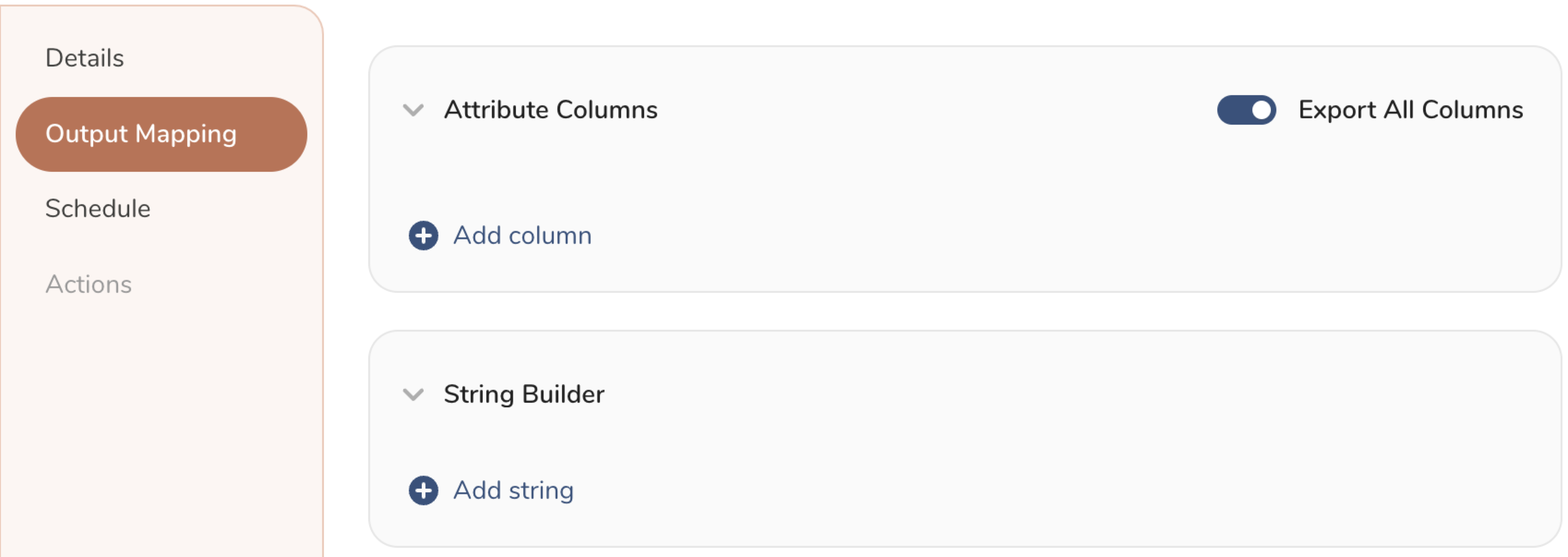

- Customize the activation output in the Output Mapping panel.

- Attribute Columns

- Select Export All Columns to export all columns without making any changes.

- Select + Add Columns to add specific columns for the export. The Output Column Name pre-populates with the same Source column name. You can update the Output Column Name. Continue to select + Add Columnsto add new columns for your activation output.

- String Builder

- + Add string to create strings for export. Select from the following values:

- String: Choose any value; use text to create a custom value.

- Timestamp: The date and time of the export.

- Segment Id: The segment ID number.

- Segment Name: The segment name.

- Audience Id: The parent segment number.

- + Add string to create strings for export. Select from the following values:

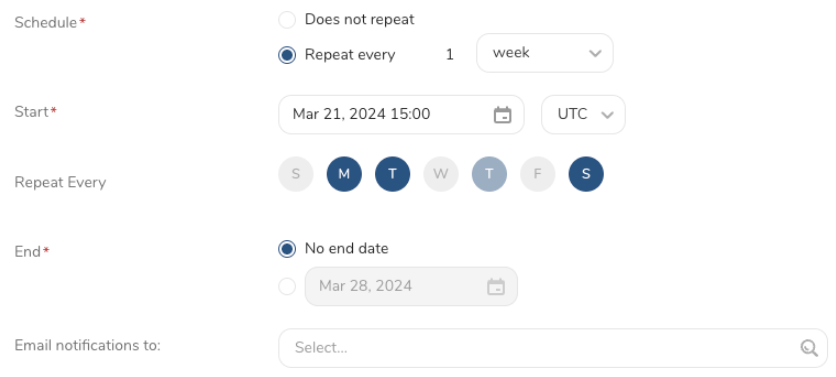

- Set a Schedule.

- Select the values to define your schedule and optionally include email notifications.

- Select Create.

If you need to create an activation for a batch journey, review Creating a Batch Journey Activation.

You can use Scheduled Jobs with Result Export to periodically write the output result to a target destination that you specify.

Treasure Data's scheduler feature supports periodic query execution to achieve high availability.

When two specifications provide conflicting schedule specifications, the specification requesting to execute more often is followed while the other schedule specification is ignored.

For example, if the cron schedule is '0 0 1 * 1', then the 'day of month' specification and 'day of week' are discordant because the former specification requires it to run every first day of each month at midnight (00:00), while the latter specification requires it to run every Monday at midnight (00:00). The latter specification is followed.

Navigate to Data Workbench > Queries

Create a new query or select an existing query.

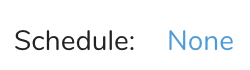

Next to Schedule, select None.

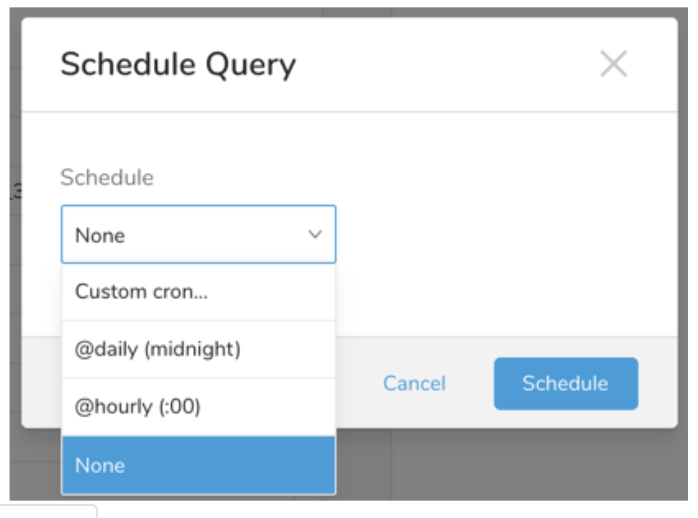

In the drop-down, select one of the following schedule options:

Drop-down Value Description Custom cron... Review Custom cron... details. @daily (midnight) Run once a day at midnight (00:00 am) in the specified time zone. @hourly (:00) Run every hour at 00 minutes. None No schedule.

| Cron Value | Description |

|---|---|

0 * * * * | Run once an hour. |

0 0 * * * | Run once a day at midnight. |

0 0 1 * * | Run once a month at midnight on the morning of the first day of the month. |

| "" | Create a job that has no scheduled run time. |

* * * * *

- - - - -

| | | | |

| | | | +----- day of week (0 - 6) (Sunday=0)

| | | +---------- month (1 - 12)

| | +--------------- day of month (1 - 31)

| +-------------------- hour (0 - 23)

+------------------------- min (0 - 59)The following named entries can be used:

- Day of Week: sun, mon, tue, wed, thu, fri, sat.

- Month: jan, feb, mar, apr, may, jun, jul, aug, sep, oct, nov, dec.

A single space is required between each field. The values for each field can be composed of:

| Field Value | Example | Example Description |

|---|---|---|

| A single value, within the limits displayed above for each field. | ||

A wildcard '*' to indicate no restriction based on the field. | '0 0 1 * *' | Configures the schedule to run at midnight (00:00) on the first day of each month. |

A range '2-5', indicating the range of accepted values for the field. | '0 0 1-10 * *' | Configures the schedule to run at midnight (00:00) on the first 10 days of each month. |

A list of comma-separated values '2,3,4,5', indicating the list of accepted values for the field. | 0 0 1,11,21 * *' | Configures the schedule to run at midnight (00:00) every 1st, 11th, and 21st day of each month. |

A periodicity indicator '*/5' to express how often based on the field's valid range of values a schedule is allowed to run. | '30 */2 1 * *' | Configures the schedule to run on the 1st of every month, every 2 hours starting at 00:30. '0 0 */5 * *' configures the schedule to run at midnight (00:00) every 5 days starting on the 5th of each month. |

A comma-separated list of any of the above except the '*' wildcard is also supported '2,*/5,8-10'. | '0 0 5,*/10,25 * *' | Configures the schedule to run at midnight (00:00) every 5th, 10th, 20th, and 25th day of each month. |

- (Optional) You can delay the start time of a query by enabling the Delay execution.

Save the query with a name and run, or just run the query. Upon successful completion of the query, the query result is automatically exported to the specified destination.

Scheduled jobs that continuously fail due to configuration errors may be disabled on the system side after several notifications.

(Optional) You can delay the start time of a query by enabling the Delay execution.

Within Treasure Workflow, you can specify the use of this integration to export data.

Learn more about Exporting Data with Parameters.

_export:

td:

database: amc_db

+amc_task:

td>: upload.sql

database: ${td.database}

result_connection: new_amc_auth

result_settings:

type: amazon_marketing_cloud

target: dataset

operation: upload

dataset_id: dataset_id

bucket: bucket_name

path_prefix: path_prefix/

file_name_prefix: file_name_prefix

wait_until_finish: true

failed_on_upload_status_error: false

clean_upload_file: true

add_consent_tcf: true

add_consent_gpp: true

add_consent_acs: true_export:

td:

database: amc_db

+amc_task:

td>: upload.sql

database: ${td.database}

result_connection: new_amc_auth

result_settings:

type: amazon_marketing_cloud

target: dataset

operation: upload

dataset_id: dataset_id

create_dataset: true

dataset_definition: dataset_definition_in_json_string

bucket: bucket_name

path_prefix: path_prefix

file_name_prefix: file_name_prefix

wait_until_finish: true

failed_on_upload_status_error: false

clean_upload_file: true

add_consent_tcf: true

add_consent_gpp: true

add_consent_acs: true_export:

td:

database: amc_db

+amc_task:

td>: delete.sql

database: ${td.database}

result_connection: new_amc_auth

result_settings:

type: amazon_marketing_cloud

target: dataset

operation: delete_export:

td:

database: amc_db

+amc_task:

td>: audience.sql

database: ${td.database}

result_connection: new_amc_auth

result_settings:

type: amazon_marketing_cloud

target: rule_based_audiences

amc_instance_id: amc_instance_id

amc_account_id: amc_account_id

audience_name: "test_audience"

audience_description: "test audience description"

advertiser_id: 123

query: "SELECT user_id FROM dsp_impressions"

time_window_start: "2023-06-25T00:00:00"

time_window_end: "2023-07-25T00:00:00"

refresh_rate_days: 1

time_window_relative: false- Fact vs. Dimension datasets: https://docs.aws.amazon.com/solutions/latest/amazon-marketing-cloud-uploader-from-aws/amc-fact-compared-with-dimension-datasets.html

- Dataset creation guideline: https://advertising.amazon.com/API/docs/en-us/guides/amazon-marketing-cloud/advertiser-data-upload/advertiser-data-sets