You can write job results from Treasure Data directly to Airship audience lists. In Airship, you can then use these lists to target specific users.

- Basic Knowledge of Treasure Data.

- Basic knowledge of Airship, Airship audience lists, attributes, and custom events.

If your security policy requires IP whitelisting, you must add Treasure Data's IP addresses to your allowlist to ensure a successful connection.

Please find the complete list of static IP addresses, organized by region, at the following document

- You can upload up to 10 million records.

- You can create up to 100 uploaded lists.

- Due to API limitations, you can override list content, but you cannot append to lists

In Treasure Data, you must create and configure the data connection before running your query. As part of the data connection, you provide authentication to access the integration.

- Open TD Console.

- Navigate to Integrations Hub > Catalog.

- Click the search icon on the far right of the Catalog screen and enter Airship.

- Hover over the AirShip connector and select Create Authentication.

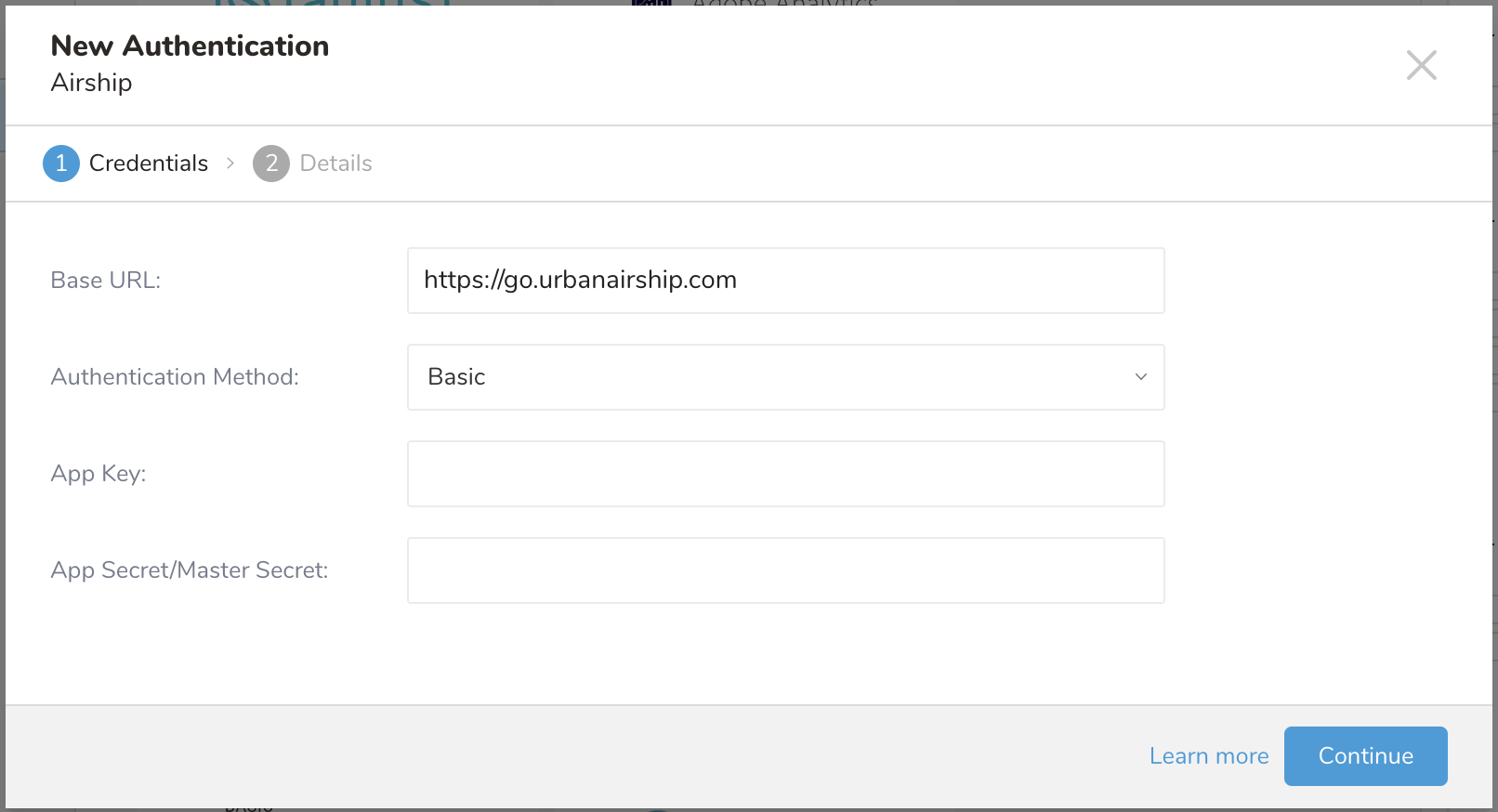

The New Authentication dialog opens.

5. Enter the Base URL:

5. Enter the Base URL:

- Airship North American cloud site: https://go.urbanairship.com

- Airship European cloud site: https://go.airship.eu

- Choose one of the following authentication methods

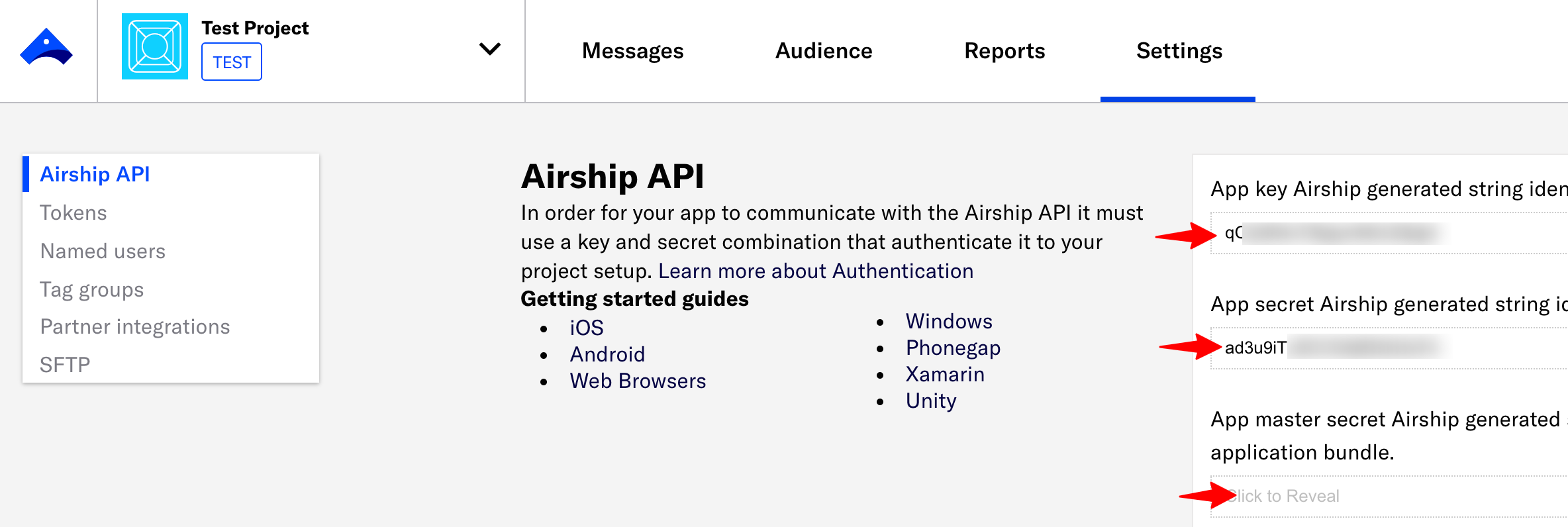

- In the Airship project dashboard, select Settings > APIs & Integrations.

- Enter the App key, App secret, and App master secret.

- Enter authentication credentials. If authentication is for custom events upload, access token method with app key is required. See below how to obtain App key, App secret and token.

- Enter a name for your connection.

- Select Continue.

This integration supports users syncing different types of data to Airship.

| Configurations | Options | Description |

|---|---|---|

| Data Type | Static List | Upload static list to Airship Audience Lists (Static list is automatically created if the target doesn't exist) |

| Non-JSON Attributes | Sync values to text, number or date time attributes on Airship. | |

| JSON Attributes | Sync values to a json attribute on Airship | |

| Custom Events | Sync custom events to Airship |

To configure the integration:

- Open the TD Console.

- Navigate to Data Workbench > Queries.

- Click New Query to create a new query or search for a saved query that you plan to use to export data.

- At the top of your query editor, select Export Results.

Refer to the sample query below to see how configure an export job to upload your list of users and channels to an Airship audience list.

| Parameter | Description |

|---|---|

| Data Type | Static list |

| List Name (required) | Audience list name |

| Description (optional) | Audience list description |

| Skip Empty Records (optional) | The default is True. If the query result contains an empty or null column, the row will be skipped, otherwise, the job will fail and you need to remove empty records manually. |

Sample query

SELECT identifier_type, identifier FROM table my_tableSample query result

identifier_type, identifiernamed_user,customer-42named_user,room-27ios_channel,5i4c91s5-9tg2-k5zc-m592150z5634web_channel,d132f5b7-abcf-4920-aeb3-9132ddac3d5aandroid_channel,52b2b587-0152-4134-a8a0-38ae6933c88aemail_channel,ab1a81e3-5af3-4c04-a7ae-d676960e6684open_channel,6bcf3e63-a38a-44d8-8b0d-2fb5941e74absms_channel,ab1a81e3-aaf3-ac04-a7ae-a676960e6684Refer to the sample query below to see how to sync values to text, number, or date and time attributes to an Airship audience list.

| Parameter | Description |

|---|---|

| Data Type | Non-JSON attributes |

| Target | Select either Named Users or Channel. |

| Action | - Batch CSV upload -- Treasure Data recommends the Batch CSV upload for a large number of records. This mode is used to add and update values of custom attributes only. - Set or Remove -- These options use a single upload call per record. This can result in faster propagation of data on Airship, but slower overall execution time. |

| Skip Empty Records (optional) | The default is True. If the query result contains an empty or null column, the row will be skipped, otherwise, the job will fail and you need to remove empty records manually. |

Sample Query for Named Users

In this example, fav_color and fav_sport should be defined as attribute keys on Airship.

SELECT n.named_user AS named_user, a.fav_color as fav_color, a.fav_sport as fav_sportFROM named_users n JOIN attributes a ON n.id = a.idSample Query Results

named_user, fav_color, fav_sportcustomer-42, red, footballcustomer-22, black, tennisSample Query for Channels

In this example:

- fav_color, fav_sport should be defined as attribute keys on Airship.

- Identifier_type expects one of the following: amazon_channel, android_channel, channel, email_address, ios_channel, sms_id, web_channel

- If identifier_type is sms_id, the column sender is expected as additional information is required. (Otherwise that record is invalid.)

- Identifer_value expects the id of that channel

SELECT c.channel_id AS identifier_value, 'email_address' AS identifier_type, a.fav_color as fav_color, a.fav_sport as fav_sportFROM channels c JOIN attributes a ON c.id = a.idSample Query Results

identifier_type, identifier_value, fav_color, fav_sportemail_address, customer11@email.com, red, footballemail_address, customer21@email.com, black, footballRefer to the sample query below to see how to sync JSON attributes to an Airship audience list.

| Parameter | Description |

|---|---|

| Data Type | JSON Attributes |

| Target | Select either Named Users or Channel |

| Action | - Set or Remove -- These options use a single upload call per record. This can result in faster propagation of data on Airship, but slower overall execution time. |

| Attribute name | The name of the JSON attribute. Only one attribute can be set at a time. |

| Skip Empty Records (optional) | The default is True. If the query result contains an empty or null column, the row will be skipped, otherwise, the job will fail and you need to remove empty records manually. |

| Json Composer (optional) | Default is true. Json composer composes json value from provided properties fields. Otherwise, a property field with valid json value expected. The property field name must be the same with the Attribute Name we want to update. |

Sample query

In this example, the integration expects the output of the query to be structured in a pivot or horizontal format. This means that the data for each reservation is presented in a single row, with multiple columns representing different attributes of the flights associated with that reservation. For example, if a customer has two flights, the output will list all relevant details for both flights in a single row, with columns specifically designated for each flight's attributes (e.g., flights[0].departure_port, flights[1].departure_port, etc.).

This approach contrasts with a vertical format, where each flight's details would be listed in separate rows. However, when collecting records in batches, if the records of an array of objects are described vertically, there may be misses in collecting array items belonging to same object.

Note that the integration creates the JSON object that is sent to Airship. When json composer flag is off, a column name of "attribute name" with value in JSON format is expected. The valid values are sent to Airship as attributes.

Json composer is on

WITH flights_with_index AS (

SELECT

f.reservation_id,

f.departure_port,

f.departure_time,

f.arrival_port,

f.arrival_time,

ROW_NUMBER() OVER (

PARTITION BY f.reservation_id

ORDER BY f.departure_time

) - 1 AS flight_index

FROM flights f

JOIN reservations r

ON f.reservation_id = r.reservation_id

)

SELECT

'named_users' AS identifier_type,

r.customer_name AS identifier_value,

t.reservation_id AS instance_id,

MAX(CASE WHEN t.flight_index = 0 THEN t.departure_port END) AS "flights[0].departure_port",

MAX(CASE WHEN t.flight_index = 0 THEN t.departure_time END) AS "flights[0].departure_time",

MAX(CASE WHEN t.flight_index = 0 THEN t.arrival_port END) AS "flights[0].arrival_port",

MAX(CASE WHEN t.flight_index = 0 THEN t.arrival_time END) AS "flights[0].arrival_time",

MAX(CASE WHEN t.flight_index = 1 THEN t.departure_port END) AS "flights[1].departure_port",

MAX(CASE WHEN t.flight_index = 1 THEN t.departure_time END) AS "flights[1].departure_time",

MAX(CASE WHEN t.flight_index = 1 THEN t.arrival_port END) AS "flights[1].arrival_port",

MAX(CASE WHEN t.flight_index = 1 THEN t.arrival_time END) AS "flights[1].arrival_time"

FROM flights_with_index t

JOIN reservations r

ON t.reservation_id = r.reservation_id

GROUP BY

r.customer_name,

t.reservation_id

ORDER BY

instance_id;Results

| identifier_type | identifier_value | instance_id | flights[0].departure_port | flights[0].departure_time | flights[0].arrival_port | flights[0].arrival_time | flights[1].departure_port | flights[1].departure_time | flights[1].arrival_port | flights[1].arrival_time |

|---|---|---|---|---|---|---|---|---|---|---|

| named_users | customer-1 | rev1 | PDX | 2025-01-08 00:00:00.000 | LAX | 2025-01-09 00:00:00.000 | LAX | 2025-01-09 00:00:00.000 | PDX | 2025-01-10 00:00:00.000 |

| named_users | customer-2 | rev2 | SGN | 2025-01-08 00:00:00.000 | BKK | 2025-01-09 00:00:00.000 | BKK | 2025-01-09 00:00:00.000 | SGN | 2025-01-10 00:00:00.000 |

| named_users | customer-3 | rev3 | SGN | 2025-01-08 00:00:00.000 | TDN | 2025-01-09 00:00:00.000 | TDN | 2025-01-09 00:00:00.000 | SGN | 2025-01-10 00:00:00.000 |

| named_users | customer-4 | rev4 | BKK | 2025-01-09 00:00:00.000 | TST | 2025-01-10 00:00:00.000 | ||||

| named_users | customer-5 | rev5 | ABC | 2025-01-09 00:00:00.000 | XTZ | 2025-01-10 00:00:00.000 | ||||

| named_users | customer-6 | rev6 | SSN | 2025-01-09 00:00:00.000 | BKK | 2025-01-10 00:00:00.000 |

Json composer is off

SELECT

named_user,

instance_id,

booking

FROM (

VALUES

(

'customer-1',

'u111',

'{"flights":[{"departure_port":"PDX","departure_time":"2025-01-08 00:00:00.000","arrival_port":"LAX","arrival_time":"2025-01-09 00:00:00.000"},{"departure_port":"LAX","departure_time":"2025-01-09 00:00:00.000","arrival_port":"PDX","arrival_time":"2025-01-10 00:00:00.000"}]}'

),

(

'customer-2',

'u222',

'{"flights":[{"departure_port":"PDX","departure_time":"2025-01-08 00:00:00.000","arrival_port":"LAX","arrival_time":"2025-01-09 00:00:00.000"},{"departure_port":"LAX","departure_time":"2025-01-09 00:00:00.000","arrival_port":"PDX","arrival_time":"2025-01-10 00:00:00.000"}]}'

)

) AS tbl(named_user, instance_id, booking);Results

| named_user | instance_id | booking |

|---|---|---|

| customer-1 | u111 | {"flights":[{"departure_port":"PDX","departure_time":"2025-01-08 00:00:00.000","arrival_port":"LAX","arrival_time":"2025-01-09 00:00:00.000"},{"departure_port":"LAX","departure_time":"2025-01-09 00:00:00.000","arrival_port":"PDX","arrival_time":"2025-01-10 00:00:00.000"}]} |

| customer-2 | u222 | {"flights":[{"departure_port":"PDX","departure_time":"2025-01-08 00:00:00.000","arrival_port":"LAX","arrival_time":"2025-01-09 00:00:00.000"},{"departure_port":"LAX","departure_time":"2025-01-09 00:00:00.000","arrival_port":"PDX","arrival_time":"2025-01-10 00:00:00.000"}]} |

Refer to the sample query below to see how to sync Custom Events to an Airship.

| Parameter | Description |

|---|---|

| Data Type | Custom Events |

Json Composer (optional) | Default is false. Properties field with valid json value expected. Otherwise, json composer composes json value from provided properties fields. |

| Skip Empty Records (optional) | The default is True. If the query result contains an empty or null column, the row will be skipped, otherwise, the job will fail and you need to remove empty records manually. |

Sample query

In this example, the integration expects the output of the query to be structured in a pivot or horizontal format. This means that the data for each purchase is presented in a single row, with multiple columns representing different attributes of the items associated with that purchase. For example, if a customer has two items, the output will list all relevant details for both items in a single row, with columns specifically designated for each item's attributes (e.g., items[0].text, items[1].text, etc.).

This approach contrasts with a vertical format, where each item's details would be listed in separate rows. However, when collecting records in batches, if the records of an array of objects are described vertically, there may be misses in collecting array items belonging to same object.

Note that the integration creates the JSON object that is sent to Airship.

WITH items_with_index AS (

SELECT

f.purchase_id,

f.text,

f.price,

ROW_NUMBER() OVER (

PARTITION BY f.purchase_id

ORDER BY f.text

) - 1 AS item_index

FROM purchase_items f

)

SELECT

r.identifier_type,

r.identifier_value,

r.name,

r.interaction_id,

r.interaction_type,

r.brand AS "properties.brand",

r.value,

r.unique_id,

r.occurred,

MAX(CASE WHEN t.item_index = 0 THEN t.text END) AS "properties.items[0].text",

MAX(CASE WHEN t.item_index = 0 THEN t.price END) AS "properties.items[0].price",

MAX(CASE WHEN t.item_index = 1 THEN t.text END) AS "properties.items[1].text",

MAX(CASE WHEN t.item_index = 1 THEN t.price END) AS "properties.items[1].price",

MAX(CASE WHEN t.item_index = 2 THEN t.text END) AS "properties.items[2].text",

MAX(CASE WHEN t.item_index = 2 THEN t.price END) AS "properties.items[2].price"

FROM items_with_index t

JOIN purchase r

ON t.purchase_id = r.id

GROUP BY

r.identifier_type,

r.identifier_value,

r.name,

r.interaction_id,

r.interaction_type,

r.brand,

r.value,

r.unique_id,

r.occurred;Sample Query Results

| identifier_type | identifier_value | name | interaction_id | interaction_type | properties.brand | value | unique_id | occurred | properties.items[0].text | properties.items[0].price | properties.items[1].text | properties.items[1].price | properties.items[2].text | properties.items[2].price |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| named_user_id | customer-1 | purchase 1 | interaction 1 | url | Brand 1 | 10.5 | unique id 1 | 2025-01-09 00:00:00.000 | text 1 purchase 1 | 4.0 | text 2 purchase 1 | 2.6 | text 3 purchase 1 | 3.5 |

| named_user_id | customer-2 | purchase 2 | interaction 2 | url | Brand 2 | 34.0 | unique id 2 | 2025-01-09 00:00:00.000 | text 1 purchase 2 | 6.7 | text 2 purchase 2 | 4.9 | NULL | NULL |

You can also send segment data to the target platform by creating an activation in the Audience Studio.

- Navigate to Audience Studio.

- Select a parent segment.

- Open the target segment, right-mouse click, and then select Create Activation.

- In the Details panel, enter an Activation name and configure the activation according to the previous section on Configuration Parameters.

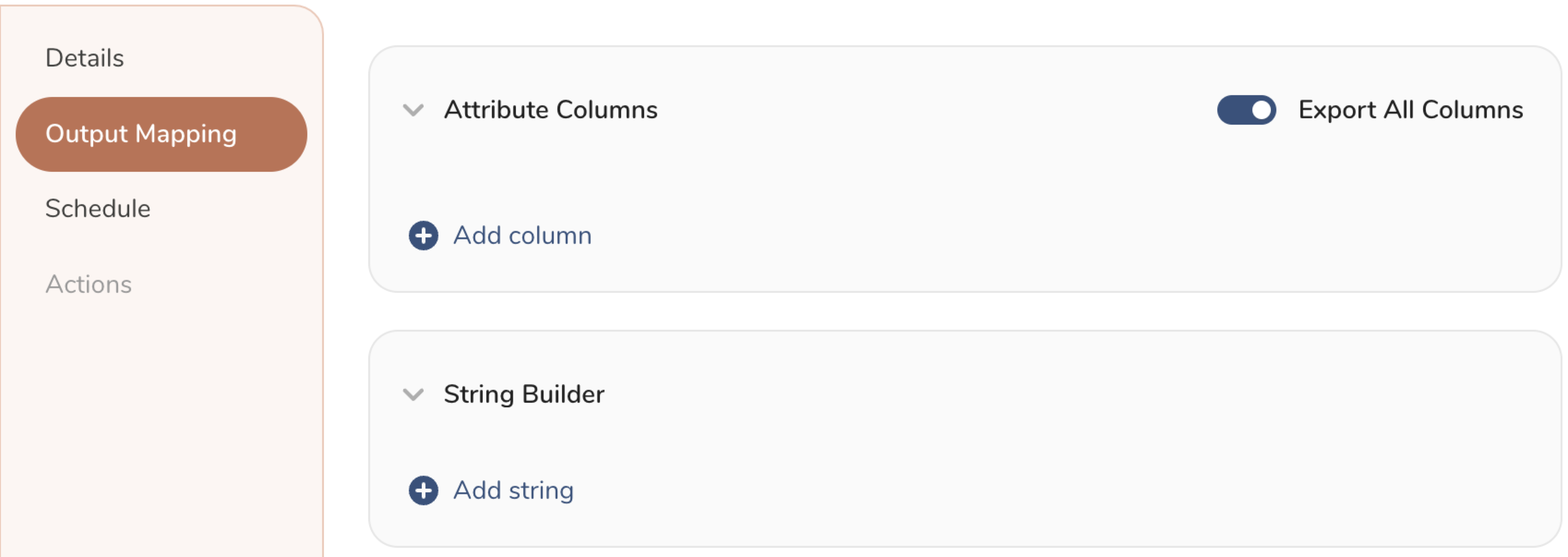

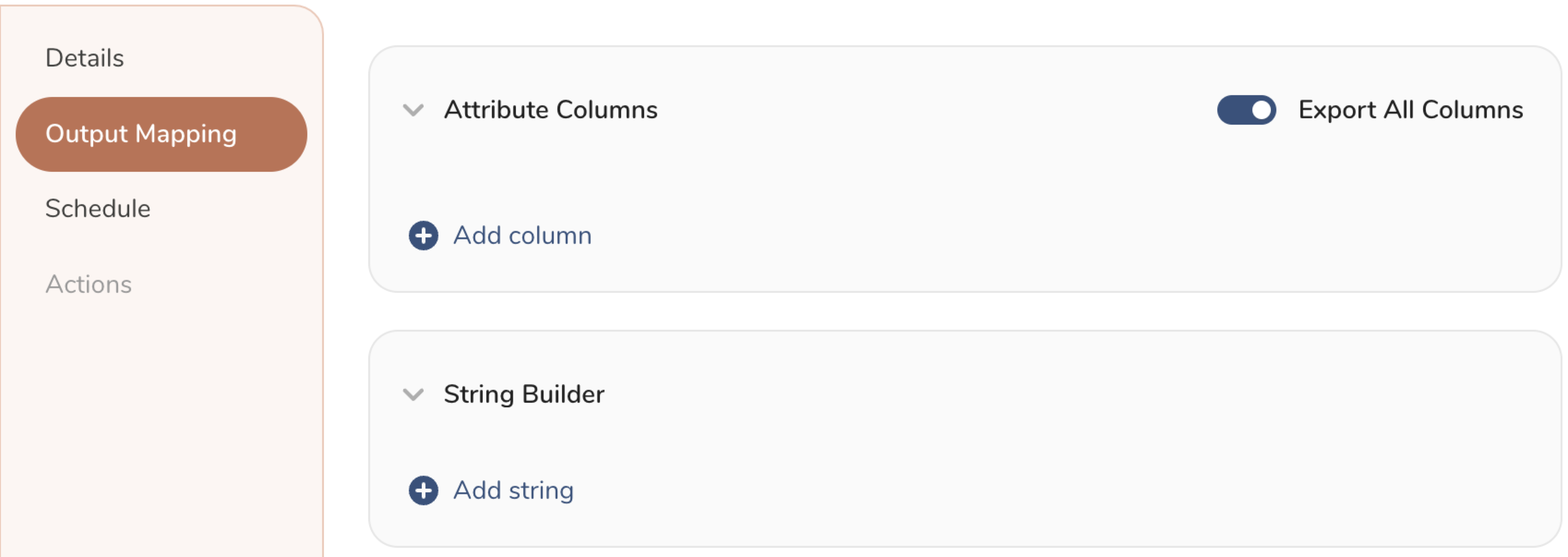

- Customize the activation output in the Output Mapping panel.

- Attribute Columns

- Select Export All Columns to export all columns without making any changes.

- Select + Add Columns to add specific columns for the export. The Output Column Name pre-populates with the same Source column name. You can update the Output Column Name. Continue to select + Add Columnsto add new columns for your activation output.

- String Builder

- + Add string to create strings for export. Select from the following values:

- String: Choose any value; use text to create a custom value.

- Timestamp: The date and time of the export.

- Segment Id: The segment ID number.

- Segment Name: The segment name.

- Audience Id: The parent segment number.

- + Add string to create strings for export. Select from the following values:

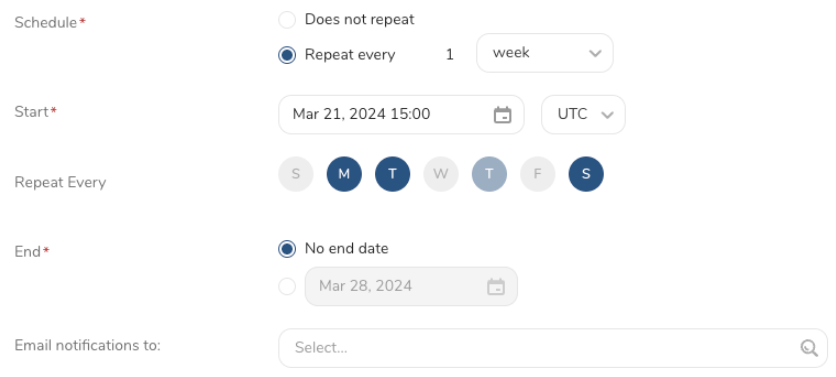

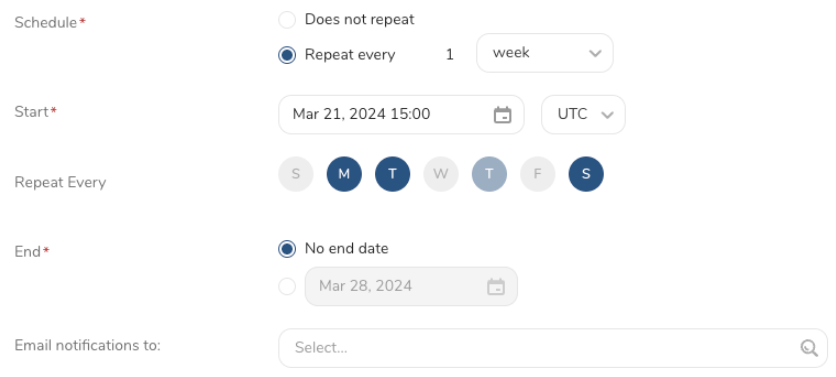

- Set a Schedule.

- Select the values to define your schedule and optionally include email notifications.

- Select Create.

If you need to create an activation for a batch journey, review Creating a Batch Journey Activation.

You can use Scheduled Jobs with Result Export to periodically write the output result to a specific target destination.

Treasure Data's scheduler feature supports periodic query execution to achieve high availability.

When two specifications provide conflicting schedule specifications, the specification requesting to execute more often is followed while the other schedule specification is ignored.

For example, if the cron schedule is ‘0 0 1 * 1’, then the ‘day of month’ specification and ‘day of week’ are discordant because the former specification requires it to run every first day of each month at midnight (00:00), while the latter specification requires it to run every Monday at midnight (00:00). The latter specification is followed.

You can use Scheduled Jobs with Result Export to periodically write the output result to a target destination that you specify.

Treasure Data's scheduler feature supports periodic query execution to achieve high availability.

When two specifications provide conflicting schedule specifications, the specification requesting to execute more often is followed while the other schedule specification is ignored.

For example, if the cron schedule is '0 0 1 * 1', then the 'day of month' specification and 'day of week' are discordant because the former specification requires it to run every first day of each month at midnight (00:00), while the latter specification requires it to run every Monday at midnight (00:00). The latter specification is followed.

Navigate to Data Workbench > Queries

Create a new query or select an existing query.

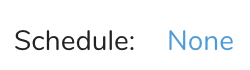

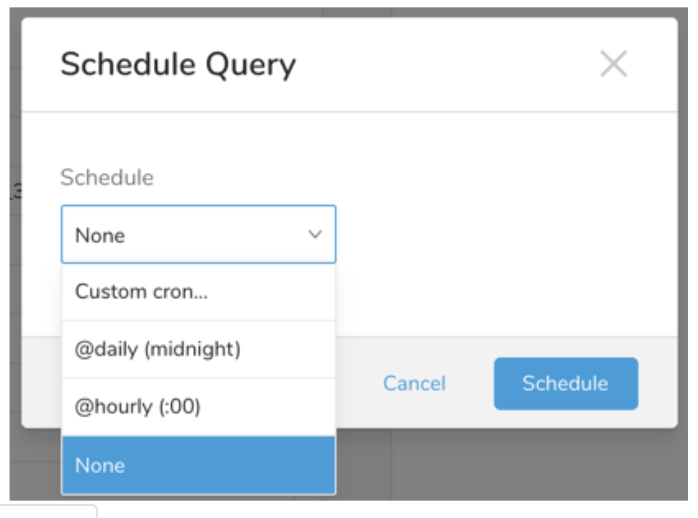

Next to Schedule, select None.

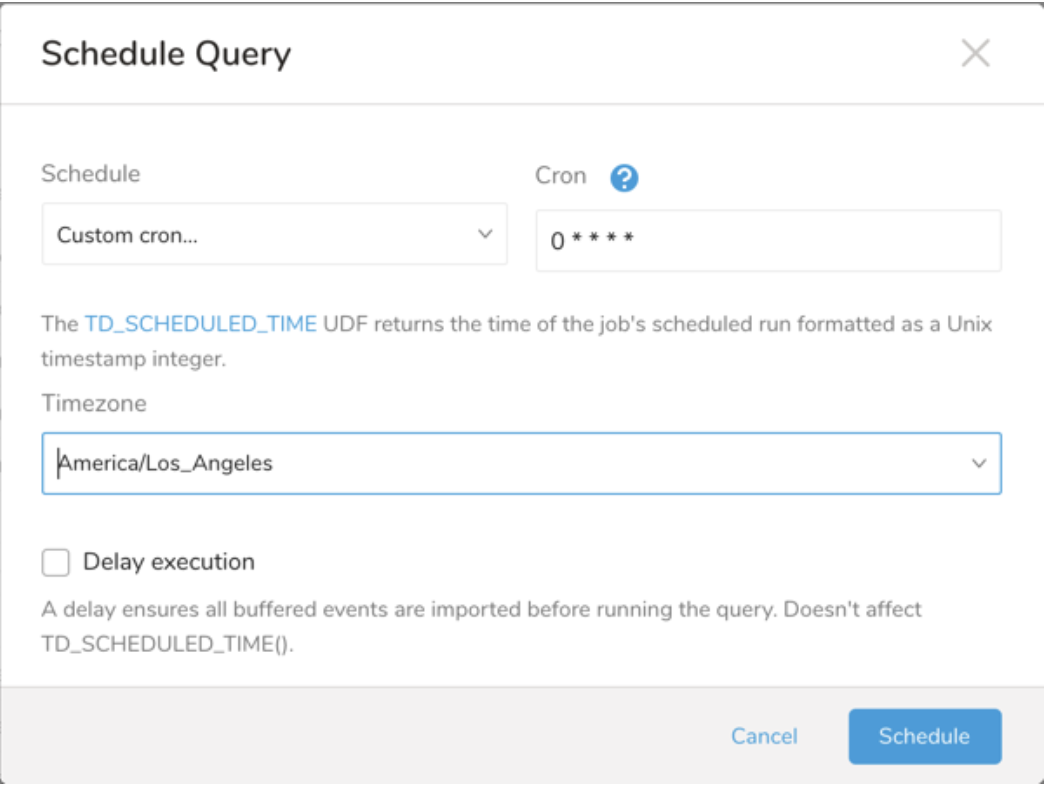

In the drop-down, select one of the following schedule options:

Drop-down Value Description Custom cron... Review Custom cron... details. @daily (midnight) Run once a day at midnight (00:00 am) in the specified time zone. @hourly (:00) Run every hour at 00 minutes. None No schedule.

| Cron Value | Description |

|---|---|

0 * * * * | Run once an hour. |

0 0 * * * | Run once a day at midnight. |

0 0 1 * * | Run once a month at midnight on the morning of the first day of the month. |

| "" | Create a job that has no scheduled run time. |

* * * * *

- - - - -

| | | | |

| | | | +----- day of week (0 - 6) (Sunday=0)

| | | +---------- month (1 - 12)

| | +--------------- day of month (1 - 31)

| +-------------------- hour (0 - 23)

+------------------------- min (0 - 59)The following named entries can be used:

- Day of Week: sun, mon, tue, wed, thu, fri, sat.

- Month: jan, feb, mar, apr, may, jun, jul, aug, sep, oct, nov, dec.

A single space is required between each field. The values for each field can be composed of:

| Field Value | Example | Example Description |

|---|---|---|

| A single value, within the limits displayed above for each field. | ||

A wildcard '*' to indicate no restriction based on the field. | '0 0 1 * *' | Configures the schedule to run at midnight (00:00) on the first day of each month. |

A range '2-5', indicating the range of accepted values for the field. | '0 0 1-10 * *' | Configures the schedule to run at midnight (00:00) on the first 10 days of each month. |

A list of comma-separated values '2,3,4,5', indicating the list of accepted values for the field. | 0 0 1,11,21 * *' | Configures the schedule to run at midnight (00:00) every 1st, 11th, and 21st day of each month. |

A periodicity indicator '*/5' to express how often based on the field's valid range of values a schedule is allowed to run. | '30 */2 1 * *' | Configures the schedule to run on the 1st of every month, every 2 hours starting at 00:30. '0 0 */5 * *' configures the schedule to run at midnight (00:00) every 5 days starting on the 5th of each month. |

A comma-separated list of any of the above except the '*' wildcard is also supported '2,*/5,8-10'. | '0 0 5,*/10,25 * *' | Configures the schedule to run at midnight (00:00) every 5th, 10th, 20th, and 25th day of each month. |

- (Optional) You can delay the start time of a query by enabling the Delay execution.

Save the query with a name and run, or just run the query. Upon successful completion of the query, the query result is automatically exported to the specified destination.

Scheduled jobs that continuously fail due to configuration errors may be disabled on the system side after several notifications.

(Optional) You can delay the start time of a query by enabling the Delay execution.

You can also send segment data to the target platform by creating an activation in the Audience Studio.

- Navigate to Audience Studio.

- Select a parent segment.

- Open the target segment, right-mouse click, and then select Create Activation.

- In the Details panel, enter an Activation name and configure the activation according to the previous section on Configuration Parameters.

- Customize the activation output in the Output Mapping panel.

- Attribute Columns

- Select Export All Columns to export all columns without making any changes.

- Select + Add Columns to add specific columns for the export. The Output Column Name pre-populates with the same Source column name. You can update the Output Column Name. Continue to select + Add Columnsto add new columns for your activation output.

- String Builder

- + Add string to create strings for export. Select from the following values:

- String: Choose any value; use text to create a custom value.

- Timestamp: The date and time of the export.

- Segment Id: The segment ID number.

- Segment Name: The segment name.

- Audience Id: The parent segment number.

- + Add string to create strings for export. Select from the following values:

- Set a Schedule.

- Select the values to define your schedule and optionally include email notifications.

- Select Create.

If you need to create an activation for a batch journey, review Creating a Batch Journey Activation.

Within Treasure Workflow, you can specify the use of a data connector to export data.

Learn more at Using Workflows to Export Data with the TD Toolbelt.

Sample Workflow Configuration for Static List

timezone: UTC

_export:

td:

database:

sample_datasets

+td-result-into-target:

td>: queries/sample.sql

result_connection: airship result_settings:

list_name: 'td uploaded list' list_description: 'ios and android channels'Sample Workflow Configuration for Attributes Update

timezone: UTC

_export:

td:

database: sample_datasets

+td-result-into-target:

td>: queries/sample.sql

result_connection: airship

result_settings:

data_type: non_json_attributes

non_json_target: named_users

non_json_action: batch_csv

skip_invalid_nonjson_att: truetimezone: UTC

_export:

td:

database: sample_datasets

+td-result-into-target:

td>: queries/sample.sql

result_connection: airship

result_settings:

data_type: custom_events

json_composer_custom_events: true

skip_invalid_custom_events: trueRefer to Airship Export Integration CLI.

Airship API documents: